23 Nov 2025

Planet Grep

Lionel Dricot: Our virtual bar counters

The facade of a big Parisian café. Zoom in on the somewhat run-down sign, decorated with a white thumb on a background of peeling paint: "Le Facebook".

Packed interior. Average age: 55 to 60. The walls are covered in advertising. The customers are clearly all regulars and alternate between glasses of red wine and beers.

- Ever since we can't smoke anymore, it's just not the same.

- It's all the fault of them sissgender commonists!

- The what?

- The sissgenders. It's a word they use to legalize pedophilia.

- I thought they said transses?

- Same difference. Well, I think so. Some fairy thing.

- Anyway, you can't even roll yourself a smoke in peace anymore!

A voice rings out from a neighboring table:

- My grandson came first in his high school's poetry contest.

The whole room shouts "Bravo!" and applauds for 3 seconds before going back to their conversations as if nothing had happened.

Fade out

A cafeteria with white walls covered in motivational posters whose images are very obviously AI-generated. The customers all wear suits and ties, or slightly cheap skirt suits that pass muster from a distance. They all drink coffee from plastic cups, stirring it gently with a wooden stir stick. A small pot holds used stir sticks under a sign reading "Save the planet, recycle your stir sticks!"

Close-up on Armand: clean-shaven face, glasses, high cheekbones. He looks stressed but tries to project authority with his nervous smile.

- Since I started coming to "Le Linkedin", my customer conversion rate has gone up 3% and I've been officially named Marketing Story Customers Deputy Manager. It's a great success that I owe to my network.

The camera pulls back. We see that, like all the other customers, he is alone at his table, talking to a robot that nods mechanically.

Fade out

The trendy spot with flashing colored lights and music so loud that you can't order except by yelling. Ultra-designer neon lights spell out the bar's name: "Instagram"

The cocktails cost a month's salary and are made with canned fruit juice. Every now and then, a customer has an epileptic seizure, but everyone finds that normal. And the walls are covered in giant posters of gorgeous landscapes.

His three-day stubble carefully groomed, Youri-Maxime points out a poster to his girlfriend.

- That photo is gorgeous, we absolutely have to go there!

Estelle isn't 30 yet, but her face is puffy from cosmetic surgery. Without looking at her partner, she replies:

- Great idea, we'll take a photo there. I'll wear my yellow MachinBazar(TM) bikini and do a BrolTruc(TM) makeup look.

- Awesome, the guy replies without taking his eyes off his smartphone. I can't wait to share the photo!

Fade out

An old warehouse converted into a luxury loft. Bare bricks, exposed pipes. But that's intentional. Still, you can tell the place isn't really maintained anymore. There's dust. Trash is piling up in a corner. The toilets are backing up. The whole bar stinks of shit.

On the wall, a big blue sign is crossed out with a big black X. Below it, half-erased, you can read: "Twitter".

In threadbare sweaters and corduroy trousers, a group of regulars sits at a table. Each of them is typing frantically on the folding keyboard of their tablet.

A gang of thugs approaches. They have tattoos of swastikas, eagles and vaguely Nordic symbols. They call out to the regulars.

- Hey, guys! What are you doing?

- We're journalists, we write articles. We've been coming here to work for 15 years.

- And what do you write about?

- About democracy, trans rights…

A Nazi slams a baseball bat down on the table, shattering the journalists' glasses.

- Uh, another journalist immediately chimes in, we mostly write about the great replacement, about the dangers of wokeism.

The Nazi sniffs.

- You guys are cool, keep it up!

Fade out

Exactly the same warehouse, except this time everything is clean. The brand-new sign reads "Bluesky". When you get close to the walls, you realize they're actually made of cardboard. It's a film set!

There are no regulars; the bar has just opened.

- Welcome, the owner calls out to the crowd coming in. I know you don't want to stay next door, because it's become filthy and full of Nazis. No risk of that here. Everything is the same, but decentralized.

The crowd sighs with satisfaction. One customer frowns.

- How is it decentralized? It's the same as…

He doesn't get to finish his sentence. The owner snaps his fingers and two bouncers appear out of nowhere and push him outside.

- It's decentralized, the owner continues, and I'm the one calling the shots.

- Neat, murmurs a customer. We'll get to feel like we're doing something new without changing anything.

- Plus, we can trust him, replies another. He's the owner of the old bar. He sold it to a Nazi and used part of the money to open this one.

- So that's clearly a guarantee of trustworthiness!

Fade out

An old barn with straw on the floor. There are chickens; you can hear a sheep bleating.

A guy in a threadbare checked shirt pumps an old thermos to draw out some muddy brew, which he hands to his customers.

- It's organic and fair trade, he says. From Honduras. Or Nicaragua? I need to check…

- Thanks, replies a tall woman whose hair is mauve on one side of her head and shaved on the other.

She has a huge nose piercing, a skirt made of veiling, torn fishnet stockings and knee-high socks in the colors of the trans flag. She goes to sit at an old trestle table where a bearded guy in a "FOSDEM 2004" T-shirt types feverishly on the keyboard of an Atari computer that takes up half the table. Cables stick out everywhere.

In comes an old lady with sparkling eyes. She leans on a cane with one hand and pulls a wheeled shopping trolley with the other.

- Hello everyone! How are you all doing today?

Everyone answers different things at the same time, and a chicken panics and flaps onto the table, clucking. The old lady opens her trolley, spilling a pile of Pléiade editions, a Guillaume Musso novel and a bunch of leeks.

- Look what I made for us! A sign to put out by the gate.

She unfolds a crocheted piece several meters long. Spelled out in yarn letters of more than questionable colors, you can vaguely make out "Mastodon". If you tilt your head and squint.

- Bravo! It's magnificent! cries a customer.

- It should have said "Fediverse", says another.

- Doesn't it make the place a bit too commercial? We wouldn't want to end up like the neon bar across the street.

- Yeah, sure, that's the risk. We'd need the customers from across the street to come here, but without it being commercial.

- It's organic wool, the old lady continues.

In the cowshed, a cow moos.

I'm Ploum and I've just published Bikepunk, an eco-cyclist fable typed entirely on a mechanical typewriter. To support me, buy my books (from your local bookshop if possible)!

Get my writing in French and English delivered straight to your inbox. Your address will never be shared. You can also use my French-language RSS feed or the full RSS feed.

23 Nov 2025 2:40am GMT

Frederic Descamps: Deploying on OCI with the starter kit – part 3 (applications)

We saw in part 1 how to deploy our starter kit in OCI, and in part 2 how to connect to the compute instance. We will now check which development languages are available on the compute instance acting as the application server. After that, we will see how easy it is to install a new […]

23 Nov 2025 2:40am GMT

Dries Buytaert: DrupalCon Nara keynote Q&A

DrupalCon Nara just wrapped up, and it left me feeling energized.

During the opening ceremony, Nara City Mayor Gen Nakagawa shared his ambition to make Nara the most Drupal-friendly city in the world. I've attended many conferences over the years, but I've never seen a mayor talk about open source as part of his city's long-term strategy. It was surprising, encouraging, and even a bit surreal.

Because Nara came only five weeks after DrupalCon Vienna, I didn't prepare a traditional keynote. Instead, Pam Barone, CTO of Technocrat and a member of the Drupal CMS leadership team, led a Q&A.

I like the Q&A format because it makes space for more natural questions and more candid answers than a prepared keynote allows.

We covered a lot: the momentum behind Drupal CMS, the upcoming Drupal Canvas launch, our work on a site template marketplace, how AI is reshaping digital agencies, why governments are leaning into open source for digital sovereignty, and more.

If you want more background, my DrupalCon Vienna keynote offers helpful context and includes a video recording with product demos.

The event also featured excellent sessions with deep dives into these topics. All session recordings are available on the DrupalCon Nara YouTube playlist.

Having much of the Drupal CMS leadership team together in Japan also turned the week into a working session. We met daily to align on our priorities for the next six months.

On top of that, I spent most of my time in back-to-back meetings with Drupal agencies and end-users. Hearing about their ambitions and where they need help gave me a clearer sense of where Drupal should go next.

Thank you to the organizers and to everyone who took the time to meet. The commitment and care of the community in Japan really stood out.

23 Nov 2025 2:40am GMT

LXer Linux News

KDE Plasma: Set Transparency for Specific Apps | Easy Guide

A brief guide on configuring transparency for selected applications in KDE Plasma using Window Rules, without affecting other windows.

23 Nov 2025 2:11am GMT

Self-Hosters Confirm It Again: Linux Dominates the Homelab OS Space

According to the 2025 Self-Host survey from selfh.st, Linux dominates self-hosting setups and homelab operating systems.

23 Nov 2025 12:06am GMT

22 Nov 2025

LXer Linux News

Linux 6.18 To Enable Both Touchscreens On The AYANEO Flip DS Dual-Screen Handheld

Sent out today were a set of input subsystem fixes for the near-final Linux 6.18 kernel. A bit of a notable addition via this "fixes" pull is getting both touchscreens working on the AYANEO Flip DS, a dual-screen gaming handheld device that can be loaded up with Linux...

22 Nov 2025 10:34pm GMT

Fedora People

Kevin Fenzi: infra weekly recap: Late November 2025

Another busy week in fedora infrastructure. Here's my attempt at a recap of the more interesting items.

Inscrutable vHMC

We have a vHMC VM. This is a virtual Hardware Management Console for our Power10 servers. You need one of these to do anything reasonably complex on the servers. I had initially set it up on one of our virthosts as a plain QEMU raw image, since that's the way the appliance is shipped. But that was filling up the root filesystem on that server, so I moved it to a logical volume like all our other VMs. However, after I did that, it started getting high packet loss talking to the servers. Nothing at all should have changed network-wise, and indeed, it was the only thing seeing this problem: the virthost and all the other VMs on it were fine. I rebooted it a bunch and tried changing things, with no luck.

Then we had our mass update/reboot outage on Thursday. After rebooting that virthost, everything was back to normal with the vHMC. Very strange. I hate problems that just go away where you don't know what actually caused them, but at least for now the vHMC is back to normal.

Mass update/reboot cycle

We did a mass update/reboot cycle this last week. We wanted to:

- Update all the RHEL9 instances to 9.7, which just came out
- Update all the RHEL10 instances to 10.1, which just came out
- Update all the Fedora builders from f42 to f43
- Update all our proxies from f42 to f43
- Update a few other Fedora instances from f42 to f43

This overall went pretty smoothly and everything should be updated and working now. Please do file an issue if you see anything amiss (as always).

AI Scrapers / DDoSers

The new Anubis is, I think, working quite well to keep the AI scrapers at bay now. It is causing problems for some clients, however: it's more likely to decide that a client with no User-Agent or Accept header is a bot. So, if you are running a client that hits our infra and are seeing Anubis challenges, you should adjust your client to send a User-Agent and Accept header and see if that gets you working again.
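As a minimal sketch of that advice, assuming a Python client built on the standard library's urllib.request, the request can set both headers explicitly. The User-Agent string, the Accept value and the example URL are hypothetical placeholders, and whether a given request passes Anubis still depends on how the server's policy is configured.

```python
import urllib.request

# Hypothetical identifying User-Agent: replace with your client's real name and a contact.
USER_AGENT = "example-mirror-client/1.0 (+mailto:admin@example.org)"

# Hypothetical Accept value: list the media types your client actually consumes.
ACCEPT = "application/json, text/plain;q=0.9, */*;q=0.8"


def build_request(url: str) -> urllib.request.Request:
    """Build a request that explicitly sets the User-Agent and Accept headers."""
    return urllib.request.Request(
        url,
        headers={"User-Agent": USER_AGENT, "Accept": ACCEPT},
    )


def fetch(url: str, timeout: float = 30.0) -> bytes:
    """Perform a real network request using the explicit headers."""
    with urllib.request.urlopen(build_request(url), timeout=timeout) as resp:
        return resp.read()
```

Note that urllib normalizes header names, so the headers are read back as `req.get_header("User-agent")` and `req.get_header("Accept")`.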

The last thing we are seeing that's still annoying is something I thought was AI scraping, but now I am not sure what its motivation is. Here's what I am seeing:

- LOTS of requests from a large number of IPs
- fetching the same files
- all under forks/$someuser/$popularpackage/ (so forks/kevin/kernel or the like)
- passing Anubis challenges

My guess is that this may be some browser add-on/botnet where they don't care about the challenge, but why fetch the same commit 400 times? Why hit the same forked project for millions of hits over 8 or so hours?

If this is a scraper, it's a very unfit one, gathering the same content over and over and never moving on. Perhaps it's just broken and looping?

In any case, the current fix seems to be just blocking requests to those forks, but of course that means the user whose fork it is cannot access them. ;( Will try to come up with a better solution.
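To confirm the pattern described above (many addresses repeatedly fetching the same fork paths), one option is to tally requests and distinct client IPs per path. This is a minimal sketch under loud assumptions: the log format ("IP PATH" per line), the /forks/ prefix with a leading slash, and both thresholds are hypothetical, and real web server logs would need a proper parser.

```python
from collections import defaultdict


def summarize_fork_hits(lines, prefix="/forks/"):
    """Map each path under `prefix` to (request_count, distinct_ip_count).

    Hypothetical log format: one request per line, 'IP PATH' separated by whitespace.
    """
    counts = defaultdict(int)
    ips = defaultdict(set)
    for line in lines:
        parts = line.split()
        if len(parts) < 2:
            continue  # skip empty or malformed lines
        ip, path = parts[0], parts[1]
        if path.startswith(prefix):
            counts[path] += 1
            ips[path].add(ip)
    return {path: (counts[path], len(ips[path])) for path in counts}


def suspicious_paths(summary, min_requests=400, min_ips=50):
    """Return paths fetched many times from many addresses (thresholds are arbitrary)."""
    return sorted(
        path
        for path, (n_requests, n_ips) in summary.items()
        if n_requests >= min_requests and n_ips >= min_ips
    )
```

Requiring both a high request count and many distinct IPs separates this botnet-like pattern from a single heavy but legitimate user.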

RDU2-CC to RDU3 move

This datacenter move is still planned to happen. :) I was waiting for a new machine to migrate things to, but it's stuck in process, so for now I've just repurposed an older server that we still had around. I've set up a new stg.pagure.io on it and copied all the staging data to it. It seems to be working as expected, but I haven't moved it in DNS yet.

I then set up a new pagure.io there and am copying data to it now.

The current plan, if all goes well, is to have an outage and move pagure.io over on December 3rd.

Then, on December 8th, the rest of our RDU2-CC hardware will be powered off and moved. The rest of the items we have there shouldn't be very impactful to users and contributors. download-cc-rdu01 will be down, but we have a bunch of other download servers. Some proxies will be down, but we have a bunch of other proxy servers. After stuff comes back up on the 8th or 9th, we will bring things back online.

US Thanksgiving

Next week is the US Thanksgiving holiday (on Thursday). We get Thursday and Friday as holidays at Red Hat, and I am taking the rest of the week off too. So, I might be around some in community spaces, but will not be attending any meetings or doing things I don't want to.

comments? additions? reactions?

As always, comment on mastodon: https://fosstodon.org/@nirik/115595437083693195

22 Nov 2025 8:48pm GMT

Planet Debian

Dirk Eddelbuettel: RcppArmadillo 15.2.2-1 on CRAN: Upstream Update, OpenMP Updates

Armadillo is a powerful and expressive C++ template library for linear algebra and scientific computing. It aims towards a good balance between speed and ease of use, has a syntax deliberately close to Matlab, and is useful for algorithm development directly in C++, or quick conversion of research code into production environments. RcppArmadillo integrates this library with the R environment and language, and is widely used by (currently) 1286 other packages on CRAN, downloaded 42.6 million times (per the partial logs from the cloud mirrors of CRAN), and the CSDA paper (preprint / vignette) by Conrad and myself has been cited 659 times according to Google Scholar.

This version updates to the 15.2.2 upstream Armadillo release made two days ago. It brings a few changes over the RcppArmadillo 15.2.0 release made only to GitHub (and described in this post), and of course even more changes relative to the last CRAN release described in this earlier post. As described previously, and due to both the upstream transition to C++14 coupled with the CRAN move away from C++11, the package offers a transition by allowing packages to remain with the older, pre-15.0.0 'legacy' Armadillo yet offering the current version as the default. During the transition we did not make any releases to CRAN, allowing both the upload cadence to settle back to the desired 'about six in six months' that the CRAN Policy asks for, and for packages to adjust to any potential changes. Most affected packages have done so (as can be seen in the GitHub issues #489 and #491), which is good to see. We appreciate all the work done by the respective package maintainers. A number of packages are still under a (now formally expired) deadline at CRAN and may get removed. Our offer to help where we can still stands, so please get in touch if we can be of assistance. As a reminder, the meta-issue #475 regroups all the resources for the transition.

With respect to changes in the package, we once more overhauled the OpenMP detection and setup, following the approach taken by package data.table but sticking with an autoconf-based configure. The detailed changes since the last CRAN release follow.

Changes in RcppArmadillo version 15.2.2-1 (2025-11-21)

- Upgraded to Armadillo release 15.2.2 (Medium Roast Deluxe)
  - Improved reproducibility of random number generation when using OpenMP
- Skip a unit test file under macOS as complex algebra seems to fail under newer macOS LAPACK setting
- Further OpenMP detection rework for macOS (Dirk in #497, #499)
- Define ARMA_CRIPPLED_LAPACK on Windows only if 'LEGACY' Armadillo selected

Changes in RcppArmadillo version 15.2.1-0 (2025-10-28) (GitHub Only)

- Upgraded to Armadillo release 15.2.1 (Medium Roast Deluxe)
  - Faster handling of submatrices with one row

Changes in RcppArmadillo version 15.2.0-0 (2025-10-20) (GitHub Only)

- Upgraded to Armadillo release 15.2.0 (Medium Roast Deluxe)
  - Added rande() for generating matrices with elements from exponential distributions
  - shift() has been deprecated in favour of circshift(), for consistency with Matlab/Octave
  - Reworked detection of aliasing, leading to more efficient compiled code
- OpenMP detection in configure has been simplified

Courtesy of my CRANberries, there is a diffstat report relative to previous release. More detailed information is on the RcppArmadillo page. Questions, comments etc should go to the rcpp-devel mailing list off the Rcpp R-Forge page.

This post by Dirk Eddelbuettel originated on his Thinking inside the box blog. If you like this or other open-source work I do, you can sponsor me at GitHub.

22 Nov 2025 3:44pm GMT

Linuxiac

KDE Plasma 6.6 Will Introduce Per-Window Screen-Recording Exclusions

KDE Plasma 6.6 desktop environment will introduce per-window screen-recording exclusions, richer blur effects for dark themes, and more.

22 Nov 2025 3:28pm GMT

Bottles 60.0 Launches with Native Wayland Support

Bottles 60.0, a Wine prefix manager for running Windows apps on Linux, adds native Wayland support, a refreshed UI, and more.

22 Nov 2025 1:20pm GMT

Self-Hosters Confirm It Again: Linux Dominates the Homelab OS Space

According to the 2025 Self-Host survey from selfh.st, Linux dominates self-hosting setups and homelab operating systems.

22 Nov 2025 12:04pm GMT

Planet KDE | English

This Week in Plasma: UI and performance improvements

Welcome to a new issue of This Week in Plasma!

This week there were many user interface and performance improvements - some quite consequential. So let's get right into it!

Notable New Features

Plasma 6.6.0

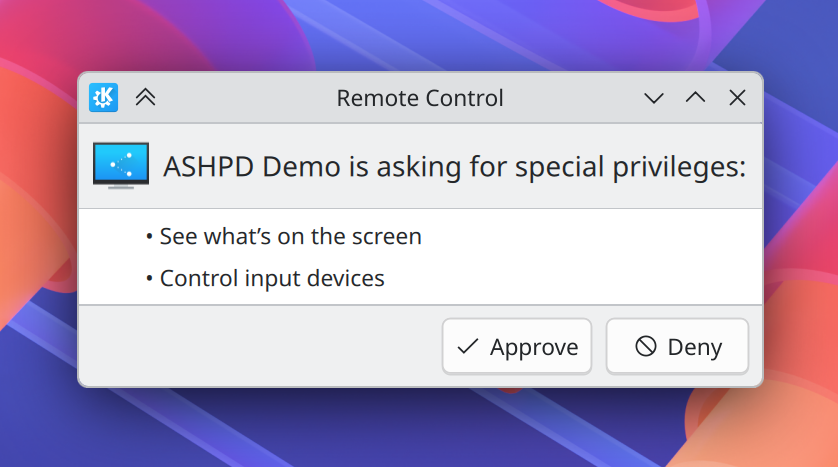

Windows can now be selectively excluded from screen recording! This can be invoked from the titlebar context menu, Task Manager context menu, and window rules. (Stanislav Aleksandrov, link)

Notable UI Improvements

Plasma 6.6.0

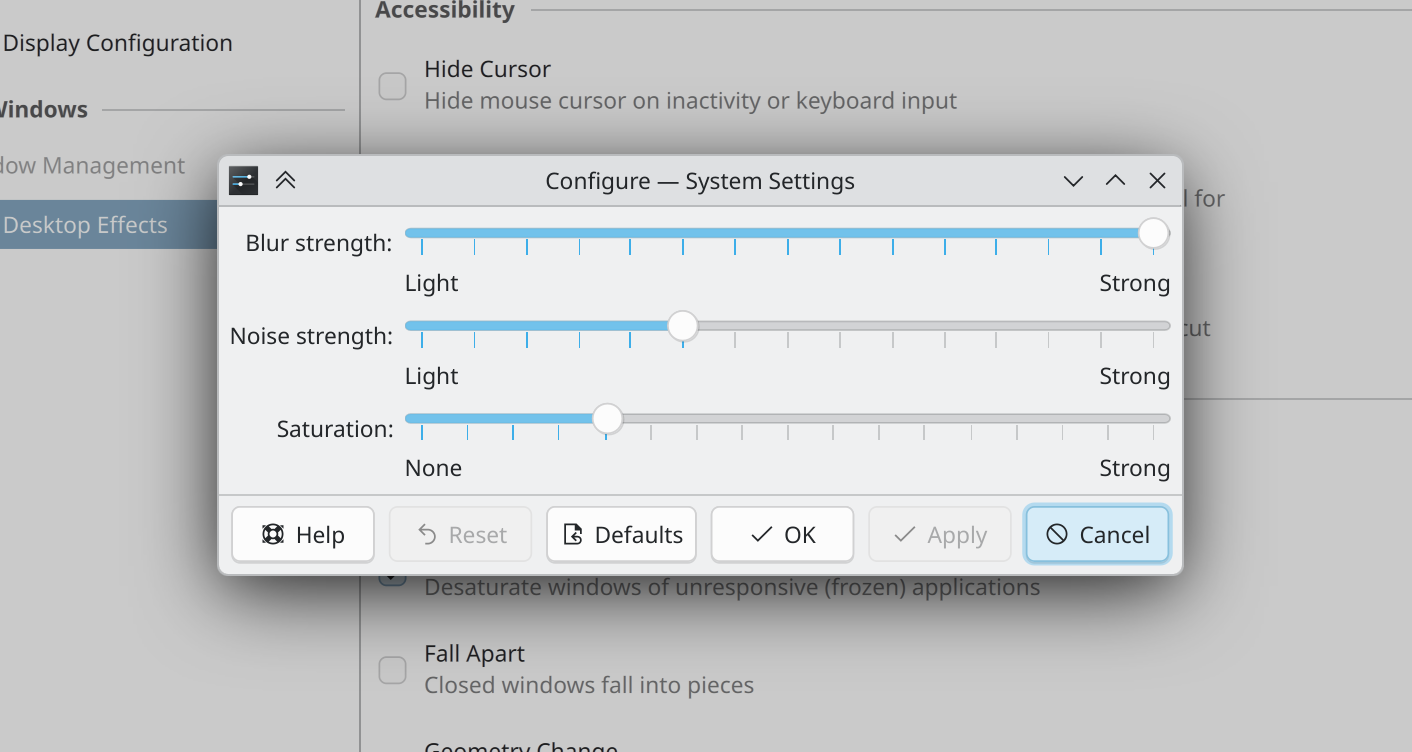

With a dark color scheme, the blur effect now produces a blur that's darker (ideally back to the level seen in Plasma 6.4) and also more vibrant in cases where there are bright colors behind it. People seemed to like this! But for those who don't, the saturation value of the blur effect is now user-configurable, so you can dial it in to your preferred level. (Vlad Zahorodnii, link 1, link 2, and link 3)

When clicking on grouped Task Manager icons to cycle through their windows, full-screen windows will no longer always be raised first. Now, windows will be raised in the order of their last use. (Grégori Mignerot, link)

Did a round of UI polishing on the portal remote control dialog to make it look better and read more naturally. (Nate Graham and Joshua Goins, link 1, link 2, link 3 and link 4)

When you open the Kickoff Application Launcher and your pointer happens to end up right on top of one of the items in the Favorites view, that item will no longer be selected automatically. (Christoph Wolk, link)

The Kickoff Application Launcher widget now tries very hard to keep the first item of the search results view selected - at least until the point where you focus the list and start navigating to another item. (Christoph Wolk, link)

Discover now uses more user-friendly language when it's being used to find apps that can open a certain file type. (Taras Oleksy, link)

You're now far less likely to accidentally raise an unintended app when a notification happens to appear right underneath something you're dragging-and-dropping. (Kai Uwe Broulik, link)

KMenuEdit now lets you select multiple items at a time for faster deletion. (Alexander Wilms, link)

The QR code dialog invokable from the clipboard has been removed, and instead the QR code is shown inline in the widget. This makes it large enough to actually use and also reduces unnecessary code. (Fushan Wen, link)

Notable Bug Fixes

Plasma 6.5.3

Fixed a rare case where KWin could crash when the system wakes from sleep. (Xaver Hugl, link)

Worked around a QML compiler bug in Qt that made the power and session buttons in the Application Launcher widget overlap with the tab bar if you resized its popup. (Christoph Wolk, link)

Plasma 6.5.4

Fixed a regression in menu sizing that got accidentally backported to Plasma 6.5.3. All should be well in 6.5.4, and some distros have backported the fix already. (Akseli Lahtinen and Nate Graham, link)

Fixed a Plasma 6 regression that broke the ability to activate the System Tray's expanded items popup with a keyboard shortcut. (Cursor AI, operated by Mikhail Sidorenko, link)

Fixed a regression caused by a Qt change that prevented the clipboard's Actions menu from appearing when the configuration dialog wasn't open. (Fushan Wen, link)

Fixed a bug that could make the Plasma panel's custom size chooser appear on the wrong screen. (Vlad Zahorodnii, link)

Fixed a bug that could make the clipboard contents get sent many times when it's being set programmatically in a portal-using app. (David Redondo, link)

Fixed a memory leak in Plasma's desktop. (Vlad Zahorodnii, link)

Fixed a memory leak in the clipboard Actions menu. (Fushan Wen, link)

KWin's zoom effect now saves its current zoom level a little bit after you change it, rather than at logout. This prevents a situation where the system is inappropriately zoomed in (or not zoomed in) after a KWin crash or power loss. (Ritchie Frodomar, link)
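Saving "a little bit after you change it" is a debounce: each change pushes a save deadline back, and the value is written once changes stop. The sketch below illustrates that idea only; it is not KWin's code (which is C++). The class name, the one-second delay and the explicit `now` timestamps are hypothetical, chosen so the logic is deterministic and can be driven by any event loop.

```python
class DebouncedSaver:
    """Persist a value once it has stopped changing for `delay` seconds."""

    def __init__(self, save, delay=1.0):
        self._save = save        # callback that writes the value to disk
        self._delay = delay      # hypothetical quiet period, in seconds
        self._pending = None     # latest value not yet saved
        self._deadline = None    # time at which the pending value should be saved

    def changed(self, value, now):
        # Each change pushes the deadline back, so rapid zooming saves only once.
        self._pending = value
        self._deadline = now + self._delay

    def tick(self, now):
        # Called periodically; saves once the quiet period has elapsed.
        if self._deadline is not None and now >= self._deadline:
            self._save(self._pending)
            self._pending = None
            self._deadline = None
```

Compared with saving only at logout, a short delay bounds how much state a crash or power loss can lose, while still avoiding a disk write for every intermediate zoom step.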

Fixed a bug that made the optional Textual List representation of multiple windows in the Task Manager widget fail to get focus when using medium focus stealing prevention. (David Redondo, link)

Plasma 6.6.0

Worked around a bug in some XWayland-using games that made it impossible to type text into certain popups. (Xaver Hugl, link)

Clearing KRunner's search history now takes effect immediately, rather than only after KRunner was restarted. (Nate Graham, link)

With a very narrow display and a high scale factor, the buttons on the login, lock, and logout screens can no longer get cut off; now they wrap onto the next line. (Nate Graham, link)

Frameworks 6.21

Fixed a bug that could confuse KWallet - when being used as a Secret Service proxy for KeePassXC - into becoming convinced that it needed to create a new wallet. (Marco Martin, link)

Fixed two memory leaks affecting QML-based System Settings pages. (Vlad Zahorodnii, link 1 and link 2)

Other bug information of note:

- 4 very high priority Plasma bugs (Same as last week). Current list of bugs

- 34 15-minute Plasma bugs (Up from 31 last week). Current list of bugs

Notable in Performance & Technical

Plasma 6.5.3

Apps that use the Keyboard Shortcuts Portal to set shortcuts can now remove them in the same way. (David Redondo, link)

You can now use Spectacle's Active Window mode to take a screenshot of WINE windows. (Xaver Hugl, link)

Plasma 6.6.0

Made a major improvement to the smoothness of animations throughout Plasma and KWin for people using screens with a refresh rate higher than 60 Hz! (David Edmundson, link)

Reduced the amount of unnecessary work KWin does during its compositing pipeline. (Xaver Hugl, link)

When you delete a whole category's worth of shortcuts on System Settings' Shortcuts page, all the shortcuts get grayed out and cease to be interactive, and a warning message tells you they'll soon be deleted and gives you a chance to undo that before it happens. (Nate Graham, link)

Frameworks 6.21

KConfig now parses config files in a stream rather than opening them all at once, which allows it to notice early when a file is corrupted or improperly formatted. This prevents freezes in several places. (Méven Car, link 1, link 2, and link 3)
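The benefit of stream parsing is that a bad line is detected as soon as it is reached, without loading the whole file first. Here is a minimal sketch of that idea for a simplified INI-style format. The format rules ([group] headers, key=value pairs, # comments) and the "<default>" group for keys that appear before any header are assumptions; KConfig's real syntax and C++ implementation are richer.

```python
class ConfigError(ValueError):
    """Raised at the first line that does not fit the simplified format."""


def parse_config(lines):
    """Parse an iterable of text lines (e.g. an open text file) one line at a time."""
    groups = {}
    current = None
    for lineno, raw in enumerate(lines, start=1):
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # blank lines and comments
        if line.startswith("[") and line.endswith("]"):
            current = groups.setdefault(line[1:-1], {})
        elif "=" in line:
            if current is None:
                # Hypothetical: keys before any [group] go to a default group.
                current = groups.setdefault("<default>", {})
            key, _, value = line.partition("=")
            current[key.strip()] = value.strip()
        else:
            # Stop immediately: the rest of the input is never read.
            raise ConfigError(f"line {lineno}: cannot parse {line!r}")
    return groups
```

Because the function only iterates over its input, passing an open file (`with open(path, encoding="utf-8") as f: parse_config(f)`) reads it lazily, and a corrupted line stops the parse at that point.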

When using the Systemd integration functionality (which is on by default if Systemd is present), programs will no longer fail to launch while there are any environment variables beginning with a digit, as this is something Systemd doesn't support. (Christoph Cullmann, link)
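One plausible way to avoid such launch failures is to leave the offending variables out of the environment passed to systemd. This is a minimal sketch of that idea, not necessarily how the actual fix works. It enforces only the rule the post states (names must not begin with a digit); systemd may reject other names too, and the function name is hypothetical.

```python
import os


def systemd_safe_environment(env=None):
    """Split an environment into variables to pass on and names to skip.

    Assumption: only the rule from the post is enforced (no leading digit).
    """
    if env is None:
        env = dict(os.environ)
    kept, skipped = {}, []
    for name, value in env.items():
        # Check against ASCII digits explicitly; str.isdigit() also accepts other scripts.
        if not name or name[0] in "0123456789":
            skipped.append(name)
        else:
            kept[name] = value
    return kept, sorted(skipped)
```

Returning the skipped names, rather than dropping them silently, lets the caller log which variables the launched program will not see.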

How You Can Help

Donate to KDE's 2025 fundraiser! It really makes a big difference. Believe it or not, we've already hit our €75k stretch goal and are €5k towards the final one. I'm just in awe of the generosity of the KDE community and userbase. Thank you all for helping KDE to grow and prosper!

If money is tight, you can help KDE by directly getting involved. Donating time is actually more impactful than donating money. Each contributor makes a huge difference in KDE - you are not a number or a cog in a machine! You don't have to be a programmer, either; many other opportunities exist.

To get a new Plasma feature or a bugfix mentioned here, feel free to push a commit to the relevant merge request on invent.kde.org.

22 Nov 2025 12:01am GMT

21 Nov 2025

OMG! Ubuntu

The Raspberry Pi 500+ Works as a Standalone Keyboard (Well, Kinda)

Can the Raspberry Pi 500+ work as a standalone Bluetooth keyboard? Yes, using the open-source btferret project - but not without limitations, as I report.

You're reading The Raspberry Pi 500+ Works as a Standalone Keyboard (Well, Kinda), a blog post from OMG! Ubuntu. Do not reproduce elsewhere without permission.

21 Nov 2025 11:12pm GMT

Fedora People

Fedora Badges: New badge: Let's have a party (Fedora 43) !

21 Nov 2025 3:38pm GMT

Kernel Planet

Brendan Gregg: Intel is listening, don't waste your shot

Intel's new CEO, Lip-Bu Tan, has made listening to customers a top priority, saying at Intel Vision earlier this year: "Please be brutally honest with us. This is what I expect of you this week, and I believe harsh feedback is most valuable."

I'd been in regular meetings with Intel for several years before I joined, and I had been giving them technical direction on various projects, including at times some brutal feedback. When I finally interviewed for a role at Intel I was told something unexpected: that I had already accomplished so much within Intel that I qualified to be an Intel Fellow candidate. I then had to pass several extra interviews to actually become a fellow (and was told I may only be the third person in Intel's history to be hired as a Fellow) but what stuck with me was that I had already accomplished so much at a company I'd never worked for.

If you are in regular meetings with a hardware vendor as a customer (or potential customer) you can accomplish a lot by providing firm and tough feedback, particularly with Intel today. This is easier said than done, however.

Now that I've seen it from the other side I realize I could have accomplished more, and you can too. I regret the meetings where I wasn't really able to have my feedback land as the staff weren't really getting it, so I eventually gave up. After the meeting I'd crack jokes with my colleagues about how the product would likely fail. (Come on, at least I tried to tell them!)

Here's what I wish I had done in any hardware vendor meeting:

- Prep before meetings: study the agenda items and look up attendees on LinkedIn and note what they do, how many staff they say they manage, etc.

- Be aware of intellectual property risks: Don't accept meetings covered by some agreement that involves doing a transfer of intellectual property rights for your feedback (I wrote a post on this); ask your legal team for help.

- Make sure feedback is documented in the meeting minutes (e.g., a shared Google doc) and that it isn't watered down. Be firm about what you know and don't know: it's just as important to assert when you haven't formed an opinion yet on some new topic.

- Stick to technical criticisms that are constructive (uncompetitive, impractical, poor quality, poor performance, difficult to use, of limited use/useless) instead of trash talk (sucks, dumb, rubbish).

- Check minutes include who was present and the date.

- Ask how many staff are on projects if they say they don't have the resources to address your feedback (they may not answer if this is considered sensitive) and share industry expectations, for example: "This should only take one engineer one month, and your LinkedIn says you have over 100 staff."

- Decline freeloading: If staff ask to be taught technical topics they should already know (likely because they just started a new role), decline, as I'm the customer and not a free training resource.

- Ask "did you Google it?" a lot: Sometimes staff join customer meetings to elevate their own status within the company, and ask questions they could have easily answered with Google or ChatGPT.

- Ask for staff/project bans: If particular staff or projects are consistently wasting your time, tell the meeting host (usually the sales rep) to take them off the agenda for at least a year, and don't join (or quit) meetings if they show up. Play bad cop, often no one else will.

- Review attendees: From time to time, consider: Am I meeting all the right people? Review the minutes. E.g., if you're meeting Intel and have been talking about a silicon change, have any actual silicon engineers joined the call?

- Avoid peer pressure: You may meet with the entire product team who are adamant that they are building something great, and you alone need to tell them it's garbage (using better words). Many times in my life I've been the only person to speak up and say uncomfortable things in meetings, yet I'm not the only person present who could.

- Ask for status updates: Be prepared that even if everyone appears grateful and appreciative of your feedback, you may realize six months later that nothing was done with it. Ask for updates and review the prior meeting minutes to see what you asked for and when.

- Speak to ELT/CEO: Once a year or so, ask to speak to someone on the executive leadership team (ELT; the leaders on the website) or the CEO. Share brutal feedback, and email them a copy of the meeting minutes showing the timeline of what you have shared and with whom. This may be the only way your feedback ever gets addressed, in particular for major changes. Ask to hear what they have been told about you and be prepared to refute details: your brutal feedback may have been watered down.

I'm now in meetings from the other side where we'd really appreciate brutal feedback, but some customers aren't comfortable doing this, even when prompted. It isn't easy to tell someone their project is doomed, or that their reasons for not doing something are BS. It isn't easy dealing with peer pressure and a room of warm and friendly staff begging you to say something, anything nice about their terrible product for fear of losing their jobs, and realizing you must be brutal to their faces, otherwise you're not helping the vendor or your own company. And it's extra effort to check meeting minutes and to push for meetings with the ELT or the CEO. Giving brutal feedback takes brutal effort.

21 Nov 2025 1:00pm GMT

Planet KDE | English

Web Review, Week 2025-47

Let's go for my web review for the week 2025-47.

In 1982, a physics joke gone wrong sparked the invention of the emoticon - Ars Technica

Tags: tech, history, culture

If you're wondering where emoticons and emojis come from, this is a nice little piece about it.

Screw it, I'm installing Linux

Tags: tech, linux, foss, gaming

Clearly something is brewing right now. We're seeing more and more people successfully switching.

https://www.theverge.com/tech/823337/switching-linux-gaming-desktop-cachyos

Lawmakers Want to Ban VPNs-And They Have No Idea What They're Doing

Tags: tech, vpn, privacy, law

This is totally misguided… Let's hope no one will succeed in passing such dangerously stupid bills.

Learning with AI falls short compared to old-fashioned web search

Tags: tech, ai, machine-learning, gpt, learning, teaching

If there's one area where people should stay clear of LLMs, it's definitely when they want to learn a topic. This is one more study showing that the knowledge you retain from LLM briefs is shallower. The friction and the struggle to get to the information are a feature: our brain needs them to remember properly.

https://theconversation.com/learning-with-ai-falls-short-compared-to-old-fashioned-web-search-269760

The Psychogenic Machine: Simulating AI Psychosis, Delusion Reinforcement and Harm Enablement in Large Language Models

Tags: tech, ai, machine-learning, gpt, psychology, safety

The findings in this paper are chilling… especially considering what fragile people are doing with those chat bots.

https://arxiv.org/abs/2509.10970v1

Feeds, Feelings, and Focus: A Systematic Review and Meta-Analysis Examining the Cognitive and Mental Health Correlates of Short-Form Video Use

Tags: tech, social-media, cognition, psychology

Unsurprisingly, the news ain't good on the social media and short-form video front. Better stay clear of those.

https://psycnet.apa.org/fulltext/2026-89350-001.html

Do Not Put Your Site Behind Cloudflare if You Don't Need To

Tags: tech, cloud, decentralized, web

Friendly reminder following the Cloudflare downtime earlier this week.

https://huijzer.xyz/posts/123/do-not-put-your-site-behind-cloudflare-if-you-dont

Cloudflare outage on November 18, 2025

Tags: tech, cloud, complexity, safety, rust

Wondering what happened at Cloudflare? Here is their postmortem; it's an interesting read. Now, for Rust developers… this is a good illustration of why you should stay clear of unwrap() in production code.

https://blog.cloudflare.com/18-november-2025-outage/

Needy Programs

Tags: tech, ux, notifications

It kind of ignores the security impact of needed upgrades, but apart from that I largely agree. Most applications try to push more features in your face nowadays, with unneeded notifications and all… this is frankly exhausting for users.

https://tonsky.me/blog/needy-programs/

I think nobody wants AI in Firefox, Mozilla

Tags: tech, browser, ai, machine-learning, gpt, mozilla

Looks like Mozilla is doing everything it can to alienate the current Firefox user base and push it toward the forks.

https://manualdousuario.net/en/mozilla-firefox-window-ai/

DeepMind's latest: An AI for handling mathematical proofs

Tags: tech, ai, machine-learning, mathematics, google

That's an interesting approach. It's early days for this one and it clearly requires further work, but it seems like the proper path for math-related problems.

https://arstechnica.com/ai/2025/11/deepminds-latest-an-ai-for-handling-mathematical-proofs/

Production-Grade Container Deployment with Podman Quadlets

Tags: tech, systemd, containers, linux, system, podman

Podman is really a nice option for deploying containers nowadays.

https://blog.hofstede.it/production-grade-container-deployment-with-podman-quadlets/

Match it again Sam

Tags: tech, regex, rust

Nice alternative syntax to the good old regular expressions. Gives nice structure to it all. There's a Rust crate to try it out.

https://www.sminez.dev/match-it-again-sam/

10 Smart Performance Hacks For Faster Python Code

Tags: tech, python, performance

Some of this might sound obvious, I guess. Still, there are interesting lesser-known nuggets proposed here.

https://blog.jetbrains.com/pycharm/2025/11/10-smart-performance-hacks-for-faster-python-code/

Floodfill algorithm in Python

Tags: tech, python, algorithm, graphics

This is a nice little algorithm, and the post shows how to approach it in Python while keeping it efficient in terms of operations.

https://mathspp.com/blog/floodfill-algorithm-in-python
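
For readers who haven't met it, here is a minimal breadth-first flood fill sketch. It is not necessarily the approach the linked post takes: it assumes the image is a list of lists of colour values and uses 4-connectivity (up, down, left, right). Cells are recoloured when they are enqueued, so no cell is ever visited twice.

```python
from collections import deque


def flood_fill(grid, start, new_value):
    """Replace the 4-connected region containing `start` with `new_value`.

    `grid` is a list of rows and is modified in place.
    Returns the number of cells that were changed.
    """
    rows, cols = len(grid), len(grid[0])
    r0, c0 = start
    old_value = grid[r0][c0]
    if old_value == new_value:
        return 0  # nothing to do; also prevents endless re-queuing
    queue = deque([(r0, c0)])
    grid[r0][c0] = new_value  # mark on enqueue so each cell is queued once
    changed = 1
    while queue:
        r, c = queue.popleft()
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == old_value:
                grid[nr][nc] = new_value
                changed += 1
                queue.append((nr, nc))
    return changed


image = [
    [0, 0, 1],
    [0, 1, 1],
    [1, 1, 0],
]
changed = flood_fill(image, (0, 0), 7)
print(changed)  # 3
print(image)    # [[7, 7, 1], [7, 1, 1], [1, 1, 0]]
```

Using a `deque` keeps each pop O(1); an explicit queue (or stack) also avoids the recursion-depth limit a naive recursive version hits on large regions.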

AMD vs. Intel: a Unicode benchmark

Tags: tech, amd, intel, hardware, simd, performance

Clearly AMD is now well ahead of Intel in AVX-512 performance. This is somewhat unexpected.

https://lemire.me/blog/2025/11/16/amd-vs-intel-a-unicode-benchmark/

Memory is slow, Disk is fast

Tags: tech, memory, storage, performance, system

No, don't go assuming you can use disks instead of RAM; this is not what it's about. It does show ways to get more out of your disks, though. It's not something you always need, but sometimes it can be a worthwhile endeavor.

https://www.bitflux.ai/blog/memory-is-slow-part2/

Compiler Options Hardening Guide for C and C++

Tags: tech, c++, security

Good list of hardening options indeed. That's a lot to deal with, of course; let's hope this spreads and some defaults get changed to make it easier.

The problem with inferring from a function call operator is that there may be more than one

Tags: tech, c++, type-systems, safety

Type inference in C++ can indeed lead to this kind of trap. You need to be careful, as usual.

https://devblogs.microsoft.com/oldnewthing/20251002-00/?p=111647

There's always going to be a way to not code error handling

Tags: tech, programming, safety, failure

Depending on the ecosystem it's more or less easy indeed. Let's remember that error handling is one of the hard problems to solve.

https://utcc.utoronto.ca/~cks/space/blog/programming/AlwaysUncodedErrorHandling

Disallow code usage with a custom clippy.toml

Tags: tech, rust, tools, quality

Didn't know about that clippy feature. This is neat: it lets you precisely target some of your project's rules.

https://www.schneems.com/2025/11/19/find-accidental-code-usage-with-a-custom-clippytoml/

The Geometry Behind Normal Maps

Tags: tech, 3d, graphics, shader

Struggling to understand tangent space and normal maps? This post does a good job of explaining where they come from.

https://www.shlom.dev/articles/geometry-behind-normal-maps/
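
As a small worked illustration (not taken from the article), here is how a tangent-space normal is commonly decoded from an 8-bit texel and moved into world space with the TBN matrix, whose columns are the surface's tangent T, bitangent B and normal N. It assumes the usual encoding where each channel stores (component + 1) / 2 scaled to 0..255; some engines also flip the green channel (the OpenGL vs DirectX convention), which is left out here.

```python
import math


def decode_normal(rgb):
    """Map an 8-bit RGB texel to a unit vector in tangent space.

    Assumes each channel stores (component + 1) / 2 scaled to 0..255.
    """
    n = [c / 255.0 * 2.0 - 1.0 for c in rgb]
    length = math.sqrt(sum(x * x for x in n))
    return [x / length for x in n]


def tangent_to_world(n, tangent, bitangent, normal):
    """Multiply n by the TBN matrix whose columns are T, B and N."""
    return [
        n[0] * tangent[i] + n[1] * bitangent[i] + n[2] * normal[i]
        for i in range(3)
    ]


# The typical "flat" normal-map colour decodes to roughly (0, 0, 1):
# an unperturbed normal pointing straight out of the surface.
flat = decode_normal((128, 128, 255))

# On a face whose geometric normal is +X, that texel yields roughly +X.
world = tangent_to_world(flat, tangent=(0, 0, -1), bitangent=(0, 1, 0), normal=(1, 0, 0))
print([round(x, 3) for x in world])
```

This is why normal maps look mostly blue-violet: the unperturbed direction (0, 0, 1) encodes to about (128, 128, 255), and the same texture can be reused on any face because T, B and N carry the orientation.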

Know why you don't like OOP

Tags: tech, object-oriented

I don't get why object-oriented programming gets so much flak these days… It brings interesting tools and less interesting ones. Just pick and choose wisely, like for any other paradigm.

https://zylinski.se/posts/know-why-you-dont-like-oop/

Ditch your (mut)ex, you deserve better

Tags: tech, multithreading, safety

If you're dealing with multithreading you should not turn to mutexes by default indeed. Consider higher level primitives and patterns first.

https://chrispenner.ca/posts/mutexes
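
To make "higher level primitives and patterns" a bit more concrete, here is a minimal Python sketch of one such pattern: message passing through queues instead of threads mutating shared state under a hand-managed lock. It is an illustration, not necessarily what the linked post recommends (the function names are hypothetical). `queue.Queue` still locks internally, but that locking is encapsulated, so the application code never touches a mutex.

```python
import queue
import threading


def worker(jobs, results):
    # Workers share no mutable state; they only communicate through queues.
    while True:
        item = jobs.get()
        if item is None:  # sentinel: no more work for this worker
            break
        results.put(item * item)


def sum_of_squares(numbers, n_workers=4):
    numbers = list(numbers)
    jobs = queue.Queue()
    results = queue.Queue()
    threads = [
        threading.Thread(target=worker, args=(jobs, results))
        for _ in range(n_workers)
    ]
    for t in threads:
        t.start()
    for x in numbers:
        jobs.put(x)
    for _ in threads:
        jobs.put(None)  # one sentinel per worker
    # Only the main thread aggregates, so `total` needs no lock.
    total = sum(results.get() for _ in range(len(numbers)))
    for t in threads:
        t.join()
    return total


print(sum_of_squares(range(10)))  # 285
```

The design choice is ownership: each piece of data is owned by one thread at a time and handed over explicitly, which removes whole classes of forgotten-lock and lock-ordering bugs.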

Brownouts reveal system boundaries

Tags: tech, infrastructure, reliability, failure, resilience

Interesting point of view. Indeed, you probably want things to not be available 100% of the time. This forces you to see how resilient things really are.

https://jyn.dev/brownouts-reveal-system-boundaries/
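
A minimal sketch of the idea, assuming a "brownout" means deliberately failing a fraction of calls to a dependency so you can see which callers cope. All names here (`with_brownout`, `fetch_recommendations`, `render_page`) are hypothetical and not from the article.

```python
import random


class BrownoutError(RuntimeError):
    """Raised when a call is deliberately rejected during a brownout."""


def with_brownout(func, failure_rate, rng):
    """Wrap `func` so that roughly `failure_rate` of calls fail on purpose."""
    def wrapped(*args, **kwargs):
        if rng.random() < failure_rate:
            raise BrownoutError(f"{func.__name__} browned out")
        return func(*args, **kwargs)
    return wrapped


def fetch_recommendations(user_id):
    # Hypothetical non-critical dependency.
    return [f"item-{user_id}-{i}" for i in range(3)]


def render_page(user_id, recommend):
    # A resilient caller degrades gracefully instead of failing the page.
    try:
        recs = recommend(user_id)
    except BrownoutError:
        recs = []  # fallback: the page still renders, just without recs
    return {"user": user_id, "recommendations": recs}


rng = random.Random(42)  # seeded so the experiment is reproducible
flaky = with_brownout(fetch_recommendations, failure_rate=0.5, rng=rng)
pages = [render_page(u, flaky) for u in range(100)]
degraded = sum(1 for p in pages if not p["recommendations"])
print(f"{degraded} of {len(pages)} pages rendered in degraded mode")
```

Any caller without a `try`/fallback would crash during the brownout, which is exactly the hidden hard dependency the exercise is meant to expose.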

Tech Leads in Scrum

Tags: tech, agile, scrum, tech-lead, leadership

Interesting move in the Scrum definitions, from roles to accountabilities. The article does a good job explaining it but then somehow falls back into talking about roles. Regarding tech leads, they can indeed work in Scrum teams. Scrum doesn't talk about them simply because Scrum doesn't talk about technical skills.

https://www.patkua.com/blog/tech-leads-in-scrum/

How to Avoid Solo Product Leadership Failure with a Product Value Team

Tags: tech, agile, product-management

I wonder how the whole series will turn out. Anyway, I very much agree with this first post. Too often projects have a single product manager, and that's a problem.

Bye for now!

21 Nov 2025 10:46am GMT

Fedora People

Fedora Community Blog: Community Update – Week 47

This is a report created by the CLE Team, a team of community members working in various Fedora groups, for example Infrastructure, Release Engineering, Quality, etc. This team is also moving some initiatives forward inside the Fedora project.

Week: 17 November - 21 November 2025

Fedora Infrastructure

This team is taking care of day to day business regarding Fedora Infrastructure.

It's responsible for services running in Fedora infrastructure.

Ticket tracker

- The intermittent 503 timeout issues plaguing the infra appear to finally be resolved, kudos to Kevin and the Networking team for tracking it down.

- The Power10 hosts which caused the outage last week are now installed and ready for use.

- Crashlooping OCP worker caused issues with log01 disk space

- Monitoring migration to Zabbix is moving along, with discussions of when to make it "official".

- AI scrapers continue to cause significant load. A change has been made to bring some of the hits to src.fpo under the Varnish cache, which may help.

- Update/reboot cycle planned for this week.

CentOS Infra including CentOS CI

This team is taking care of day to day business regarding CentOS Infrastructure and CentOS Stream Infrastructure.

It's responsible for services running in CentOS Infrastructure and CentOS Stream.

CentOS ticket tracker

CentOS Stream ticket tracker

- HDD issue on internal storage server for CentOS Stream build infra

- Update Kmods SIG Tags (remove EPEL)

- cbs signing queue stuck

- Verify postfix spam checks

- Deploy new x86_64/aarch64 koji builders for CBS

- Prepare new signing host for cbs in RDU3

- https://cbs.centos.org is now fully live from RDU3 (DC-move) : kojihub/builders in rdu3 and/or remote AWS VPC isolated network, and also signing/releng process

Release Engineering

This team is taking care of day to day business regarding Fedora releases.

It's responsible for releases, retirement process of packages and package builds.

Ticket tracker

- EPEL 10.1 ppc64le buildroot broken

- Transfer device-mapper-multipath ownership to real person

- Transfer repo ownership to real person

- fcitx5-qt update didn't get to updates repository

- Fedora 43 branch for pgbouncer stuck

- Help with rebuilds

- Follow up on Packages owned by invalid users fesco#3475

RISC-V

- F43 RISC-V rebuild status: the delta for F43 RISC-V is still about ~2.5K packages compared to F43 primary. Current plan: once we hit a ~2K package delta, we'll start focusing on the quality of the rebuild and fix whatever important stuff needs fixing. (Here is the last interim update to the community.)

- Community highlight: David Abdurachmanov (Rivos Inc) has been doing excellent work on the Fedora 43 rebuild, doing a lot of heavy lifting. He also provides quite a bit of personal hardware for Koji rebuilders.

Forgejo

Updates of the team responsible for Fedora Forge deployment and customization.

Ticket tracker

- Redirects for attachments retention during repository migration [A] [B] [C] [D]

- Fedora -> Forgejo talk for Fedora 43 release party has been recorded

- Valkey self managed cluster deployed for Forgejo caching on staging - prod next

- Private issues - Analysis on database schema, access protection, API layer

- Forgejo support for Konflux explored; weighed in at the Konflux roadmap meeting

- Action runners - cleanup for finished runners and workaround for service routes, demo of declarativeness recorded

- Migration request for Commops SIG, Cloud SIG and AI/ML SIG completed

- Fixes for user creation issue upstreamed, fixed Anubis problem in the production

List of new releases of apps maintained by I&R Team

Minor update of FMN from 3.3.0 to 3.4.0

Minor update of FASJSON from 1.6.0 to 1.7.0

Minor update of Noggin from 1.10.0 to 1.11.0

If you have any questions or feedback, please respond to this report or contact us on #admin:fedoraproject.org channel on matrix.

The post Community Update - Week 47 appeared first on Fedora Community Blog.

21 Nov 2025 10:00am GMT

Planet Ubuntu

Ubuntu Blog: Open design: the opportunity design students didn’t know they were missing

What if you could work on real-world projects, shape cutting-edge technology, collaborate with developers across the world, make a meaningful impact with your design skills, and grow your portfolio… all without applying for an internship or waiting for graduation?

That's what we aim to do with open design: an opportunity for universities and students of any design discipline.

What is open design, and why does it matter?

Before we go further, let's talk about what open design is. Many open source tools are built by developers, for developers, without design in mind. When open source software powers 90% of the digital world (PDF), it leaves everyday users feeling overwhelmed or left out. Open design wants to bridge that gap.

We aim to introduce human-centred thinking into open source development, enhancing these tools to be more intuitive, inclusive, and user-friendly. Most open source projects focus on code contributions, neglecting design contributions. That leaves a vast number of projects without a design system, accessibility audits, or onboarding documentation. That's where designers come in, helping shape better user experiences and more welcoming communities.

Open design is about more than just aesthetics. Open design helps to make technology work for people; that's exactly what open source needs. Learn more about open design on our webpage.

We want to raise awareness for the projects, the problems that currently exist, and how we can fix them together, and encourage universities and students to become advocates of open design.

We want universities to connect their students to real-world, meaningful design opportunities in a field that is currently lacking the creativity of designers. Our goal is to help and motivate students to bring their design skills into open source projects and become advocates, to make open design accessible, practical, and empowering!

How Canonical helps universities access open design

We want to help universities help students to access:

- Real-world experiences: Students apply their design skills to global projects to create valuable, demonstrable outcomes, beyond hypothetical briefs

- Interdisciplinary growth: Empower students to gain collaborative experience with developers, and navigate real tech workflows

- Accessible opportunities: No interviews, no barriers; just impact, experience, and learning

We have provided universities with talks and project briefs, enabling them to prepare students to utilise their expertise and design a brighter future for open source. If you're a department leader, instructor, or coordinator, exploring open source and open design will help you to give your students unique access to industry-aligned experiences, while embedding values of collaboration, open contribution, and inclusive design.

Why should students care?

If you're a student in UX, UI, interaction, service, visual, HCI design, or any other field with design influence, you've been told how important it is to build your portfolio, gain hands-on experience, and collaborate with cross-functional teams. Open design is your opportunity to do so.

The best part is, you don't have to write a single line of code to make a difference! Open source projects are looking for:

- UX/UI improvements

- Accessibility and heuristic audits

- User research and persona development

- User flows and wireframes

- Information architecture reviews

- Design documentation and feedback systems

If you're in a design course, you already have, or are developing, the skills that open-source projects need.

Open design is an opportunity to develop by collaborating across disciplines, navigating ambiguity, and advocating for users: skills employers value. With open design, you'll gain confidence in presenting ideas, working with international teams, and handling feedback in a real-world setting, growing in ways that classroom projects and internships often don't offer.

If you're aiming for a tech-focused design career, open design is one of the most impactful and distinctive ways to stand out!

How can you start?

Getting started is easier than you think, even if GitHub looks scary at first. Here's how:

- Learn the basics of GitHub

We've made a video guide to understanding GitHub, and curated a list of other videos to get to grips with GitHub.

- Find a project on contribute.design

It's like a job board for design contributions. These projects are waiting for you.

- Understand the project's needs

Most projects on contribute.design list what they're looking for in a .design file or DESIGN.md guidelines.

- Pick an issue, or propose your own

Navigate to the Issues tab of the project repo, where you can filter for issues labelled for design. You can also use this tab to propose any issues you discover in the project.

- Contribute, collaborate, grow

Start adding your ideas, questions, and solutions to issues. You'll be collaborating, communicating, and making meaningful contributions.

You can explore more projects through the GitHub Explore page, but not every project will have a design process in place; that's where your skills are especially valuable. If you don't see design issues, treat the project as a blank canvas. Suggest checklists, organise a design system, or improve documentation. The power is in your hands!

Reach out to maintainers, join community discussions, and don't hesitate to introduce design-focused thinking. Your initiative can spark meaningful change and help open source become more user-friendly, one project at a time.

View every project as an opportunity; you don't need an invitation to contribute, just curiosity, creativity, and the willingness to collaborate.

Interested?

We're looking for universities and departments interested in introducing open design to their students. Whether that's through a talk, module project briefs, or anything else you'd like to see, we're excited to find ways to work together and bring open design to campus.

Are you a program director, a design department, a student group, or an interested student? Let's talk!

Reach out at opendesign@canonical.com

21 Nov 2025 9:39am GMT

Ubuntu Blog: Anbox Cloud 1.28.0 is now available!

Enhanced Android device simulation, smarter diagnostics, and OIDC-enforced authentication

The Anbox Cloud team has been working around the clock to release Anbox Cloud 1.28.0! We're very proud of this release that adds robust authentication, improved diagnostic tools, and expanded simulation options, making Anbox Cloud even more secure, flexible, and developer-friendly for running large-scale Android workloads.

Let's go over the most significant changes in this new version.

Strengthened authentication and authorization

Our OpenID Connect (OIDC)-based authentication and authorization framework is now stable with Anbox Cloud 1.28.0. This new framework provides a standardized approach for controlling access across web and command-line clients. Operators can now assign permissions through entitlements with fine-grained control, define authorization groups, and create and manage identities.

Configuring user permissions, understanding the idea of identities and groups, and looking through the entire list of available entitlements are all thoroughly covered in the new guides that come with this release. This represents a significant advancement in the direction of a more uniform and standards-based access model for all Anbox Cloud deployments.

Simulated SMS support

This is one of our most exciting new features: developers testing telephony-enabled applications in Anbox Cloud can now simulate incoming SMS messages using the Anbox runtime HTTP API.

This new functionality allows messages to trigger notifications the same way they would on a physical device, generating more realistic end-to-end scenarios. A new how-to guide in our documentation provides detailed instructions on how to enable and use this feature.

Protection against accidental deletions

Because we know accidents happen (especially in production environments…), in order to reduce operational risk, this release introduces the ability to protect instances from accidental deletion. This option can be enabled directly in the dashboard either when creating a new instance or later from the Instance details page under the Security section.

Once this protection option is turned on, the instance cannot be deleted, even during bulk delete operations, until the configuration is reset. This simple safeguard helps operators preserve important data and prevents costly mistakes in busy environments.

Improved ADB share management

Working with ADB (the Android Debug Bridge) has also become more flexible. Anbox Cloud now allows up to five ADB shares to be managed directly from the dashboard. For those who prefer the command line, the new amc connect command provides an alternative to the existing anbox-connect tool. Together, these improvements make it easier for developers to manage and maintain multiple debugging or testing sessions at once.

New diagnostic facility for troubleshooting

With version 1.28.0, we're introducing a new diagnostic facility in the dashboard. This tool is designed to simplify troubleshooting for both the instances and the streaming sessions themselves.

This feature helps collect relevant diagnostic data automatically, thereby reducing the work needed to identify and resolve issues. It also makes collaboration with our Canonical support teams more efficient, as users can now provide consistent and accurate diagnostic information in a structured, standard format.

Sensor support in the Streaming SDK

Here's another hotly anticipated feature: the Anbox Streaming SDK gains expanded sensor support in this release. Our SDK now includes gyroscope, accelerometer and orientation sensors, allowing developers to test applications more interactively.

Sensor support is disabled by default but can be easily enabled in the streaming client configuration. This addition opens up new possibilities for interactive use cases, such as gaming.

Upgrade now and stay tuned!

We think that Anbox Cloud 1.28.0 is our best release to date, and we are pleased to keep providing a feature-rich, scalable, and safe solution for managing Android workloads on a large scale.

This latest version makes it easier than ever for developers and operators to create and test Android apps by introducing more precise device simulation, improved troubleshooting tools, and stricter access controls, as we've explained above.

Try it now and stay tuned for further developments in our upcoming releases. For detailed instructions on how to upgrade your existing deployment, please refer to the official documentation.

Further reading

Official documentation

Anbox Cloud Appliance

Learn more about Anbox Cloud or contact our team to discuss your use case

Android is a trademark of Google LLC. Anbox Cloud uses assets available through the Android Open Source Project.

21 Nov 2025 8:00am GMT

Planet GNOME

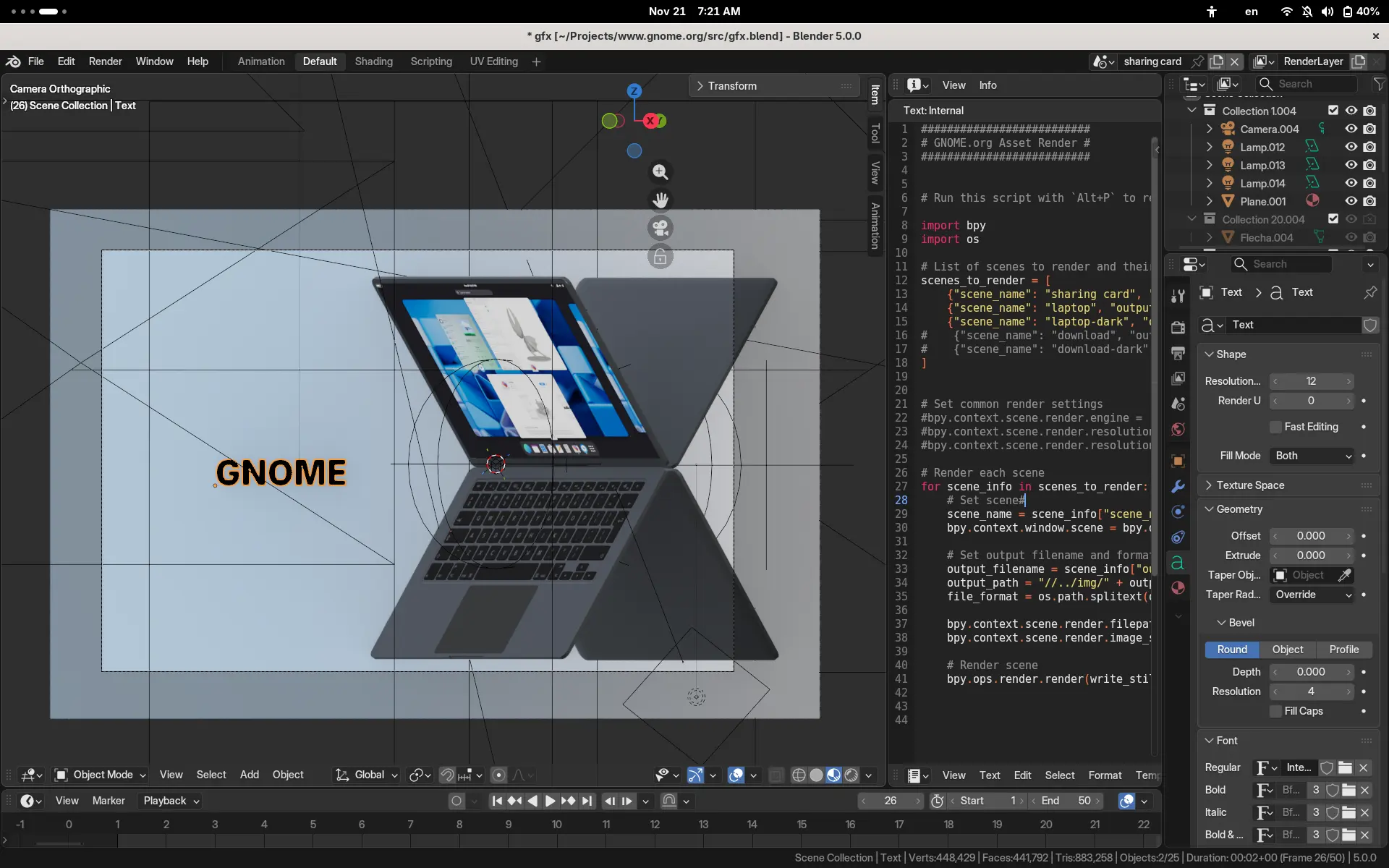

Jakub Steiner: 12 months instead of 12 minutes

Hey Kids! Other than raving about GNOME.org being static HTML, there's one more aspect I'd like to get back to in this writing exercise called a blog post.

I've recently come across an appalling genAI website for a project I hold dear, so I thought I'd give a glimpse of how we used to do things in the olden days. It is probably not going to be done this way anymore in the enshittified timeline we ended up in. The two options available these days are: a quickly generated slop website, or no website at all, because privately owned social media is where it's at.

The wannabe-catchy title of this post comes from the fact that the website underwent numerous iterations (iteration is the core principle of good design) spanning over a year before we introduced the redesign.

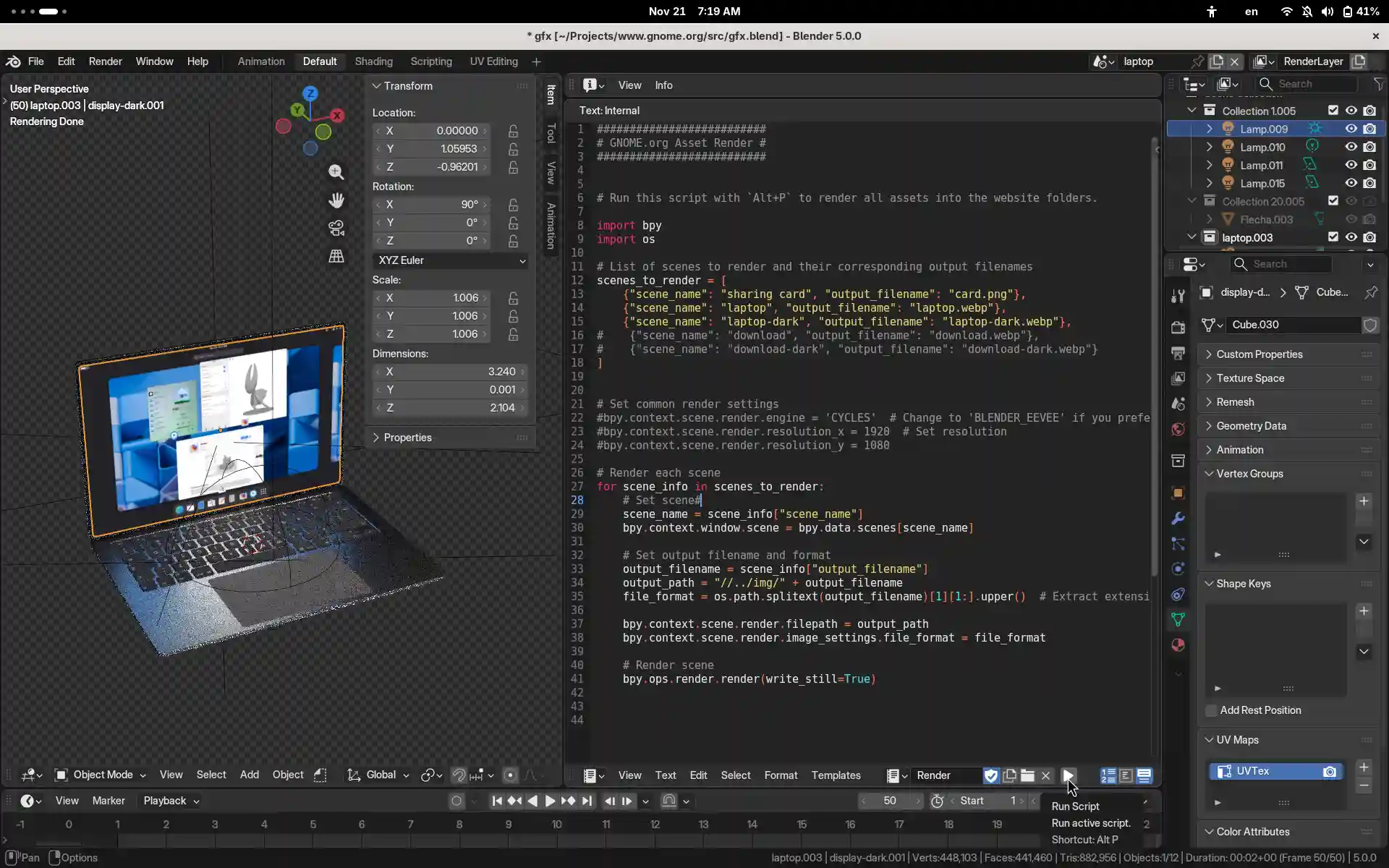

So how did we end up with a 3D model of a laptop for the hero image on the GNOME website, rather than something generated in a couple of seconds with a small town's worth of drinking water, or a simple SVG illustration?

The hero image is static now, but it used to be a scroll-based animation in the early days. It could have become a simple vector-style illustration, but I really enjoy the light interaction between the screen and the laptop, especially between the light and dark variants. Toggling dark mode has been my favorite fidget spinner.

Creating light/dark variants manually every release is a bit tedious, but automating it is still a bit too hard to pull off (the taking-screenshots-of-a-nightly-OS bit). There's also the fun of picking a theme for the screenshot rather than doing the same thing over and over. Doing the screenshotting manually meant automating the rest, as a 6-month cycle is enough time to forget how things are done. The process is held together with duct tape, I mean a Python script, that renders the website image assets from the few screenshots captured using GNOME OS running inside Boxes. Those are two great invisible things made by amazing individuals that could go away in an instant, and that thought gives me a dose of anxiety.

This does take a minute to render on a laptop (CPU only Cycles), but is a matter of a single invocation and a git commit. So far it has survived a couple of Blender releases, so fingers crossed for the future.
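
The post doesn't show the script, so the following is only a hypothetical sketch of what "a single invocation" driving Blender headlessly could look like. It assumes one `.blend` file (`hero.blend`) containing one scene per variant (`light`, `dark`) and an output path pattern; all of those names are made up. It relies on Blender processing command-line arguments in order (so `-S`, `-E` and `-o` must come before `-f`, which triggers the render) and on Cycles reading `--cycles-device` from after the `--` separator.

```python
import subprocess


def blender_render_cmd(blend_file, scene, output_prefix, frame=1):
    """Build a background (no UI) Blender render command line."""
    return [
        "blender",
        "-b", blend_file,          # run in background mode with this file
        "-S", scene,               # hypothetical per-variant scene name
        "-E", "CYCLES",            # render engine
        "-o", output_prefix,       # output path prefix ("//" = next to .blend)
        "-f", str(frame),          # render a single frame (must come last)
        "--",                      # Blender stops parsing options here
        "--cycles-device", "CPU",  # CPU-only Cycles, as in the post
    ]


def render_variants(blend_file="hero.blend", variants=("light", "dark"), dry_run=True):
    """Build (and, unless dry_run, run) one render per hypothetical variant."""
    cmds = [
        blender_render_cmd(blend_file, v, f"//render/hero-{v}-")
        for v in variants
    ]
    if not dry_run:
        for cmd in cmds:
            subprocess.run(cmd, check=True)  # stop at the first failed render
    return cmds


for cmd in render_variants():
    print(" ".join(cmd))
# blender -b hero.blend -S light -E CYCLES -o //render/hero-light- -f 1 -- --cycles-device CPU
# blender -b hero.blend -S dark -E CYCLES -o //render/hero-dark- -f 1 -- --cycles-device CPU
```

Keeping the commands as data (with a dry run by default) makes it easy to check what will be rendered before committing the new assets.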

Sophie has recently been looking into translations, so we might reconsider that 3D approach if translated screenshots become viable (and have them contained in an SVG, similar to how os.gnome.org is done). So far the 3D hero has always been in sync with the release, unlike in our WordPress days. Fingers crossed.

21 Nov 2025 7:44am GMT

Planet Debian

Daniel Kahn Gillmor: Transferring Signal on Android

Transferring a Signal account between two Android devices

I spent far too much time recently trying to get a Signal Private Messenger account to transfer from one device to another.

What I eventually found worked was a very finicky path to enable functioning "Wi-Fi Direct", which I go into below.

I also offer some troubleshooting and recovery-from-failure guidance.

This blog post uses "original device" to refer to the Android pocket supercomputer that already has Signal installed and set up, and "new device" to mean the Android device that doesn't yet have Signal on it.

Why Transfer?

Signal Private Messenger is designed with the expectation that the user has a "primary device", which is either an iPhone or an Android pocket supercomputer.

If you have an existing Signal account, and try to change your primary device by backing up and restoring from backup, it looks to me like Signal will cause your long-term identity keys to be changed. This in turn causes your peers to see a message like "Your safety number with Alice has changed."

These warning messages are the same messages that they would get if an adversary were to take over your account. So it's a good idea to minimize them when there isn't an account takeover - false alarms train people to ignore real alarms.

You can avoid "safety number changed" warnings by using Signal's "account transfer" process during setup, at least if you're transferring between two Android devices.

However, my experience was that the transfer between two Android devices was very difficult to get to happen at all. I ran into many errors trying to do this, until I finally found a path that worked.

Dealing with Failure

After each failed attempt at a transfer, my original device's Signal installation would need to be re-registered. Having set a PIN meant that i could re-register the device without needing to receive a text message or phone call.

Set a PIN before you transfer!

Also, after a failure, you need to re-link any "linked device" (i.e. any Signal Desktop or iPad installation). If any message came in during the aborted transfer, the linked device won't get a copy of that message.

Finally, after a failed transfer, i recommend completely uninstalling Signal from the new device, and starting over with a fresh install on the new device.

Permissions

My understanding is that Signal on Android uses Wi-Fi Direct to accomplish the transfer. But to use Wi-Fi Direct, Signal needs to have the right permissions.

On each device:

- Entirely stop the Signal app

- Go to Settings » Apps » Signal » Permissions

- Ensure that the following permissions are all enabled whenever the app is running:

- Location

- Nearby Devices

- Network

Preparing for Wi-Fi Direct

The transfer process depends on "Wi-Fi Direct", which is a bit of a disaster on its own.

I found that if i couldn't get Wi-Fi Direct to work between the two devices, then the Signal transfer was guaranteed to fail.

So, for clearer debugging, i first tried to establish a Wi-Fi Direct link on Android, without Signal being involved at all.

Setting up a Wi-Fi Direct connection directly failed, multiple times, until i found the following combination of steps, to be done on each device:

- Turn off Bluetooth

- Ensure Wi-Fi is enabled

- Disconnect from any Wi-Fi network you are connected to (go to the "Internet" or "Wi-Fi" settings page, long-press on the currently connected network, and choose "Disconnect"). If your device knows how to connect to multiple local Wi-Fi networks, disconnect from each of them in turn until you are in a stable state where Wi-Fi is enabled, but no network is connected.

- Near the bottom of the "Internet" or "Wi-Fi" settings page, choose "Network Preferences" and then "Wi-Fi Direct"

- If there are any entries listed under "Remembered groups", tap them and choose to "Forget this group"

- If there are Peer devices that say "Invited", tap them and choose to "Cancel invitation"

I found that this configuration is the most likely to enable a successful Wi-Fi Direct connection, where clicking "invite" on one device would pop up an alert on the other asking to accept the connection, and result in a "Connected" state between the two devices.

Actually Transferring

Start with both devices fully powered up and physically close to one another (on the same desk should be fine).

On the new device:

- Reboot the device, and log into the profile you want to use

- Enable Internet access via Wi-Fi.

- Remove any old version of Signal.

- Install Signal, but DO NOT OPEN IT!

- Set up the permissions for the Signal app as described above

- Open Signal, and choose "restore or transfer" -- you still need to be connected to the network at this point.

- The new device should display a QR code.

On the original device:

- Reboot the device, and log into the profile that has the Signal account you're looking to transfer

- Enable Internet access via Wi-Fi, using the same network that the new device is using.

- Make sure the permissions for Signal are set up as described above

- Open Signal, and tap the camera button

- Point the camera at the new device's QR code

Now tap the "continue" choices on both devices until they both display a message that they are searching for each other. You might see the location indicator (a green dot) turn on during this process.

If you see an immediate warning of failure on either device, you probably don't have the permissions set up right.

You might see an alert (a "toast") on one of the devices that the other one is trying to connect. You should click OK on that alert.

In my experience, both devices are likely to get stuck "searching" for each other. Wait for both devices to show Signal's warning that the search has timed out.

At this point, leave Signal open on both devices, and go through all the steps described above to prepare for Wi-Fi Direct. Your Internet access will be disabled.

Now, tap "Try again" in Signal on both devices, pressing the buttons within a few seconds of each other. You should see another alert that one device is trying to connect to the other. Press OK there.

At this point, the transfer should start happening! The old device will indicate what percentage has been transferred, and the new device will indicate how many messages have been transferred.

When this is all done, re-connect to Wi-Fi on the new device.

Temporal gap for Linked Devices

Note that during this process, if new messages are arriving, they will be queuing up for you.

When you reconnect to Wi-Fi, the queued messages will flow to your new device. But the transfer process automatically unlinks any linked devices. So if you want to keep the gap for your Signal Desktop installation as short as possible, you should re-link it promptly after the transfer completes.

Clean-up

After all this is done successfully, you probably want to go into the Permissions settings and turn off the Location and Nearby Devices permissions for Signal on both devices.

I recommend also going into Wi-Fi Direct and removing any connected devices and forgetting any existing connections.

Conclusion

This is an abysmally clunky user experience, and I'm glad I don't have to do it often. It would have been much simpler to make a backup and restore from it, but I didn't want to freak out my contacts with a safety number change.

By contrast, when i wanted to extend a DeltaChat account across two devices, the transfer was prompt and entirely painless: i just had to make sure the devices were on the same network, and then scan a QR code from one to the other. And there was no temporal gap for any other devices. And i could use Delta on both devices simultaneously until i was convinced that it would work on the new device, since Delta doesn't have the concept of a primary account.

I wish Signal made it that easy! Until it's that easy, i hope the processes described here are useful to someone.

21 Nov 2025 5:00am GMT

Planet GNOME


This Week in GNOME: #226 Exporting Events

Update on what happened across the GNOME project in the week from November 14 to November 21.

GNOME Core Apps and Libraries

Calendar ↗

A simple calendar application.

Hari Rana | TheEvilSkeleton (any/all) 🇮🇳 🏳️⚧️ says

Thanks to FineFindus, who previously worked on exporting events as .ics files, GNOME Calendar can now export calendars as .ics files, courtesy of merge request !615! This will be available in GNOME 50.
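For readers unfamiliar with the format, an exported calendar is an iCalendar (RFC 5545) text file: a VCALENDAR wrapper containing one VEVENT per event, with CRLF line endings. The sketch below writes a minimal one. It is only an illustration of the file format, not how GNOME Calendar implements its export; the PRODID value and the (uid, summary, start, end) tuple shape are assumptions, and RFC 5545 line folding at 75 octets is omitted for brevity.

```python
from datetime import datetime, timezone


def ics_escape(text: str) -> str:
    # RFC 5545 TEXT escaping: backslash first, then semicolon, comma, newline.
    return (text.replace("\\", "\\\\").replace(";", "\\;")
                .replace(",", "\\,").replace("\n", "\\n"))


def fmt_utc(dt: datetime) -> str:
    """Format a timezone-aware datetime as an RFC 5545 UTC date-time."""
    return dt.astimezone(timezone.utc).strftime("%Y%m%dT%H%M%SZ")


def export_calendar(events, prodid: str = "-//Example//Calendar Export Sketch//EN") -> str:
    """events: iterable of (uid, summary, start, end) with timezone-aware datetimes."""
    now = fmt_utc(datetime.now(timezone.utc))
    lines = ["BEGIN:VCALENDAR", "VERSION:2.0", f"PRODID:{prodid}"]
    for uid, summary, start, end in events:
        lines += [
            "BEGIN:VEVENT",
            f"UID:{uid}",
            f"DTSTAMP:{now}",
            f"DTSTART:{fmt_utc(start)}",
            f"DTEND:{fmt_utc(end)}",
            f"SUMMARY:{ics_escape(summary)}",
            "END:VEVENT",
        ]
    lines.append("END:VCALENDAR")
    # RFC 5545 requires CRLF line endings, including after the last line.
    return "\r\n".join(lines) + "\r\n"
```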

Hari Rana | TheEvilSkeleton (any/all) 🇮🇳 🏳️⚧️ says

After two long and painful years, several design iterations, and more than 50 rebases later, we finally merged the infamous, trauma-inducing merge request !362 on GNOME Calendar. This changes the entire design of the quick-add popover by merging both pages into one and updating the style to conform better with modern GNOME designs. Additionally, it remodels the way the popover retrieves and displays calendars, removing 120 lines of code.

The calendars list in the quick-add popover has undergone accessibility improvements, providing a better experience for assistive technologies and keyboard users. Specifically: tabbing from outside the list will focus the selected calendar in the list; tabbing from inside the list will skip the entire list; arrow keys automatically select the focused calendar; and finally, assistive technologies now inform the user of the checked/selected state.
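The keyboard behaviour described above resembles the familiar radio-group pattern: the whole list is a single Tab stop, entering it focuses the selected item, and arrow keys move focus and selection together. The sketch below models that behaviour in plain Python; CalendarList and its methods are hypothetical and not GNOME Calendar or GTK code, and clamping at the ends (rather than wrapping) is an assumption.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CalendarList:
    """Hypothetical model of the quick-add calendar list's keyboard handling."""
    calendars: list[str]
    selected: int = 0
    focused: Optional[int] = None  # None means focus is outside the list

    def tab_in(self) -> None:
        # Tabbing into the list lands on the currently selected calendar.
        self.focused = self.selected

    def tab_out(self) -> None:
        # Tabbing from inside leaves the list instead of visiting every row.
        self.focused = None

    def arrow(self, step: int) -> None:
        # Arrow keys move focus and select the focused calendar (clamped).
        if self.focused is None:
            return
        last = len(self.calendars) - 1
        self.focused = max(0, min(last, self.focused + step))
        self.selected = self.focused
```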

Admittedly, the quick-add popover is currently unreachable via keyboard because we lack the resources to implement keyboard focus for month and week cells. We are currently trying to address this issue in merge request !564, and hope to get it merged for GNOME 50, but it's a significant undertaking for a single unpaid developer. If it is not too much trouble, I would really appreciate some donations, to keep me motivated to improve accessibility throughout GNOME and sustain myself: https://tesk.page/#donate

This merge request allowed us to close 4 issues, and will be available in GNOME 50.

Files ↗

Providing a simple and integrated way of managing your files and browsing your file system.

Peter Eisenmann says

Files landed two big changes by Khalid Abu Shawarib this week.

The first change adds a bunch of tests, bringing the total coverage of the huge code base close to 30%. This will prevent regressions in previously uncovered areas such as bookmarking or creating files.

The second change is more noticeable: the way thumbnails are loaded was largely rewritten to finally make full use of GTK4's recycling views. It took a lot of code detangling to get thumbnails to load asynchronously, but the result is a great speedup, making thumbnails appear faster than ever before. 🚀
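One general problem with loading thumbnails asynchronously in a recycling view is that a row widget may be rebound to a different file while the previous file's thumbnail is still loading. The asyncio sketch below shows one common way to handle that: cancel the pending load on rebind and check the binding before applying a result. It is not the Files (Nautilus) implementation, which is written in C; RecycledCell and load_thumbnail are hypothetical stand-ins.

```python
import asyncio
from typing import Optional


async def load_thumbnail(path: str) -> str:
    # Hypothetical stand-in for real decoding work; sleeps to simulate I/O.
    await asyncio.sleep(0.01)
    return f"thumb:{path}"


class RecycledCell:
    """A list cell that gets rebound to different files as the user scrolls."""

    def __init__(self) -> None:
        self.path: Optional[str] = None
        self.image: Optional[str] = None
        self._task: Optional[asyncio.Task] = None

    def bind(self, path: str) -> None:
        # A recycled cell may still be loading its previous file's thumbnail;
        # cancel it so a stale image never lands in this cell.
        if self._task is not None:
            self._task.cancel()
        self.path, self.image = path, None
        self._task = asyncio.create_task(self._load(path))

    async def _load(self, path: str) -> None:
        image = await load_thumbnail(path)
        if self.path == path:  # still bound to the same file?
            self.image = image


async def demo() -> Optional[str]:
    cell = RecycledCell()
    cell.bind("a.png")
    cell.bind("b.png")  # scrolled before a.png finished, so its load is cancelled
    await asyncio.sleep(0.05)
    return cell.image


if __name__ == "__main__":
    print(asyncio.run(demo()))
```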

Attached is a comparison of reloading a folder before and after the change

Libadwaita ↗

Building blocks for modern GNOME apps using GTK4.

Alice (she/her) 🏳️⚧️🏳️🌈 announces

As of today, libadwaita supports the new reduced motion preference, both by supporting the @media (prefers-reduced-motion: reduce) query from CSS and by using simple crossfade transitions where appropriate (e.g. in AdwDialog, AdwNavigationView and AdwTabOverview).

Alice (she/her) 🏳️⚧️🏳️🌈 reports

libadwaita has deprecated the style-dark.css, style-hc.css and style-hc-dark.css resources that AdwApplication automatically loads. They still work, but will be removed in 2.0. Applications are recommended to switch to style.css and media queries for dark and high contrast styles.

GTK ↗

Cross-platform widget toolkit for creating graphical user interfaces.

Matthias Clasen reports

This week's GTK 4.21.2 release includes initial support for the CSS backdrop-filter property. The GSK APIs enabling this are new copy/paste and composite render nodes, which allow flexible reuse of the 'background' at any point in the scene graph. We are looking forward to your experiments with this!

GLib ↗

The low-level core library that forms the basis for projects such as GTK and GNOME.

Philip Withnall says

Luca Bacci has dug into an intermittent output buffering issue with GLib on Windows, which should fix some CI issues and opt various GLib utilities into more modern features on Windows - https://gitlab.gnome.org/GNOME/glib/-/merge_requests/4788

Third Party Projects

Alain announces

Planify 4.16.0 - Natural dates, smoother flows, and smarter task handling

This week, Planify released version 4.16.0, bringing several improvements that make task management faster, more intuitive, and more predictable on GNOME.

The highlight of this release is natural language date parsing, now enabled by default in Quick Add. You can type things like "tomorrow 3pm", "next Monday", "25/12/2024", or "ahora", and Planify will automatically convert it into a proper scheduled date. Spanish support has also been added, including expressions like mañana, pasado mañana, próxima semana, and more.
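To illustrate what natural language date parsing involves, here is a tiny rule-based sketch covering a few of the phrases mentioned above in English and Spanish. It is not Planify's parser (Planify is a separate Vala codebase); the rule set, the day/month/year order for numeric dates, and reading "próxima semana" as "seven days from today" are all assumptions, and times such as "3pm" are not handled.

```python
import re
from datetime import date, timedelta
from typing import Optional

WEEKDAYS = {"monday": 0, "tuesday": 1, "wednesday": 2, "thursday": 3,
            "friday": 4, "saturday": 5, "sunday": 6}

# Relative day offsets, English and Spanish (illustrative subset).
RELATIVE = {"today": 0, "hoy": 0, "tomorrow": 1, "mañana": 1, "pasado mañana": 2}


def parse_date(text: str, today: date) -> Optional[date]:
    """Tiny rule-based parser for a handful of phrases; None if unrecognised."""
    t = text.strip().lower()
    if t in RELATIVE:
        return today + timedelta(days=RELATIVE[t])
    m = re.fullmatch(r"next (\w+)", t)
    if m and m.group(1) in WEEKDAYS:
        # "next Monday": the first matching weekday strictly after today (1..7 days).
        ahead = (WEEKDAYS[m.group(1)] - today.weekday() - 1) % 7 + 1
        return today + timedelta(days=ahead)
    if t in ("next week", "próxima semana"):
        return today + timedelta(days=7)  # assumption: one week from today
    m = re.fullmatch(r"(\d{1,2})/(\d{1,2})/(\d{4})", t)
    if m:
        day, month, year = map(int, m.groups())  # assumption: day/month/year
        try:
            return date(year, month, day)
        except ValueError:  # e.g. 31/02/2024
            return None
    return None
```

With today = date(2025, 11, 21) (a Friday), "next Monday" gives 24 November and "next Friday" gives 28 November, since the rule always looks strictly forward.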

Keyboard navigation got a boost too:

- Ctrl + D now opens the date picker instantly

- Ctrl + K toggles "Keep adding" mode

- And several shortcuts were cleaned up for more predictable behavior

Planify also adds label management in the task context menu, making it easier to add or remove labels without opening the full editor.

For calendar users, event items now open a richer details popover, with automatic detection of Google Meet and Microsoft Teams links, making online meetings just one click away.

As always, translations, bug fixes, and general UI refinements round out the update.

Planify 4.16.0 is available now on Flathub

Jan-Willem reports

This week I released Java-GI version 0.13.0, a Java language binding for GNOME and other libraries that support GObject-Introspection, based on OpenJDK's new FFM functionality. Some of the highlights in this release are:

- Bindings for LibRsvg, GstApp (for GStreamer) and LibSecret have been added

- The website for Java-GI has its own domain name now: java-gi.org, and this is also used in all module- and package names

- Thanks to GObject-Introspection's extensive testsuite, I've implemented over 900 testcases to test the Java bindings, and fixed many bugs along the way.

I hope that Java-GI will help Java (or Kotlin, Scala, Clojure, …) developers to create awesome new GNOME apps!

Quadrapassel ↗

Fit falling blocks together.

Will Warner says

Quadrapassel 49.2 is out! Here is what's new:

- Updated translations: Ukrainian, Russian, Brazilian Portuguese, Chinese (China), Slovenian, Georgian

- Made the 'P' key pause the game

- Replaced the user help docs with a 'Game Rules' dialog

- Stopped the menu button taking focus

- Fixed a bug where the game's score would not be recorded when the app was quit

- Added total rows and level information to scores

Phosh ↗

A pure wayland shell for mobile devices.

Guido announces

Phosh 0.51.0 is out:

There's a new quick setting that allows you to toggle location services on and off, and the ☕ quick setting can now disable itself after a certain amount of time (check here on how to configure the intervals). We also added a toggle to enable automatic brightness from the top panel; when it is enabled, the brightness slider acts as an offset to the current brightness value.
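One way to read "the slider acts as an offset" is that the automatic level stays in charge and the user's slider only nudges it up or down. The sketch below shows that idea; it is not Phosh code, and both the 0.0 to 1.0 ranges and the choice that the slider's midpoint means "no offset" are assumptions.

```python
def effective_brightness(auto_level: float, slider: float) -> float:
    """
    auto_level: brightness chosen by the automatic logic, 0.0..1.0
    slider: user's slider position, 0.0..1.0 (assumption: 0.5 means no offset)
    """
    offset = slider - 0.5
    # Clamp so the offset can never push brightness outside the valid range.
    return min(1.0, max(0.0, auto_level + offset))
```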

The minimum brightness of the 🔦 brightness slider can now be configured via hwdb/udev, allowing one to go to lower values than the former hard-coded 40%. The configuration is maintained in gmobile. If you're using Phosh on a Google Pixel 3A XL, you can now enjoy haptic feedback when typing on the on-screen keyboard (like users on other devices), and creating notch configurations for new devices should now be simpler, as our tooling can take screenshots of the resulting UI element layout in Phosh for you.
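A configurable minimum for a slider such as the 🔦 one can be thought of as mapping the slider's full travel onto the range from that minimum up to 100%, rather than from a fixed 40%. The sketch below assumes a linear mapping and takes the minimum as a plain argument; how gmobile actually stores and applies the hwdb/udev value is not shown here.

```python
def slider_to_brightness(position: float, min_percent: float = 40.0) -> float:
    """Map a 0..1 slider position linearly onto [min_percent, 100] percent."""
    if not 0.0 <= min_percent <= 100.0:
        raise ValueError("min_percent must be between 0 and 100")
    position = min(1.0, max(0.0, position))  # clamp out-of-range input
    return min_percent + position * (100.0 - min_percent)
```

With the old default the slider's lowest position gives 40%; lowering min_percent to 10 lets the same position reach 10%.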

There's more; see the full details here.

GNOME Websites

Emmanuele Bassi says

After a long time, the new user help website is now available and up to date with the latest content. The new help website replaces the static snapshot of the old library-web project, but it is still a work in progress, and contributions are welcome. Just like in the past, the content is sourced from each application, as well as from the gnome-user-docs repository. If you want to improve the documentation of GNOME components and core applications, make sure to join the #docs:gnome.org room.

Shell Extensions

Pedro Sader Azevedo announces

Foresight is a GNOME Shell extension that automatically enters the activities view on empty workspaces, making it faster to open apps and start using your computer!