25 Feb 2026

Planet Debian

Sahil Dhiman: Publicly Available NKN Data Traffic Graphs

National Knowledge Network (NKN) is one of India's two main National Research and Education Networks (NRENs), the other being the less prevalent Education and Research Network (ERNET).

This post grew out of this Mastodon thread where I kept adding public graphs from various global research and educational entities that peer or connect with NKN. The goal was to get some idea of the traffic exchanged between them and NKN.

CERN

CERN is the birthplace of the World Wide Web (WWW) and home of the Large Hadron Collider (LHC).

- Graph https://monit-grafana-open.cern.ch/d/XoND3VQ4k/nkn?orgId=16&from=now-30d&to=now&timezone=browser&var-source=raw&var-bin=5m

- Connection seems to be 2x10 G through CERNLight.

India participates in the LHCONE project, which carries LHC data over these links for scientific research. This presentation by Vikas Singhal of the Variable Energy Cyclotron Centre (VECC), Kolkata, at the 8th Asian Tier Center Forum in 2024 gives some details.
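Reading these graphs against the link capacities takes a little arithmetic. As a minimal sketch, assuming the graphs are built the way many traffic graphs are, from interface octet counters sampled at a fixed interval (the CERN URL's `var-bin=5m` suggests 5-minute bins, but the underlying data source is not documented here), average throughput and utilization of a 2x10 G connection could be computed like this. The function names and sample numbers are hypothetical.

```python
# Hypothetical sketch: derive average throughput and link utilization from two
# interface octet-counter samples, as many traffic graphs do. The counter width,
# sample interval and link capacity below are assumptions, not NKN facts.

COUNTER_WRAP_64 = 2**64  # 64-bit interface counters roll over at this value


def average_bps(prev_octets: int, curr_octets: int, interval_s: float,
                counter_wrap: int = COUNTER_WRAP_64) -> float:
    """Average bits per second between two samples, tolerating one counter wrap."""
    delta = curr_octets - prev_octets
    if delta < 0:  # the counter rolled over between the two samples
        delta += counter_wrap
    return delta * 8 / interval_s


def utilization(bps: float, links: int, link_gbps: float) -> float:
    """Fraction of aggregate capacity in use, e.g. links=2, link_gbps=10 for 2x10 G."""
    return bps / (links * link_gbps * 1e9)


if __name__ == "__main__":
    # Hypothetical 5-minute bin in which 75 GB crossed a 2x10 G connection.
    bps = average_bps(1_000_000_000, 76_000_000_000, 300)
    print(f"{bps / 1e9:.1f} Gbit/s = {utilization(bps, 2, 10):.0%} of 2x10 G")
```

Traffic graphs usually plot each direction separately, so a figure like this is per direction, not a combined total.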

GÉANT

GÉANT is the pan-European collaboration of NRENs.

- Graph https://public-brian.geant.org/d/ZyVgYFgVk/nkn?orgId=5&from=now-30d&to=now

- NKN connects at the Amsterdam PoP.

- According to a 2016 GÉANT press release, NKN seems to have 2x10 G capacity towards GÉANT. Things might have changed since.

LEARN

Lanka Education and Research Network (LEARN) is Sri Lanka's NREN.

- Graph https://kirigal.learn.ac.lk/traffic/details.php?desc=slt-b1-new&data_id=1788&data_id_v6=&sub_bw=1000&name=National%20Knowledge%20Network%20of%20India%20(1G)&swap_inout=

- Connection seems to be 1 G.

NORDUnet

The NREN for the Nordic countries.

I couldn't find any public live data transfer graphs from the NKN side. If you know of any other graphs, do let me know.

25 Feb 2026 1:38pm GMT

24 Feb 2026

Louis-Philippe Véronneau: Montreal's Debian & Stuff - February 2026

Our Debian User Group met on February 22nd for our first meeting of the year!

Here's what we did:

pollo:

- reviewed and merged Lintian contributions

- released lintian version 2.130.0

- upstreamed a patch for python-wilderness, fixed a few things and released version 0.1.10-3

- updated python-clevercsv to version 0.8.4

- updated python-mediafile to version 0.14.0

lelutin:

- opened an RFH for co-maintenance of smokeping and added Marc Haber, who responded really quickly to the call

- with mjeanson's help, prepped and uploaded a new smokeping version to release pending work

- opened an NM request to become a DM

viashimo:

- fixed freshrss timer

- updated freshrss

- installed new navidrome container

- configured backups for new host (beelink mini s12)

tvaz:

- did NM work

- learned more about debusine and tested it

- uploaded antimony to debusine

- (co-)convinced lelutin to apply for DM (yay!)

lavamind:

- worked on autopkgtests for a new version of jruby

Pictures

This time around, we held our meeting at cégep du Vieux Montréal, the college where I currently work. Here is the view we had:

We also ordered some delicious pizzas from Pizzeria dei Compari, a nice pizzeria on Saint-Denis street that's been there forever.

Some of us ended up grabbing a drink after the event at l'Amère à boire, a pub right next to the venue, but I didn't take any pictures.

24 Feb 2026 9:45pm GMT

John Goerzen: Screen Power Saving in the Linux Console

I just built a Debian trixie setup that has no need for a GUI. In fact, I rarely use the text console either. However, because the machine is dual boot and also serves another purpose, it's connected to my main monitor and KVM switch.

The monitor has three inputs, and when whatever display it's set to goes into powersave mode, it will seek out another one that's active and automatically switch to it.

You can probably see where this is heading: it's really inconvenient if one of the inputs never goes into powersave mode. And, of course, it wastes energy.

I have concluded that the Linux text console has lost the ability to enter powersave mode after an inactivity timeout. It can still do screen blanking - setting every pixel to black - but that is a distinct and much less useful thing.

You can do a lot of searching online that will tell you what to do. Almost all of it is wrong these days. For instance, none of these work:

- Anything involving vbetool. This is really, really old advice.

- Anything involving xset, unless you're actually running a GUI, which is not the point of this post.

- Anything involving setterm or the kernel parameters video=DPMS or consoleblank.

- Anything involving writing to paths under /sys, such as ones ending in dpms.

Why is this?

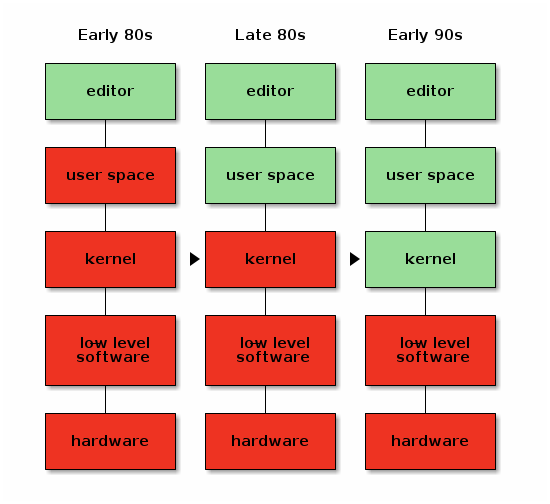

Well, we are on at least the third generation of Linux text console display subsystems. (Maybe more than 3, depending on how you count.) The three major ones were:

- The VGA text console

- fbdev

- DRI/KMS

As I mentioned recently in my post about running an accurate 80×25 DOS-style console on modern Linux, the VGA text console mode is pretty much gone these days. It relied on hardware rendering of the text fonts, and that capability simply isn't present on systems that aren't PCs - or even on PCs that are UEFI, which is most of them now.

fbdev, known under earlier names simply as the framebuffer console, has been in Linux since the late 1990s. It was the default for most distros until relatively recently. It supported DPMS powersave modes, and most of the instructions you will find online reference it.

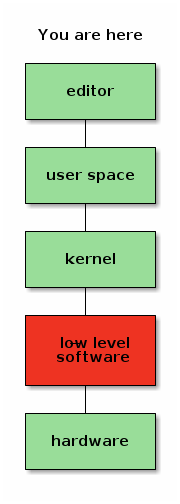

Nowadays, the DRI/KMS system is used for graphics. Unfortunately, it is targeted mainly at X11 and Wayland. It is also used for the text console, but things like DPMS-enabled timeouts were never implemented there.

You can find some manual workarounds - for instance, using ddcutil or similar for an external monitor, or adjusting the backlight files under /sys on a laptop. But these have a number of flaws - making unwanted brightness adjustments, and not automatically waking up on keypress among them.

My workaround

I finally gave up and ran apt-get install xdm. Then in /etc/X11/xdm/Xsetup, I added one line:

xset dpms 0 0 120

Now the system boots into an xdm login screen, and shuts down the screen after 2 minutes of inactivity. (The three numbers are the DPMS standby, suspend, and off timeouts in seconds; 0 disables that stage.) On the rare occasions when I want a text console on it, I can switch to one; it won't have a timeout, but I can live with that.

Thus, quite hopefully, concludes my series of way too much information about the Linux text console!

24 Feb 2026 2:22pm GMT

23 Feb 2026

Antoine Beaupré: PSA: North America changes time forward soon, Europe next

This is a copy of an email I used to send internally at work and have now made public. I'm not sure I'll make a habit of posting it here, especially not twice a year, unless people really like it. Right now, it's mostly here to keep my current writing spree going.

This is your bi-yearly reminder that time is changing soon!

What's happening?

For people not on tor-internal, you should know that I've been sending semi-regular announcements when daylight saving changes occur. Starting now, I'm making those announcements public so they can be shared with the wider community because, after all, this affects everyone (kind of).

For those of you lucky enough to have no idea what I'm talking about, you should know that some places in the world implement what is called daylight saving time (DST).

Normally, you shouldn't have to do anything: computers automatically change time following local rules, assuming they are correctly configured and, if those rules changed recently (because yes, this happens), that recent updates have been applied.

Appliances, of course, will likely not change time and will need to be adjusted unless they are so-called "smart" (also known as "part of a botnet").

If your clock is flashing "0:00" or "12:00", you have no action to take, congratulations on having the right time once or twice a day.

If you haven't changed those clocks in six months, congratulations, they will be accurate again!

In any case, you should still consider DST because it might affect some of your meeting schedules, particularly if you set up a new meeting schedule in the last 6 months and forgot to consider this change.

If your location does not have DST

Properly scheduled meetings affecting multiple time zones are set in UTC, which does not change. So if your location does not observe time changes, your (local!) meeting time will not change.

But be aware that some other folks attending your meeting might have the DST bug and their meeting times will change. They might miss entire meetings or arrive late as you frantically ping them over IRC, Matrix, Signal, SMS, Ricochet, Mattermost, SimpleX, Whatsapp, Discord, Slack, Wechat, Snapchat, Telegram, XMPP, Briar, Zulip, RocketChat, DeltaChat, talk(1), write(1), actual telegrams, Meshtastic, Meshcore, Reticulum, APRS, snail mail, and, finally, flying a remote presence drone to their house, asking what's going on.

(Sorry if I forgot your preferred messaging client here, I tried my best.)

Be kind; those poor folks might be more sleep deprived as DST steals one hour of sleep from them on the night that implements the change.

If you do observe DST

If you are affected by the DST bug, your local meeting times will change across the board. Normally, you can trust that your meetings are scheduled to take this change into account and that the new time should still be reasonable.

Trust, but verify; make sure the new times are adequate and there are no scheduling conflicts.

Do this now: take a look at your calendar in two weeks and in April. See if any meetings need to be rescheduled because of an impossible or conflicting time.

When does time change, how and where?

Notice how I mentioned "North America" in the subject? That's a lie. ("The doctor lies", as they say on the BBC.) Other places, including Europe, also change time, just not all at once (and not all of North America does).

We'll get into "where" soon, but first let's look at the "how". As you might already know, the trick is:

Spring forward, fall backwards.

This northern-centric (sorry!) proverb says that clocks will move forward by an hour this "spring", after moving backwards last "fall". This is why we lose an hour of work, sorry, sleep. It sucks, to put it bluntly. I want it to stop and will keep writing those advisories until it does.

To see where and when, we, unfortunately, still need to go into politics.

USA and Canada

First, we start with "North America" which, really, is just parts of the USA[1] and Canada[2]. As usual, on the second Sunday in March (the 8th) at 02:00 local (not UTC!), the clocks will move forward.

This means that properly set clocks will flip from 1:59 to 3:00, coldly depriving us of an hour of sleep that was perniciously granted 6 months ago and making calendar software stupidly hard to write.
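The "second Sunday in March" rule is simple enough to compute. Calendar software normally gets it from the tz database rather than hard-coding it, but as a minimal sketch with only Python's standard library (`nth_weekday` is a hypothetical helper, not part of any tool mentioned here), the 2026 date works out like this:

```python
import calendar
from datetime import date


def nth_weekday(year: int, month: int, weekday: int, n: int) -> date:
    """Return the n-th given weekday (calendar.MONDAY=0 ... calendar.SUNDAY=6) of a month.

    Raises ValueError (from date()) if the month has no n-th such weekday.
    """
    first_weekday, _days_in_month = calendar.monthrange(year, month)
    # Days from the 1st of the month to the first occurrence of `weekday`
    offset = (weekday - first_weekday) % 7
    return date(year, month, 1 + offset + 7 * (n - 1))


if __name__ == "__main__":
    start = nth_weekday(2026, 3, calendar.SUNDAY, 2)
    print(f"US/Canada DST starts {start:%A %d %B %Y} at 02:00 local time")
```

The same helper gives the end of DST in the USA and Canada, the first Sunday in November: `nth_weekday(2026, 11, calendar.SUNDAY, 1)`.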

Practically, set your wrist watch and alarm clocks[3] forward one hour before going to bed and go to bed early.

[1] except Arizona (except the Navajo Nation), US territories, and Hawaii

[2] except Yukon, most of Saskatchewan, and parts of British Columbia (northeast), one island in Nunavut (Southampton Island), one town in Ontario (Atikokan) and small parts of Quebec (Le Golfe-du-Saint-Laurent), a list which I keep recopying because I find it just so amazing how chaotic it is. When your clock has its own Wikipedia page, you know something is wrong.

[3] hopefully not managed by a botnet, otherwise kindly ask your botnet operator to apply proper software upgrades in a timely manner

Europe

Next we look at our dear Europe, which will change time on the last Sunday in March (the 29th) at 01:00 UTC (not local!). I think this means that, in Amsterdam, the clocks will flip from 1:59 to 3:00 AM local time that night.

(Every time I write this, I have doubts. I would welcome independent confirmation from night owls that observe that funky behavior experimentally.)
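Short of staying up, the arithmetic can at least be checked. As a minimal sketch, assuming Amsterdam's fixed offsets of UTC+1 (CET) in winter and UTC+2 (CEST) in summer instead of reading the tz database, and using hypothetical helpers `last_sunday` and `amsterdam_local`, the two minutes around the switch come out as the 1:59 to 3:00 flip described above:

```python
import calendar
from datetime import datetime, timedelta, timezone

CET = timezone(timedelta(hours=1), "CET")    # assumed Amsterdam winter offset
CEST = timezone(timedelta(hours=2), "CEST")  # assumed Amsterdam summer offset


def last_sunday(year: int, month: int) -> int:
    """Day of the month of the last Sunday."""
    last_day = calendar.monthrange(year, month)[1]
    last_weekday = calendar.weekday(year, month, last_day)
    # Step back from the last day to the nearest Sunday (0 steps if it is one)
    return last_day - (last_weekday - calendar.SUNDAY) % 7


def amsterdam_local(utc_dt: datetime, switch_utc: datetime) -> datetime:
    """Wall-clock time in Amsterdam, using fixed CET/CEST offsets around the switch."""
    tz = CEST if utc_dt >= switch_utc else CET
    return utc_dt.astimezone(tz)


if __name__ == "__main__":
    switch = datetime(2026, 3, last_sunday(2026, 3), 1, 0, tzinfo=timezone.utc)
    for minutes in (-1, 0):
        t = switch + timedelta(minutes=minutes)
        print(t.strftime("%H:%M UTC"), "->", amsterdam_local(t, switch).strftime("%H:%M %Z"))
```

Because the European switch happens at the same UTC instant everywhere, each country's local flip depends on its offset; this sketch only covers the CET/CEST case.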

Just like your poor fellows out west, fix your old-school clocks before going to bed, and go to sleep early, it's good for you.

Rest of the world with DST

Renewed and recurring apologies again to the people of Cuba, Mexico, Moldova, Israel, Lebanon, Palestine, Egypt, Chile (except Magallanes Region), parts of Australia, and New Zealand which all have their own individual DST rules, omitted here for brevity.

In general, changes also happen in March, but at different times or on different days, except in the southern hemisphere, where they happen in April.

Rest of the world without DST

All of you other folks without DST, rejoice! Thank you for reminding us how to manage calendars and clocks normally. Sometimes, doing nothing is precisely the right thing to do. You're an inspiration for us all.

Changes since last time

There were, again, no changes to daylight saving time since last year that I'm aware of. It seems the US Congress is debating switching to a "half-daylight" time zone, which is a half-baked idea that I should have expected from current USA politics.

The plan is to, say, switch from "Eastern is UTC-4 in the summer" to "Eastern is UTC-4.5". The bill also proposes to do this 90 days after enactment, which is dangerously optimistic about our capacity to deploy any significant change in human society.

In general, I rely on the Wikipedia time nerds for this and on Paul Eggert, who seems to be single-handedly keeping everything in order for all of us, on the tz-announce mailing list.

This time, I've also looked at the tz mailing list which is where I learned about the congress bill.

If your country has changed time and no one above noticed, now would be an extremely late time to do something about this, typically writing to the above list. (Incredibly, I need to write to the list because of this post.)

One thing that did change since last year is that I've implemented what I hope to be a robust calendar for this, which was surprisingly tricky.

If you have access to our Nextcloud, it should be visible under the heading "Daylight saving times". If you don't, you can access it using this direct link.

The procedures around how this calendar was created, how this email was written, and curses found along the way, are also documented in this wiki page, if someone ever needs to pick up the Time Lord duty.

23 Feb 2026 7:31pm GMT

Wouter Verhelst: On Free Software, Free Hardware, and the firmware in between

When the Free Software movement started in the 1980s, most of the world had just made a transition from free university-written software to non-free, proprietary, company-written software. Because of that, the initial ethical standpoint of the Free Software Foundation was that it's fine to run a non-free operating system, as long as all the software you run on that operating system is free.

Initially this was just the editor.

But as time went on, and the FSF managed to write more and more parts of the software stack, their ethical stance moved with the times. This was a very reasonable, pragmatic stance: if you don't accept using a non-free operating system and there isn't a free operating system yet, then obviously you can't write that free operating system, and the world won't move towards a point where free operating systems exist.

In the early 1990s, when Linus initiated the Linux kernel, the situation reached the point where the original dream of a fully free software stack was complete.

Or so it would appear.

Because, in fact, this was not the case. Computers are physical objects, composed of bits of technology that we refer to as "hardware", but in order for these bits of technology to communicate with other bits of technology in the same computer system, they need to interface with each other, usually using some form of bus protocol. These bus protocols can get very complicated, which means that a bit of software is required in order to make all the bits communicate with each other properly. Generally, this software is referred to as "firmware", but don't let that name deceive you; it's really just a bit of low-level software that is very specific to one piece of hardware. Sometimes it's written in an imperative high-level language; sometimes it's just a set of very simple initialization vectors. But whatever the case might be, it's always a bit of software.

And although we largely had a free system, this bit of low-level software was not yet free.

Initially, storage was expensive, so computers couldn't store as much data as today, and so most of this software was stored in ROM chips on the exact bits of hardware they were meant for. Due to this fact, it was easy to deceive yourself that the firmware wasn't there, because you never directly interacted with it. We knew it was there; in fact, for some larger pieces of this type of software it was possible, even in those days, to install updates. But that was rarely if ever done at the time, and it was easily forgotten.

And so, when the free software movement slapped itself on the back and declared victory when a fully free operating system was available, and decided that the work of creating a free software environment was finished, that only keeping it recent was further required, and that we must reject any further non-free encroachments on our fully free software stack, the free software movement was deceiving itself.

Because a computing environment can never be fully free if the low-level pieces of software that form the foundations of that computing environment are not free. It would have been one thing if the Free Software Foundation declared it ethical to use non-free low-level software on a computing environment if free alternatives were not available. But unfortunately, they did not.

In fact, something very strange happened.

In order for some free software hacker to be able to write a free replacement for some piece of non-free software, they obviously need to be able to actually install that theoretical free replacement. This isn't just a random thought; in fact it has happened.

Now, it's possible to install software on a piece of rewritable storage such as flash memory inside the hardware and boot the hardware from that, but if there is a bug in your software -- not at all unlikely if you're trying to write software for a piece of hardware that you don't have documentation for -- then it's not unfathomable that the replacement piece of software will not work, thereby reducing your expensive piece of technology to something about as useful as a paperweight.

Here's the good part.

In the late 1990s and early 2000s, the bits of technology that made up computers became so complicated, and the storage and memory available to computers so much larger and cheaper, that it became economically more feasible to create a tiny piece of software stored in a ROM chip on the hardware, with just enough knowledge of the bus protocol to download the rest from the main computer.

This is awesome for free software. If you now write a replacement for the non-free software that comes with the hardware, and you make a mistake, no wobbles! You just remove power from the system, let the DRAM chips on the hardware component fully drain, return power, and try again. You might still end up with a brick of useless silicon if some of the things you sent to your technology make it do things that it was not designed to do and therefore you burn through some critical bits of metal or plastic, but the chance of this happening is significantly lower than the chance of you writing something that impedes the boot process of the piece of hardware and you are unable to fix it because the flash is overwritten. There is anecdotal evidence that there are free software hackers out there who do so. So, yay, right? You'd think the Free Software Foundation would jump at the possibility of getting more free software? After all, a large part of why we even have a Free Software Foundation in the first place was a piece of hardware that was misbehaving, so you would think that the foundation's founders would understand the need for hardware to be controlled by software that is free.

The strange thing, what has always been strange to me, is that this is not what happened.

The Free Software Foundation instead decided that non-free software on ROM or flash chips is fine, but non-free software -- the very same non-free software, mind -- that touches the general storage device that you as a user use, is not. Never mind the fact that the non-free software is always there, whether it sits on your storage device or not.

Misguidedness aside, if some people decide they would rather not update the non-free software in their hardware and use the hardware with the old and potentially buggy version of the non-free software that it came with, then of course that's their business.

Unfortunately, it didn't quite stop there. If it had, I wouldn't have written this blog post.

You see, even though the Free Software Foundation was about Software, they decided that they needed to create a hardware certification program. And this hardware certification program ended up embedding the strange concept that if something is stored in ROM it's fine, but if something is stored on a hard drive it's not. Same hardware, same software, but different storage. By that logic, Windows respects your freedom as long as the software is written to ROM. Because this way, the Free Software Foundation could come to a standstill and pretend they were still living in the 90s.

An unfortunate result of the "Respects Your Freedom" (RYF) program is that companies who otherwise would have been inclined to create hardware that was truly free, top to bottom, are now incentivised by it to create hardware in which the non-free low-level software can't be replaced.

Meanwhile, the rest of the world did not pretend to still be living in the nineties, and free hardware communities now exist. Because of how the FSF has marketed themselves out of the world, these communities call themselves "Open Hardware" communities, rather than "Free Hardware" ones, but the principle is the same: the designs are there, and if you have the skill you can modify them, but you don't have to.

In the meantime, the open hardware community has evolved to a point where even CPUs are designed in the open (RISC-V being the best-known example), and you can design your own version of one.

But not all hardware can be implemented as RISC-V, and so if you want a full system built around RISC-V you may still need components that were originally built for other architectures but that would work with RISC-V, such as a network card or a GPU. And because the FSF has done everything in their power to disincentivise people who would otherwise be well situated to build free versions of the low-level software required to support your hardware, you may now be in the weird position where we seem to have somehow skipped a step.

My own suspicion is that the universe is not only queerer than we suppose, but queerer than we can suppose.

-- J.B.S. Haldane

(comments for this post will not pass moderation. Use your own blog!)

23 Feb 2026 4:51pm GMT

22 Feb 2026

Benjamin Mako Hill: What makes online groups vulnerable to governance capture?

Note: I have not published blog posts about my academic papers over the past few years. To ensure that my blog contains a more comprehensive record of my published papers and to surface these for folks who missed them, I will be periodically (re)publishing blog posts about some "older" published projects. This post is closely based on a previously published post by Zarine Kharazian on the Community Data Science Blog.

For nearly a decade, the Croatian language version of Wikipedia was run by a cabal of far-right nationalists who edited articles in ways that promoted fringe political ideas and involved cases of historical revisionism related to the Ustaše regime, a fascist movement that ruled the Nazi puppet state called the Independent State of Croatia during World War II. This cabal seized complete control of the encyclopedia's governance, banned and blocked those who disagreed with them, and operated a network of fake accounts to create the appearance of grassroots support for their policies.

Thankfully, Croatian Wikipedia appears to be an outlier. Though both the Croatian and Serbian language editions have been documented to contain nationalist bias and historical revisionism, Croatian Wikipedia seems unique among Wikipedia editions in the extent to which its governance institutions were captured by a small group of users.

The situation in Croatian Wikipedia was well documented and is now largely fixed, but we still know very little about why it was taken over, while other language editions seem to have rebuffed similar capture attempts. In a paper published in the Proceedings of the ACM on Human-Computer Interaction (CSCW), Zarine Kharazian, Kate Starbird, and I present an interview-based study that provides an explanation for why Croatian was captured while several other editions facing similar contexts and threats fared better.

Based on insights from interviews with 15 participants from both the Croatian and Serbian Wikipedia projects and from the broader Wikimedia movement, we arrived at three propositions that, together, help explain why Croatian Wikipedia succumbed to capture while Serbian Wikipedia did not:

- Perceived Value as a Target. Is the project worth expending the effort to capture?

- Bureaucratic Openness. How easy is it for contributors outside the core founding team to ascend to local governance positions?

- Institutional Formalization. To what degree does the project prefer personalistic, informal forms of organization over formal ones?

The conceptual model from our paper, visualizing possible institutional configurations among Wikipedia projects that affect the risk of governance capture.

We found that both Croatian and Serbian Wikipedias were attractive targets for far-right nationalist capture due to their sizable readership and resonance with national identity. However, we also found that the two projects diverged early in their trajectories in how open they remained to new contributors ascending to local governance positions and in the degree to which they privileged informal relationships over formal rules and processes as the project's organizing principles. Ultimately, Croatian's relative lack of bureaucratic openness and rules constraining administrator behavior created a window of opportunity for a motivated contingent of editors to seize control of the governance mechanisms of the project.

Though our empirical setting was Wikipedia, our theoretical model may offer insight into the challenges faced by self-governed online communities more broadly. As interest in decentralized alternatives to Facebook and X (formerly Twitter) grows, communities on these sites will likely face similar threats from motivated actors. Understanding the vulnerabilities inherent in these self-governing systems is crucial to building resilient defenses against threats like disinformation.

For more details on our findings, take a look at the published version of our paper.

Citation for the full paper: Kharazian, Zarine, Kate Starbird, and Benjamin Mako Hill. 2024. "Governance Capture in a Self-Governing Community: A Qualitative Comparison of the Croatian, Serbian, Bosnian, and Serbo-Croatian Wikipedias." Proceedings of the ACM on Human-Computer Interaction 8 (CSCW1): 61:1-61:26. https://doi.org/10.1145/3637338.

This blog post and the paper it describes are collaborative work by Zarine Kharazian, Benjamin Mako Hill, and Kate Starbird.

22 Feb 2026 9:12pm GMT

Junichi Uekawa: AI generated code and its quality.

It's hard to get larger tasks done with AI, and for smaller tasks I am faster myself. I suspect this will change soon, but as of today things are challenging. Large chunks of AI-generated code are hard to review and generally not of great quality. There are possibly two layers that cause quality issues. One is that the instructions aren't clear for the AI, and the misunderstanding shows; I could sometimes reverse engineer the misunderstanding, and that could be resolved in the future. The other is that what the AI has learnt from is probably a corpus that is not fit for the purpose. I suspect that can be improved in the future with better methodology and improvements in how the corpus is obtained, how the learnings are redirected, or how they are distilled. I'm noting down what I think today, as the world is changing rapidly, and I am bound to see a very different scene soon.

22 Feb 2026 5:45am GMT

Otto Kekäläinen: Do AI models still keep getting better, or have they plateaued?

The AI hype is based on the assumption that the frontier AI labs are producing better and better foundational models at an accelerating pace. Is that really true, or are people just in a sort of mass psychosis because AI models have become so good at mimicking human behavior that we unconsciously attribute increasing intelligence to them? I decided to conduct a mini-benchmark of my own to find out if the latest and greatest AI models are actually really good or not.

The problem with benchmarks

Every time any team releases a new LLM, they boast about how well it performs on various industry benchmarks such as Humanity's Last Exam, SWE-Bench and Ai2 ARC or ARC-AGI. An overall leaderboard can be viewed at LLM-stats. This incentivizes teams to optimize for specific benchmarks, which might make their models excel on specific tasks while general abilities degrade. Also, the older a benchmark dataset is, the more online material there is discussing the questions and best answers, which in turn increases the chances of newer models trained on more recent web content scoring better.

Thus I prefer looking at real-time leaderboards such as the LM Arena leaderboard (or OpenCompass for Chinese models that might be missing from LM Arena). However, even though the LM Arena Elo score is rated by humans in real-time, the benchmark can still be gamed. For example, Meta reportedly used a special chat-optimized model instead of the actual Llama 4 model when getting scored on the LM Arena.
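For readers unfamiliar with Elo: each pairwise human vote nudges the two models' ratings toward the observed outcome. Below is a minimal sketch of the classic Elo update; the K-factor of 32 and the function names are arbitrary illustrative choices, and LM Arena publishes its own methodology, which may differ (for example by fitting ratings over all votes at once rather than updating them one vote at a time).

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score of A against B under the classic Elo model (between 0 and 1)."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def elo_update(rating_a: float, rating_b: float, score_a: float,
               k: float = 32.0) -> tuple[float, float]:
    """Update both ratings after one vote.

    score_a is 1.0 if the voter preferred A, 0.0 if they preferred B, 0.5 for a tie.
    """
    delta = k * (score_a - expected_score(rating_a, rating_b))
    return rating_a + delta, rating_b - delta


if __name__ == "__main__":
    a, b = 1000.0, 1000.0
    for vote in (1.0, 1.0, 0.5):  # hypothetical votes: A wins, A wins, tie
        a, b = elo_update(a, b, vote)
    print(f"A={a:.1f} B={b:.1f}")
```

Because every vote moves ratings directly, a model tuned to win head-to-head chat comparisons can climb the board without being better at other tasks, which is how such a leaderboard can be gamed.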

Therefore I trust my own first-hand experience more than the benchmarks for gaining intuition. Intuition however is not a compelling argument in discussions on whether or not new flagship AI models have plateaued. Thus, I decided to devise my own mini-benchmark so that no model could have possibly seen it in its training data or be specifically optimized for it in any way.

My mini-benchmark

I crafted 6 questions based on my own experience using various LLMs for several years and having developed some intuition about what kinds of questions LLMs typically struggle with.

I conducted the benchmark using the OpenRouter.ai chat playroom with the following state-of-the-art models:

- Claude Opus 4.6 (Anthropic)

- GPT-5.2 (OpenAI)

- Grok 4.1 (xAI)

- Gemini 3.1 Pro Preview (Google)

- GLM 5 (Z.ai)

- MiniMax M2.5 (MiniMax)

- Qwen3.5 Plus 2026-02-15 (Alibaba)

- Kimi K2.5 (Moonshot.ai)

OpenRouter.ai is great as it makes it very easy to get responses from multiple models in parallel to a single question. It also allows turning off web search to force the models to answer purely based on their embedded knowledge.

What all the test questions have in common is that they are fairly straightforward and have a clear answer, yet the answer isn't common knowledge or statistically the most obvious one, and instead requires a bit of reasoning to get right.

Some of these questions are also based on cases where I witnessed a flagship model fail miserably to answer them.

1. Which cities have hosted the Olympics more than just once?

This question requires accounting for both summer and winter Olympics, and for Olympics hosted across multiple cities.

The variance in responses comes from whether the model understands that Beijing should be counted, as it has hosted both the summer and winter Olympics. Interestingly, GPT was the only model not to mention Beijing at all. Some variance also comes from how models account for co-hosted Olympics. For example, Cortina should be counted as having hosted the Olympics twice, in 1956 and 2026, but only Claude, Gemini and Kimi pointed this out. Stockholm's 1956 hosting of the equestrian games during the Melbourne Olympics is a special case, which GPT, Gemini and Kimi pointed out in a side note. Some models seem to have old training material; for example, Grok assumes the current year is 2024. All models that accounted for awarded future Olympics (e.g. Los Angeles 2028) clearly marked them as upcoming.

Overall, I would judge that only GPT and MiniMax gave incomplete answers, while all the other models replied as well as the best humans reasonably could have.

2. If EUR/USD continues to slide to 1.5 by mid-2026, what is the likely effect on BMW's stock price by end of 2026?

This question requires mapping the currency exchange rate to its historic value, dodging the misleading word "slide", and reasoning about where the company's revenue comes from and how a weaker US dollar affects it in multiple ways. I've frequently witnessed flagship models get wrong how interest rates and exchange rates work. Apparently the binary choice between "up" and "down" is somehow challenging for the internal statistical model of LLMs on a topic where there is a lot of training material discussing both outcomes as likely, and choosing between them requires reasoning specifically about the scenario at hand rather than relying on general knowledge of the situation.

However, this time all the models correctly concluded that a weak dollar would have a negative overall effect on the BMW stock price. Gemini, GLM, Qwen and Kimi also mentioned the potential hedging effect of BMW's X-series production in South Carolina for worldwide export.
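The core translation effect can be written out. EUR/USD is the number of US dollars one euro buys, so a move to 1.5 means a stronger euro and a weaker dollar, and dollar revenue converts into fewer euros. Using a purely hypothetical 10 bn USD of US revenue and a hypothetical starting rate of 1.10 (neither figure comes from the post):

```latex
R_{\text{EUR}} = \frac{R_{\text{USD}}}{\text{EUR/USD}}, \qquad
\frac{10\ \text{bn USD}}{1.10} \approx 9.09\ \text{bn EUR}
\;\longrightarrow\;
\frac{10\ \text{bn USD}}{1.50} \approx 6.67\ \text{bn EUR},
\qquad 1 - \frac{1.10}{1.50} \approx 26.7\%
```

That is roughly a quarter less revenue in euro terms before any hedging, local production or pricing response, consistent with the negative overall effect the models concluded.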

3. What is the Unicode code point for the traffic cone emoji?

This was the first question where the flagship models clearly still struggle in 2026. The trap here is that there is no traffic cone emoji, so an advanced model should simply say so rather than give any Unicode code point at all. Most LLMs, however, have an urge to give some answer, leading to hallucinations. Also, as the answer has a graphical element to it, the LLM might not understand how the emoji "looks" in ways that would be obvious to a human, and thus many models claim that the construction sign emoji is a traffic cone, which it is not.

By far the worst response was from GPT, which simply hallucinated and stopped there.

Gemini and Grok were among the three models that did not fall into this trap, but Claude's response was exemplary.

4. Which languages are the 10 most commonly spoken in the world? For each language, count from 1 to 5.

This seemingly simple question can become quite complex when you account for the fact that many languages have their own writing system, both for words and for numerals (e.g. Arabic واحد/one vs ١/1), that numbers might vary depending on what is being counted (e.g. grammatical gender in French: un/une), and that plain numbers and counted occurrences may have their own words (e.g. Russian один/odin vs раз/raz). I've also frequently witnessed older models mix up which parts of the reply should be in which language.

For a human this question is trivial, assuming they know the languages. For LLMs it is surprisingly challenging. Grok and MiniMax only gave a list of the most popular languages and stopped there, without showing any words in those languages. Qwen got a bit further by trying to list numbers in each language, but the lists contained only the numerals 1 to 5.

I have seen Grok do something similar before, which in fact inspired me to include this question in my mini-benchmark.

GPT got a bit further, but for Hindi, Arabic and Bengali it listed the numerals in the local script, not the number words. Gemini, GLM and Kimi gave a complete and correct answer as a list, while the absolute best answer and presentation came from Claude, which gave the table below:

Claude Opus 4.6

10 Most Commonly Spoken Languages (by Total Speakers)

| # | Language | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|---|
| 1 | English | One | Two | Three | Four | Five |
| 2 | Mandarin Chinese | 一 (yī) | 二 (èr) | 三 (sān) | 四 (sì) | 五 (wǔ) |
| 3 | Hindi | एक (ek) | दो (do) | तीन (tīn) | चार (chār) | पाँच (pā̃ch) |
| 4 | Spanish | Uno | Dos | Tres | Cuatro | Cinco |
| 5 | French | Un | Deux | Trois | Quatre | Cinq |
| 6 | Arabic | واحد (wāḥid) | اثنان (ithnān) | ثلاثة (thalātha) | أربعة (arbaʿa) | خمسة (khamsa) |
| 7 | Bengali | এক (ek) | দুই (dui) | তিন (tin) | চার (chār) | পাঁচ (pā̃ch) |
| 8 | Portuguese | Um | Dois | Três | Quatro | Cinco |
| 9 | Russian | Один (odin) | Два (dva) | Три (tri) | Четыре (chetyre) | Пять (pyat') |
| 10 | Japanese | 一 (ichi) | 二 (ni) | 三 (san) | 四 (shi) | 五 (go) |

Note: Rankings are based on estimated total speakers (native + non-native). Rankings can vary depending on the source and methodology used.

5. Count the number of drone launch pads in the picture.

Together with the question, I uploaded this picture:

A human can easily count that there are 10 rows and 30+ columns in the grid, but because the picture resolution isn't good enough, the exact number of columns can't be counted, and the answer should be that there are at least 300 launch pads in the picture.

GPT and Grok both guessed that the count is zero. Rather than hallucinating some number, they said zero, but it would have been better not to give any number at all and simply state that they were unable to perform the task. Gemini answered "101", which is quite odd, but reading its reasoning section, it seems to have tried counting items in the image without reasoning much about what it was actually counting, or noticing that there is clearly a grid that makes counting much easier. Both Qwen and Kimi stated that they could see four parallel structures, but were unable to count the drone launch pads.

By far the best answer was given by Claude, which counted 10-12 rows and 30-40+ columns, and concluded that there must be 300-500 drone launch pads. Very close to best human level - impressive!

This question applied only to multimodal models that can see images, so GLM and MiniMax could not give any response.

6. Explain why I am getting the error below, and what is the best way to fix it?

Together with the question above, I gave this code block:


$ SH_SCRIPTS="$(mktemp; grep -Irnw debian/ -e '^#!.*/sh' | sort -u | cut -d ':' -f 1 || true)"
$ shellcheck -x --enable=all --shell=sh "$SH_SCRIPTS"
/tmp/tmp.xQOpI5Nljx
debian/tests/integration-tests: /tmp/tmp.xQOpI5Nljx
debian/tests/integration-tests: openBinaryFile: does not exist (No such file or directory)

Older models would easily be misled by the last error message into thinking that a file went missing, and would focus on suggesting changes to the complex-looking first line. In reality, the error is simply caused by the quotes around $SH_SCRIPTS, which result in the entire multi-line string being passed as a single argument to shellcheck. So instead of receiving two separate file paths, shellcheck tries to open one file literally named /tmp/tmp.xQOpI5Nljx\ndebian/tests/integration-tests.

Incorrect argument expansion is fairly easy for an experienced human programmer to notice, but tricky for an LLM. Indeed, Grok, MiniMax and Qwen fell for this trap and focused on the mktemp, assuming it somehow fails to create a file. Interestingly, GLM failed to produce an answer at all, as its reasoning seemed to loop, dwelling on the missing file without understanding why it would be missing when there is nothing wrong with how mktemp is executed.

Claude, Gemini and Kimi immediately spotted the real root cause, the quoted variable, and suggested correct fixes: either removing the quotes, or using Bash arrays or xargs in a way that also makes the whole command correctly handle filenames containing spaces.
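To make the root cause concrete, here is a small POSIX sh sketch. It simulates the two-line value of SH_SCRIPTS (the paths are placeholders) and counts how many arguments a function receives with and without the quotes. The lint_sh_scripts helper is a hypothetical alternative, not taken from any model's answer: grep prints only the matching file names, NUL-terminated, and xargs passes them on, which also copes with spaces in filenames. It assumes GNU grep and xargs (for --null and -r); -r skips the shellcheck run entirely when nothing matches, which may be what the mktemp placeholder in the original was guarding against.

```shell
#!/bin/sh
# Sketch: quoted vs unquoted expansion of a newline-separated list of paths.

# Print the number of arguments received.
count_args() {
    echo "$#"
}

# Simulate the captured output: two paths separated by a newline
# (placeholder paths mirroring the error message above).
SH_SCRIPTS="$(printf '%s\n' /tmp/tmp.example debian/tests/integration-tests)"

count_args "$SH_SCRIPTS"  # quoted: one argument containing a newline, prints 1
count_args $SH_SCRIPTS    # unquoted: split on IFS whitespace (and globbed), prints 2

# Hypothetical helper: lint every file with a matching shebang line.
# grep -l prints only file names, --null ends each name with a NUL byte,
# xargs -0 splits on NUL only, and -r skips the run if nothing matched.
lint_sh_scripts() {
    grep -Irlw --null -e '^#!.*/sh' "${1:-debian/}" |
        xargs -0 -r shellcheck -x --enable=all --shell=sh
}
```

Removing the quotes alone also works for these paths, but it relies on word splitting and globbing, so a filename containing a space would be split again.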

Conclusion

| Model | Sports | Economics | Emoji | Languages | Visual | Shell | Score |
|---|---|---|---|---|---|---|---|
| Claude Opus 4.6 | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | 6/6 |
| GPT-5.2 | ✗ | ✓ | ✗ | ~ | ✗ | ✓ | 2.5/6 |
| Grok 4.1 | ✓ | ✓ | ✓ | ✗ | ✗ | ✗ | 3/6 |
| Gemini 3.1 Pro | ✓ | ✓ | ✓ | ✓ | ✗ | ✓ | 5/6 |
| GLM 5 | ✓ | ✓ | ? | ✓ | N/A | ✗ | 3/5 |
| MiniMax M2.5 | ✗ | ✓ | ✗ | ✗ | N/A | ✗ | 1/5 |
| Qwen3.5 Plus | ✓ | ✓ | ✗ | ~ | ✗ | ✗ | 2.5/6 |
| Kimi K2.5 | ✓ | ✓ | ✗ | ✓ | ✗ | ✓ | 4/6 |

Admittedly, my mini-benchmark had only 6 questions, and I ran it only once, so it was obviously not scientifically rigorous. However, it was systematic enough to beat a mere gut feeling.

The main finding for me personally is that Claude Opus 4.6, the flagship model by Anthropic, seems to give great answers consistently. The answers are not only correct but also well scoped, giving enough information to cover everything that seems relevant without blurting out unnecessary filler.

I used Claude extensively in 2023-2024 when it was the main model available at my day job, but for the past year I had been using other models that I felt were better at the time. Now Claude seems to be the best of the best again, with Gemini and Kimi as close runners-up. Comparing their pricing on OpenRouter.ai, Kimi K2.5 at $0.6 per million tokens is almost 90% cheaper than Claude Opus 4.6 at $5.0 per million tokens, which suggests that Kimi K2.5 offers the best price-per-performance ratio. Claude might be cheaper with a monthly subscription directly from Anthropic, potentially narrowing the price gap.
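A quick worked check of the pricing comparison, using the per-million-token prices quoted above and, as a crude and admittedly unscientific proxy for performance, the mini-benchmark scores from the table (4/6 for Kimi K2.5, 6/6 for Claude Opus 4.6). This assumes the two quoted prices are directly comparable; OpenRouter lists input and output token prices separately, so the ratio may differ for output-heavy use.

```latex
1 - \frac{0.6}{5.0} = 0.88 \;\;(88\%\ \text{cheaper}), \qquad
\text{Kimi K2.5: } \frac{4/6}{0.6} \approx 1.11, \qquad
\text{Claude Opus 4.6: } \frac{6/6}{5.0} = 0.20
```

By this rough measure (score per dollar per million tokens), Kimi K2.5 delivers about 5.6 times more benchmark score per dollar.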

Overall, I do feel that Anthropic, Google and Moonshot.ai have been pushing the envelope with their latest models, to the point that one can't really claim that AI models have plateaued. In fact, one could claim that at least Claude has now climbed over the hill of "AI slop" and consistently produces valuable results. If and when AI usage expands from here, we might actually not drown in AI slop, as the chances of accidentally crappy results decrease. This makes me positive about the future.

I am also really happy to see that there wasn't just one model crushing everybody else, but that there are at least three models doing very well. As an open source enthusiast I am particularly glad to see that Moonshot.ai's Kimi K2.5 is published with an open license. Given the hardware, anyone can run it on their own. OpenRouter.ai currently lists 9 independent providers alongside Moonshot.ai itself, showcasing the potential of open-weight models in practice.

If the pattern holds and flagship models continue improving at this pace, we might look back at 2026 as the year AI stopped feeling like a call center associate and started to resemble a scientific researcher. As new models become available, we need to keep testing, keep questioning, and keep our expectations grounded in actual performance rather than press releases.

Thanks to OpenRouter.ai for providing a great service that makes testing various models incredibly easy!

22 Feb 2026 12:00am GMT

21 Feb 2026


Jonathan Dowland: Lanzarote

I want to get back into the habit of blogging, but I've struggled. I've had several ideas for topics to try and write about, but I've not managed to put aside the time to do it. I thought I'd try and bash out a one-take, stream-of-consciousness-style post now, to get back into the swing.

I'm writing from the lounge of my hotel room in Lanzarote, where my family have gone for the school break. The weather at home has been pretty awful this year, and this week is traditionally quite miserable at the best of times. Here, it's been dry with highs of around 25℃.

It's been an unusual holiday in one respect: one of my kids is struggling with Autistic Burnout. We were really unsure whether taking her was a good idea, and towards the beginning of the holiday we certainly felt we might have made a mistake. Writing now, at the end, I'm not so sure. But we're very unlikely to have anything resembling a traditional summer holiday for the foreseeable future.

Managing Autistic Burnout, and the ways the UK healthcare and education systems manage it (or fail to), has been a huge part of my recent life. Perhaps I should write more about that. This coming week the government are likely to publish plans for reforming Special Needs support in Education. Like many other parents, we wait in hope and fear to see what they plan.

In anticipation of spending a lot of time in the hotel room with my preoccupied daughter, I (unusually) packed a laptop and set myself a nerd-task: writing a Pandoc parser ("reader") for the MoinMoin Wiki markup language. There's some unfinished prior art from around 2011 by Simon Michael (of hledger) to work from.

The motivation was our plan to migrate the Debian Wiki away from MoinMoin. We've since decided to approach that differently, but I might finish the Reader anyway: it's been an interesting project (and a nice excuse to write Haskell), and it will be useful for others.

Unusually (for me), I've not been reading fiction on this trip: I took with me Human Compatible by Prof Stuart Russell, which discusses how to solve the problem of controlling a future Artificial Intelligence. I've largely avoided the LLM hype cycle we're suffering through at the moment, and I have several big concerns about it (moral, legal, etc.), so I felt it was time to try and make my concerns better formed and to test them. This book has been a big help in doing so, although it doesn't touch on the issue of copyright, which I am particularly interested in at the moment.

21 Feb 2026 7:00pm GMT

Dirk Eddelbuettel: qlcal 0.1.0 on CRAN: Easier Calendar Switching

The eighteenth release of the qlcal package arrived at CRAN today. There was no calendar update in QuantLib 1.41, so it has been relatively quiet since the last release last summer, but we have now added a nice new feature (more below), leading to a new minor release version.

qlcal delivers the calendaring parts of QuantLib. It is provided (for the R package) as a set of included files, so the package is self-contained and does not depend on an external QuantLib library (which can be demanding to build). qlcal covers over sixty country / market calendars and can compute holiday lists, its complement (i.e. business day lists) and much more. Examples are in the README at the repository, the package page, and of course at the CRAN package page.

This release makes it (much) easier to work with multiple calendars. The previous setup remains: the package keeps one 'global' (and hidden) calendar object which can be set, queried, altered, etc. But now we have added the ability to hold instantiated calendar objects in R. These are external pointer objects, and we can pass them to functions requiring a calendar. If no such optional argument is given, we fall back to the global default as before. Similarly, for functions operating on one or more dates, we now simply default to the current date if none is given. That means we can now say

> sapply(c("UnitedStates/NYSE", "Canada/TSX", "Australia/ASX"),
         \(x) qlcal::isBusinessDay(xp=qlcal::getCalendar(x)))
UnitedStates/NYSE        Canada/TSX     Australia/ASX
             TRUE              TRUE              TRUE

to query today (February 18) in several markets, or compare with two days earlier, when Canada and the US both observed a holiday:

> sapply(c("UnitedStates/NYSE", "Canada/TSX", "Australia/ASX"),
         \(x) qlcal::isBusinessDay(as.Date("2026-02-16"), xp=qlcal::getCalendar(x)))
UnitedStates/NYSE        Canada/TSX     Australia/ASX
            FALSE             FALSE              TRUE

The full details from NEWS.Rd follow.

Changes in version 0.1.0 (2026-02-18)

- Invalid calendars return id 'TARGET' now

- Calendar object can be created on the fly and passed to the date-calculating functions; if missing global one used

- For several functions a missing date object now implies computation on the current date, e.g. isBusinessDay()

Courtesy of my CRANberries, there is a diffstat report for this release. See the project page and package documentation for more details, and more examples.

This post by Dirk Eddelbuettel originated on his Thinking inside the box blog. If you like this or other open-source work I do, you can sponsor me at GitHub.

Edited 2026-02-21 to correct a minor earlier error: it referenced a QuantLib 1.42 release which does not (yet) exist.

21 Feb 2026 4:49pm GMT

Vasudev Kamath: Learning Notes: Debsecan MCP Server

Since Generative AI is currently the most popular topic, I wanted to get my hands dirty and learn something new. I was learning about the Model Context Protocol at the time and wanted to apply it to build something simple.

Idea

On Debian systems, we use debsecan to find vulnerabilities. However, the tool currently provides a simple list of vulnerabilities and packages with no indication of the system's security posture, meaning no criticality information is exposed and no executive summary is provided regarding what needs to be fixed. Of course, one can simply run the following to install existing fixes and be done with it:

apt-get install $(debsecan --suite sid --format packages --only-fixed)

But this is not how things work in corporate environments; you need to provide a report showing the system's previous state and the actions taken to bring it to a safe state. It is all about metrics and reports.

My goal was to use debsecan to generate a list of vulnerabilities, find more detailed information on them, and prioritize them as critical, high, medium, or low. By providing this information to an AI, I could ask it to generate an executive summary report detailing what needs to be addressed immediately and the overall security posture of the system.

Initial Take

My initial thought was to use an existing LLM, either self-hosted or a cloud-based LLM like Gemini (which provides an API with generous limits via AI Studio). I designed functions to output the list of vulnerabilities on the system and provide detailed information on each. The idea was to use these as "tools" for the LLM.

Learnings

- I learned about open-source LLMs using Ollama, which allows you to download and use models on your laptop.

- I used Llama 3.1, Llama 3.2, and Granite 4 on my laptop without a GPU. I managed to run my experiments, even though they were time-consuming and occasionally caused my laptop to crash.

- I learned about Pydantic and how to use it to parse custom JSON schemas with minimal effort.

- I learned about osv.dev, an open-source initiative by Google that aggregates vulnerability information from various sources and provides data in a well-documented JSON schema format.

- I learned about the EPSS (Exploit Prediction Scoring System) and how it is used alongside static CVSS scoring to detect truly critical vulnerabilities. The EPSS score estimates the probability of a vulnerability being exploited in the wild, based on actual real-world attacks.

These experiments led to a collection of notebooks. One key takeaway was that when defining tools, I cannot simply output massive amounts of text because it consumes tokens and increases costs for paid models (though it is fine for local models using your own hardware and energy). Self-hosted models require significant prompting to produce proper output, which helped me understand the real-world application of prompt engineering.

Change of Plans

Despite extensive experimentation, I felt I was nowhere close to a full implementation. While using a Gemini learning tool to study MCP, it suddenly occurred to me: why not write the entire thing as an MCP server? This would save me from implementing the agent side and allow me to hook it into any IDE-based LLM.

Design

This MCP server is primarily a mix of a "tool" (which executes on the server machine to identify installed packages and their vulnerabilities) and a "resource" (which exposes read-only information for a specific CVE ID).

The MCP exposes two tools:

- List Vulnerabilities: This tool identifies vulnerabilities in the packages installed on the system, categorizes them using CVSS and EPSS scores, and provides a dictionary of critical, high, medium, and low vulnerabilities.

- Research Vulnerabilities: Based on the user prompt, the LLM can identify relevant vulnerabilities and pass them to this function to retrieve details such as whether a fix is available, the fixed version, and criticality.
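To illustrate the kind of bucketing the listing tool performs, here is a hypothetical sketch; it is not the logic used in debsecan-mcp. The CVSS bands follow the CVSS v3.x qualitative severity ratings (low below 4.0, medium 4.0-6.9, high 7.0-8.9, critical 9.0 and above). The EPSS cut-off of 0.10 and the rule that a likely-exploited CVE is bumped up one level (medium to high, high to critical) are invented for illustration.

```shell
#!/bin/sh
# Hypothetical severity bucketing from a CVSS base score and an EPSS
# probability. CVSS bands follow the v3.x qualitative ratings; the EPSS
# threshold (0.10) is an illustrative assumption.
#
# Usage: classify_cve CVSS EPSS
classify_cve() {
    awk -v cvss="$1" -v epss="$2" 'BEGIN {
        likely = (epss >= 0.10)   # assumed "likely exploited" cut-off
        if (cvss >= 9.0 || (cvss >= 7.0 && likely)) print "critical"
        else if (cvss >= 7.0 || (cvss >= 4.0 && likely)) print "high"
        else if (cvss >= 4.0) print "medium"
        else print "low"
    }'
}

classify_cve 9.8 0.01   # critical: the CVSS score alone is critical
classify_cve 7.5 0.42   # critical: high CVSS bumped up by EPSS
classify_cve 5.3 0.20   # high: medium CVSS bumped up by EPSS
classify_cve 5.3 0.01   # medium
classify_cve 2.1 0.50   # low: this sketch never bumps low-severity CVEs
```

Returning only the bucketed CVE IDs, rather than full details, is what lets the LLM decide which ones are worth passing to the research tool.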

Vibe Coding

"Vibe coding" is the latest trend, with many claiming that software engineering jobs are a thing of the past. Without going into too much detail, I decided to give it a try. While this is not my first "vibe coded" project (I have done this previously at work using corporate tools), it is my first attempt to vibe code a hobby/learning project.

I chose Antigravity because it seemed to be the only editor providing a sufficient amount of free tokens. For every vibe coding project, I spend time thinking about the barebones skeleton: the modules, function return values, and data structures. This allows me to maintain control over the LLM-generated code so it doesn't become overly complicated or incomprehensible.

As a first step, I wrote down my initial design in a requirements document. In that document, I explicitly called for using debsecan as the model for various components. Additionally, I asked the AI to reference my specific code for the EPSS logic. The reasons were:

- debsecan already solves the core problem; I am simply rebuilding it. debsecan uses a single file generated by the Debian Security team containing all necessary information, which prevents us from needing multiple external sources.

- This provides the flexibility to categorize vulnerabilities within the listing tool itself since all required information is readily available, unlike my original notebook-based design.

I initially used Gemini 3 Flash as the model because I was concerned about exceeding my free limits.

Hiccups

Although it initially seemed successful, I soon noticed discrepancies between the local debsecan outputs and the outputs generated by the tools. I asked the AI to fix this, but after two attempts, it still could not match the outputs. I realized it was writing its own version-comparison logic and failing significantly.

Finally, I instructed it to depend entirely on the python-apt module for version comparison; since it is not on PyPI, I asked it to pull directly from the Git source. This solved some issues, but the problem persisted. By then, my weekly quota was exhausted, and I stopped debugging.

A week later, I resumed debugging with the Claude 3.5 Sonnet model. Within 20-25 minutes, it found the fix, which involved four lines of changes in the parsing logic. However, I ran out of limits again before I could proceed further.

In the requirements, I specified that the list vulnerabilities tool should only provide a dictionary of CVE IDs divided by severity. However, the AI instead provided full text for all vulnerability details, resulting in excessive data, including negligible vulnerabilities, being sent to the LLM. Consequently, the LLM never called the research vulnerabilities tool. Since I had run out of limits, I manually fixed this in a follow-up commit.
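The version-comparison trouble described above is a classic pitfall: Debian version ordering (epochs, tildes, digit runs compared numerically) is neither plain string nor plain numeric comparison. python-apt exposes the real algorithm as apt_pkg.version_compare; from a shell, dpkg --compare-versions does the same job. The sketch below simply delegates to dpkg and prints a few cases that a naive comparison gets wrong; the function name is made up, and the demo is skipped on systems without dpkg.

```shell
#!/bin/sh
# Sketch: Debian version ordering differs from naive comparison.

# deb_newer A B: succeed if version A is strictly newer than version B,
# delegating to dpkg's implementation of the Debian ordering rules.
deb_newer() {
    dpkg --compare-versions "$1" gt "$2"
}

if command -v dpkg >/dev/null 2>&1; then
    deb_newer 1.10 1.9 && echo "1.10 > 1.9 (a plain string compare says the opposite)"
    deb_newer 1.0 1.0~rc1 && echo "1.0 > 1.0~rc1 (a tilde sorts before anything, even the end)"
    deb_newer 1:0.9 2.0 && echo "1:0.9 > 2.0 (the epoch outranks the upstream version)"
else
    echo "dpkg not available; skipping demo" >&2
fi
```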

How to Use

I have published the current work in the debsecan-mcp repository. I have used the same license as the original debsecan. I am not entirely sure how to interpret licenses for vibe-coded projects, but here we are.

To use this, you need to install the tool in a virtual environment and configure your IDE to use the MCP. Here is how I set it up for Visual Studio Code:

- Follow the guide from the VS Code documentation regarding adding an MCP server.

- My global mcp.json looks like this:

{
  "servers": {
    "debsecan-mcp": {
      "command": "uv",
      "args": [
        "--directory",
        "/home/vasudev/Documents/personal/FOSS/debsecan-mcp/debsecan-mcp",
        "run",
        "debsecan-mcp"
      ]
    }
  },
  "inputs": []
}

- I am running it directly from my local codebase using a virtualenv created with uv. You may need to tweak the path based on your installation.

- To use the MCP server in the Copilot chat window, reference it using #debsecan-mcp. The LLM will then use the server for the query.

- Use a prompt like: "Give an executive summary of the system security status and immediate actions to be taken."

- You can observe the LLM using list_vulnerabilities followed by research_cves. Because the first tool only provides CVE IDs based on severity, the LLM is smart enough to research only high and critical vulnerabilities, thereby saving tokens.
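For debugging outside an IDE, it can help to talk to the server by hand. The sketch below relies only on the MCP stdio transport rules (newline-delimited JSON-RPC 2.0 messages, with an initialize request and a notifications/initialized notification before anything else) and asks the server to list its tools, which should include list_vulnerabilities and research_cves. The client name, the protocol version string and the checkout path in the usage comment are assumptions; adjust them to your setup.

```shell
#!/bin/sh
# Sketch: hand-written MCP client messages for the stdio transport.
# Each JSON-RPC 2.0 message must sit on a single line.
mcp_list_tools_messages() {
    printf '%s\n' \
        '{"jsonrpc":"2.0","id":1,"method":"initialize","params":{"protocolVersion":"2024-11-05","capabilities":{},"clientInfo":{"name":"manual-test","version":"0.1"}}}' \
        '{"jsonrpc":"2.0","method":"notifications/initialized"}' \
        '{"jsonrpc":"2.0","id":2,"method":"tools/list"}'
}

# Usage (the path is an assumption). The sleep keeps stdin open long
# enough for the server to answer before it sees end-of-file:
#   { mcp_list_tools_messages; sleep 5; } |
#       uv --directory /path/to/debsecan-mcp run debsecan-mcp
mcp_list_tools_messages
```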

What's Next?

This MCP is not yet perfect and has the following issues:

- The list_vulnerabilities dictionary contains duplicate CVE IDs because the code used a list instead of a set. While the LLM is smart enough to deduplicate these, it still costs extra tokens.

- Because I initially modeled this on debsecan, it uses a raw method for parsing /var/lib/dpkg/status instead of python-apt. I am considering switching to python-apt to reduce maintenance overhead.

- Interestingly, the AI did not add a single unit test, which is disappointing. I will add these once my limits are restored.

- I need to create a cleaner README with usage instructions.

- I need to determine if the MCP can be used via HTTP as well as stdio.
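For context on the python-apt item, here is roughly what "raw" parsing of the dpkg status database involves. This is a hypothetical awk sketch, not the code in debsecan-mcp: it relies on the file's deb822 stanzas being separated by blank lines, and keeps only packages whose Status field reads "install ok installed". python-apt would hide these details, and the many edge cases this ignores, behind its own API.

```shell
#!/bin/sh
# Hypothetical sketch of "raw" parsing of /var/lib/dpkg/status: print
# "package version" for every stanza whose Status is "install ok installed".
# RS="" puts awk in paragraph mode (one stanza per record); FS="\n" makes
# each line of the stanza a field.
installed_packages() {
    awk 'BEGIN { RS = ""; FS = "\n" }
    {
        pkg = ""; ver = ""; installed = 0
        for (i = 1; i <= NF; i++) {
            if ($i ~ /^Package: /) pkg = substr($i, 10)        # after "Package: "
            else if ($i ~ /^Version: /) ver = substr($i, 10)   # after "Version: "
            else if ($i == "Status: install ok installed") installed = 1
        }
        if (installed && pkg != "") print pkg, ver
    }' "${1:-/var/lib/dpkg/status}"
}
```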

Conclusion

Vibe coding is interesting, but things can get out of hand if not managed properly. Even with a good process, code must be reviewed and tested; you cannot blindly trust an AI to handle everything. Even if it adds tests, you must validate them, or you are doomed!

21 Feb 2026 11:43am GMT

Thomas Goirand: Seamlessly upgrading a production OpenStack cluster in 4 hours: with a 2K-line shell script

tl;dr:

To the question "what does it take to upgrade OpenStack?", my personal answer is: less than 2K lines of dash script. Here I'll describe its internals, and why I believe it is the correct solution.

Why write this blog post

During FOSDEM 2024, I was asked "how do you handle upgrades?". I answered with a big smile and a short "with a very small shell script", as I couldn't explain in 2 minutes how it was done. Just saying "it is great this way" doesn't give readers enough to trust it. Why and how did I do it the right way? This blog post is an attempt to answer this question better and in more depth.

Upgrading OpenStack in production

I wrote this script maybe 2 or 3 years ago. I'm only blogging about it today because … I did such an upgrade in production on a public cloud last Tuesday evening (i.e. the first region of the Infomaniak public cloud). I'd say the cluster is moderately large (as of today: about 8K+ VMs running, 83 compute nodes, 12 network nodes, … for a total of 10880 physical CPU cores and 125 TB of RAM if I only count the compute servers). It took "only" 4 hours to do the upgrade (though I have already written more code to speed this up next time…). It went super smoothly, without a glitch. I mostly just sat reading the script output… and went to bed once it finished running. The next day, all my colleagues at Infomaniak nicely congratulated me on how smoothly it went (a big thanks to all of you who did). I couldn't have dreamed of a smoother upgrade! :)

Still not impressed? Boring read? Yeah… let's dive into more technical details.

Intention behind the implementation

My script isn't perfect. I won't ever pretend it is. But at least it minimizes the downtime of every OpenStack service. It is also a "by the book" implementation of what's written in the OpenStack docs, following every upstream recommendation. As a result, it is fully seamless for some OpenStack services, and as HA as OpenStack can be for others. The upgrade process is of course idempotent and can be re-run in case of failure. Here's why.

General idea

My upgrade script does things in a specific order, respecting what is documented about upgrades in the OpenStack documentation. It basically does:

- Upgrade all dependencies

- Upgrade all services one by one, across the whole cluster

Installing dependencies

The first thing the upgrade script does is:

- disable puppet on all nodes of the cluster

- switch the APT repository

- apt-get update on all nodes

- install library dependencies on all nodes

For this last step, a static list of all needed dependency upgrades is maintained for each OpenStack release upgrade and for each type of node. Then, for every package in this list, the script checks with dpkg-query that the package is really installed, and with apt-cache policy that it really is going to be upgraded (maybe there's an easier way to do this?). This way, no package is marked as manually installed by mistake during the upgrade process. This ensures that "apt-get --purge autoremove" really does what it should, and that the script is really idempotent.

The idea, then, is that once all dependencies are installed, upgrading and restarting leaf packages (i.e. OpenStack services like Nova, Glance, Cinder, etc.) is very fast, because the apt-get command doesn't need to install all the dependencies. So at this point, doing "apt-get install python3-cinder", for example (which will also, thanks to dependencies, upgrade cinder-api and cinder-scheduler if it's a controller node), only takes a few seconds. This principle applies to all nodes (controller nodes, network nodes, compute nodes, etc.), which helps a lot in speeding up the upgrade and reducing unavailability.
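A hypothetical sketch of that filtering step, using only the two tools named above (dpkg-query and apt-cache policy). It prints the packages from a given list that are installed and have a different candidate version, so that a later apt-get install only touches packages that were already present. The function names are made up, and parsing apt-cache policy output is an assumption that only holds with untranslated labels, hence LC_ALL=C.

```shell
#!/bin/sh
# Hypothetical sketch: keep only dependency-list packages that are
# installed AND have a pending upgrade.

# Reads "apt-cache policy PKG" output on stdin; succeeds when the package is
# installed and the candidate version differs from the installed one.
policy_needs_upgrade() {
    awk '
        $1 == "Installed:" { inst = $2 }
        $1 == "Candidate:" { cand = $2 }
        END {
            # Appending "" forces a string (not numeric) comparison.
            ok = inst != "" && inst != "(none)" && cand != "" && cand != "(none)" && (inst "") != (cand "")
            exit (ok ? 0 : 1)
        }'
}

# Print each given package that dpkg-query reports as installed and that
# apt-cache policy shows as upgradable.
filter_upgradable() {
    for pkg in "$@"; do
        status="$(dpkg-query -W -f='${Status}' "$pkg" 2>/dev/null)" || continue
        [ "$status" = "install ok installed" ] || continue
        if LC_ALL=C apt-cache policy "$pkg" | policy_needs_upgrade; then
            echo "$pkg"
        fi
    done
}
```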

hapc

At its core, the oci-cluster-upgrade-openstack-release script uses haproxy-cmd (i.e. /usr/bin/hapc) to drain each to-be-upgraded API server from haproxy. Hapc is a simple Python wrapper around the haproxy admin socket: it sends commands to it through an easy-to-understand CLI. This makes it possible to reliably upgrade an API service only after it's been drained. Draining means haproxy stops giving the backend server new queries, and one just waits for the last query to finish and the client to disconnect from HTTP. If you don't know hapc / haproxy-cmd, I recommend trying it: once you've tested it, it's going to be hard to stop using it. Its bash-completion script makes it VERY easy to use, and it is helpful in production. But not only there: it is also nice to have when writing this type of upgrade script. Let's dive into haproxy-cmd.

Example on how to use haproxy-cmd

Let me show you. First, ssh into one of the 3 controllers and find where the virtual IP (VIP) is located, with "crm resource locate openstack-api-vip" or with the simpler "crm status". Then ssh into the server that holds the VIP, and drain it away from haproxy:

$ hapc list-backends
$ hapc drain-server --backend glancebe --server cl1-controller-1.infomaniak.ch --verbose --wait --timeout 50
$ apt-get install glance-api
$ hapc enable-server --backend glancebe --server cl1-controller-1.infomaniak.ch

Upgrading the control plane

My upgrade script uses hapc just like above. For each OpenStack project, the steps are done in this order on the first node holding the VIP:

- "hapc drain-server" of the API, so haproxy gracefully stops querying it

- stop all services on that node (including non-API services): stop, disable and mask with systemd.

- upgrade that service's Python code. For example, "apt-get install python3-nova" will also pull nova-api, nova-conductor, nova-novncproxy, etc. However, the services won't start automatically, because they were stopped, disabled and masked in the previous step.

- perform the db_sync so that the db is up-to-date [1]

- start all services (unmask, enable and start with systemd)

- re-enable the API backend with "hapc enable-server"
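
The per-project sequence above could look roughly like the following shell function. It is a sketch under assumptions, not the author's script. The function name and argument order are made up. The db sync command is passed in because it differs between projects ("glance-manage db_sync" is one real example). The hapc flags are the ones shown in the example earlier.

```shell
#!/bin/sh
# Hypothetical sketch of the per-project sequence on one controller.
#   $1 haproxy backend, $2 this node's server name in haproxy,
#   $3 package to install, $4 db sync command, then the project's systemd units.
upgrade_project_on_node() {
    backend=$1 server=$2 pkg=$3 dbsync=$4
    shift 4
    # drain the API from haproxy (flags as in the hapc example above)
    hapc drain-server --backend "$backend" --server "$server" --wait --timeout 50 || return 1
    # stop, disable and mask every unit, so the upgrade cannot restart them
    for unit in "$@"; do
        systemctl stop "$unit" && systemctl disable "$unit" \
            && systemctl mask "$unit" || return 1
    done
    # upgrade the code
    DEBIAN_FRONTEND=noninteractive apt-get install -y "$pkg" || return 1
    # [1] db sync; $dbsync is unquoted on purpose so it splits into words
    $dbsync || return 1
    # unmask, enable and start the units again
    for unit in "$@"; do
        systemctl unmask "$unit" && systemctl enable "$unit" \
            && systemctl start "$unit" || return 1
    done
    # put the API back into haproxy's rotation
    hapc enable-server --backend "$backend" --server "$server"
}
```

For example: upgrade_project_on_node glancebe cl1-controller-1.infomaniak.ch glance-api "glance-manage db_sync" glance-api. Any failing step aborts, which leaves the API drained and the units masked, so an operator can investigate.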

Starting at [1], the risk is that the other nodes still run the old version of the code, which may not be compatible with the new database schema. But this doesn't last long, because the next step is to take care of the other (usually 2) nodes of the OpenStack control plane:

- "hapc drain-server" of the API of the other 2 controllers

- stop all services on these 2 controllers [2]

- upgrade the package

- start all services

So while there's technically zero downtime, some issues may still happen between [1] and [2] above, because the new DB schema and the old code (both for the API and for other services) are up and running at the same time. These are, however, supposed to be rare cases. Some OpenStack projects don't even have DB changes between some OpenStack releases, and the old code often continues to work for most queries against the upgraded DB. The cluster also stays in that state for a very short time, so that's fine, and better than a full API downtime.

Satellite services

Then there are satellite services that need to be upgraded, like Neutron, Nova and Cinder. Nova is the least problematic, as it has all the code to rewrite JSON object schemas on the fly, so that it continues to work during an upgrade. However, it's a known issue that Cinder doesn't have this feature (last time I checked), and it's probably the same for Neutron (maybe recent-ish versions of OpenStack do use oslo.versionedobjects?). Anyway, the upgrade on these nodes is done right after the control plane, for each service.

Parallelism and upgrade timings

As we're dealing with potentially hundreds of nodes per cluster, a lot of operations are performed in parallel. I chose to simply use the shell's & operator with some "wait" calls, so that not too many jobs run in parallel. For example, when disabling SSH on all nodes, this is done 24 nodes at a time, which is fine. The number of nodes depends on the type of operation. For example, while it's perfectly OK to disable puppet on 24 nodes at the same time, it is not OK to do that with Neutron services. In fact, each time a Neutron agent is restarted, the script explicitly waits for 30 seconds. This conveniently avoids a hailstorm of messages in RabbitMQ, and keeps neutron-rpc-server from becoming too busy. All of this waiting is necessary, and it is one of the reasons why upgrading a (moderately big) cluster can sometimes take that long.
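
The batching described above can be sketched with nothing more than & and wait. This is an illustrative helper, not the author's code, and run_in_batches and its arguments are hypothetical. It waits for a whole batch to finish before starting the next one, which is simpler than keeping exactly N jobs running at all times.

```shell
#!/bin/sh
# Hypothetical helper: run a command once per host, at most $1 at a time.
#   $1: batch size, $2: whitespace-separated host list,
#   remaining args: the command to run; the host name is appended to it.
run_in_batches() {
    batch=$1
    hosts=$2
    shift 2
    running=0
    for host in $hosts; do          # $hosts unquoted: split on whitespace
        "$@" "$host" &              # start the job in the background
        running=$((running + 1))
        if [ "$running" -ge "$batch" ]; then
            wait                    # let the whole batch finish
            running=0
        fi
    done
    wait                            # wait for the last, possibly partial, batch
}
```

For example, run_in_batches 24 "$ALL_NODES" disable_puppet, where $ALL_NODES and disable_puppet are hypothetical (a host list and a function taking a host name). For Neutron agents, one would instead loop over the hosts one at a time, with a sleep 30 after each restart.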

Not using config management tooling

Some of my colleagues would have preferred that I use something like Ansible. However, there's no reason to use such a tool if the idea is just to run some shell commands on every server. It is way more efficient (in terms of programming) to just use bash / dash to do the work. And if you want my point of view about Ansible: using YAML for this kind of programming would be crazy. YAML is simply not suited to a job where if, case, and loops are needed. I am well aware that Ansible has workarounds and that it could be done, but it wasn't my choice.

21 Feb 2026 12:44am GMT

20 Feb 2026

Bits from Debian: Proxmox Platinum Sponsor of DebConf26

We are pleased to announce that Proxmox has committed to sponsor DebConf26 as a Platinum Sponsor.

Proxmox develops powerful, yet easy-to-use open-source server solutions. The comprehensive open-source ecosystem is designed to manage diverse IT landscapes, from single servers to large-scale distributed data centers. Our unified platform integrates server virtualization, easy backup, and rock-solid email security, ensuring seamless interoperability across the entire portfolio. With the Proxmox Datacenter Manager, the ecosystem also offers a "single pane of glass" for centralized management across different locations.

Since 2005, all Proxmox solutions have been built on the rock-solid Debian platform. We are proud to return to DebConf26 as a sponsor because the Debian community provides the foundation that makes our work possible. We believe in keeping IT simple, open, and under your control.

Thank you very much, Proxmox, for your support of DebConf26!

Become a sponsor too!

DebConf26 will take place from July 20th to July 25th 2026 in Santa Fe, Argentina, and will be preceded by DebCamp, from July 13th to July 19th 2026.

DebConf26 is accepting sponsors! Interested companies and organizations may contact the DebConf team through sponsors@debconf.org, and visit the DebConf26 website at https://debconf26.debconf.org/sponsors/become-a-sponsor/.

20 Feb 2026 5:26pm GMT

Reproducible Builds (diffoscope): diffoscope 313 released

The diffoscope maintainers are pleased to announce the release of diffoscope version 313. This version includes the following changes:

[ Chris Lamb ]

* Don't fail the entire pipeline if deploying to PyPI automatically fails.

[ Vagrant Cascadian ]

* Update external tool reference for 7z on guix.

You can find out more by visiting the project homepage.

20 Feb 2026 12:00am GMT

19 Feb 2026

Peter Pentchev: Ringlet release: fnmatch-regex 0.3.0

Version 0.3.0 of the fnmatch-regex Rust crate is now available. The major new addition is the glob_to_regex_pattern function, which only converts the glob pattern to a regular expression pattern, without building a regular expression matcher. Two new features, regex and std, are also added; both are enabled by default.

For more information, see the changelog at the homepage.

19 Feb 2026 11:18am GMT

18 Feb 2026

Clint Adams: Holger says

sq network keyserver search $id ; sq cert export --cert=$id > $id.asc

18 Feb 2026 10:59pm GMT