04 Mar 2026

Planet GNOME

Sophie Herold: What you might want to know about painkillers

Painkillers are essential. (There are indications that Neanderthals already used them.) However, many people are unaware of aspects of them that could be relevant in practice. Since I learned some new things recently, here is a condensed info dump about painkillers.

Many aspects here are oversimplified in the hope of raising some initial awareness. Please consult your doctor or pharmacist about your personal situation, if that's possible. I will not talk about opioids. Their addiction potential should never be underestimated.

Here is the short summary:

- Find out which substance and dose works for you.

- With most painkillers, check if you need to take Pantoprazole to protect your stomach.

- Never overdose paracetamol, never take it with alcohol.

- If possible, take pain medication early and directly in the dose you need.

- Don't take pain medication for more than 15 days a month against headaches. For some medication, the limit is even fewer days.

- If you have any preexisting conditions, health risks, or take additional medication, check very carefully if any of these things could interact with your pain medication.

Not all substances will work for you

It is quite likely that some substances will not work for you for some kinds of pain. If something doesn't seem to work for you, consider trying a different substance. I have seen many doctors being very confident that a substance must work. The statistics often contradict them.

Common over the counter options are:

- Ibuprofen

- Paracetamol

- Naproxen

- Acetylsalicylic Acid (ASS, also known as Aspirin)

- Diclofenac

All of them also reduce fever. All of them, except Paracetamol, are anti-inflammatory. The anti-inflammatory effect is highest in Diclofenac and Naproxen, still significant in Ibuprofen.

It might very well be that none of them work for you. In that case, there might still be other options to prevent or treat your pain.

Gastrointestinal (GI) side effects

All nonsteroidal anti-inflammatory drugs (NSAIDs), that is, Ibuprofen, Naproxen, ASS, and Diclofenac, can be hard on your stomach. This can be somewhat mitigated by taking them after a meal and with a lot of water.

Among the risk factors you should be aware of are age above 60, a history of GI issues, intake of an SSRI, SNRI, or steroids, consumption of alcohol, and smoking. The risk is lower with Ibuprofen, but higher for ASS, Naproxen, and, especially, Diclofenac.

It is common to mitigate the GI risks by taking a Proton Pump Inhibitor (PPI) like Pantoprazole 20 mg. This is usually done if any of the risk factors apply to you. You can limit the intake to the days where you use painkillers. You only need one dose per day, 30-60 minutes before a meal. Then you can take the first painkiller for the day after the meal. Taking Pantoprazole for a few days a month is usually fine. If you need to take it continuously or very often, you have to very carefully weigh all the side effects of PPIs.

Paracetamol doesn't have the same GI risks. If it is effective for you, it can be an option to use it instead. It is also an option to take a lower dose of an NSAID and a lower dose of paracetamol to minimize the risks of both.

Metamizole is also a potential alternative. It might, however, not be available in your country, due to a rare severe side effect. If available, it is still a potential option in cases where other side effects can also become very dangerous. It is usually prescription-only.

For headaches, you might want to look into Triptans. They are also usually prescription-only.

Liver related side effects

Paracetamol can negatively affect the liver. It is therefore very important to honor its maximum dosage of 4000 mg per day, or lower for people with risk factors. Taking paracetamol more than 10 days per month can be a risk for the liver. Monitoring liver values can help, but conclusive changes in your blood work might be delayed until initial damage has happened.

Alcohol consumption is a risk factor, and the risk increases if the intake overlaps. To be safe, avoid taking paracetamol for 24 hours after alcohol consumption.

NSAIDs have a much lower risk of affecting the liver negatively.

Cardiovascular risks

ASS is also prescribed as a blood thinner. All NSAIDs have this effect to some extent. However, for ASS, the blood thinning effect extends to more than a week after it has been discontinued. Surgeries should be avoided until that effect has subsided. It also increases the risk for hemorrhagic stroke. If you have migraine with aura, you might want to avoid ASS and Diclofenac.

NSAIDs can also increase the risk of thrombosis. If you are in a risk group for that, you should consider avoiding Diclofenac.

Paracetamol increases blood pressure, which can be relevant if there are preexisting risks like already increased blood pressure.

If you take ASS as a blood thinner, take it at least 60 minutes before Metamizole. Otherwise, the blood thinning effect of the ASS might be suppressed.

Effective application

NSAIDs have a therapeutic ceiling for pain relief. You might not see an increased benefit beyond a dose of 200 mg or 400 mg for Ibuprofen. However, this ceiling does not apply for their anti-inflammatory effect, which might increase until 600 mg or 800 mg. Also, a higher dose than 400 mg can often be more effective to treat period pain. Higher doses can reduce the non-pain symptoms of migraine. Diclofenac is commonly used beyond its pain relief ceiling for rheumatoid arthritis.

Take pain medication early and in a high enough dose. Several mechanisms can increase the benefit of pain medication. Knowing your effective dose and the early signs to take it is important. If you have early signs of a migraine attack, or you know that you are getting your period, it often makes sense to start the medication before the pain onset. Pain can have cascading effects in the body, and often there is a minimum amount of medication that you need to get a good effect, while a lower dose is almost ineffective.

As mentioned before, you can combine an NSAID and Paracetamol. The effects of NSAIDs and Paracetamol can enhance each other, potentially reducing your required dose. In an emergency, it can be safe to combine the maximum dosage of both for a short time. With Ibuprofen and Paracetamol, you can alternate between them every three hours to soften the respective lows in the 6-hour cycle of each of them.

Caffeine can support the pain relief. A cup of coffee or a double-espresso might be enough.

Medication overuse headache

Don't use pain medication against headaches for more than 15 days a month. If you are using pain medication too often for headaches, you might develop a medication overuse headache (German: Medikamentenübergebrauchskopfschmerz). It can be reversed by taking a break from any pain medication. If you are using triptans (not further discussed here), the limit is 10 days instead of 15 days.

While less likely, a medication overuse headache can also appear when treating a different pain than headaches.

If you have more headache days than your painkillers allow treating, there are a lot of medications for migraine prophylaxis. Some, like Amitriptyline, can also be effective for a variety of other kinds of headaches.

04 Mar 2026 7:22pm GMT

03 Mar 2026

Michael Meeks: 2026-03-03 Tuesday

- Planning call in the morning, mail chew, prodded a proposal, lunch, sync with Laser, Anna & Andras, customer call.

- Pleased to see a really nice The Open Road to Freedom index, making it easier to see what is going on.

- Finally managed to get my Apple account to let me pay for a developer subscription - after lots of compound problems wasting hours. Clearly I've hit some buggy indeterminate state - still can't see subscriptions or country information: perhaps I'm stuck mid-atlantic between two systems.

03 Mar 2026 9:00pm GMT

Martín Abente Lahaye: [Call for Applicants] Flatseal at Igalia’s Coding Experience 2026

Six years ago I released Flatseal. Since then, it has become an essential tool in the Flatpak ecosystem helping users understand and manage application permissions. But there's still a lot of work to do!

I'm thrilled to share that my employer Igalia has selected Flatseal for its Coding Experience 2026 mentoring program.

The Coding Experience is a grant program for people studying Information Technology or related fields. It doesn't matter if you're enrolled in a formal academic program or are self-taught. The goal is to provide you with real world professional experience by working closely with seasoned mentors.

As a participant, you'll work with me to improve Flatseal, addressing long standing limitations and developing features needed for recent Flatpak releases. Possible areas of work include:

- Redesign and refactor Flatseal's permissions backend

- Support denying unassigned permissions

- Support reading system-level overrides

- Support USB devices lists permissions

- Support conditional permissions

- Support most commonly used portals

This is a great opportunity to gain real-world experience, while contributing to open source and helping millions of users.

Applications are open from February 23rd to April 3rd. Learn more and apply here!

03 Mar 2026 2:42pm GMT

Matthew Garrett: To update blobs or not to update blobs

A lot of hardware runs non-free software. Sometimes that non-free software is in ROM. Sometimes it's in flash. Sometimes it's not stored on the device at all, it's pushed into it at runtime by another piece of hardware or by the operating system. We typically refer to this software as "firmware" to differentiate it from the software run on the CPU after the OS has started [1], but a lot of it (and, these days, probably most of it) is software written in C or some other systems programming language and targeting Arm or RISC-V or maybe MIPS and even sometimes x86 [2]. There's no real distinction between it and any other bit of software you run, except it's generally not run within the context of the OS [3]. Anyway. It's code. I'm going to simplify things here and stop using the words "software" or "firmware" and just say "code" instead, because that way we don't need to worry about semantics.

A fundamental problem for free software enthusiasts is that almost all of the code we're talking about here is non-free. In some cases, it's cryptographically signed in a way that makes it difficult or impossible to replace it with free code. In some cases it's even encrypted, such that even examining the code is impossible. But because it's code, sometimes the vendor responsible for it will provide updates, and now you get to choose whether or not to apply those updates.

I'm now going to present some things to consider. These are not in any particular order and are not intended to form any sort of argument in themselves, but are representative of the opinions you will get from various people and I would like you to read these, think about them, and come to your own set of opinions before I tell you what my opinion is.

THINGS TO CONSIDER

- Does this blob do what it claims to do? Does it suddenly introduce functionality you don't want? Does it introduce security flaws? Does it introduce deliberate backdoors? Does it make your life better or worse?

- You're almost certainly being provided with a blob of compiled code, with no source code available. You can't just diff the source files, satisfy yourself that they're fine, and then install them. To be fair, even though you (as someone reading this) are probably more capable of doing that than the average human, you're likely not doing that even if you are capable because you're also likely installing kernel upgrades that contain vast quantities of code beyond your ability to understand [4]. We don't rely on our personal ability, we rely on the ability of those around us to do that validation, and we rely on an existing (possibly transitive) trust relationship with those involved. You don't know the people who created this blob, you likely don't know people who do know the people who created this blob, these people probably don't have an online presence that gives you more insight. Why should you trust them?

- If it's in ROM and it turns out to be hostile then nobody can fix it ever

- The people creating these blobs largely work for the same company that built the hardware in the first place. When they built that hardware they could have backdoored it in any number of ways. And if the hardware has a built-in copy of the code it runs, why do you trust that that copy isn't backdoored? Maybe it isn't and updates would introduce a backdoor, but in that case if you buy new hardware that runs new code aren't you putting yourself at the same risk?

- Designing hardware where you're able to provide updated code and nobody else can is just a dick move [5]. We shouldn't encourage vendors who do that.

- Humans are bad at writing code, and code running on ancillary hardware is no exception. It contains bugs. These bugs are sometimes very bad. This paper describes a set of vulnerabilities identified in code running on SSDs that made it possible to bypass encryption secrets. The SSD vendors released updates that fixed these issues. If the code couldn't be replaced then anyone relying on those security features would need to replace the hardware.

- Even if blobs are signed and can't easily be replaced, the ones that aren't encrypted can still be examined. The SSD vulnerabilities above were identifiable because researchers were able to reverse engineer the updates. It can be more annoying to audit binary code than source code, but it's still possible.

- Vulnerabilities in code running on other hardware can still compromise the OS. If someone can compromise the code running on your wifi card then if you don't have a strong IOMMU setup they're going to be able to overwrite your running OS.

- Replacing one non-free blob with another non-free blob increases the total number of non-free blobs involved in the whole system, but doesn't increase the number that are actually executing at any point in time.

Ok we're done with the things to consider. Please spend a few seconds thinking about what the tradeoffs are here and what your feelings are. Proceed when ready.

I trust my CPU vendor. I don't trust my CPU vendor because I want to, I trust my CPU vendor because I have no choice. I don't think it's likely that my CPU vendor has designed a CPU that identifies when I'm generating cryptographic keys and biases the RNG output so my keys are significantly weaker than they look, but it's not literally impossible. I generate keys on it anyway, because what choice do I have? At some point I will buy a new laptop because Electron will no longer fit in 32GB of RAM and I will have to make the same affirmation of trust, because the alternative is that I just don't have a computer. And in any case, I will be communicating with other people who generated their keys on CPUs I have no control over, and I will also be relying on them to be trustworthy. If I refuse to trust my CPU then I don't get to computer, and if I don't get to computer then I will be sad. I suspect I'm not alone here.

Why would I install a code update on my CPU when my CPU's job is to run my code in the first place? Because it turns out that CPUs are complicated and messy and they have their own bugs, and those bugs may be functional (for example, some performance counter functionality was broken on Sandybridge at release, and was then fixed with a microcode blob update) and if you update it your hardware works better. Or it might be that you're running a CPU with speculative execution bugs and there's a microcode update that provides a mitigation for that even if your CPU is slower when you enable it, but at least now you can run virtual machines without code in those virtual machines being able to reach outside the hypervisor boundary and extract secrets from other contexts. When it's put that way, why would I not install the update?

And the straightforward answer is that theoretically it could include new code that doesn't act in my interests, either deliberately or not. And, yes, this is theoretically possible. Of course, if you don't trust your CPU vendor, why are you buying CPUs from them, but well maybe they've been corrupted (in which case don't buy any new CPUs from them either) or maybe they've just introduced a new vulnerability by accident, and also you're in a position to determine whether the alleged security improvements matter to you at all. Do you care about speculative execution attacks if all software running on your system is trustworthy? Probably not! Do you need to update a blob that fixes something you don't care about and which might introduce some sort of vulnerability? Seems like no!

But there's a difference between a recommendation for a fully informed device owner who has a full understanding of threats, and a recommendation for an average user who just wants their computer to work and to not be ransomwared. A code update on a wifi card may introduce a backdoor, or it may fix the ability for someone to compromise your machine with a hostile access point. Most people are just not going to be in a position to figure out which is more likely, and there's no single answer that's correct for everyone. What we do know is that where vulnerabilities in this sort of code have been discovered, updates have tended to fix them - but nobody has flagged such an update as a real-world vector for system compromise.

My personal opinion? You should make your own mind up, but also you shouldn't impose that choice on others, because your threat model is not necessarily their threat model. Code updates are a reasonable default, but they shouldn't be unilaterally imposed, and nor should they be blocked outright. And the best way to shift the balance of power away from vendors who insist on distributing non-free blobs is to demonstrate the benefits gained from them being free - a vendor who ships free code on their system enables their customers to improve their code and enable new functionality and make their hardware more attractive.

It's impossible to say with absolute certainty that your security will be improved by installing code blobs. It's also impossible to say with absolute certainty that it won't. So far evidence tends to support the idea that most updates that claim to fix security issues do, and there's not a lot of evidence to support the idea that updates add new backdoors. Overall I'd say that providing the updates is likely the right default for most users - and that that should never be strongly enforced, because people should be allowed to define their own security model, and whatever set of threats I'm worried about, someone else may have a good reason to focus on different ones.

[1] Code that runs on the CPU before the OS is still usually described as firmware - UEFI is firmware even though it's executing on the CPU, which should give a strong indication that the difference between "firmware" and "software" is largely arbitrary

[3] Because UEFI makes everything more complicated, UEFI makes this more complicated. Triggering a UEFI runtime service involves your OS jumping into firmware code at runtime, in the same context as the OS kernel. Sometimes this will trigger a jump into System Management Mode, but other times it won't, and it's just your kernel executing code that got dumped into RAM when your system booted.

[4] I don't understand most of the diff between one kernel version and the next, and I don't have time to read all of it either.

[5] There's a bunch of reasons to do this, the most reasonable of which is probably not wanting customers to replace the code and break their hardware and deal with the support overhead of that, but not being able to replace code running on hardware I own is always going to be an affront to me.

03 Mar 2026 3:09am GMT

02 Mar 2026

Mathias Bonn: Mahjongg: Second Year in Review

Another year of work on Mahjongg is over. This was a pretty good year, with smaller improvements from several contributors. Let's take a look at what's new in Mahjongg 49.x.

Game Session Restoration

Thanks to contributions by François Godin, Mahjongg now remembers the previous game in progress before quitting. On startup, you have the option to resume the game or restart it.

New Pause Screen

Pausing a game used to only blank out the tiles and dim them. Since games restored on startup are paused, the lack of information was confusing. A new pause screen has since been added, with prominent buttons to resume/restart or quit. Thanks to Jeff Fortin for raising this issue!

A new Escape keyboard shortcut for pausing the game has also been added, and the game now pauses automatically when opening menus and dialogs.

New Game Rules Dialog

Help documentation for Mahjongg has existed for a long time, but it always seemed less than ideal to open and read through when you just want to get started. Keeping the documentation up-to-date and translated was also difficult. A new Game Rules dialog has replaced it, giving a quick overview of what the game is about.

Accessibility Improvements

Tiles without a free long edge now shake when clicked, to indicate that they are not selectable. Tiles are also slightly dimmer in dark mode now, and follow the high contrast setting of the operating system.

When attempting to change the layout while a game is in progress, a confirmation dialog about ending the current game is shown.

Fixes and Modernizations

Various improvements to the codebase have been made, and tests were added for the game algorithm and layout loading. Performance issues with larger numbers of entries in the Scores dialog were fixed, as well as an issue focusing the username entry at times when saving a score. Some small rendering issues related to fractional scaling were also addressed.

Mahjongg used to load its tile assets using GdkPixbuf, but since that's being phased out, it's now using Rsvg directly instead. The upcoming GTK 4.22 release is introducing a new internal SVG renderer, GtkSvg, which we will hopefully start using in the near future.

GNOME Circle Membership

After a few rounds of reviews from Gregor Niehl and Tobias Bernard, Mahjongg was accepted into GNOME Circle. Mahjongg now has a page on apps.gnome.org, instructions for contributing and testing on welcome.gnome.org, as well as a new app icon by Tobias.

Future Improvements

The following items are next on the roadmap:

- Port the Scores dialog to the one provided by libgnome-games-support

- Use GtkSvg instead of Rsvg for rendering tile assets

- Look into adding support for keyboard navigation (and possibly gamepad support)

Download Mahjongg

The latest version of Mahjongg is available on Flathub.

That's all for now!

02 Mar 2026 7:41pm GMT

27 Feb 2026

This Week in GNOME: #238 Navigating Months

Update on what happened across the GNOME project in the week from February 20 to February 27.

GNOME Core Apps and Libraries

Calendar

A simple calendar application.

Hari Rana | TheEvilSkeleton (any/all) 🇮🇳 🏳️‍⚧️ announces

Georges livestreamed himself reviewing and merging parts of merge request !598, making the month view easier than ever to navigate with a keyboard!

This merge request introduces a coordinate-aware navigation system in the month view, which computes the coordinates of relevant event widgets and finds the nearest widget relative to the one in focus when using arrow keys. When tabbing, focus moves chronologically, meaning focus moves down until there are no event widgets overlaying that specific cell, which then moves focus to the topmost event widget found in the next cells or rows; tabbing backwards goes in the opposite direction.
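The arrow-key behaviour described above can be illustrated with a small sketch. This is not the code from the merge request (GNOME Calendar is not written in Python); `EventWidget`, `DIRECTIONS` and `nearest_in_direction` are hypothetical names, and the sketch assumes each widget is reduced to the coordinates of its centre, with y growing downwards.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class EventWidget:
    """Hypothetical stand-in for an event widget; (x, y) is its centre."""
    name: str
    x: float
    y: float


# Unit vectors per arrow key; y grows downwards, as in screen coordinates.
DIRECTIONS = {"left": (-1, 0), "right": (1, 0), "up": (0, -1), "down": (0, 1)}


def nearest_in_direction(focused, widgets, direction):
    """Return the closest widget lying in `direction` from `focused`, or None."""
    dx, dy = DIRECTIONS[direction]
    best, best_distance = None, math.inf
    for widget in widgets:
        if widget is focused:
            continue
        off_x, off_y = widget.x - focused.x, widget.y - focused.y
        # Skip widgets that are not on the requested side of the focused one.
        if off_x * dx + off_y * dy <= 0:
            continue
        distance = math.hypot(off_x, off_y)
        if distance < best_distance:
            best, best_distance = widget, distance
    return best


standup = EventWidget("standup", 0, 0)
review = EventWidget("review", 100, 0)
release = EventWidget("release", 0, 80)
widgets = [standup, review, release]

print(nearest_in_direction(standup, widgets, "right"))
print(nearest_in_direction(standup, widgets, "down"))
```

A real implementation would likely also have to weigh how well a candidate is aligned with the focused widget, not only its distance; the sketch leaves that out.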

To illustrate the sheer complexity of navigation in a calendaring app, here is Georges's live reaction:

"Wow, congratulations, this is looking INSANE, Hari… The hell is going on here"

- Georges, maintainer of GNOME Calendar - https://youtu.be/smofXzVwNwQ?t=1h24m6s

Blueprint

A markup language for app developers to create GTK user interfaces.

James Westman says

Blueprint 0.20.0 is here! This update includes a ton of features from many contributors. Most significantly, this release includes a linter thanks to Neighborhoodie and the STA grant. The linter catches common mistakes that go beyond simple syntax and type checking. Due to the nature of these checks, it may still have some rough edges, so please file an issue if you see room for improvement.

Also of note are a number of new completion suggestions while editing, improved type checking in expressions, and support for newer GTK features like Gtk.TryExpression.

GNOME Circle Apps and Libraries

Tobias Bernard says

Sudoku by Sepehr Rasouli was accepted into Circle! It's what it says on the tin: A dead-simple, polished GNOME app for playing Sudoku. Congratulations 🥳

Tobias Bernard reports

Gradia by Alexander Vanhee was accepted into Circle ✨️

Edit and annotate screenshots, draw on them, add a background, and share them with the world.

Third Party Projects

Anton Isaiev says

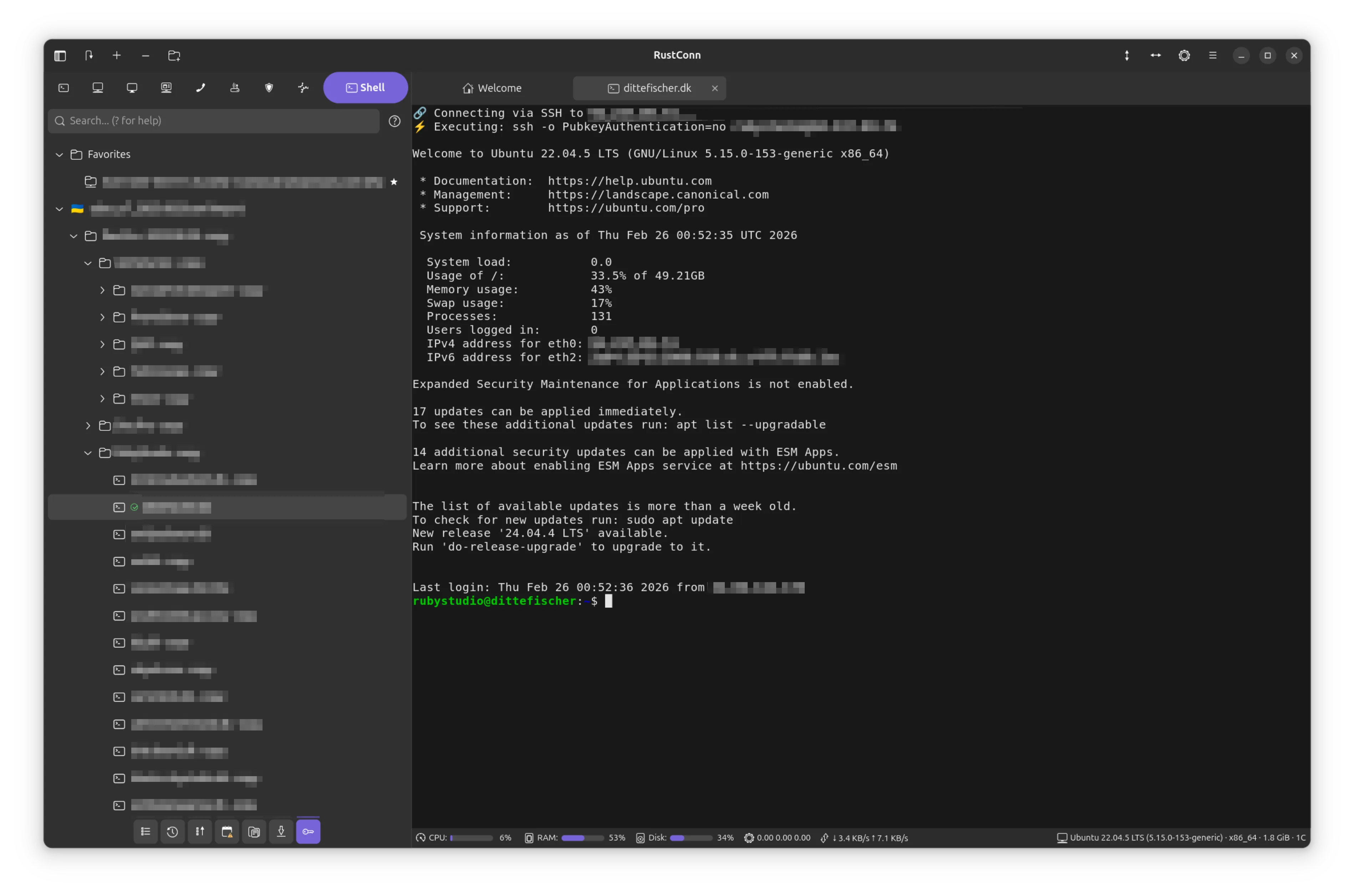

RustConn 0.9.3 is out!

This release cycle was all about closing the gap between "it works" and "it works exactly how you'd expect." I successfully closed every single open issue and feature request from this period, delivering major quality-of-life and security upgrades for anyone who lives in a terminal.

Highlights from this release:

Agentless Remote Monitoring: A MobaXterm-style bar now sits below your SSH, Telnet, and Kubernetes terminals, parsing /proc/* over the existing session to show live CPU, memory, disk, and network stats.

Lightning-Fast Navigation: A new Command Palette (Ctrl+P) brings VS Code-style fuzzy searching for connections, tags, and commands. I also added full support for Custom Keybindings, letting you remap 30+ actions.

Visual Organization: Tame massive connection lists with pinned Favorites, custom GTK icons or emojis, and protocol-colored tabs with group indicators (e.g., "Production" or "Staging").

Modernized UI: Eight dialogs were migrated to modern adw::Dialog with adaptive sizing, and I've added screen reader support to password and connection dialogs.

Rock-Solid Security: A massive backend overhaul! Stored credentials now use AES-256-GCM with Argon2id, and the entire codebase was migrated to SecretString to prevent memory leaks. I also added full support for SSH port forwarding (-L, -R, -D).

pass Backend: A huge shoutout to community member @h3nnes for contributing a pass (passwordstore.org) backend with full GUI and CLI support!

Under the Hood: Migrated to the Rust 2024 edition, added smart protocol fallbacks for RDP/VNC to gracefully handle negotiation failures, and reached 100% translation coverage across 15 languages.

https://github.com/totoshko88/RustConn https://flathub.org/en/apps/io.github.totoshko88.RustConn
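To give an idea of what "agentless" monitoring through /proc can look like, here is a minimal sketch in Python. It is not RustConn's implementation (which is written in Rust); the function names and the sample text are made up for illustration, and in practice the text would be the output of reading /proc/meminfo over the already open session.

```python
# Hypothetical sample of /proc/meminfo output, trimmed to three fields.
SAMPLE_MEMINFO = """\
MemTotal:       16000000 kB
MemFree:         2000000 kB
MemAvailable:    4000000 kB
"""


def parse_meminfo(text):
    """Map each /proc/meminfo key to its numeric value (kB for most fields)."""
    values = {}
    for line in text.splitlines():
        key, _, rest = line.partition(":")
        fields = rest.split()
        if fields:
            values[key.strip()] = int(fields[0])
    return values


def memory_used_percent(text):
    """Share of memory in use, based on MemTotal and MemAvailable."""
    info = parse_meminfo(text)
    used = info["MemTotal"] - info["MemAvailable"]
    return 100.0 * used / info["MemTotal"]


print(f"{memory_used_percent(SAMPLE_MEMINFO):.1f}% used")  # prints "75.0% used"
```

Because only plain text from the remote side is parsed, nothing needs to be installed on the monitored host.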

Haydn Trowell says

Typesetter, the minimalist, local-first Typst editor, gets some quality of life updates with version 0.11.0:

- New app icon

- The preview now automatically sizes itself to fit the window and your display without needing a manual PPI setting

- Invert lightness option for the preview when using dark mode

- The ability to simulate different forms of color blindness in the preview to test document accessibility

- Performance improvements, including reduced memory usage

Install via Flathub (https://flathub.org/apps/net.trowell.typesetter)

Bilal Elmoussaoui announces

oo7-daemon, the server side of the Secret Service provider, has received a new release featuring KDE support, making it compatible with both GNOME and KDE.

GNOME Websites

federico announces

The Code of Conduct page is now generated from the original sources with a beautiful stylesheet. Thanks to Bart for the web app and to the design team for the updated look!

Shell Extensions

storageb reports

Build your own custom menu for the GNOME top bar!

Custom Command Menu is a GNOME extension that lets you build a custom menu to run commands directly from the top bar. Launch apps, run scripts, execute shell commands, and more through a simple, intuitive interface.

Version 13 introduces support for submenu creation, increases the maximum number of entries allowed, and adds compatibility with GNOME 50. This release also includes additional translations for Japanese, Chinese, Portuguese, and Polish.

More information can be found on the project's GitHub page.

Miscellaneous

Sophie (she/her) reports

As a cost-saving measure, git traffic like git clone https://gitlab.gnome.org/GNOME/<repo> is now redirected to our mirror under https://github.com/GNOME/<repo>.

Peter Eisenmann says

Last week the long unmaintained support for Google Drive in gvfs was dropped. If you ever needed motivation to switch to a more privacy-respecting cloud provider, now is as good a time as any.

That's all for this week!

See you next week, and be sure to stop by #thisweek:gnome.org with updates on your own projects!

27 Feb 2026 12:00am GMT

26 Feb 2026

Jussi Pakkanen: Discovering a new class of primes fur the fun of it

There are a lot of prime classes, such as left-truncatable primes, twin primes, Mersenne primes, palindromic primes, emirp primes and so on. The Wikipedia page on primes lists many more. Recently I got to thinking (as one is wont to do) how difficult it would be to come up with a brand new one. The only reliable way to know is to try it yourself.

The basic loop

The method I used was fairly straightforward:

1. Download a list of the first one million primes

2. Look at it

3. Try to come up with a pattern

4. Check if numbers from your pattern show up on OEIS

5. Find out they are not

6. Rejoice

7. Check again more rigorously

8. Realize they are in fact there in a slightly different form

9. Go to 2

Eventually I managed to come up with a prime category that is not in OEIS. Python code that generates them can be found in this repo. It may have bugs (I discovered several in the course of writing this post). The data below has not been independently validated.

Faro primes

In magic terminology, a Faro shuffle is one that cuts a deck of cards in half and then interleaves the results. It is also known as a perfect shuffle. There are two different types of Faro shuffle, an in shuffle and an out shuffle. They have the peculiar property that if you keep repeating the same operation, eventually the deck returns to the original order.

A prime p is a Faro prime if all numbers obtained by applying Faro shuffles (either in or out shuffles, but only one type) to its decimal representation are also prime. A Faro prime can be a Faro in prime, a Faro out prime or both. As an example, 19 is a Faro in prime, because a single in shuffle returns it to its original form. It is not a Faro out prime, because out shuffling it produces 91, which is not a prime (91 = 7*13).
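The definition can be sketched in a few lines of Python. This is not the code from the repo mentioned above, just an illustration. The shuffle directions follow the examples in this post (an in shuffle keeps the first digit in front, so 19 stays 19), which appears to be the reverse of how card-magic literature usually names the two shuffles. Reading shuffled strings with leading zeros as plain integers is an assumption of the sketch, and all function names are made up.

```python
def is_prime(n):
    """Trial division; fine for the small numbers used here."""
    if n < 2:
        return False
    if n % 2 == 0:
        return n == 2
    d = 3
    while d * d <= n:
        if n % d == 0:
            return False
        d += 2
    return True


def in_shuffle(digits):
    """Interleave the two halves, keeping the first digit in front."""
    half = len(digits) // 2
    return "".join(a + b for a, b in zip(digits[:half], digits[half:]))


def out_shuffle(digits):
    """Interleave the two halves, starting with the second half."""
    half = len(digits) // 2
    return "".join(b + a for a, b in zip(digits[:half], digits[half:]))


def orbit(digits, shuffle):
    """All strings reached by repeating `shuffle` until the start recurs."""
    seen = [digits]
    current = shuffle(digits)
    while current != digits:
        seen.append(current)
        current = shuffle(current)
    return seen


def is_faro_prime(p, shuffle):
    """True if p and every number in its shuffle orbit are prime."""
    digits = str(p)
    if len(digits) % 2:
        raise ValueError("only even digit counts are handled")
    # Assumption: a leading zero is dropped by int(), e.g. "0131" -> 131.
    return all(is_prime(int(d)) for d in orbit(digits, shuffle))


print(is_faro_prime(19, in_shuffle))   # True
print(is_faro_prime(19, out_shuffle))  # False, because 91 = 7 * 13
print(orbit("151673", in_shuffle))
```

The loop in `orbit` always terminates, since a shuffle only permutes digit positions and repeating a permutation eventually restores the starting string.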

The testing for this was not rigorous, but at least OEIS does not recognize it.

Statistics

I only used primes with an even number of digits. For an odd number of digits you'd first need to decide how in and out shuffles should work. This is left as an exercise to the reader.

Within the first one million primes, there are 7492 in primes, 775 out primes and 38 that are both in and out primes.

The numbers with one or two digits are not particularly interesting. The first "actual" Faro in prime is 1103. It can be in shuffled once yielding 1013.

For the first out shuffle you need to go to 111533, which shuffles to 513131 and 153113.

The first prime longer than 2 digits that qualifies as both a Faro in and out prime is 151673. Its in shuffle primes are 165713, 176153 and 117563. The corresponding out shuffle primes are 151673, 617531 and 563117.

Within the first one million primes the largest in shuffle prime is 15484627, the largest out shuffle prime is 11911111 and the largest in and out prime is 987793.
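The definition can be sketched in a few lines of Python. This is not the code from the repo mentioned above, only an illustration with made-up function names. The naming of the two shuffles is inferred from the examples in this post (an in shuffle turns 1103 into 1013, so the first digit stays in place), and rejecting results with a leading zero is an assumption, since the post does not say how those are treated.

```python
def _halves(digits: str) -> tuple[str, str]:
    """Cut the digit string into two equal halves."""
    if len(digits) % 2 != 0:
        raise ValueError("only even digit counts are handled here")
    half = len(digits) // 2
    return digits[:half], digits[half:]


def faro_in(digits: str) -> str:
    """'In' shuffle as this post uses the term: the first digit stays first."""
    top, bottom = _halves(digits)
    return "".join(t + b for t, b in zip(top, bottom))


def faro_out(digits: str) -> str:
    """'Out' shuffle as this post uses the term: the second half leads."""
    top, bottom = _halves(digits)
    return "".join(b + t for t, b in zip(top, bottom))


def is_prime(n: int) -> bool:
    """Plain trial division; fine for the handful of numbers checked here."""
    if n < 2:
        return False
    if n % 2 == 0:
        return n == 2
    divisor = 3
    while divisor * divisor <= n:
        if n % divisor == 0:
            return False
        divisor += 2
    return True


def shuffle_orbit(n: int, shuffle) -> list[str]:
    """All digit strings reached by repeating one shuffle until it cycles."""
    start = str(n)
    orbit = [start]
    current = shuffle(start)
    while current != start:
        orbit.append(current)
        current = shuffle(current)
    return orbit


def is_faro_prime(n: int, shuffle) -> bool:
    """True if every number in the orbit (n itself included) is prime."""
    if len(str(n)) % 2 != 0:
        return False  # odd digit counts are skipped, as in the post
    orbit = shuffle_orbit(n, shuffle)
    # Assumption: a shuffled result with a leading zero disqualifies n.
    return all(s[0] != "0" and is_prime(int(s)) for s in orbit)


print(shuffle_orbit(151673, faro_in))
print(shuffle_orbit(151673, faro_out))
print(is_faro_prime(19, faro_in), is_faro_prime(19, faro_out))
```

The two orbits printed for 151673 are the in and out shuffle sequences listed above, each starting from the number itself.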

Further questions

As is typical in maths, finding out something immediately raises more questions. For example:

Why are there so many fewer out primes than in primes?

How would this look for primes with an odd number of digits in them?

Is it possible to build primes by a mixture of in and out shuffles?

Most of the primes do not complete a "full shuffle", that is, they repeat faster than a deck of fully unique playing cards would. For any number n, can you find a Faro prime that requires that many shuffles, or is there an upper limit for the number of shuffles?
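To make "full shuffle" concrete, the sketch below counts how many repetitions of one shuffle bring a sequence back to its starting order, first for distinct cards and then for a digit string with repeated digits. The helper names are made up for this illustration, and first_stays=True corresponds to what this post calls an in shuffle (inferred from its 1103 to 1013 example).

```python
def interleave(cards: list, first_stays: bool) -> list:
    """One perfect shuffle of an even-length sequence."""
    if len(cards) % 2 != 0:
        raise ValueError("only even lengths are handled here")
    half = len(cards) // 2
    top, bottom = cards[:half], cards[half:]
    pairs = zip(top, bottom) if first_stays else zip(bottom, top)
    return [card for pair in pairs for card in pair]


def period(cards, first_stays: bool) -> int:
    """Number of shuffles needed to return to the starting order."""
    start = list(cards)
    current = interleave(start, first_stays)
    count = 1
    while current != start:
        current = interleave(current, first_stays)
        count += 1
    return count


# Eight distinct cards versus an eight-digit number with many repeats.
full = period(range(8), first_stays=False)
short = period("11911111", first_stays=False)
print(full, short)  # prints "6 2"
```

With eight distinct cards this shuffle needs six repetitions, while 11911111 is back to itself after two, because only the position of the single 9 matters.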

26 Feb 2026 10:31pm GMT

25 Feb 2026

Matthias Clasen: An update on SVG in GTK

In my last post on this topic, I explained the history of SVG in GTK, and how I tricked myself into working on an SVG renderer in 2025.

Now we are in 2026, and on the verge of the GTK 4.22 release. A good time to review how far we've come.

Testsuites

While working on this over the last year, I was constantly looking for good tests to check my renderer against.

Eventually, I found the resvg testsuite, which has broad coverage and is refreshingly easy to work with. In my unscientific self-evaluation, GtkSvg passes 1250 of the 1616 tests in this testsuite now, which puts GTK one tier below where the web browsers are. It would be nice to catch up with them, but that will require closing some gaps in our rendering infrastructure to support more complex filters.

The resvg testsuite only covers static SVG.

Another testsuite that I've used a lot is the much older SVG 1.1 testsuite, which covers SVG animation. GtkSvg passes most of these tests as well, which I am happy about - animation was one of my motivations when going into this work.

Benchmarks

But doing a perfect job of rendering complex SVG doesn't do us much good if it slows GTK applications down too much. Recently, we've started to look at the performance implications of SVG rendering.

We have a 'scrolling wall of icons' benchmark in our gtk4-demo app, which naturally is a good place to test the performance impact of icon rendering changes. When switching it over to GtkSvg, it initially dropped from 60fps to around 40 on my laptop. We've since done some optimizations and regained most of the lost fps.

The performance impact on typical applications will be much smaller, since they don't usually present walls of icons in their UI.

Stressing our rendering infrastructure with some more demanding content was another motivation when I started to work on SVG, so I think I can declare success here.

Content Creators

The new SVG renderer needs new SVGs to take advantage of the new capabilities. Thankfully, Jakub Steiner has been hard at work to update many of the symbolic icons in GNOME.

Others are exploring what we can do with the animation capabilities of the new renderer. Expect these things to start showing up in apps over the next cycle.

Future work

Feature-wise, GtkSvg is more than good enough for all our icon rendering needs, so making it cover more obscure SVG features may not be a big priority in the short term.

GtkSvg will be available in GTK 4.22, but we will not use it for every SVG icon yet - we still have a much simpler symbolic icon parser which is used for icons that are looked up by icon name from an icontheme. Switching over to using GtkSvg for everything is on the agenda for the next development cycle, after we've convinced ourselves that we can do this without adverse effects on performance or resource consumption of apps.

Ongoing improvements of our rendering infrastructure will help ensure that that is the case.

Where you can help

One of the most useful contributions is feedback on what does or doesn't work, so please: try out GtkSvg, and tell us if you find SVGs that are rendered badly or with poor performance!

Update: GtkSvg is an unsandboxed, in-process SVG parser written in C, so we don't recommend using it for untrusted content - it is meant for trusted content such as icons, logos and other application resources. If you want to load a random SVG of unknown provenance, please use a proper image loading framework like glycin (but still, tell us if you find SVGs that crash GtkSvg).

Of course, contributions to GtkSvg itself are more than welcome too. Here is a list of possible things to work on.

If you are interested in working on an application, the simple icon editor that ships with GTK really needs to be moved to its own project and under separate maintainership. If that sounds appealing to you, please get in touch.

If you would like to support the GNOME Foundation, whose infrastructure and hosting GTK relies on, please donate.

25 Feb 2026 12:07pm GMT

23 Feb 2026

Sam Thursfield: Status update, 23rd February 2026

It's moments of change that remain striking in your memory when you look back. I feel like I'm in a long period of change, and if like me you participate in the tech industry and open source then you probably feel the same. It's going to be a wild time to look back on.

As humans we're naturally drawn to exciting new changes. It's not just the tech industry. The Spanish transport minister recently announced ambitious plans to run trains at record speeds of 350km/h. Then two tragic accidents happened, apparently due to careless infrastructure maintenance. It's easy (and valid) to criticise the situation. But I can sympathise too. You don't see many news reports saying "Infrastructure is being maintained really well at the moment and there haven't been any accidents for years". We all just take that shit for granted.

This is a "middle aged man states obvious truths" post, so here's another one we forget in the software world: Automating work doesn't make it go away. Let's say you automate a 10 step release process which takes an hour to do manually. That's pretty great, now at release time you just push a button and wait. Maybe you can get on with some other work meanwhile - except you still need to check the automation finished and the release published correctly. What if step 5 fails? Now you have to drop your other work again, push that out of your brain and try to remember how the release process worked, which will be hazy enough if you've stopped ever doing releases manually.

Sometimes I'll take an hour of manual work each month in preference to maintaining a complex, bespoke automation system.

Over time we do build great tools and successfully automate bits of our jobs. Forty or fifty years ago, most computer programmers could write assembly code and do register allocation in their heads. I can't remember the last time I needed that skill. The C compiler does it for me.

The work of CPU register allocation hasn't gone away, though. I've outsourced the cognitive load to researchers and compiler teams working at places like IBM / Red Hat, embecosm and Apple who maintain GCC and LLVM.

When I first got into computer programming, at the tail end of the "MOV AX, 10h; INT 13h" era, part of the fun was this idea you could have wild ideas and simply create them yourself piece by piece, making your own tools, and pulling yourself up by your bootstraps. Look at this teenager who created his own 3D game engine! Look at this crazy dude who made an entire operating system! Now I'm gonna do something cool that will change the world, and then ideally retire.

It took me the longest time to see that this "rock star" development model is all mythology. Just like actual rock stars, in fact. When a musician appears with stylish clothes and a bunch of great songs, the "origin story" is a carefully curated myth. The music world is a diverse community of artists, stylists, mentors, coaches, co-writers, producers, technicians, drivers, promoters, photographers, session musicians and social media experts, constantly trading our skills and ideas and collaborating to make them a reality. Nobody just walks out of their bedroom onto a stage and changes the world. But that doesn't make for a good press release does it?

The AI bubble is built on this same myth of the individual creator. I think LLMs are a transformative tool, and computer programming will never be the same; the first time you input some vaguely worded English prompt and get back a working unit test, you see a shining road ahead paved with automation, where you can finally turn ideas into products within days or weeks instead of having to chisel away at them painfully for years.

But here's the reality: our monkey brains are still the same size, and you can't If your new automation is flaky, then you're going to spend as much time debugging and fixing things as you always did. Doing things the old way may take longer, but the limiting factor was never our typing speed, but our capacity to understand and communicate new ideas.

"The future belongs to idea guys who can just do things". No it doesn't mate, the past, present and future belong to diverse groups of people whose skills and abilities complement each other and who have collectively agreed on some sort of common goal. But that idea doesn't sell very well.

If and when we do land on a genuinely transformative new tool - something like a C compiler, or hypertext - then I promise you, everyone's going to be on it in no time. How long did it take for ChatGPT to go from 0 to 1 billion users worldwide?

In all of this, I've had an intense few months in a new role at Codethink. It's been an intense winter too - by some measures Galicia is literally the wettest place on earth right now - so I guess it was a good time to learn new things. Since I rejoined back in 2021 I've nearly always been outsourced on different client projects. What I'm learning now is how the company's R&D division works.

23 Feb 2026 9:14am GMT

21 Feb 2026

Federico Mena-Quintero: Librsvg got its first AI slop pull request

You all know that librsvg is developed in gitlab.gnome.org, not in GitHub. The README prominently says, "PLEASE DO NOT SEND PULL REQUESTS TO GITHUB".

So, of course, today librsvg got its first AI slop pull request and later a second one, both in GitHub. Fortunately (?) they were closed by the same account that opened them, four minutes and one minute after opening them, respectively.

I looked.

There is compiled Python code (nope, that's how you get another xz attack).

There are uncomfortably large Python scripts with jewels like subprocess.run("a single formatted string") (nope, learn to call commands correctly).
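The offending scripts are not quoted here, so the following is only a sketch of what calling commands correctly usually means in Python: pass the program and its arguments as a list, so that no shell ever parses them. The file name and the child command are made up for illustration.

```python
import subprocess
import sys

# Hypothetical file name containing shell metacharacters.
filename = "icon; echo oops.svg"

# The child process simply echoes its first argument back.
child = [sys.executable, "-c", "import sys; print(sys.argv[1])", filename]

# Each list element reaches the child as one argument, untouched.
result = subprocess.run(child, check=True, capture_output=True, text=True)
echoed = result.stdout.strip()
print(echoed)
```

Built as a single formatted string, the same call would on POSIX systems only run with shell=True, and the shell would then treat the ";" in the file name as a command separator.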

There are two vast JSON files with "suggestions" for branches to make changes to the code, with jewels like:

- Suggestions to call standard library functions that do not even exist. The proposed code does not even use the nonexistent standard library function.

- Adding enum variants to SVG-specific constructs for things that are not in the SVG spec.

- Adding incorrect "safety checks". assert!(!c_string.is_null()) to be replaced by if c_string.is_null() { return ""; }.

- Fix a "floating-point overflow"... which is already handled correctly, and with a suggestion to use a function that does not exist.

- Adding a cache for something that does not need caching (without an eviction policy (so it is a memory leak)).

- Parallelizing the entire rendering process through a 4-line function. Of course this does not work.

- Adding two "missing" filters from the SVG spec (they are already implemented), and the implementation is todo!().

It's all like that. I stopped looking, and reported both PRs for spam.

21 Feb 2026 1:13am GMT

20 Feb 2026

This Week in GNOME: #237 Article Rendering

Update on what happened across the GNOME project in the week from February 13 to February 20.

GNOME Circle Apps and Libraries

NewsFlash feed reader ↗

Follow your favorite blogs & news sites.

Jan Lukas announces

I released Newsflash 5.0-beta1 to the beta channel of flathub. The headlining feature is a new native article view rendering everything with the help of Gtk. This also made it possible to overhaul everything media related (images, videos and audio). It should now more closely resemble what can be found in fractal or tuba.

Jan Lukas reports

In related news: Newsflash now has a brother. The core newsflash library was used to create a TUI application by Christopher Auer. So if you like what Newsflash has to offer but prefer to stay in the terminal, check out eilmeldung: https://github.com/christo-auer/eilmeldung

Third Party Projects

Diego Povliuk announces

Cine, the video player, got some quality of life updates on version 1.0.9:

- The volume is now always restored on startup

- Icon indicators for mute and subtitles on/off

- Toggle elapsed/remaining time

- Option to add folder to playlist

- Unmute when changing volume

- Hold click to double playback speed

Install via Flathub

ranfdev announces

Lobjur has just received a big update!

- It now works as a client for multiple link aggregators: Lobsters and Hacker News.

- An integrated WebView has been added

- The UI has been improved, with a new split layout

- The comments are now displayed with complex formatting, converting HTML tags to GTK widgets

Check it out on Flathub

Anton Isaiev announces

I've released RustConn 0.8.9, a major update driven largely by user feedback and feature requests. This version expands protocol support, improves security, and refines the user experience.

Key highlights:

- New Protocols: Added full Kubernetes support (shell access/exec), Serial Console (via picocom), and SFTP integration (via URI or Midnight Commander).

- Virtualization: Added support for importing Virt-Viewer (.vv) files (Proxmox/oVirt) and SPICE proxy tunneling.

- Connectivity & Security: Added FIDO2/Security Key support for SSH, Wake on LAN (WoL) GUI, and improved RDP rendering on HiDPI displays.

- UX & i18n: Added Regex-capable terminal search and translations for 14 languages (including UK, DE, FR, IT, PL).

https://github.com/totoshko88/RustConn https://flathub.org/apps/io.github.totoshko88.RustConn

Phosh ↗

A pure wayland shell for mobile devices.

Guido says

Phosh 0.53 is out:

Phosh's overview now shows a splash for launching apps and the Polkit dialog has more user details. The on-screen keyboard scales better in landscape and adds new completion dictionaries. The Wayland compositor phoc got initial support for the xx-cutout-v1 Wayland protocol to inform clients about notches and display cutouts.

There's more - see the full details here.

Parabolic ↗

Download web video and audio.

Nick reports

Parabolic is at V2026.2.4 this week with new features and many bug fixes!

Here's the full changelog:

- Added a search bar for subtitles in the add download dialog

- Added the ability to specify extra yt-dlp arguments for the discovery process

- Added the ability to specify extra yt-dlp arguments for the download process

- Fixed an issue where pausing and resuming downloads did not work correctly

- Fixed an issue where video passwords were not working when specified

- Fixed an issue where yt-dlp progress was not always displayed correctly

- Fixed an issue where instagram stories did not download correctly

- Fixed an issue where the encoder field was not properly cleared on FLAC files when remove source data was enabled

- Fixed an issue where Parabolic would not detect translation languages correctly

- Fixed an issue where Parabolic would use a previous save folder even if it no longer existed

- Fixed an issue where downloaded yt-dlp versions were not getting executable permissions on Linux

- Fixed an issue where Parabolic wouldn't open on Linux

- Fixed an issue where playlist video downloads may not have had sound on Windows

Crosswords ↗

A crossword puzzle game and creator.

jrb says

I've released GNOME Crosswords 0.3.17. This is a strong release, featuring both a new interface for the game and multiple features for the editor. Read the announcement here.

Crosswords

The game got a full UI refresh in preparation for GNOME Circle.

- The game sports a new layout and design, including a sidebar for easier navigation

- The welcome screen was cleaned up

- A hints dialog was added to help players learn game controls

- Fullscreen support was redone

- Sorting and filtering were added to puzzle pickers

- We improved the adaptive functionality and accent color support

- Many polishing touches and bug fixes

Crosswords Editor

The editor also got some quality of life improvements as a result of user testing.

- The new puzzle dialog got a complete overhaul, including the addition of almost a thousand additional templates

- This dialog also gained a theme word editor to preset crossword themes before grid creation.

- A new wordplay indicator dialog was added to help write cryptic clues

- Printing support was added, so the final product can be printed.

- Many polishing touches and bug fixes

Finally, a request for TWIG readers. GNOME Crosswords could really benefit from improved artwork, and we've reached the limits of my Inkscape skills. If you'd like to get involved with artwork for GNOME, this could be a great place to begin. Drop me a line if you're interested!

Shell Extensions

Miklós Zsitva says

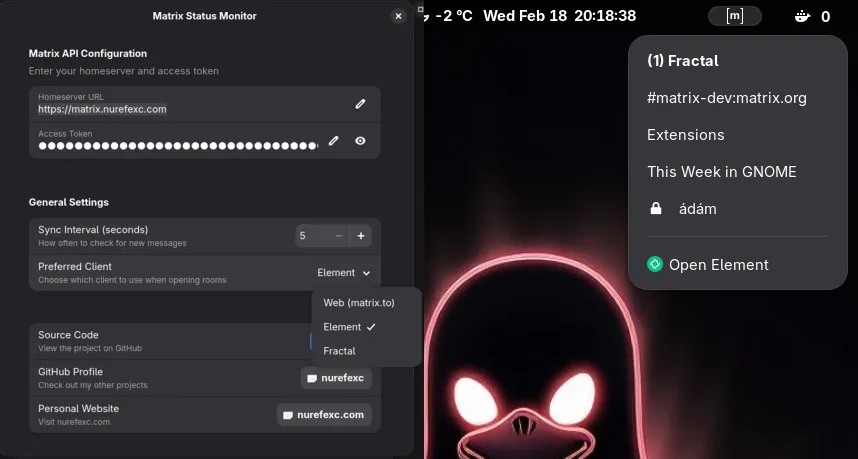

Matrix Status Monitor v5

Matrix Status Monitor v5 has been released, focusing on deeper desktop integration and security awareness.

New features include:

- End-to-End Encryption Indicators: Encrypted rooms now feature a lock icon in the room list for immediate security verification.

- Selectable Matrix Clients: Users can now toggle between Element and Fractal in the preferences. The extension handles URI dispatching to ensure the correct application is launched.

- Settings UI Update: A refined preference window for easier API configuration and client selection.

- Performance: Optimized asynchronous /sync calls using Libsoup3 to minimize impact on Shell performance.

Extension Link: https://extensions.gnome.org/extension/9328/matrix-status-monitor/ Source Code: https://github.com/nurefexc/matrix-status

Just Perfection says

The extensions porting guide for GNOME Shell 50 has been released and we are now accepting version 50 packages on EGO.

Miscellaneous

GNOME OS ↗

The GNOME operating system, development and testing platform

Ada Magicat ❤️🧡🤍🩷💜 reports

For a few months now, the gnome-build-meta repository - home of GNOME OS and the GNOME flatpak runtime - has been generating and publishing debug tarballs to our new debuginfod server. This week, Jordan added the final bits of configuration needed to use this infrastructure.

As a result, debugging tools (like gdb) running in GNOME OS and the upcoming version 50 release of the GNOME flatpak SDK can dynamically fetch the debug symbols they need at that moment, no longer requiring developers to download and update large debug extensions on their system for debugging.

GNOME Foundation

Allan Day announces

A new GNOME Foundation update post is available this week, covering highlights from the past two weeks. Notable items include the LAS 2026 announcement and a report from the latest Board of Directors meeting.

That's all for this week!

See you next week, and be sure to stop by #thisweek:gnome.org with updates on your own projects!

20 Feb 2026 12:00am GMT

19 Feb 2026

Allan Day: GNOME Foundation Update, 2026-02-19

Welcome to another GNOME Foundation update post, covering highlights from the past two weeks (this week and last week). It's been a busy time, particularly due to conference planning and our upcoming audit - read on to find out more!

Linux App Summit 2026

We were thrilled to be able to announce the location and dates of this year's Linux App Summit this week. The conference will happen in Berlin on the 16th and 17th of May, at Betahaus Berlin. More information is available on the LAS website.

As usual, we are very pleased to be collaborating with KDE on this year's LAS. Our partnership on LAS has been a real success that we hope to continue.

Travel sponsorship for LAS 2026 is available for Foundation members through the Travel Committee, so head over to the travel page if you would like to attend and need financial support.

February's Board meeting

The Board of Directors had its regular monthly meeting last week, on 9th February. Highlights from the meeting included:

- We finally caught up on our minutes, approving the minutes from a total of nine meetings. This was a big relief, and hopefully we will be able to stay on top of the minutes now that we're caught up.

- The Board was thrilled to formally add Nirbheek Chauhan as a member of the Travel Committee. Many contributors will know Nirbheek as a longstanding GStreamer hacker, and he's already been doing some great work to help with travel. Thanks Nirbheek!

- The Board approved a new document retention and destruction policy, which is something that we are encouraged to have by regulators.

- I gave an update on the operational highlights from the last month, including fundraising, conference planning, and audit preparation.

- The Board considered a proposal for an exciting new program that we're hoping to launch very soon. More details to follow soon.

The next Board meeting is scheduled for March 9th.

Audit submissions

As I've mentioned in previous updates, the GNOME Foundation is due to be audited very soon. This is a routine occurrence for non-profits like us, but this is our first formal audit, so there's a good deal of learning and setup to be done.

Last week was the deadline to submit all the documentation for the audit, which meant that many of us were extremely busy finalising numbers, filling in spreadsheets, and tidying up other documentation ready to send it all to the auditors.

Our finance team *really* went the extra mile for us to get everything ready on time, so I'd like to give them a huge thank you for helping us out.

The audit inspection itself will happen in the first week of March, so preparations continue, as we assemble and organise our records, update our policies, and so on.

GUADEC 2026

Planning for this summer's conference has continued over the past two weeks. In case you missed it, the location and dates have been announced, and accommodation bookings are open at a reduced rate. In the background we are gearing up to open the call for papers, and the sponsorship effort is on its way. Now is a good time to start thinking about any talk proposals that you'd like to submit.

Membership certificates

A cool community effort is currently underway to provide certificates for GNOME Foundation members. This is a great idea in my opinion, as it will allow contributors to get official recognition which can be used for job applications and so on. More volunteers to help out would definitely be welcome.

That's it for this week. Thanks for reading, and feel free to ask questions in the comments.

19 Feb 2026 3:01pm GMT

18 Feb 2026

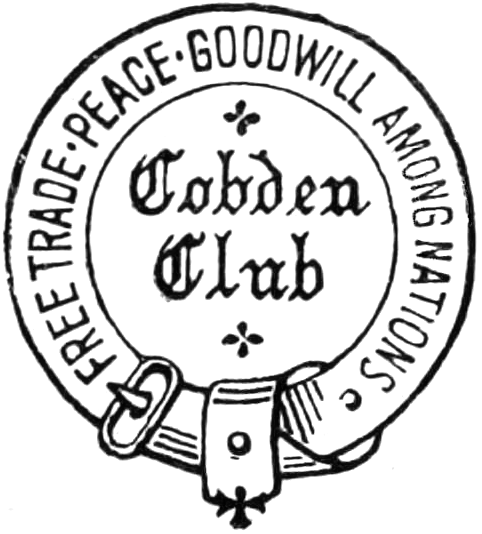

Andy Wingo: free trade and the left, bis: from cobden to lenin

A week ago we discussed free trade, and specifically took a look at the classical mechanism by which free trade is supposed to improve overall outcomes, as measured by GDP.

As I described it, the value proposition of free trade is ambiguous at best: there is an intangible sense that a country might have a higher GDP with lower trade barriers, but with a side serving of misery as international competition forces some local industries to close, and without any guarantee about how that trade advantage would be distributed among the population. Why bother? And why is my news feed full of EU commissioners signing new trade agreements? Where are these ideas coming from?

stave 2

I asked around, placed some orders, and a week later a copy of Marc-William Palen's Pax Economica came in the mail. I was hoping for a definitive, reasoned argument for or against free trade, from a leftist's perspective (whatever that means). The book was both more and less than that. Less, in the sense that its narrative is most tightly woven in the century before the end of the second World War, becoming loose and frayed as it breezes through decolonization, the rise of neoliberalism, the end of history, and what it describes as our current neomercantilist moment. Less, also, in that Palen felt no need to summarize the classic economic arguments for free trade, leaving me to clumsily do so in the previous stave. And yet, the story it tells fills in gaps in my understanding that I didn't even know I had.

To pick up one thread from the book, let's go back to 1815. British parliament passes the Corn Laws, establishing a price floor for imported grain. This trade barrier essentially imposes a significant tax on all people who eat, to the profit of a small number of landowners. A movement to oppose these laws develops over the next 30 years, with Richard Cobden as its leading advocate. One of the arguments of the Anti-Corn Law League, which was actually a thing, is that cheaper food is good for workers; though wages might decline as bosses realize they don't need to pay so much to keep their workers alive, relatively speaking workers will do better. More money left over at the end of the month also means more demand for other manufactured products, which is good for growing industry.

In the end, bad harvests in 1845 led to shortages and famine (yes, that one) and eventually a full repeal of the laws in 1846. Perhaps the Anti-Corn Law League's success was inevitable: a bad harvest year is a stochastic certainty, and not having enough food is the kind of problem no government can ignore. In any case, the episode does prove the Corn Laws to be a front in a class war, and their repeal was a victory for the left, even if it occurred under a Conservative government, and even if the campaign was essentially middle-class Liberal in character.

The repeal campaign was not just about domestic cost of living, however. Its exponents argued that free trade among nations was an essential step to a world of peaceful international cooperation. Palen's book puts Cobden in context by comparison to Friedrich List, who, inspired by a stint in America in the 1820s, starts from the premise that for a nation to be great, it needs an empire of colonies to exploit, and to conquer and defend colonies, it needs a developed domestic industry and navy; and for a nation to develop its own industry, it needs protectionism. The natural state of empire is not exactly one of free trade with its neighbors.

The "commercial peace" movement that Palen describes cuts List's argument short at "empire": because there is no empire without war, a peace movement should scratch away at the causes of war, and the causes of the causes; a world living in pax economica would avoid imperial conflict by tying nations together through trade. It sounds idealistic, and it is, but it's easy to forget that today we wage war through trade also: blockades and sanctions are often followed by bombs. Palen's book draws clear lines from Cobdenism through such disparate groups as women's peace societies, Christian internationalists, pre-war German socialists, and Lenin himself.

Marx understood history as a process of development, consisting of stages through which a society must necessarily pass on its way to socialism. This allies him with capitalism in many ways; he viewed free trade as a step towards a higher form of capitalism, which would necessarily lead to socialism. This, to me, is not a convincing argument in 2026: not only has the mechanistic vision of history failed to fruit, but its mechanism of plant closures and capital flight can be cruel and hard to campaign for politically. And yet, I think we do need a healthy dose of internationalism to remedy the ills of the present day: a jolt of ideals and even idealism to remind us that we are all travelling together on this spaceship Earth, and that those on the other side of a political line are just as much our brothers and sisters as those on "our" side.

i went seeking clarity

When you tend Marxist, you know in your bones that although the road to socialism is rough and winding, the winds of history are always at your back; there is an in-baked inevitability of success that softens defeat. There is something similar in the Christian and feminist narrative strands that Palen weaves: a sense not that victory is inevitable, at least in this lifetime, but that fighting for it is a moral imperative, and that God is on your side. The campaign for free trade was a means to a moral end, one of international peace and shared prosperity. And this, in 2026, sounds... good, actually?

Again from our 2026 perspective, I cannot help but agree that a trade barrier is often an act of war; preliminary, yes, but on the spectrum. I have had enough freedom fries in my life to have developed an allergy to anything that tastes of my-side-of-the-line-is-better-than-yours. Though I have not yet read Klein and Pettis's deliciously titled Trade Wars are Class Wars, I do know that among the 1.5 million people who died as a result of the sanctions on Iraq in the 1990s, Saddam Hussein was not on the list. Sometimes I feel like we learned the lessons of Cobdenism backwards: in order to keep the people starving, we must impose anew the Corn Laws.

Palen's book leaves me with one doubt, and one big question. The doubt is, to what extent do the lessons of the early 1800s apply today? Ricardo's contemporary comparative advantage theories presupposed that capital was relatively fixed in space; nowadays this is much less the case. The threat of moving the plant elsewhere is always present in all union drives everywhere. Though history rhymes, it does not repeat; it will take some creativity to transplant pax economica to the soils of the 21st century.

The bigger question, though, is as regards the morality of protectionism as practiced by more and less developed economies: when is it morally right for a country to erect trade barriers? Palen's book does not pretend to answer this question. And yet, this issue was foremost in our minds, as we shut down Seattle in 1999, as we died in Genoa in 2001. (Forgive the collectivism, if you aren't of this tribe (yet?), but it was a lived experience.) Free trade was a moral cause in 1835; how did it become immoral in 1995, at least to us?

world without end

Well. To answer that question, we need a history that picks up where Palen leaves off, and we have something like it in Quinn Slobodian's Globalists, which we will look at next time. But before we go, two reflections.

One, in Europe we have kept the Corn Laws on the books, in a way, in the form of the EU Common Agricultural Policy (CAP). In France the dominant discourse is very much in opposition to the free trade agreement with Mercosur, and the main reason is the threat to French farmers. The tradeoff to get the Mercosur agreement over the line was additional subsidies under the CAP, which are a form of trade barrier. And yet, the way the CAP is structured allocates most of the money in proportion to the surface area of a farm, which is to say, to the largest agribusinesses and to the largest landowners. Greenpeace just put out an excellent briefing arguing that the CAP is just a subsidy to the heirs of the Duchess of Alba and their ilk. Again, are we running the 19th century in reverse?

Secondly, and harder to explain... in the 2000s I listened a lot to an anarchist radio show hosted by Lyn Gerry, Unwelcome Guests. (Have you heard the eponymous tune? It makes me shiver every time.) Anyway I remember one episode which discussed the gift economy and hunter-gatherer economics, in which a researcher asked a member of that community what he would do if he came into a lot of food at one time: the response, as I recall, was that he would store it "in his brother". He would give it to others. One day, if he needed it, they would give to him.

I know that our world does not work this way, but there is an element of truth here, in that it's not reasonable for France to grow everything that it eats, to never trade what it grows, to make all its own solar panels, to write all software used within its borders. We live richer lives when we share and learn from each other, without regard to which side of the line our home is on.

next

Still here? Gosh me too. Next time we will look at what the kids call the "1900s" and perhaps approach present day. Until then, commercial peace be with you!

18 Feb 2026 10:25pm GMT

Adrian Vovk: GNOME OS Hackfest @ FOSDEM 2026

For a few days leading up to FOSDEM 2026, the GNOME OS developers met for a GNOME OS hackfest. Here are some of the things we talked about!

Stable

The first big topic on our to-do list was GNOME OS stable. We started by defining the milestone: we can call GNOME OS "stable" when we settle on a configuration that we're willing to support long-term. The most important blocker here is systemd-homed: we know that we want the stable release of GNOME OS to use systemd-homed, and we don't want to have to support pre-homed GNOME OS installations forever. We discussed the possibility of building a migration script to move people onto systemd-homed once it's ready, but it's simply too difficult and dangerous to deploy this in practice.

We did, however, agree that we can already start promoting GNOME OS a bit more heavily, provided that we make very clear that this is an unstable product for very early adopters, who would be willing to occasionally reinstall their system (or manually migrate it).

We also discussed the importance of project documentation. GNOME OS's documentation isn't in a great state at the moment, and this makes it especially difficult to start contributing. BuildStream, which is GNOME OS's build system, has a workflow that is unfamiliar to most people that may want to contribute. Despite its comprehensive documentation, there's no easy "quick start" reference for the most common tasks and so it is ultimately a source of friction for potential contributors. This is especially unfortunate given the current excitement around building next-gen "distroless" operating systems. Our user documentation is also pretty sparse. Finally, the little documentation we do have is spread across different places (markdown committed to git, GitLab Wiki pages, the GNOME OS website, etc.) and this makes it very difficult for people to find it.

Fixing /etc

Next we talked about the situation with /etc on GNOME OS. /etc has been a bit of an unsolved problem in the UAPI group's model of immutability: ideally all default configuration can be loaded from /usr, and so /etc would remain entirely for overrides by the system administrator. Unfortunately, this isn't currently the case, so we must have some solution to keep track of both upstream defaults and local changes in /etc.

So far, GNOME OS had a complicated set-up where parts of /usr would be symlinked into /etc. To change any of these files, the user would have to break the symlinks and replace them with normal files, potentially requiring copies of entire directories. This would then cause loads of issues, where the broken symlinks cause /etc to slowly drift away from the changing defaults in /usr.

For years, we've known that the solution would be overlayfs. This kernel filesystem allows us to mount the OS's defaults underneath a writable layer for administrator overrides. For various reasons, however, we've struggled to deploy this in practice.

Modern systemd has native support for this arrangement via systemd-confext, and we decided to just give it a try at the hackfest. A few hours later, Valentin had a merge request to transition us to the new scheme. We've now fully rolled this out, and so the issue is solved in the latest GNOME OS nightlies.

FEX and Flatpak

Next, we discussed integrating FEX with Flatpak so that we can run x86 apps on ARM64 devices.

Abderrahim kicked off the topic by telling us about fexwrap, a script that grafts two different Flatpak runtimes together to successfully run apps via FEX. After studying this implementation, we discussed what proper upstream support might look like.

Ultimately, we decided that the first step will be a new Flatpak runtime extension that bundles FEX, the required extra libraries, and the "thunks" (glue libraries that let x86 apps call into native ARM GPU drivers). From there, we'll have to experiment and see what integrations Flatpak itself needs to make everything work seamlessly.

Abderrahim has already started hacking on this upstream.

Amutable

The Amutable crew were in Brussels for FOSDEM, and a few of them stopped in to attend our hackfest. We had some very interesting conversations! From a GNOME OS perspective, we're quite excited about the potential overlap between our work and theirs.

We also used the opportunity to discuss GNOME OS, of course! For instance, we were able to resolve some kernel VFS blockers for GNOME OS delta updates and Flatpak v2.

mkosi

For a few years, we've been exploring ways to factor out GNOME OS's image build scripts into a reusable component. This would make it trivial for other BuildStream-based projects to distribute themselves as UAPI.3 DDIs. It would also allow us to ship device-specific builds of GNOME OS, which are necessary to target mobile devices like the Fairphone 5.

At Boiling the Ocean 7, we decided to try an alternative approach. What if we could drop our bespoke image build steps, and just use mkosi? There, we threw together a prototype and successfully booted to login. With the concept proven, I put together a better prototype in the intervening months. This prompted a discussion with Daan, the maintainer of mkosi, and we ultimately decided that mkosi should just have native BuildStream support upstream.

At the hackfest, Daan put together a prototype for this native support. We were able to use his modified build of mkosi to build a freedesktop-sdk BuildStream image, package it up as a DDI, boot it in a virtual machine, set the machine up via systemd-firstboot, and log into a shell. Daan has since opened a pull request, and we'll continue iterating on this approach in the coming months.

Overall, this hackfest was extremely productive! I think it's pretty likely that we'll organize something like this again next year!

18 Feb 2026 4:57pm GMT

Andy Wingo: two mechanisms for dynamic type checks

Today, a very quick note on dynamic instance type checks in virtual machines with single inheritance.

The problem is that given an object o whose type is t, you want to check if o actually is of some more specific type u. To my knowledge, there are two sensible ways to implement these type checks.

if the set of types is fixed: dfs numbering

Consider a set of types T := {t, u, ...} and a set of edges S := {<t|ε, u>, ...} indicating that t is the direct supertype of u, or ε if u is a top type. S should not contain cycles and is thus a directed acyclic graph rooted at ε.

First, compute a pre-order and post-order numbering for each t in the graph by doing a depth-first search over S from ε. Something like this:

    def visit(t, counter):
        t.pre_order = counter
        counter = counter + 1
        for u in S[t]:
            counter = visit(u, counter)
        t.post_order = counter
        return counter

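Then at run-time, when making an object of type t, you arrange to store the type's pre-order number (its tag) in the object itself. To test if the object is of type u, you extract the tag from the object and check if (tag - u.pre_order) mod 2^n < u.post_order - u.pre_order, where n is the bit width of the tag, so that the subtraction wraps around as unsigned arithmetic and a tag below u.pre_order fails the test.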

Two notes, probably obvious but anyway: one, you know the numbering for u at compile-time and so can embed those variables as immediates. Also, if the type has no subtypes, it can be a simple equality check.
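As a minimal sketch of the numbering and the range check described above: the Type class, the four-type hierarchy, and the 32-bit tag width are assumptions made up for illustration, and each type carries a children list instead of the edge set S. Masking with MASK stands in for the mod 2^n unsigned subtraction.

```python
class Type:
    """A type in a single-inheritance hierarchy (illustrative only)."""
    def __init__(self, name, parent=None):
        self.name = name
        self.children = []
        self.pre_order = None
        self.post_order = None
        if parent is not None:
            parent.children.append(self)

def visit(t, counter):
    # Pre-order number on entry; post_order is one past the last
    # pre-order number used anywhere in t's subtree.
    t.pre_order = counter
    counter += 1
    for u in t.children:
        counter = visit(u, counter)
    t.post_order = counter
    return counter

WORD_BITS = 32                 # assumed tag width
MASK = (1 << WORD_BITS) - 1    # emulates unsigned wraparound

def is_instance(tag, u):
    # One subtraction and one unsigned comparison: a tag below
    # u.pre_order wraps to a huge value and fails the test.
    return ((tag - u.pre_order) & MASK) < (u.post_order - u.pre_order)

# Hypothetical hierarchy: animal > {dog > puppy, cat}
animal = Type("animal")
dog = Type("dog", animal)
puppy = Type("puppy", dog)
cat = Type("cat", animal)
visit(animal, 0)

print(is_instance(puppy.pre_order, dog))   # a puppy is a dog
print(is_instance(cat.pre_order, dog))     # a cat is not
print(is_instance(animal.pre_order, dog))  # neither is a plain animal
```

For a leaf type such as puppy, post_order - pre_order is 1, so the range check degenerates to the equality test mentioned above.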

Note that this approach applies only if the set of types T is fixed. This is the case when statically compiling a WebAssembly module in a system that doesn't allow modules to be instantiated at run-time, like Wastrel. Interestingly, it can also be the case in JIT compilers, when modeling types inside the optimizer.

if the set of types is unbounded: the display hack

If types may be added to a system at run-time, maintaining a sorted set of type tags may be too much to ask. In that case, the standard solution is something I learned of as the display hack, but whose name is apparently ungooglable. It is described in a 4-page technical note by Norman H. Cohen, from 1991: Type-Extension Type Tests Can Be Performed In Constant Time.

The basic idea is that each type t should have an associated array of supertypes ordered by depth, starting with its top type and ending with t itself. Each t also has a depth, indicating the number of edges between it and its top type. A type u is a subtype of t if u.depth >= t.depth and u[t.depth] = t.

There are some tricks one can do to optimize out the depth check, but it's probably a wash given the check performs a memory access or two on the way. But the essence of the whole thing is in Cohen's paper; go take a look!
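Here is a small sketch of the display technique, under the assumption that each type object holds its supertype array directly; the Type class and the example hierarchy are invented for illustration and are not taken from Cohen's note. The depth comparison requires u to be at least as deep as t before indexing into u's array.

```python
class Type:
    """A type carrying its 'display': the chain of ancestors by depth."""
    def __init__(self, name, parent=None):
        self.name = name
        # Number of edges between this type and its top type.
        self.depth = 0 if parent is None else parent.depth + 1
        # supertypes[i] is the ancestor at depth i; the last entry is
        # the type itself. Built once, when the type is created.
        inherited = () if parent is None else parent.supertypes
        self.supertypes = inherited + (self,)

def is_subtype(u, t):
    # The depth check guards the array access; the lookup is then a
    # single indexed load and an identity comparison.
    return u.depth >= t.depth and u.supertypes[t.depth] is t

# Hypothetical hierarchy: animal > {dog > puppy, cat}
animal = Type("animal")
dog = Type("dog", animal)
puppy = Type("puppy", dog)
cat = Type("cat", animal)

# A type added later needs no renumbering of the existing ones.
kitten = Type("kitten", cat)

print(is_subtype(puppy, animal))  # puppy is two levels below animal
print(is_subtype(kitten, dog))    # same depth as puppy, different branch
```

The cost is one array per type whose length grows with depth, in exchange for never having to touch existing types when a new one appears.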

Jan Vitek notes in a followup paper (Efficient Type Inclusion Tests) that Christian Queinnec discovered the technique around the same time. Vitek also mentions the DFS technique, but as prior art, apparently already deployed in DEC Modula-3 systems. The term "display" was bouncing around in the 80s to describe some uses of arrays; I learned it from Dybvig's implementation of flat closures, who learned it from Cardelli. I don't know though where "display hack" comes from.

That's it! If you know of any other standard techniques for type checks with single-inheritance subtyping, do let me know in the comments. Until next time, happy hacking!

Addendum: Thanks to kind readers, I have some new references! Michael Schinz refers to Yoav Zibin's PhD thesis as a good overview. Alex Bradbury points to a survey article by Roland Ducournau as describing the DFS technique as "Schubert numbering". CF Bolz-Tereick unearthed the 1983 Schubert paper, and it is a weird one. Still, I can't but think that the DFS technique was known earlier; I have a 1979 graph theory book by Shimon Even that describes a test for "separation vertices" that is precisely the same, though it does not mention the application to type tests. Many thanks also to fellow traveller Max Bernstein for related discussions.

18 Feb 2026 4:21pm GMT

16 Feb 2026

Jonathan Blandford: Crosswords 0.3.17: Circle Bound

It's time for another Crosswords release. This is relatively soon after the last one, but I have an unofficial rule that Crosswords is released after three bloggable features. We've been productive and blown way past that bar in only a few months, so it's time for an update.

This round, we redid the game interface (for GNOME Circle) and added content to the editor. The editor also gained printing support, and we expanded support for Adwaita accent colors. In detail:

New Layout

I applied for GNOME Circle a couple years ago, but it wasn't until this past GUADEC that I was able to sit down together with Tobias to take a closer look at the game. We sketched out a proposed redesign, and I've been implementing it for the last four months. The result: a much cleaner look and workflow. I really like the way it has grown.

Overall, I'm really happy with the way it looks and feels so far. The process has been relatively smooth (details), though it's clear that the design team has limited resources to spend on these efforts. They need more help, and I hope that team can grow. Here's how the game looks now:

I really could use help with the artwork for this project! Jakub made some sketches and I tried to convert them to svg, but have reached the limits of my inkscape skills. If you're interested in helping and want to get involved in GNOME Design artwork, this could be a great place to start. Let me know!

Indicator Hints

Time for some crossword nerdery:

One thing that characterizes cryptic crosswords is that their clues feature wordplay. A key part of the wordplay is called an "indicator hint". These hints are a word - or words - that tell you to transform neighboring words into parts of the solution. These transformations could be things like rearranging the letters (anagrams) or reversing them. The example in the dialog screenshot below might give a better sense of how these work. There's a whole universe built around this.

Good clues always use evocative indicator hints to entertain or mislead the solver. To help authors, I install a database of common indicator hints compiled by George Ho and show a random subset. His list also includes how frequently they're used, which can be used to make a clue harder or easier to solve.

Templates and Settability