28 Feb 2026

Fedora People

Kevin Fenzi: misc fedora bits last week of feb 2026

The year is rolling along, and here we are at the end of Feb.

Lots of small day to day items

There were a lot of small day to day investigations and incoming requests, along with a pretty large amount of pull requests for our ansible repo. Since we are in Beta freeze some of them will have to wait, but some we can test out in staging now. It's great to see people submitting fixes and enhancements.

There were also some small fun to debug issues this week, including:

- The https://whatcanidoforfedora.org/ site was sometimes alerting that its SSL cert was expired. Turns out this was caused by that domain having old IPs for 2 proxies that had moved datacenters. So, sometimes the check hit those, timed out, and just assumed the cert was bad. So, it was DNS. :)

- The fedorapeople.org web server started being very slow to respond. Turns out the scrapers were hitting the cgit interface there and downloading xz snapshots of every commit. This caused the server to have to try and compress things over and over again. So, for now I just disabled those links and increased resources on the webserver. Scrapers continue to keep on giving.
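The cert alert in the first item above came from a check that treated a timeout as a bad certificate. Here is a minimal sketch of a check that probes each address separately and reports an unreachable proxy differently from an expired certificate. The function names, the status strings, and the injectable `probe` parameter are illustrative assumptions, not the monitoring actually used in Fedora infrastructure.

```python
import socket
import ssl
from datetime import datetime, timezone
from typing import Callable, Dict, Iterable, Optional


def probe_cert_expiry(hostname: str, ip: str, timeout: float = 5.0) -> datetime:
    """Connect to one specific IP (with SNI for hostname) and return the cert's notAfter."""
    ctx = ssl.create_default_context()
    with socket.create_connection((ip, 443), timeout=timeout) as sock:
        with ctx.wrap_socket(sock, server_hostname=hostname) as tls:
            not_after = tls.getpeercert()["notAfter"]
    return datetime.fromtimestamp(ssl.cert_time_to_seconds(not_after), tz=timezone.utc)


def check_all_addresses(
    hostname: str,
    ips: Iterable[str],
    probe: Callable[..., datetime] = probe_cert_expiry,  # hypothetical hook, eases testing
    now: Optional[datetime] = None,
) -> Dict[str, str]:
    """Return a status per IP so a stale DNS entry is not reported as a cert problem."""
    now = now or datetime.now(timezone.utc)
    results: Dict[str, str] = {}
    for ip in ips:
        try:
            expiry = probe(hostname, ip)
        except ssl.SSLCertVerificationError:
            # Verification failed during the handshake (this includes expired certs).
            results[ip] = "cert-invalid"
        except OSError:
            # Timeouts and refused connections: the address itself is the problem.
            results[ip] = "unreachable"
        else:
            results[ip] = "ok" if expiry > now else "expired"
    return results
```

An address reported as "unreachable" points at DNS or routing rather than at the certificate, which is the distinction the original check was missing.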

Secure boot signing work

Much of my time this week has been spent working on our new secure boot signing workflow. This is really really overdue and something I was hoping to finish mid last year, but things kept coming up and it kept getting pushed back.

The new setup leverages our existing signing infrastructure (sigul) so there's no need for special build hardware anymore. It also removes some constraints in the existing setup allowing us to do something we have wanted for a long time, namely sign aarch64 boot loader artifacts for secure boot.

Kudos to Jeremy Cline for all his work on the code to make this possible. This uses siguldry-bridge, a Rust-based server, to talk to sigul, and hopefully before too long we can replace the sigul server side with the new Rust-based server too.

I got everything deployed and am now able to sign things, but in testing on my aarch64 laptop there's still some issue with grub2 that needs to be sorted out. Hopefully it's something not too difficult to track down and we can move to this new setup after beta freeze once and for all.

comments? additions? reactions?

As always, comment on mastodon: https://fosstodon.org/@nirik/116149442549416772

28 Feb 2026 5:10pm GMT

27 Feb 2026

Tim Waugh: Patchutils 0.4.5 released

I have released version 0.4.5 of patchutils and also built it in Fedora rawhide. This is a stability-focused update fixing compatibility issues and bugs, some of which had been introduced in 0.4.4.

Compatibility Fix: Git Extended Diffs

Version 0.4.4 added support in the filterdiff suite for Git's extended diff format. Git diffs without content hunks (such as renames, copies, mode-only changes and binary files) were included in the output. This broke compatibility with 0.4.3.

For 0.4.5 this functionality is now gated with a --git-extended-diffs=include|exclude parameter. The default for 0.4.x is to exclude files in Git extended diffs with no content. There were also some fixes relating to file numbering for these types of diffs.

Note: in 0.5.x this default will change to include.
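To make the include/exclude behaviour concrete, here is a small sketch of the idea: split a Git diff into per-file sections and keep or drop the sections that carry no content hunks. This is not the patchutils implementation; `split_git_diff`, `has_content_hunk` and `filter_sections` are hypothetical helpers, and the `git_extended_diffs` argument only mirrors the name of the command-line parameter.

```python
from typing import List

SAMPLE = """\
diff --git a/old.txt b/new.txt
similarity index 100%
rename from old.txt
rename to new.txt
diff --git a/main.c b/main.c
index 1111111..2222222 100644
--- a/main.c
+++ b/main.c
@@ -1 +1 @@
-int x;
+int y;
"""


def split_git_diff(text: str) -> List[List[str]]:
    """Group lines into one section per 'diff --git' header."""
    sections: List[List[str]] = []
    for line in text.splitlines():
        if line.startswith("diff --git "):
            sections.append([line])
        elif sections:
            sections[-1].append(line)
    return sections


def has_content_hunk(section: List[str]) -> bool:
    """Pure renames, copies and mode changes have no '@@' hunk header."""
    return any(line.startswith("@@ ") for line in section)


def filter_sections(text: str, git_extended_diffs: str = "exclude") -> List[str]:
    """Return the headers of the file sections that survive the chosen policy."""
    if git_extended_diffs not in ("include", "exclude"):
        raise ValueError("git_extended_diffs must be 'include' or 'exclude'")
    return [
        section[0]
        for section in split_git_diff(text)
        if has_content_hunk(section) or git_extended_diffs == "include"
    ]


print(filter_sections(SAMPLE))             # only the main.c section
print(filter_sections(SAMPLE, "include"))  # rename-only section is kept too
```

With the 0.4.x default of exclude, the rename-only section is dropped, which matches what tools written against 0.4.3 expect to see.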

Status Indicators for grepdiff

Previously grepdiff --status showed ! for all matching files, but now it correctly reports them as additions (+), removals (-) or modifications (!).
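As an illustration of the three status markers, the sketch below classifies a file from its unified diff header lines, assuming the common convention that an added file has /dev/null as its old name and a removed file has /dev/null as its new name. The helper names are hypothetical, and patchutils may use additional heuristics to reach the same answer.

```python
def header_path(line: str) -> str:
    """Return the path from a '--- path<TAB>timestamp' or '+++ path' header line."""
    return line[4:].split("\t", 1)[0].strip()


def file_status(old_header: str, new_header: str) -> str:
    """Map a pair of header lines to '+', '-' or '!' (hypothetical helper)."""
    if header_path(old_header) == "/dev/null":
        return "+"  # nothing existed before: addition
    if header_path(new_header) == "/dev/null":
        return "-"  # nothing exists after: removal
    return "!"      # both sides exist: modification


print(file_status("--- /dev/null", "+++ b/new.c"))
print(file_status("--- a/old.c", "+++ /dev/null"))
print(file_status("--- a/main.c", "+++ b/main.c"))
```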

As always, bug reports and feature requests are welcome on GitHub. Thanks to everyone who reported issues and helped to test fixes!

The post Patchutils 0.4.5 released appeared first on PRINT HEAD.

27 Feb 2026 4:04pm GMT

Fedora Community Blog: Community Update – Week 9, 2026

This is a report created by the CLE Team, a team of community members working in various Fedora groups, for example Infrastructure, Release Engineering, and Quality. The team also moves forward some initiatives inside the Fedora Project.

Week: 20 Feb - 27 Feb 2026

Fedora Infrastructure

This team is taking care of day to day business regarding Fedora Infrastructure.

It's responsible for services running in Fedora infrastructure.

Ticket tracker

- Migration of pagure.io repositories continues

- Ansible repository now has CI running in runner

- Resolved errors on dist-git that were spamming sysadmin-main mailbox - ticket

CentOS Infra including CentOS CI

This team is taking care of day to day business regarding CentOS Infrastructure and CentOS Stream Infrastructure.

It's responsible for services running in CentOS Infrastructure and CentOS Stream.

CentOS ticket tracker

CentOS Stream ticket tracker

- Fix `automotive10s-packages-main-el10s-build` inheritance to inherit from `autosd10s-packages-main-release`

- Extend underlying storage for OKD/SCoS deliverables

- Add kernel-rpm-macros to the buildroot of automotive10s-packages-main-el10s-build

- Rebase/align mailman3 packages stack for el 9.7

- Clarify debuginfod.centos.org Stream 8 usage (and take action)

- Investigate current hardware situation

Release Engineering

This team is taking care of day to day business regarding Fedora releases.

It's responsible for releases, retirement process of packages and package builds.

Ticket tracker

- Release engineering is currently in Beta Freeze.

- Releng has provided the first beta release candidate after the QE request.

- Request for creating detached signature has been handled by Samyak for ignition 2.26.0 release.

RISC-V

This is the summary of the work done regarding the RISC-V architecture in Fedora.

- Continued to go through the list of packages to investigate (failing to build; requires patching, and more).

- Got a PR merged for 'libkrunfw' (a low-level library for process isolation) and built it in RISC-V Koji; Marcin ("hrw") also got a couple more merged.

- Work is progressing well on Fedora RISC-V unified kernels (Jason Montleon is doing most of the heavy-lifting here). Currently hosted in Copr.

AI

This is the summary of the work done regarding AI in Fedora.

- Worked on the AI-powered This Week in Fedora.

- Updated ai-code-review to use Gemini 3.1 Pro by default

- Nexus team: interesting experience using Claude to refactor fedora-easy-karma. On review we found it made multiple unrequested functionality changes, which complicated the review; after discussion we decided to re-prompt it to avoid functionality changes.

QE

This team is taking care of the quality of Fedora: maintaining CI, organizing test days, and keeping an eye on the overall quality of Fedora releases.

- Fedora 44 Beta testing in full swing

- Kernel 6.19 test week in progress, please contribute

- Help wanted: if anyone can help figure out why F44 boot occasionally just hangs for no very obvious reason that'd be great (details in bug)

Forgejo

This team is working on the introduction of https://forge.fedoraproject.org to Fedora and the migration of repositories from pagure.io.

- Create and present Fedora -> Forgejo efforts during FOSDEM 2026 Distributions Devroom [Video] [Followup]

- Pagure migration doc: add follow-up checklist based on Nexus team's [Review]

- Create new organisation and namespace on Fedora Forge for Fedora Join [Followup] [Namespace]

- Rename "translations" into "localization-docs" [Followup][Namespace]

- Remedy mapping after renaming translations to localization-docs [Commit]

- Migrating NeuroFedora SIG [Followup][Namespace][Update]

- Perform mapping for NeuroFedora SIG teams and groups [Commit] [Followup]

- New Organization and Teams Request: Fedora KDE [Followup][Triaged]

- Perform mapping for Fedora KDE teams and groups [Commit]

- REST API: Create private issue & foundational work [Followup]

- Runner for forge organization [Followup]

- Refactor the ansible-role-forgejo-runner role task now configured and deploy the runners using ansible-pull

- Clean out the spam from Pagure.io [Followup]

- Refactor internal APIs for public vs. private issue identity [Followup][Feature]

EPEL

This team is working on keeping EPEL running and helping package things.

- Completed EPEL 10.2 mass branching in preparation for the upcoming RHEL 10.2 release (announcement)

UX

This team is working on improving user experience, providing artwork, user experience, usability, and general design services to the Fedora Project.

- Madeline's F45 Wallpaper Process Update: blog post / discussion post

- The Fedora Design Team has switched to using Google Meet for our weekly meetings to utilise Gemini for note-taking. Discussion took place on this ticket and on our weekly call. Updated Fedocal entry can be found here.

- Forgejo migration: Sprint board created, tickets currently being imported, remaining assets currently being imported / reorganised.

If you have any questions or feedback, please respond to this report or contact us on #admin:fedoraproject.org channel on matrix.

The post Community Update - Week 9, 2026 appeared first on Fedora Community Blog.

27 Feb 2026 3:13pm GMT

Guillaume Kulakowski: Mise en place d’Anubis sur l’instance Scaleway de Fedora-Fr

27 Feb 2026 11:22am GMT

Remi Collet: 🎲 PHP version 8.4.19RC1 and 8.5.4RC1

Release Candidate versions are available in the testing repository for Fedora and Enterprise Linux (RHEL / CentOS / Alma / Rocky and other clones) to allow more people to test them. They are available as Software Collections, for parallel installation, the perfect solution for such tests, and as base packages.

RPMs of PHP version 8.5.4RC1 are available

- as base packages in the remi-modular-test for Fedora 42-44 and Enterprise Linux ≥ 8

- as SCL in remi-test repository

RPMs of PHP version 8.4.19RC1 are available

- as base packages in the remi-modular-test for Fedora 42-44 and Enterprise Linux ≥ 8

- as SCL in remi-test repository

ℹ️ The packages are available for x86_64 and aarch64.

ℹ️ PHP version 8.3 is now in security mode only, so no more RC will be released.

ℹ️ Installation: follow the wizard instructions.

ℹ️ Announcements:

Parallel installation of version 8.5 as Software Collection:

yum --enablerepo=remi-test install php85

Parallel installation of version 8.4 as Software Collection:

yum --enablerepo=remi-test install php84

Update of system version 8.5:

dnf module switch-to php:remi-8.5
dnf --enablerepo=remi-modular-test update php\*

Update of system version 8.4:

dnf module switch-to php:remi-8.4
dnf --enablerepo=remi-modular-test update php\*

ℹ️ Notice:

- version 8.5.4RC1 is in Fedora rawhide for QA

- EL-10 packages are built using RHEL-10.1 and EPEL-10.1

- EL-9 packages are built using RHEL-9.7 and EPEL-9

- EL-8 packages are built using RHEL-8.10 and EPEL-8

- oci8 extension uses the RPM of the Oracle Instant Client version 23.9 on x86_64 and aarch64

- intl extension uses libicu 74.2

- RC version is usually the same as the final version (no change accepted after RC, exception for security fix).

- versions 8.4.19 and 8.5.4 are planned for March 12th, in 2 weeks.

Software Collections (php84, php85)

Base packages (php)

27 Feb 2026 7:12am GMT

Tim Lauridsen: INTERSECT - A nondestructive, time-stretching, and intersecting sample slicer

27 Feb 2026 6:00am GMT

26 Feb 2026

Christof Damian: Friday Links 26-07

26 Feb 2026 11:00pm GMT

Peter Czanik: New toy: Installing Ubuntu on the HP Z2 Mini

26 Feb 2026 2:06pm GMT

25 Feb 2026

Peter Czanik: Version 4.11.0 of syslog-ng is now available

25 Feb 2026 1:11pm GMT

Ben Cotton: Trust in open source communities

In chapter 3 of Program Management for Open Source Projects, I talk about the importance of trust. "Open source communities run on trust," I wrote. I go on to talk about building trust by establishing relationships and credibility. This is fine when you're coming into a defined role, perhaps if you got hired to fill a sponsored role in a community or if a project leader has asked you to apply your skills to the project.

Most people, of course, don't come directly into a defined role. They start by making a small contribution: filing a bug, answering a question on a mailing list or forum, submitting a patch, and so on. Sometimes, they don't even plan to stick around. They're making one contribution and moving on. The kind of trust-building based on relationships doesn't work as well in that case. But you still need trust to evaluate a contributor (and thus their contribution).

This issue has only grown more relevant as large language models become widespread. If the person who submitted a pull request didn't write the code, do they understand it? Can they answer maintainers' questions or address feedback? Is the code even worth a maintainer's time to review or is it plausible-looking garbage?

In late January, GitHub product manager Camilla Moraes started a conversation seeking ideas for giving maintainers tools to address low-quality contributions. The conversation produced many good (and also some bad) ideas and highlighted the difficulty of a universal solution. Although the word "trust" only appears six times (as of this writing) in the whole thread, the conversation is basically a discussion of trust. "How can we slow the rate of un-trusted contribution without making life harder for the trusted contributors?" is a fair summary of the underlying issue.

Defining trust

Charles H. Green developed an equation of trustworthiness that includes credibility, reliability, and intimacy. Although it's a smidge hokey, it's fundamentally a reasonable representation of trust, so we can roll with it.

Importantly, trust is not a static characteristic of a person. Instead, it's a dynamic measure that changes based on context and relationship. My coworkers (hopefully) think that I am competent in my work, deliver what I say I will, and am a fun guy to be around. There's a high degree of trust because I rate highly in credibility, reliability, and intimacy. When I join a new project, I am the same person, but I am relatively or entirely unknown. The other people in the community need to interact with me for a period of time before they can develop trust in me.

Even if I've known someone for a long time, their trust in me may change when the context changes. The intimacy and reliability may be the same, but they don't necessarily know if I'm credible in the new context. Just because I have experience in other languages, that doesn't mean my Rust code is good. Someone who thinks I write competent Python (we're pretending here!) would be well served reviewing a Rust contribution very closely, as I've written essentially none.

Trust in your community

As with many concepts, we often think about trust in open source communities without explicitly thinking about it. But most projects have some concept of a contributor ladder, where people get increased privileges and responsibilities based on the trust they've earned. It's more important than ever to give deliberate thought to how trust is evaluated in your community.

This not only affects community management concerns but also security. Many projects have automated CI jobs that run on pull requests. These check for code style, run unit and integration tests, and so on. In the best case, bad code (intentional or accidental) can limit resources (including maintainer time). In the worst case, bad code can compromise the project and publish malware. For this reason, projects often require maintainers or other trusted users to grant permission for automated tests to run when the submitter is untrusted. Unfortunately, this still places a time burden on maintainers, which is a precious resource.

I suggest that projects explicitly consider what levels of trust are required to access certain resources (CI jobs, project emails, etc) and how that trust will be measured. The Discourse trust levels are an excellent starting point for building your project's trust model. The specifics are designed for forum interaction, but you can extrapolate to your project's activities.
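One way to make such a mapping explicit is to write it down as data. The sketch below is purely illustrative: the level names, the resource names, and the thresholds are hypothetical and are not taken from Discourse or from any particular project.

```python
from enum import IntEnum


class TrustLevel(IntEnum):
    # Hypothetical ladder; a real project would define its own rungs.
    NEWCOMER = 0
    CONTRIBUTOR = 1
    REGULAR = 2
    MAINTAINER = 3


# Minimum level required per resource (illustrative values only).
REQUIRED_LEVEL = {
    "open_pull_request": TrustLevel.NEWCOMER,
    "run_ci_without_approval": TrustLevel.REGULAR,
    "send_project_email": TrustLevel.MAINTAINER,
}


def may_access(level: TrustLevel, resource: str) -> bool:
    """Return True when the contributor's level meets the resource's threshold."""
    return level >= REQUIRED_LEVEL[resource]


print(may_access(TrustLevel.NEWCOMER, "open_pull_request"))
print(may_access(TrustLevel.NEWCOMER, "run_ci_without_approval"))
```

Keeping the lowest rung able to open pull requests reflects the point that the path to building trust has to stay easy.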

The path to build trust has to be easy, or else you'll drive away new contributors and your community will wither away over time. Trust levels are a safety measure, not a gatekeeping measure.

Tools to help

I am aware of a few tools to help with trust evaluation. I share them here as a reference, but I have not used them and do not endorse or renounce them. contributor-report is a GitHub Action that gives maintainers a report on a new contributor's activity levels. This helps maintainers evaluate newcomers on the metrics that make sense for their specific project. vouch is a tool for marking users as vouched (or denounced) and taking action based on that. It can be used to provide a web of trust across projects and communities.

This post's featured photo by Andrew Petrov on Unsplash.

The post Trust in open source communities appeared first on Duck Alignment Academy.

25 Feb 2026 12:00pm GMT

24 Feb 2026

Peter Czanik: New toy: Installing openSUSE Tumbleweed on the HP Z2 Mini

24 Feb 2026 11:58am GMT

Remi Collet: 📝 Install PHP 8.5 on Fedora, RHEL, CentOS Stream, Alma, Rocky or other clone

Here is a quick howto for upgrading the default PHP version provided on Fedora, RHEL, CentOS, AlmaLinux, Rocky Linux or other clones to the latest version 8.5.

You can also follow the Wizard instructions.

Architectures:

The repository is available for x86_64 (Intel/AMD) and aarch64 (ARM).

Repositories configuration:

On Fedora, the standard repositories are enough; on Enterprise Linux (RHEL, CentOS), the Extra Packages for Enterprise Linux (EPEL) and CodeReady Builder (CRB) repositories must be configured.

Fedora 44

dnf install https://rpms.remirepo.net/fedora/remi-release-44.rpm

Fedora 43

dnf install https://rpms.remirepo.net/fedora/remi-release-43.rpm

Fedora 42

dnf install https://rpms.remirepo.net/fedora/remi-release-42.rpm

RHEL version 10.1

dnf install https://dl.fedoraproject.org/pub/epel/epel-release-latest-10.noarch.rpm
dnf install https://rpms.remirepo.net/enterprise/remi-release-10.rpm
subscription-manager repos --enable codeready-builder-for-rhel-10-x86_64-rpms

RHEL version 9.7

dnf install https://dl.fedoraproject.org/pub/epel/epel-release-latest-9.noarch.rpm
dnf install https://rpms.remirepo.net/enterprise/remi-release-9.rpm
subscription-manager repos --enable codeready-builder-for-rhel-9-x86_64-rpms

RHEL version 8.10

dnf install https://dl.fedoraproject.org/pub/epel/epel-release-latest-8.noarch.rpm
dnf install https://rpms.remirepo.net/enterprise/remi-release-8.rpm
subscription-manager repos --enable codeready-builder-for-rhel-8-x86_64-rpms

Alma, CentOS Stream, Rocky version 10

dnf install https://dl.fedoraproject.org/pub/epel/epel-release-latest-10.noarch.rpm
dnf install https://rpms.remirepo.net/enterprise/remi-release-10.rpm
crb install

Alma, CentOS Stream, Rocky version 9

dnf install https://dl.fedoraproject.org/pub/epel/epel-release-latest-9.noarch.rpm
dnf install https://rpms.remirepo.net/enterprise/remi-release-9.rpm
crb install

Alma, Rocky version 8

dnf install https://dl.fedoraproject.org/pub/epel/epel-release-latest-8.noarch.rpm
dnf install https://rpms.remirepo.net/enterprise/remi-release-8.rpm
crb install

PHP module usage

With Fedora and EL, you can simply use the remi-8.5 stream of the php module.

With Fedora (dnf5 has partial module support)

dnf module reset php
dnf module enable php:remi-8.5
dnf install php-cli php-fpm php-mbstring php-xml

Other distributions (dnf4)

dnf module switch-to php:remi-8.5/common

PHP upgrade

By choice, the packages have the same name as in the distribution, so a simple update is enough:

dnf update

That's all :)

$ php -v

PHP 8.5.3 (cli) (built: Feb 10 2026 18:25:51) (NTS gcc x86_64)

Copyright (c) The PHP Group

Built by Remi's RPM repository #StandWithUkraine

Zend Engine v4.5.3, Copyright (c) Zend Technologies

with Zend OPcache v8.5.3, Copyright (c), by Zend Technologies

Known issues

The upgrade can fail (by design) when some installed extensions are not yet compatible with PHP 8.5.

See the compatibility tracking list: PECL extensions RPM status

If these extensions are not mandatory, you can remove them before the upgrade; otherwise, you must be patient.

Warning: some extensions are still under development, but it seems useful to provide them to upgrade more people and allow users to give feedback to the authors.

More information

If you prefer to install PHP 8.5 beside the default PHP version, this can be achieved using the php85 prefixed packages, see the PHP 8.5 as Software Collection post.

You can also try the configuration wizard.

This is also documented as the community way to install PHP 8.5 on the official PHP web site.

The packages available in the repository were used as sources for Fedora 44.

By providing a full feature PHP stack, with about 150 available extensions, 11 PHP versions, as base and SCL packages, for Fedora and Enterprise Linux, and with 300 000 downloads per day, the remi repository became in the last 21 years a reference for PHP users on RPM based distributions, maintained by an active contributor to the projects (Fedora, PHP, PECL...).

See also:

- Posts RSS feed (version announcements)

- Comments RSS feed

- Repository RSS feed (example for EL-10, php 8.5)

- Install PHP 8.4 on Fedora RHEL CentOS Alma Rocky or other clone

- Install PHP 8.3 on Fedora RHEL CentOS Alma Rocky or other clone

- Install PHP 8.2 on Fedora RHEL CentOS Alma Rocky or other clone

24 Feb 2026 11:16am GMT

23 Feb 2026

Akashdeep Dhar: Loadouts For Genshin Impact v0.1.14 Released

Hello travelers!

Loadouts for Genshin Impact v0.1.14 is OUT NOW with added support for recently released artifacts like Aubade of the Morningstar and Moon and A Day Carved From Rising Winds, recently released characters like Columbina, Zibai and Illuga, and recently released weapons like Nocturne's Curtain Call and Lightbearing Moonshard from Genshin Impact Luna IV or v6.3 Phase 2. Take this FREE and OPEN SOURCE application for a spin using the links below to manage the custom equipment of artifacts and weapons for the playable characters.

Resources

- Loadouts for Genshin Impact - GitHub

- Loadouts for Genshin Impact - PyPI

- Loadouts for Genshin Impact v0.1.14

Installation

Besides its availability as a repository package on PyPI and as an archived binary on PyInstaller, Loadouts for Genshin Impact is now available as an installable package on Fedora Linux. Travelers using Fedora Linux 42 and above can install the package on their operating system by executing the following command.

$ sudo dnf install gi-loadouts --assumeyes --setopt=install_weak_deps=False

Installation command for Fedora Linux

Changelog

- Automated dependency updates for GI Loadouts by @renovate[bot] in #486

- Add the recently added character Columbina to the GI Loadouts roster by @sdglitched in #494

- Add the recently added weapon Nocturne's Curtain Call to the GI Loadouts roster by @sdglitched in #495

- Add the recently added artifact set A Day Carved From Rising Winds to the GI Loadouts roster by @sdglitched in #497

- Add the recently added artifact set Aubade of Morningstar and Moon to the GI Loadouts roster by @sdglitched in #496

- Update dependency pillow to v12.1.1 [SECURITY] by @renovate[bot] in #502

- Add the recently added character Zibai to the GI Loadouts roster by @sdglitched in #501

- Add the recently added character Illuga to the GI Loadouts roster by @sdglitched in #503

- Add the recently added weapon Lightbearing Moonshard to the GI Loadouts roster by @sdglitched in #504

- Remove the conditional substat calculation of weapon Harbinger of Dawn by @sdglitched in #505

- Stage the release v0.1.13 for Genshin Impact Luna IV (v6.3 Phase 2) by @sdglitched in #506

- Automated dependency updates for GI Loadouts by @renovate[bot] in #500

Artifacts

Two artifacts have debuted in this version release.

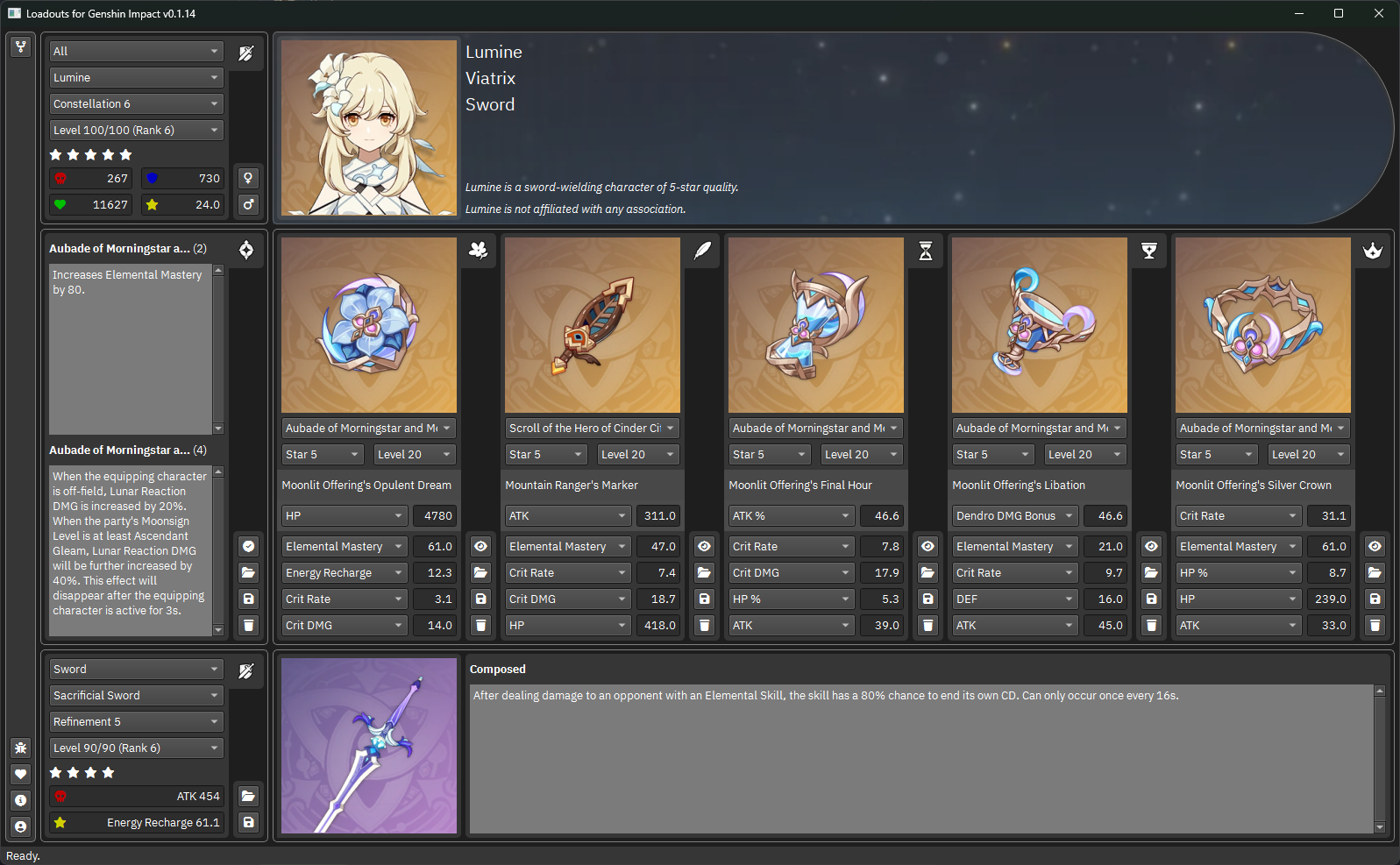

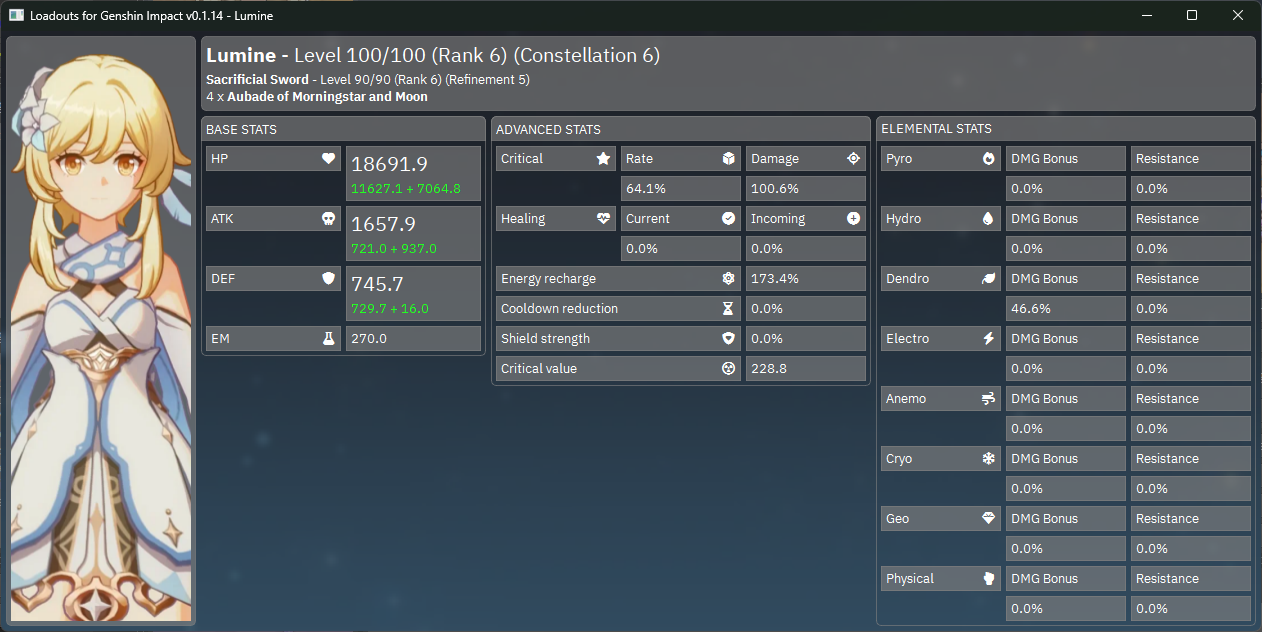

Aubade of the Morningstar and Moon

- Bonus for Two Piece Equipment: Increases Elemental Mastery by 80.

- Bonus for Four Piece Equipment: When the equipping character is off-field, Lunar Reaction DMG is increased by 20%. When the party's Moonsign Level is at least Ascendant Gleam, Lunar Reaction DMG will be further increased by 40%. This effect will disappear after the equipping character is active for 3s.

Aubade of the Morningstar and Moon - Workspace and Results

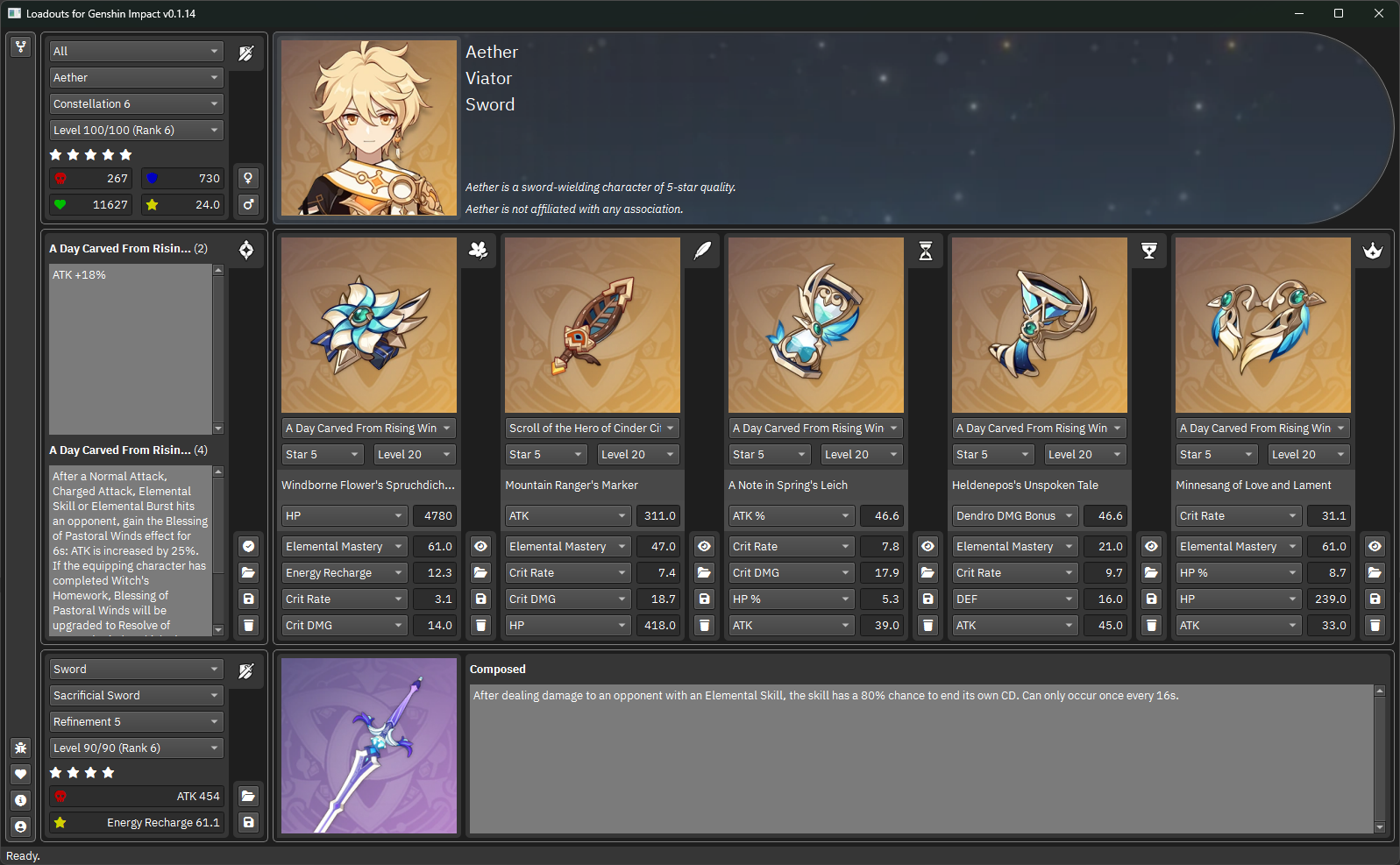

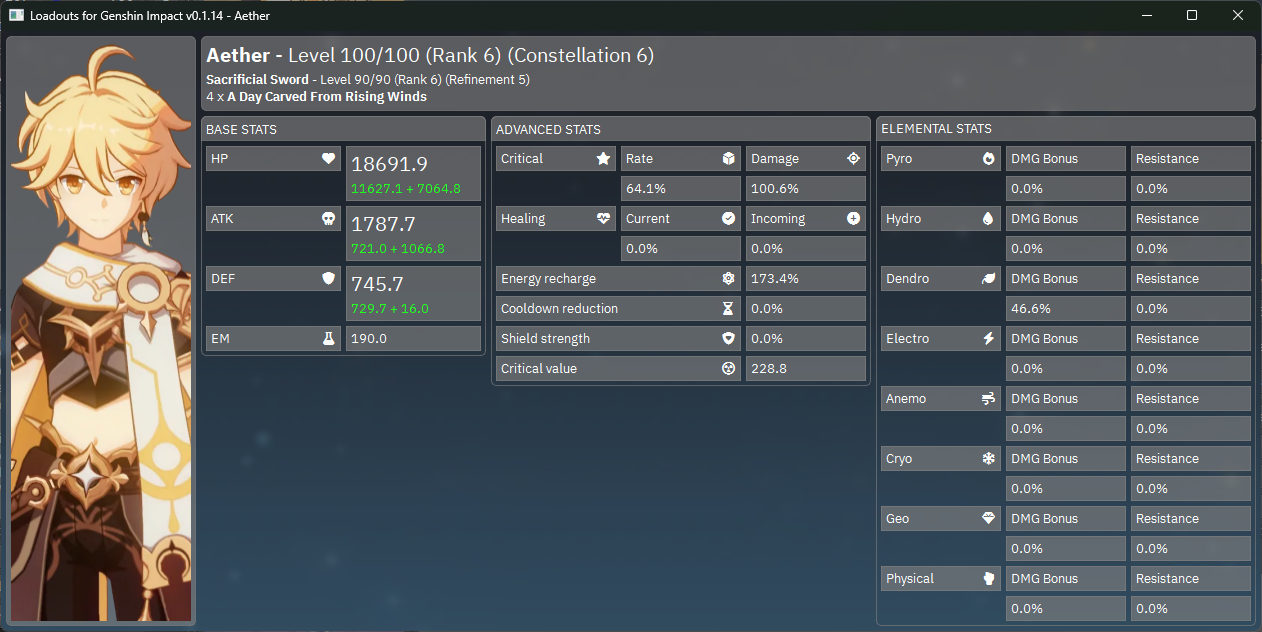

A Day Carved From Rising Winds

- Bonus for Two Piece Equipment: ATK +18%.

- Bonus for Four Piece Equipment: After a Normal Attack, Charged Attack, Elemental Skill or Elemental Burst hits an opponent, gain the Blessing of Pastoral Winds effect for 6s: ATK is increased by 25%. If the equipping character has completed Witch's Homework, Blessing of Pastoral Winds will be upgraded to Resolve of Pastoral Winds, which also increases the CRIT Rate of the equipping character by an additional 20%. This effect can be triggered even when the character is off-field.

A Day Carved From Rising Winds - Workspace and Results

Characters

Three characters have debuted in this version release.

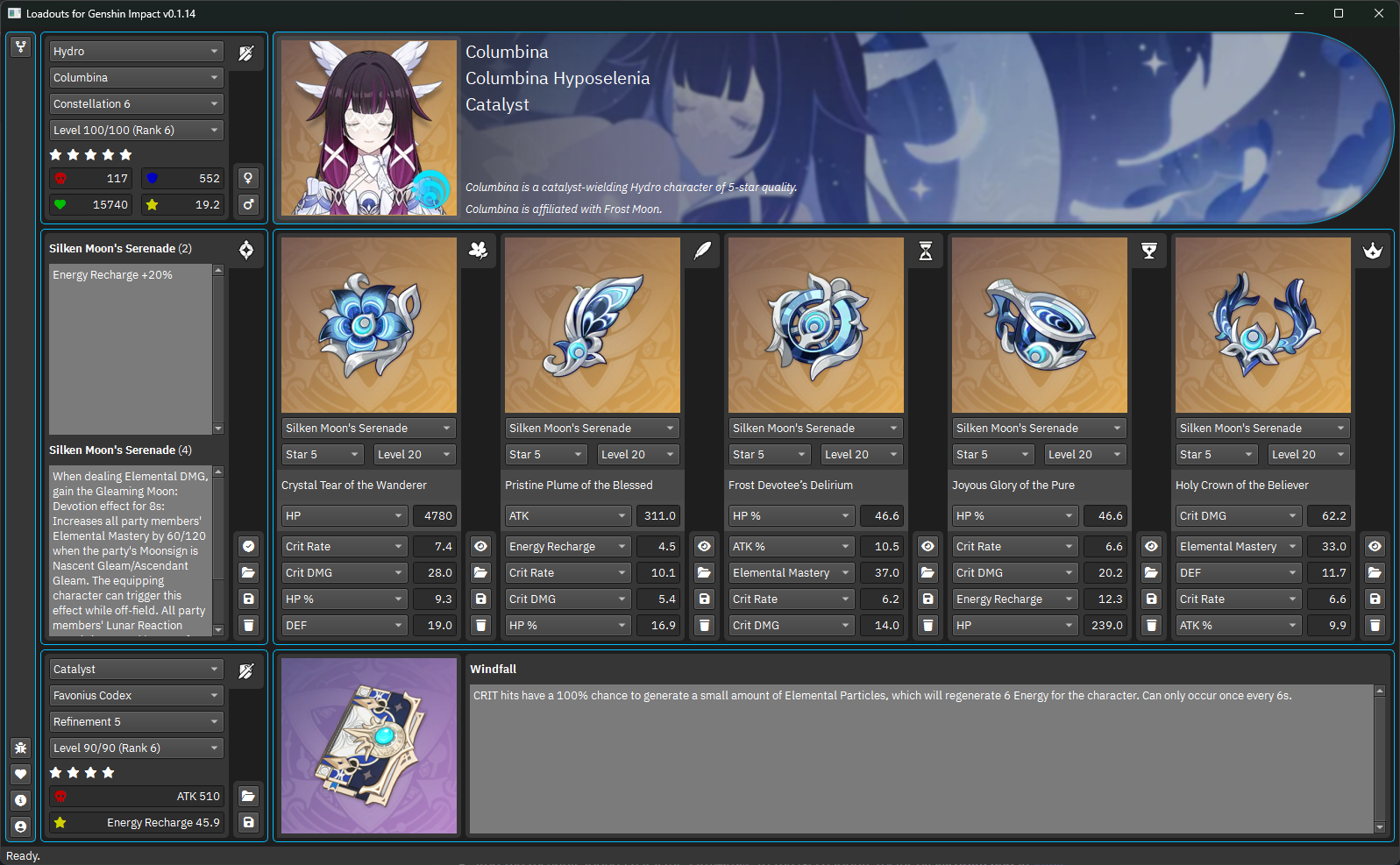

Columbina

Columbina is a catalyst-wielding Hydro character of five-star quality.

Columbina - Workspace and Results

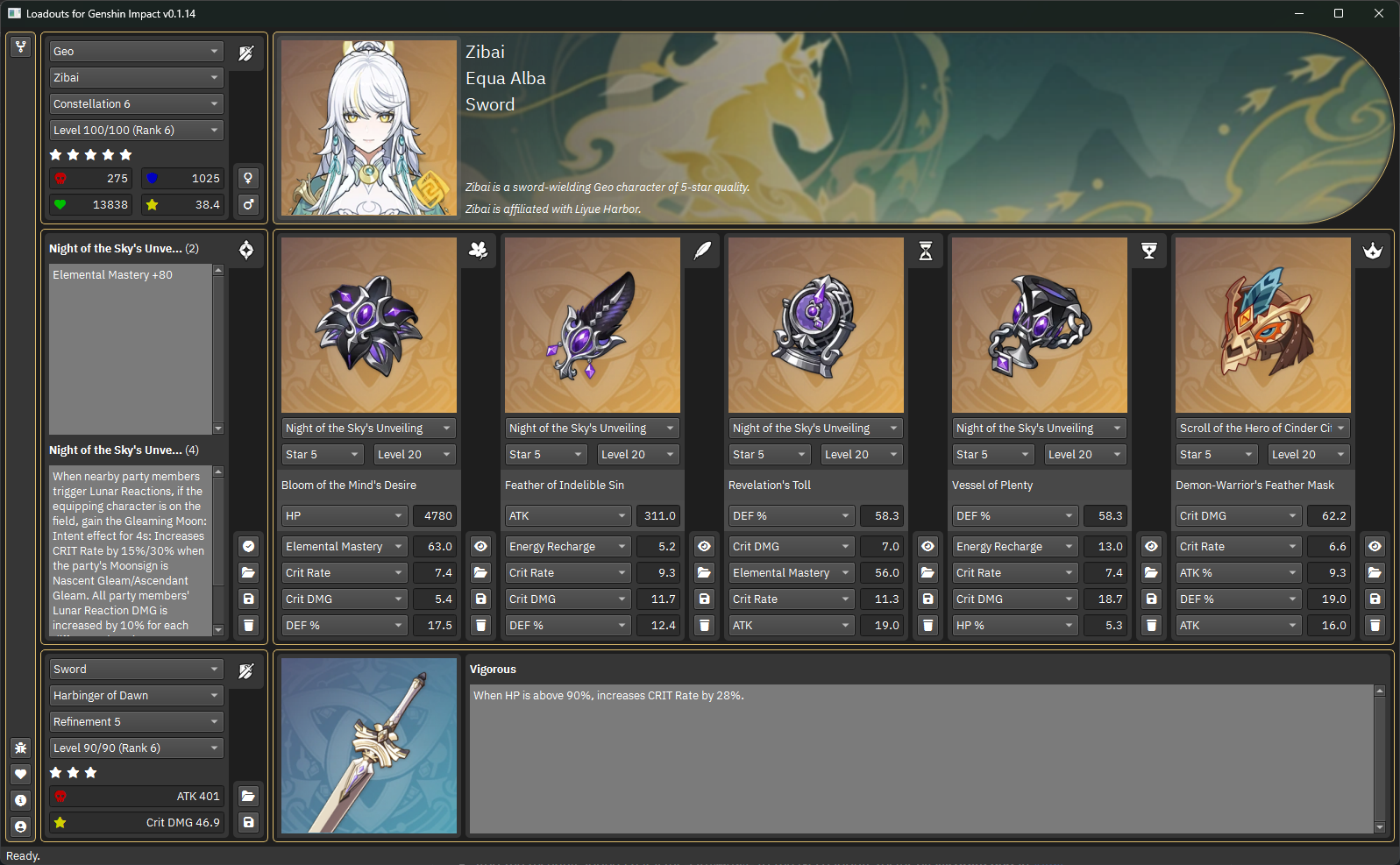

Zibai

Zibai is a sword-wielding Geo character of five-star quality.

Zibai - Workspace and Results

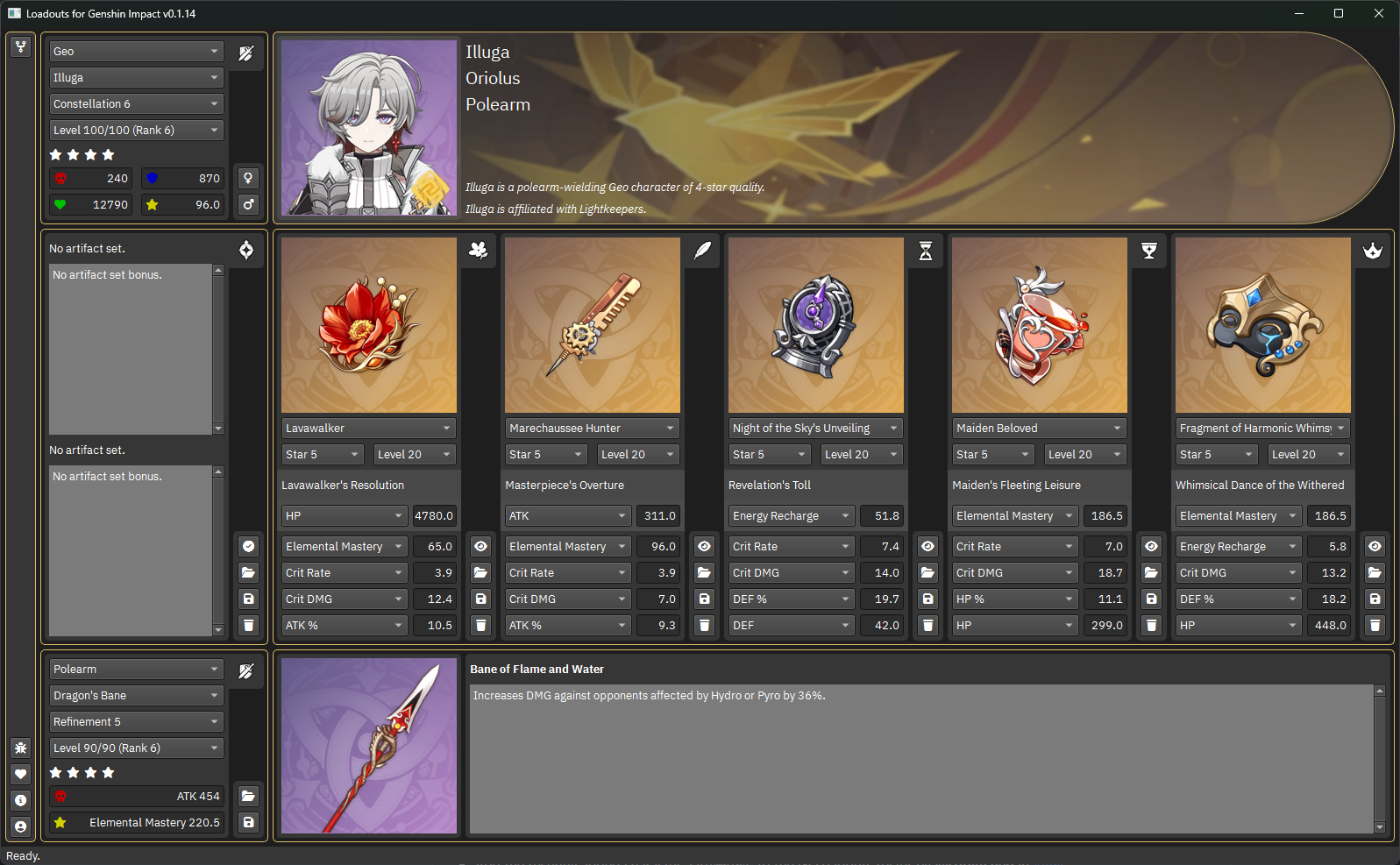

Illuga

Illuga is a polearm-wielding Geo character of five-star quality.

Illuga - Workspace and Results

Weapons

Two weapons have debuted in this version release.

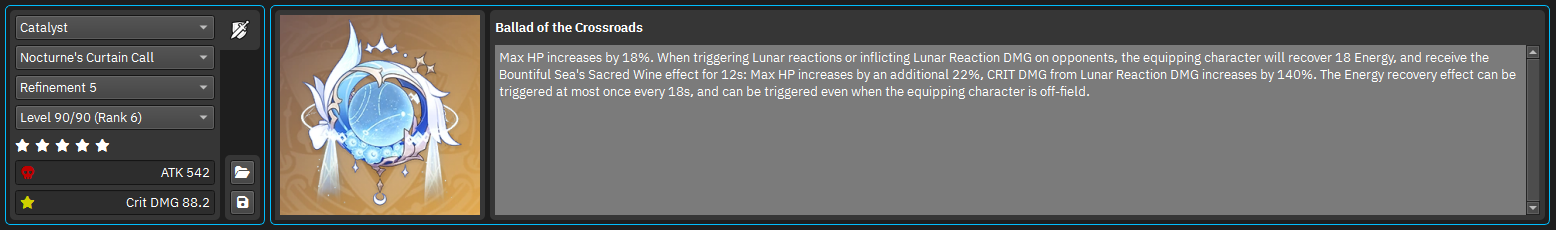

Nocturne's Curtain Call

Ballad of the Crossroads - Scales on Crit DMG.

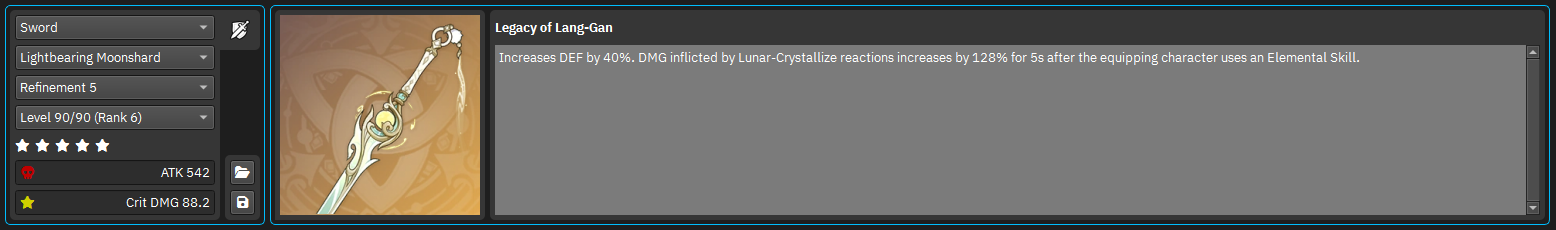

Lightbearing Moonshard

Legacy of Lang-Gan - Scales on Crit DMG.

Appeal

While allowing you to experiment with various builds and share them for later, Loadouts for Genshin Impact lets you take calculated risks by showing you the potential of your characters with certain artifacts and weapons equipped that you might not even own. Loadouts for Genshin Impact has been and always will be a free and open source software project, and we are committed to delivering a quality experience with every release we make.

Disclaimer

With an extensive suite of over 1550 diverse functionality tests and impeccable 100% source code coverage, we proudly invite auditors and analysts from MiHoYo and other organizations to review our free and open source codebase. This thorough transparency underscores our unwavering commitment to maintaining the fairness and integrity of the game.

The users of this ecosystem application can have complete confidence that their accounts are safe from warnings, suspensions or terminations when using this project. The ecosystem application ensures complete compliance with the terms of services and the regulations regarding third-party software established by MiHoYo for Genshin Impact.

All rights to Genshin Impact assets used in this project are reserved by miHoYo Ltd. and Cognosphere Pte., Ltd. Other properties belong to their respective owners.

23 Feb 2026 6:30pm GMT

Tim Lauridsen: Fedora Audio Production Ressources

23 Feb 2026 11:00am GMT

Brian (bex) Exelbierd: Phone a Friend: Multi-Model Subagents for VS Code Copilot Chat

I wanted a way to stay inside Visual Studio Code, use Copilot Chat as the "orchestrator," and still mix and match models for different parts of the work. Plan a change with one of the slower, more capable models, but let a smaller, faster model handle mechanical refactors. Edit a blog post with one model, but hand Jekyll plumbing or JSON/YAML munging to another. The friction was that the built-in Copilot Chat extension only lets subagents run on the same model as the parent conversation, while the Copilot CLI happily lets you pick any available model per run. Phone a Friend bolts that flexibility onto Copilot Chat, so I can keep the full VS Code experience - including gutter diffs - while dispatching subtasks to whatever model is best for the job.

The Problem

When you use GitHub Copilot Chat in VS Code, every subagent it spawns runs on the same model as the parent conversation. If you're on Claude Opus 4.6, all subagents are Claude Opus 4.6. Sometimes you want a different model for a subtask - a faster one for simple work, or a different vendor for a second opinion.

GitHub Copilot CLI supports --model to pick any available model, but using it directly doesn't help - changes made by the CLI don't produce VS Code's gutter indicators (the green/red diff decorations in the editor margin). You get the work done but lose the visual feedback that makes code review comfortable.

Phone a Friend is an MCP server that solves both problems. It dispatches work to Copilot CLI with the model of choice, captures a unified diff of the changes, and returns it to the calling agent, which applies it through VS Code's edit tools. Gutter indicators show up as if the changes were made natively.

How It Works

- Copilot Chat calls the phone_a_friend MCP tool with a prompt, model name, and working directory

- The MCP server creates an isolated git worktree from HEAD

- It launches Copilot CLI in non-interactive mode in that worktree with the requested model

- The subagent does its work and writes its response to a "message-in-a-bottle" file

- The MCP server reads the response, captures a git diff, and cleans up the worktree

- The MCP server then returns the response text and unified diff to the calling agent

- The calling agent applies the diff using VS Code's edit tools, and gutter indicators appear

The "message in a bottle" pattern is worth explaining. Copilot CLI's stdout mixes the agent's response with progress output and is unreliable to parse. Rather than fighting noisy output, the tool instructs the subagent to write its final response to a file. The server reads the file. Clean separation.
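The pattern itself is independent of Copilot and can be sketched in a few lines. In the example below a stand-in child process plays the subagent: it prints noisy progress output and writes its real answer to a file whose path it receives as an argument. Everything here is hypothetical (the `CHILD` script, the `run_with_bottle` helper, the file name) and is written in Python for brevity; the actual project is TypeScript and its details may differ.

```python
import subprocess
import sys
import tempfile
from pathlib import Path
from typing import Tuple

# Stand-in for the subagent: noisy stdout, clean answer written to a file.
CHILD = r"""
import sys
from pathlib import Path
print("progress: thinking...")
print("progress: editing files...")
Path(sys.argv[1]).write_text("Renamed the helper and updated two call sites.")
print("progress: done")
"""


def run_with_bottle(child_source: str) -> Tuple[str, str]:
    """Run the child and return (response read from the file, raw stdout)."""
    with tempfile.TemporaryDirectory() as tmp:
        bottle = Path(tmp) / "response.md"  # hypothetical file name
        proc = subprocess.run(
            [sys.executable, "-c", child_source, str(bottle)],
            capture_output=True,
            text=True,
            check=True,
        )
        # A child that ignores the instruction leaves no file behind,
        # so fall back to an empty response instead of parsing stdout.
        response = bottle.read_text() if bottle.exists() else ""
    return response, proc.stdout


response, noise = run_with_bottle(CHILD)
print(response)
```

The caller never has to guess which part of stdout was the answer, and a missing file is an explicit signal that the instruction was not followed.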

Safety

Worktree isolation means your working tree is never modified directly. Push protection blocks git push at the tool level. Worktrees are cleaned up after every invocation, even on errors.

Setup

You install Phone a Friend like any other MCP server in VS Code: add the @bexelbie/phone-a-friend npm package through the MCP: Add Server... command, or point VS Code at it via your MCP configuration. The GitHub README details the exact JSON and prerequisites (Node.js, Copilot CLI, Git).

Usage

Once configured, you stay in Copilot Chat and describe the outcome you want; the calling agent decides when to route a subtask through Phone a Friend. The tool surface includes discovery hints, so natural phrasing like "get a second opinion from another model" is usually enough to trigger it. Any model that Copilot CLI exposes is available.

Known Limitations

A few trade-offs worth knowing:

- Context cost. The unified diff lands in the calling agent's context window. Large diffs eat context. I've got an issue open exploring ideas for improving this.

- Message-in-a-bottle compliance. Most models follow the instruction to write their final response into the message-in-a-bottle file, but some may occasionally ignore it. When that happens, the calling agent still gets the diff of any file changes but not the response text.

Availability

The project is on GitHub under MIT license, and published on npm as @bexelbie/phone-a-friend. Written in TypeScript.

What Changed For Me

Since integrating this into my Copilot setup, the biggest shift is that I no longer have to choose between "the model I want to think with" and "the model I want to do the work" and I eliminated a bunch of copy/paste from manually emulating this. I keep the main conversation with a larger, more capable model for planning and review, and routinely:

- send quick, mechanical refactors to a smaller, faster model

- hand Jekyll front matter, Liquid, and config tweaks to a model that's better at markup and templating

- ask a different vendor's model for a second opinion on changes or ideas, especially where that model may be better at the task

Because everything still lands back in the same VS Code buffer with normal gutter diffs, it feels like one coherent tool instead of a handful of loosely-connected ones.

The project also introduced an unexpected dynamic into the development process. Building an MCP server that mimics a capability already available to the model created a strange feedback loop. I could collaborate on the implementation with Opus, and then turn around and interview it as a subject matter expert on how it uses that very same capability. It was a weird feeling to use the model as both a partner in writing the code and a primary source for understanding the user requirements.

23 Feb 2026 10:10am GMT

Fedora Magazine: Join Us for Fedora Hatch at SCaLE 23x!

Fedora is heading back to sunny Southern California! As we gear up for SCaLE 23x, we are thrilled to announce a special edition of Fedora Hatch. It takes place on Friday, March 6, as an embedded track at SCaLE.

Whether you're a long-time contributor, a curious user, or someone looking to make your very first pull request, Fedora Hatch is designed for you. This is our way of bringing the experience of Flock (our annual contributor conference) to a local level. It focuses on connection, collaboration, and community growth.

What's Happening?

This year, Fedora has secured a dedicated track on Friday at SCaLE. We've curated a line-up that balances technical deep dives with essential community initiatives.

When: Friday, March 6, 2026

Where: Room 208, Pasadena Convention Center

Who: You! (And a bunch of friendly Fedorans)

The Schedule Highlights

We have a packed morning featuring five talks and a hands-on workshop:

- Getting Started in Open Source and Fedora (Amy Marrich): Are you new to the world of open source? Or are you looking to make your first contribution? This session will provide a guide for beginners interested in contributing to open source projects. It will focus on the Fedora project. We'll cover a variety of topics, like finding suitable projects, making your first pull request, and navigating community interactions. Attendees will leave with practical tips, resources, and the confidence to embark on their open source journey.

- Fedora Docs Revamp Initiative (Shaun McCance): The Fedora Council recently approved an initiative to revamp the Fedora docs. The initiative aims to establish a support team to maintain a productive environment for writing docs. It will establish subteams with subject matter expertise to develop docs in specific areas of interest. We'll describe some of the challenges the Fedora docs have faced, and present the progress so far in improving the docs. You'll also learn how you can help Fedora have better docs.

- A Brief Tour of the Age of Atomic (Laura Santamaria): Ever wished to try a number of different desktop experiences quickly in your homelab? Maybe it's time to explore Fedora Atomic or Universal Blue! The tour starts with what makes these experiences special. It will then review the options including Silverblue, Cosmic, Bluefin and Bazzite (yes, the gaming OS). We'll briefly get under the hood to explore bootc, the technology powering Atomic. Finally, we'll explore how you can contribute to the future of Fedora Atomic.

- Accelerating CentOS with Fedora (Davide Cavalca): This talk will explore how CentOS SIGs are able to leverage the work happening in Fedora to improve the quality and velocity of packages in CentOS Stream. We'll cover how the CentOS Hyperscale SIG is able to deliver faster-moving updates for select packages, and how the CentOS Proposed Updates SIG integrates bugfixes and improves the contribution process to the distribution.

- Agentic Workloads on Linux: Btrfs + Service Accounts Architecture (David Duncan): As AI agents become more prevalent in enterprise environments, Linux systems need architectural patterns that provide isolation, security, and efficient resource management. This session explores an approach that combines Btrfs subvolumes with dedicated service accounts to build secure, isolated environments for autonomous AI agents in enterprise deployments.

- RPM Packaging Workshop (Carl George): While universal package formats like Flatpak, Snap, and AppImage have gained popularity for their cross-distro support, native system packages remain a cornerstone of Linux distributions. These native formats offer numerous benefits. Understanding them is essential for those who want to contribute to the Linux ecosystem at a deeper level. In this hands-on workshop, we'll explore RPM, the native package format used by Fedora, CentOS, and RHEL. RPM is a powerful and flexible tool. It plays a vital role in the management and distribution of software for these operating systems.

Don't forget to swing by the Fedora Booth in the Expo Hall! Our team will be there all weekend (March 6-8) with live demonstrations of Fedora Linux 43, GNOME 49 improvements, and plenty of fresh swag to go around.

Registration Details

To join us at the Hatch, you'll need a SCaLE 23x pass.

- Location: Pasadena Convention Center, 300 E Green St, Pasadena, CA.

- Tickets: Available at the official SCaLE website.

We can't wait to see you there. Let's make SCaLE 23x the best one yet!

23 Feb 2026 8:00am GMT