27 Feb 2026

Planet Mozilla

Niko Matsakis: How Dada enables internal references

In my previous Dada blog post, I talked about how Dada enables composable sharing. Today I'm going to start diving into Dada's permission system; permissions are Dada's equivalent to Rust's borrow checker.

Goal: richer, place-based permissions

Dada aims to exceed Rust's capabilities by using place-based permissions. Dada lets you write functions and types that capture both a value and things borrowed from that value.

As a fun example, imagine you are writing some Rust code to process a comma-separated list, just looking for entries longer than 5 characters:

let list: String = format!("...something big, with commas...");

let items: Vec<&str> = list

.split(",")

.map(|s| s.trim()) // strip whitespace

.filter(|s| s.len() > 5)

.collect();

One of the cool things about Rust is how this code looks a lot like some high-level language like Python or JavaScript, but in those languages the split call is going to be doing a lot of work, since it will have to allocate tons of small strings, copying out the data. But in Rust the &str values are just pointers into the original string and so split is very cheap. I love this.
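To make the "just pointers into the original string" point concrete, here is a minimal sketch. The helper names long_items and points_into are made up for illustration; points_into only checks that an item's bytes lie inside the buffer of the original string.

```rust
/// Same pipeline as above, wrapped in a function (hypothetical name).
pub fn long_items(list: &str) -> Vec<&str> {
    list.split(',')
        .map(|s| s.trim()) // strip whitespace
        .filter(|s| s.len() > 5)
        .collect()
}

/// Returns true if `item` is a slice of `list`'s own buffer,
/// i.e. no bytes were copied to produce it.
pub fn points_into(list: &str, item: &str) -> bool {
    let start = list.as_ptr() as usize;
    let end = start + list.len();
    let p = item.as_ptr() as usize;
    p >= start && p + item.len() <= end
}
```

Calling points_into on every element returned by long_items should report true, since split and trim only narrow the borrowed slice.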

On the other hand, suppose you want to package up some of those values, along with the backing string, and send them to another thread to be processed. You might think you can just make a struct like so…

struct Message {

list: String,

items: Vec<&str>,

// ----

// goal is to hold a reference

// to strings from list

}

…and then create the list and items and store them into it:

let list: String = format!("...something big, with commas...");

let items: Vec<&str> = /* as before */;

let message = Message { list, items };

// ----

// |

// This *moves* `list` into the struct.

// That in turn invalidates `items`, which

// is borrowed from `list`, so there is no

// way to construct `Message`.

But as experienced Rustaceans know, this will not work. When you have borrowed data like an &str, that data cannot be moved. If you want to handle a case like this, you need to convert from &str into sending indices, owned strings, or some other solution. Argh!
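For comparison, the "indices" workaround might look roughly like the sketch below. The layout (byte ranges in items) and the new and items methods are assumptions chosen for illustration, not code from the original example.

```rust
use std::ops::Range;

/// Owns the backing string and stores byte ranges into it
/// instead of `&str` borrows, so the whole struct can be moved.
pub struct Message {
    list: String,
    items: Vec<Range<usize>>,
}

impl Message {
    pub fn new(list: String) -> Self {
        let mut items = Vec::new();
        let mut start = 0; // byte offset of the current piece in `list`
        for piece in list.split(',') {
            let trimmed = piece.trim();
            if trimmed.len() > 5 {
                // Leading whitespace removed by `trim`, in bytes.
                let lead = piece.len() - piece.trim_start().len();
                let s = start + lead;
                items.push(s..s + trimmed.len());
            }
            start += piece.len() + 1; // skip the piece and the comma
        }
        Message { list, items }
    }

    /// Re-creates the `&str` views on demand.
    pub fn items(&self) -> impl Iterator<Item = &str> + '_ {
        self.items.iter().map(move |r| &self.list[r.clone()])
    }
}
```

This version can be sent to another thread, but every consumer has to go through items() to get the strings back, which is the kind of friction the rest of this post is about.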

Dada's permissions use places, not lifetimes

Dada does things a bit differently. The first thing is that, when you create a reference, the resulting type names the place that the data was borrowed from, not the lifetime of the reference. So the type annotation for items would say ref[list] String[1] (at least, if you wanted to write out the full details rather than leaving it to the type inferencer):

let list: given String = "...something big, with commas..."

let items: given Vec[ref[list] String] = list

.split(",")

.map(_.trim()) // strip whitespace

.filter(_.len() > 5)

// ------- I *think* this is the syntax I want for closures?

// I forget what I had in mind, it's not implemented.

.collect()

I've blogged before about how I would like to redefine lifetimes in Rust to be places, as I feel that a type like ref[list] String is much easier to teach and explain: instead of having to explain that a lifetime references some part of the code, or what have you, you can say that "this is a String that references the variable list".

But what's also cool is that named places open the door to more flexible borrows. In Dada, if you wanted to package up the list and the items, you could build a Message type like so:

class Message(

list: String

items: Vec[ref[self.list] String]

// ---------

// Borrowed from another field!

)

// As before:

let list: String = "...something big, with commas..."

let items: Vec[ref[list] String] = list

.split(",")

.map(_.trim()) // strip whitespace

.filter(_.len() > 5)

.collect()

// Create the message, this is the fun part!

let message = Message(list.give, items.give)

Note that last line - Message(list.give, items.give). We can create a new class and move list into it along with items, which borrows from list. Neat, right?

OK, so let's back up and talk about how this all works.

References in Dada are the default

Let's start with syntax. Before we tackle the Message example, I want to go back to the Character example from previous posts, because it's a bit easier for explanatory purposes. Here is some Rust code that declares a struct Character, creates an owned copy of it, and then gets a few references into it.

struct Character {

name: String,

class: String,

hp: u32,

}

let ch: Character = Character {

name: format!("Ferris"),

class: format!("Rustacean"),

hp: 22

};

let p: &Character = &ch;

let q: &String = &p.name;

The Dada equivalent to this code is as follows:

class Character(

name: String,

klass: String,

hp: u32,

)

let ch: Character = Character("Tzara", "Dadaist", 22)

let p: ref[ch] Character = ch

let q: ref[p] String = p.name

The first thing to note is that, in Dada, the default when you name a variable or a place is to create a reference. So let p = ch doesn't move ch, as it would in Rust, it creates a reference to the Character stored in ch. You could also explicitly write let p = ch.ref, but that is not preferred. Similarly, let q = p.name creates a reference to the value in the field name. (If you wanted to move the character, you would write let ch2 = ch.give, not let ch2 = ch as in Rust.)

Notice that I said let p = ch "creates a reference to the Character stored in ch". In particular, I did not say "creates a reference to ch". That's a subtle choice of wording, but it has big implications.

References in Dada are not pointers

The reason I wrote that let p = ch "creates a reference to the Character stored in ch" and not "creates a reference to ch" is because, in Dada, references are not pointers. Rather, they are shallow copies of the value, very much like how we saw in the previous post that a shared Character acts like an Arc<Character> but is represented as a shallow copy.

So where in Rust the following code…

let ch = Character { ... };

let p = &ch;

let q = &ch.name;

…looks like this in memory…

# Rust memory representation

Stack                       Heap
─────                       ────

┌───► ch: Character {
│ ┌───► name: String {
│ │       buffer: ───────────► "Ferris"
│ │       length: 6
│ │       capacity: 12
│ │     },
│ │     ...
│ │   }
│ │
└──── p
  │
  └── q

in Dada, code like this

let ch = Character(...)

let p = ch

let q = ch.name

would look like so

# Dada memory representation

Stack                       Heap
─────                       ────

ch: Character {
    name: String {
        buffer: ───────┬───► "Ferris"
        length: 6      │
        capacity: 12   │
    },                 │
    ..                 │
}                      │
                       │
p: Character {         │
    name: String {     │
        buffer: ───────┤
        length: 6      │
        capacity: 12   │
        ...            │
    }                  │
}                      │
                       │
q: String {            │
    buffer: ───────────┘
    length: 6
    capacity: 12
}

Clearly, the Dada representation takes up more memory on the stack. But note that it doesn't duplicate the memory in the heap, which tends to be where the vast majority of the data is found.
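As a rough Rust approximation of this "shallow copy" idea (not how Dada is implemented), one could copy the String header bitwise and never free it through the copy. The ShallowRef type below is hypothetical; its lifetime parameter is a Rust-side stand-in for the place tracking that Dada would do in its type system.

```rust
use std::marker::PhantomData;
use std::mem::ManuallyDrop;
use std::ops::Deref;
use std::ptr;

/// A bitwise copy of a `String` header (pointer, length, capacity)
/// that shares the heap buffer and never frees it.
pub struct ShallowRef<'a> {
    copy: ManuallyDrop<String>,
    // Keeps the original borrowed, so it cannot be mutated or dropped
    // while the copy is alive.
    _origin: PhantomData<&'a String>,
}

impl<'a> ShallowRef<'a> {
    pub fn new(original: &'a String) -> Self {
        // SAFETY: the copy is wrapped in `ManuallyDrop`, so the buffer is
        // freed only once (by the original), and the `'a` borrow keeps the
        // original alive and unmodified for as long as the copy exists.
        let copy = unsafe { ptr::read(original) };
        ShallowRef { copy: ManuallyDrop::new(copy), _origin: PhantomData }
    }
}

impl Deref for ShallowRef<'_> {
    type Target = str;

    // Only read access is exposed, so the shared buffer is never mutated.
    fn deref(&self) -> &str {
        self.copy.as_str()
    }
}
```

The copy costs three words on the stack, and as_ptr() on it returns the same heap address as the original, matching the diagram above.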

Dada talks about values not references

This gets at something important. Rust, like C, makes pointers first-class. So given x: &String, x refers to the pointer and *x refers to its referent, the String.

Dada, like Java, goes another way. x: ref String is a String value - including in memory representation! A given String, a shared String, and a ref String all have the same memory layout; they differ only in whether they own their contents.[2]

So in Dada, there is no *x operation to go from "pointer" to "referent". That doesn't make sense. Your variable always contains a string, but the permissions you have to use that string will change.

In fact, the goal is that people don't have to learn the memory representation as they learn Dada. You are supposed to be able to think of Dada variables as if they were all objects on the heap, just like in Java or Python, even though in fact they are stored on the stack.[3]

Rust does not permit moves of borrowed data

In Rust, you cannot move values while they are borrowed. So if you have code like this that moves ch into ch1…

let ch = Character { ... };

let name = &ch.name; // create reference

let ch1 = ch; // moves `ch`

…then this code compiles only if name is not used again. Using it after the move is an error:

let ch = Character { ... };

let name = &ch.name; // create reference

let ch1 = ch; // ERROR: cannot move while borrowed

let name1 = name; // use reference again

…but Dada can

There are two reasons that Rust forbids moves of borrowed data:

- References are pointers, so those pointers may become invalidated. In the example above, name points to the stack slot for ch, so if ch were to be moved into ch1, that makes the reference invalid.

- The type system would lose track of things. Internally, the Rust borrow checker has a kind of "indirection". It knows that ch is borrowed for some span of the code (a "lifetime"), and it knows that the lifetime in the type of name is related to that lifetime, but it doesn't really know that name is borrowed from ch in particular.[4]

Neither of these applies to Dada:

- Because references are not pointers into the stack, but rather shallow copies, moving the borrowed value doesn't invalidate their contents. They remain valid.

- Because Dada's types reference actual variable names, we can modify them to reflect moves.

Dada tracks moves in its types

OK, let's revisit that Rust example that was giving us an error. When we convert it to Dada, we find that it type checks just fine:

class Character(...) // as before

let ch: given Character = Character(...)

let name: ref[ch.name] String = ch.name

// -- originally it was borrowed from `ch`

let ch1 = ch.give

// ------- but `ch` was moved to `ch1`

let name1: ref[ch1.name] String = name

// --- now it is borrowed from `ch1`

Woah, neat! We can see that when we move from ch into ch1, the compiler updates the types of the variables around it. So actually the type of name changes to ref[ch1.name] String. And then when we move from name to name1, that's totally valid.
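The renaming step can be modeled in a few lines of Rust. This is a toy sketch, not the dada-model code: RefType, after_move, join, and borrows_from are hypothetical names, and a reference type is reduced to the set of places it may be borrowed from. The join method is an assumption about how two versions of a type could be unioned where control-flow paths meet.

```rust
use std::collections::BTreeSet;

/// Models `ref[p1, p2, ...] T` by just the set of places it borrows from.
#[derive(Clone, Debug, PartialEq, Eq)]
pub struct RefType {
    places: BTreeSet<String>,
}

impl RefType {
    pub fn new(places: &[&str]) -> Self {
        RefType { places: places.iter().map(|p| p.to_string()).collect() }
    }

    /// Strong update: variable `from` was moved into `to`, so every place
    /// rooted at `from` is rewritten to be rooted at `to`.
    pub fn after_move(&self, from: &str, to: &str) -> Self {
        let places = self
            .places
            .iter()
            .map(|p| match p.strip_prefix(from) {
                // Match `from` itself or `from.field...`, but not `from1`.
                Some(rest) if rest.is_empty() || rest.starts_with('.') => {
                    format!("{to}{rest}")
                }
                _ => p.clone(),
            })
            .collect();
        RefType { places }
    }

    /// Merge of two versions of a type: the union of their places.
    pub fn join(&self, other: &Self) -> Self {
        RefType { places: self.places.union(&other.places).cloned().collect() }
    }

    /// Is the variable `var` (or one of its fields) still borrowed?
    pub fn borrows_from(&self, var: &str) -> bool {
        let prefix = format!("{var}.");
        self.places.iter().any(|p| p == var || p.starts_with(prefix.as_str()))
    }
}
```

With this model, RefType::new(&["ch.name"]).after_move("ch", "ch1") yields the type borrowed from ch1.name, and ch is no longer considered borrowed.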

In PL land, updating the type of a variable from one thing to another is called a "strong update". Obviously things can get a bit complicated when control-flow is involved, e.g., in a situation like this:

let ch = Character(...)

let ch1 = Character(...)

let name = ch.name

if some_condition_is_true() {

// On this path, the type of `name` changes

// to `ref[ch1.name] String`, and so `ch`

// is no longer considered borrowed.

ch1 = ch.give

ch = Character(...) // not borrowed, we can mutate

} else {

// On this path, the type of `name`

// remains unchanged, and `ch` is borrowed.

}

// Here, the types are merged, so the

// type of `name` is `ref[ch.name, ch1.name] String`.

// Therefore, `ch` is considered borrowed here.

Renaming lets us call functions with borrowed values

OK, let's take the next step. Let's define a Dada function that takes an owned value and another value borrowed from it, like the name, and then call it:

fn character_and_name(

ch1: given Character,

name1: ref[ch1.name] String,

) {

// ... does something ...

}

We could call this function like so, as you might expect:

let ch = Character(...)

let name = ch.name

character_and_name(ch.give, name)

So…how does this work? Internally, the type checker type-checks a function call by creating a simpler snippet of code, essentially, and then type-checking that. It's like desugaring but only at type-check time. In this simpler snippet, there are a series of let statements to create temporary variables for each argument. These temporaries always have an explicit type taken from the function signature, and they are initialized with the values of each argument:

// type checker "desugars" `character_and_name(ch.give, name)`

// into more primitive operations:

let tmp1: given Character = ch.give

// --------------- -------

// | taken from the call

// taken from fn sig

let tmp2: ref[tmp1.name] String = name

// --------------------- ----

// | taken from the call

// taken from fn sig,

// but rewritten to use the new

// temporaries

If this type checks, then the type checker knows you have supplied values of the required types, and so this is a valid call. Of course there are a few more steps, but that's the basic idea.

Notice what happens if you supply data borrowed from the wrong place:

let ch = Character(...)

let ch1 = Character(...)

character_and_name(ch.give, ch1.name)

// --- wrong place!

This will fail to type check because you get:

let tmp1: given Character = ch.give

let tmp2: ref[tmp1.name] String = ch1.name

// --------

// has type `ref[ch1.name] String`,

// not `ref[tmp1.name] String`

Class constructors are "just" special functions

So now, if we go all the way back to our original example, we can see how the Message example worked:

class Message(

list: String

items: Vec[ref[self.list] String]

)

Basically, when you construct a Message(list, items), that's "just another function call" from the type system's perspective, except that self in the signature is handled carefully.
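To illustrate the call-checking idea, here is a toy Rust model of the desugaring. It is a sketch under simplifying assumptions, not the dada-model implementation: ParamTy, Arg, and check_call are hypothetical names, types are reduced to "given" or "ref from one place", and the temporaries are assumed to be named tmp1, tmp2, and so on without clashing with user variables.

```rust
/// Declared type of a parameter, reduced to its permission.
#[derive(Clone, Debug)]
pub enum ParamTy {
    Given,
    /// `ref[place]`, where `place` may mention other parameters.
    Ref(String),
}

/// An argument at the call site.
#[derive(Clone, Debug)]
pub enum Arg {
    /// `x.give`: moves variable `x` into the call.
    Give(String),
    /// A value whose current type is `ref[place]`.
    Ref(String),
}

/// Rewrites `place` if it is rooted at variable `from`.
fn rename_root(place: &str, from: &str, to: &str) -> String {
    match place.strip_prefix(from) {
        Some(rest) if rest.is_empty() || rest.starts_with('.') => format!("{to}{rest}"),
        _ => place.to_string(),
    }
}

pub fn check_call(params: &[(&str, ParamTy)], args: &[Arg]) -> bool {
    if params.len() != args.len() {
        return false;
    }
    let tmp = |i: usize| format!("tmp{}", i + 1);
    params.iter().zip(args).all(|((_, ty), arg)| match (ty, arg) {
        (ParamTy::Given, Arg::Give(_)) => true,
        (ParamTy::Ref(expected), Arg::Ref(actual)) => {
            let mut expected = expected.clone();
            let mut actual = actual.clone();
            // Signature side: parameter names become the temporaries.
            for (i, (pname, _)) in params.iter().enumerate() {
                expected = rename_root(&expected, pname, &tmp(i));
            }
            // Call side: moved variables now live in their temporaries.
            for (i, a) in args.iter().enumerate() {
                if let Arg::Give(var) = a {
                    actual = rename_root(&actual, var, &tmp(i));
                }
            }
            expected == actual
        }
        _ => false,
    })
}
```

In this model, passing ch.give together with a reference borrowed from ch.name is accepted, while pairing ch.give with a reference borrowed from ch1.name is rejected, mirroring the two calls above. A constructor call would be checked the same way, with self fields playing the role of parameter names.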

This is modeled, not implemented

I should be clear, this system is modeled in the dada-model repository, which implements a kind of "mini Dada" that captures what I believe to be the most interesting bits. I'm working on fleshing out that model a bit more, but it's got most of what I showed you here.[5] For example, here is a test that you get an error when you give a reference to the wrong value.

The "real implementation" is lagging quite a bit, and doesn't really handle the interesting bits yet. Scaling it up from model to real implementation involves solving type inference and some other thorny challenges, and I haven't gotten there yet - though I have some pretty interesting experiments going on there too, in terms of the compiler architecture.[6]

This could apply to Rust

I believe we could apply most of this system to Rust. Obviously we'd have to rework the borrow checker to be based on places, but that's the straightforward part. The harder bit is the fact that &T is a pointer in Rust, and that we cannot readily change. However, for many use cases of self-references, this isn't as important as it sounds. Often, the data you wish to reference is living in the heap, and so the pointer isn't actually invalidated when the original value is moved.

Consider our opening example. You might imagine Rust allowing something like this:

struct Message {

list: String,

items: Vec<&{self.list} str>,

}

In this case, the str data is heap-allocated, so moving the string doesn't actually invalidate the &str value (it would invalidate an &String value, interestingly).
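A small experiment in today's Rust shows the underlying fact: moving a String moves only its header, while the heap buffer stays where it is. The function name below is made up for illustration.

```rust
/// Moves `list` to a new location and reports whether its heap buffer
/// (the `str` data) kept the same address across the move.
pub fn heap_pointer_survives_move(list: String) -> bool {
    let before = list.as_str().as_ptr();
    // Moving into a `Box` relocates the String header (pointer, length,
    // capacity), but not the bytes that the header points to.
    let moved: Box<String> = Box::new(list);
    let after = moved.as_str().as_ptr();
    before == after
}
```

An &String, by contrast, would point at the header itself, which is exactly the part that changes location.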

In Rust today, the compiler doesn't know all the details of what's going on. String has a Deref impl and so it's quite opaque whether str is heap-allocated or not. But we are working on various changes to this system in the Beyond the & goal, most notably the Field Projections work. There is likely some opportunity to address this in that context, though to be honest I'm behind in catching up on the details.

[1] I'll note in passing that Dada unifies str and String into one type as well. I'll talk in detail about how that works in a future blog post.

[2] This is kind of like C++ references (e.g., String&), which also act "as if" they were a value (i.e., you write s.foo(), not s->foo()), but a C++ reference is truly a pointer, unlike a Dada ref.

[3] This goal was in part inspired by a conversation I had early on within Amazon, where a (quite experienced) developer told me, "It took me months to understand what variables are in Rust".

[4] I explained this some years back in a talk on Polonius at Rust Belt Rust, if you'd like more detail.

[5] No closures or iterator chains!

[6] As a teaser, I'm building it in async Rust, where each inference variable is a "future" and uses "await" to find out when other parts of the code might have added constraints.

27 Feb 2026 10:20am GMT

26 Feb 2026

Hacks.Mozilla.Org: Making WebAssembly a first-class language on the Web

This post is an expanded version of a presentation I gave at the 2025 WebAssembly CG meeting in Munich.

WebAssembly has come a long way since its first release in 2017. The first version of WebAssembly was already a great fit for low-level languages like C and C++, and immediately enabled many new kinds of applications to efficiently target the web.

Since then, the WebAssembly CG has dramatically expanded the core capabilities of the language, adding shared memories, SIMD, exception handling, tail calls, 64-bit memories, and GC support, alongside many smaller improvements such as bulk memory instructions, multiple returns, and reference values.

These additions have allowed many more languages to efficiently target WebAssembly. There's still more important work to do, like stack switching and improved threading, but WebAssembly has narrowed the gap with native in many ways.

Yet, it still feels like something is missing that's holding WebAssembly back from wider adoption on the Web.

There are multiple reasons for this, but the core issue is that WebAssembly is a second-class language on the web. For all of the new language features, WebAssembly is still not integrated with the web platform as tightly as it should be.

This leads to a poor developer experience, which pushes developers to only use WebAssembly when they absolutely need it. Oftentimes JavaScript is simpler and "good enough". This means its users tend to be large companies with enough resources to justify the investment, which then limits the benefits of WebAssembly to only a small subset of the larger Web community.

Solving this issue is hard, and the CG has been focused on extending the WebAssembly language. Now that the language has matured significantly, it's time to take a closer look at this. We'll go deep into the problem, before talking about how WebAssembly Components could improve things.

What makes WebAssembly second-class?

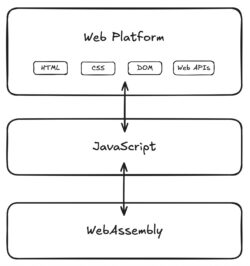

At a very high level, the scripting part of the web platform is layered like this:

WebAssembly can directly interact with JavaScript, which can directly interact with the web platform. WebAssembly can access the web platform, but only by using the special capabilities of JavaScript. JavaScript is a first-class language on the web, and WebAssembly is not.

This wasn't an intentional or malicious design decision; JavaScript is the original scripting language of the Web and co-evolved with the platform. Nonetheless, this design significantly impacts users of WebAssembly.

What are these special capabilities of JavaScript? For today's discussion, there are two major ones:

- Loading of code

- Using Web APIs

Loading of code

WebAssembly code is unnecessarily cumbersome to load. Loading JavaScript code is as simple as just putting it in a script tag:

<script src="script.js"></script>

WebAssembly is not supported in script tags today, so developers need to use the WebAssembly JS API to manually load and instantiate code.

let bytecode = fetch(import.meta.resolve('./module.wasm'));

let imports = { ... };

let { instance } =

await WebAssembly.instantiateStreaming(bytecode, imports);

let exports = instance.exports;

The exact sequence of API calls to use is arcane, and there are multiple ways to perform this process, each of which has different tradeoffs that are not clear to most developers. This process generally just needs to be memorized or generated by a tool for you.

Thankfully, there is the esm-integration proposal, which is already implemented in bundlers today and which we are actively implementing in Firefox. This proposal lets developers import WebAssembly modules from JS code using the familiar JS module system.

import { run } from "/module.wasm";

run();

In addition, it allows a WebAssembly module to be loaded directly from a script tag using type="module":

<script type="module" src="/module.wasm"></script>

This streamlines the most common patterns for loading and instantiating WebAssembly modules. However, while this mitigates the initial difficulty, we quickly run into the real problem.

Using Web APIs

Using a Web API from JavaScript is as simple as this:

console.log("hello, world");

For WebAssembly, the situation is much more complicated. WebAssembly has no direct access to Web APIs and must use JavaScript to access them.

The same single-line console.log program requires the following JavaScript file:

// We need access to the raw memory of the Wasm code, so

// create it here and provide it as an import.

let memory = new WebAssembly.Memory(...);

function consoleLog(messageStartIndex, messageLength) {

// The string is stored in Wasm memory, but we need to

// decode it into a JS string, which is what DOM APIs

// require.

let messageMemoryView = new Uint8Array(

memory.buffer, messageStartIndex, messageLength);

let messageString =

new TextDecoder().decode(messageMemoryView);

// Wasm can't get the `console` global, or do

// property lookup, so we do that here.

return console.log(messageString);

}

// Pass the wrapped Web API to the Wasm code through an

// import.

let imports = {

"env": {

"memory": memory,

"consoleLog": consoleLog,

},

};

let { instance } =

await WebAssembly.instantiateStreaming(bytecode, imports);

instance.exports.run();

And the following WebAssembly file:

(module

;; import the memory from JS code

(import "env" "memory" (memory 0))

;; import the JS consoleLog wrapper function

(import "env" "consoleLog"

(func $consoleLog (param i32 i32))

)

;; export a run function

(func (export "run")

(local $messageStartIndex i32)

(local $messageLength i32)

;; create a string in Wasm memory, store in locals

...

;; call the consoleLog method

local.get $messageStartIndex

local.get $messageLength

call $consoleLog

)

)

Code like this is called "bindings" or "glue code" and acts as the bridge between your source language (C++, Rust, etc.) and Web APIs.

This glue code is responsible for re-encoding WebAssembly data into JavaScript data and vice versa. For example, when returning a string from JavaScript to WebAssembly, the glue code may need to call a malloc function in the WebAssembly module and re-encode the string at the resulting address, after which the module is responsible for eventually calling free.
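The re-encoding step can be sketched in Rust by treating linear memory as a plain byte slice. This is a simplified model of what the glue does, not real bindings: read_message and write_message are hypothetical helpers, and the caller picks the destination offset here, where real glue would obtain it from the module's malloc.

```rust
/// Decodes a UTF-8 message from linear memory, like the JS `consoleLog`
/// wrapper does with `TextDecoder`. Returns `None` if the range is out of
/// bounds or the bytes are not valid UTF-8.
pub fn read_message(memory: &[u8], start: usize, len: usize) -> Option<&str> {
    let end = start.checked_add(len)?;
    let bytes = memory.get(start..end)?;
    std::str::from_utf8(bytes).ok()
}

/// Re-encodes a host string into linear memory at `start` and returns the
/// (start, length) pair that would be handed to the Wasm code.
pub fn write_message(memory: &mut [u8], start: usize, msg: &str) -> Option<(usize, usize)> {
    let end = start.checked_add(msg.len())?;
    memory.get_mut(start..end)?.copy_from_slice(msg.as_bytes());
    Some((start, msg.len()))
}
```

Every string crossing the boundary pays for a bounds check, a copy, and a UTF-8 validation or decode, in one direction or the other.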

This is all very tedious, formulaic, and difficult to write, so it is typical to generate this glue automatically using tools like embind or wasm-bindgen. This streamlines the authoring process, but adds complexity to the build process that native platforms typically do not require. Furthermore, this build complexity is language-specific; Rust code will require different bindings from C++ code, and so on.

Of course, the glue code also has runtime costs. JavaScript objects must be allocated and garbage collected, strings must be re-encoded, structs must be deserialized. Some of this cost is inherent to any bindings system, but much of it is not. This is a pervasive cost that you pay at the boundary between JavaScript and WebAssembly, even when the calls themselves are fast.

This is what most people mean when they ask "When is Wasm going to get DOM support?" It's already possible to access any Web API with WebAssembly, but it requires JavaScript glue code.

Why does this matter?

From a technical perspective, the status quo works. WebAssembly runs on the web and many people have successfully shipped software with it.

From the average web developer's perspective, though, the status quo is subpar. WebAssembly is too complicated to use on the web, and you can never escape the feeling that you're getting a second class experience. In our experience, WebAssembly is a power user feature that average developers don't use, even if it would be a better technical choice for their project.

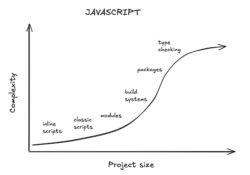

The average developer experience for someone getting started with JavaScript is something like this:

There's a nice gradual curve where you use progressively more complicated features as the scope of your project increases.

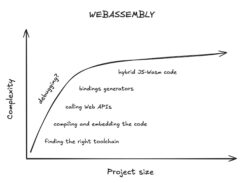

By comparison, the average developer experience for someone getting started with WebAssembly is something like this:

You immediately must scale "the wall" of wrangling the many different pieces to work together. The end result is often only worth it for large projects.

Why is this the case? There are several reasons, and they all directly stem from WebAssembly being a second class language on the web.

1. It's difficult for compilers to provide first-class support for the web

Any language targeting the web can't just generate a Wasm file, but also must generate a companion JS file to load the Wasm code, implement Web API access, and handle a long tail of other issues. This work must be redone for every language that wants to support the web, and it can't be reused for non-web platforms.

Upstream compilers like Clang/LLVM don't want to know anything about JS or the web platform, and not just for lack of effort. Generating and maintaining JS and web glue code is a specialty skill that is difficult for already stretched-thin maintainers to justify. They just want to generate a single binary, ideally in a standardized format that can also be used on platforms besides the web.

2. Standard compilers don't produce WebAssembly that works on the web

The result is that support for WebAssembly on the web is often handled by third-party unofficial toolchain distributions that users need to find and learn. A true first-class experience would start with the tool that users already know and have installed.

This is, unfortunately, many developers' first roadblock when getting started with WebAssembly. They assume that if they just have rustc installed and pass a --target=wasm flag that they'll get something they could load in a browser. You may be able to get a WebAssembly file doing that, but it will not have any of the required platform integration. If you figure out how to load the file using the JS API, it will fail for mysterious and hard-to-debug reasons. What you really need is the unofficial toolchain distribution which implements the platform integration for you.

3. Web documentation is written for JavaScript developers

The web platform has incredible documentation compared to most tech platforms. However, most of it is written for JavaScript. If you don't know JavaScript, you'll have a much harder time understanding how to use most Web APIs.

A developer wanting to use a new Web API must first understand it from a JavaScript perspective, then translate it into the types and APIs that are available in their source language. Toolchain developers can try to manually translate the existing web documentation for their language, but that is a tedious and error prone process that doesn't scale.

4. Calling Web APIs can still be slow

If you look at all of the JS glue code for the single call to console.log above, you'll see that there is a lot of overhead. Engines have spent a lot of time optimizing this, and more work is underway. Yet this problem still exists. It doesn't affect every workload, but it's something every WebAssembly user needs to be careful about.

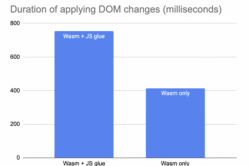

Benchmarking this is tricky, but we ran an experiment in 2020 to precisely measure the overhead that JS glue code has in a real world DOM application. We built the classic TodoMVC benchmark in the experimental Dodrio Rust framework and measured different ways of calling DOM APIs.

Dodrio was perfect for this because it computed all the required DOM modifications separately from actually applying them. This allowed us to precisely measure the impact of JS glue code by swapping out the "apply DOM change list" function while keeping the rest of the benchmark exactly the same.

We tested two different implementations:

- "Wasm + JS glue": A WebAssembly function which reads the change list in a loop, and then asks JS glue code to apply each change individually. This is the performance of WebAssembly today.

- "Wasm only": A WebAssembly function which reads the change list in a loop, and then uses an experimental direct binding to the DOM which skips JS glue code. This is the performance of WebAssembly if we could skip JS glue code.

The duration to apply the DOM changes dropped by 45% when we were able to remove JS glue code. Put another way, the glue code made applying the changes roughly 1.8x slower (1 / 0.55). DOM operations can already be expensive; WebAssembly users can't afford to pay a nearly 2x performance tax on top of that. And as this experiment shows, it is possible to remove the overhead.

5. You always need to understand the JavaScript layer

There's a saying that "abstractions are always leaky".

The state of the art for WebAssembly on the web is that every language builds their own abstraction of the web platform using JavaScript. But these abstractions are leaky. If you use WebAssembly on the web in any serious capacity, you'll eventually hit a point where you need to read or write your own JavaScript to make something work.

This adds a conceptual layer which is a burden for developers. It feels like it should just be enough to know your source language, and the web platform. Yet for WebAssembly, we require users to also know JavaScript in order to be a proficient developer.

How can we fix this?

This is a complicated technical and social problem, with no single solution. We also have competing priorities for what is the most important problem with WebAssembly to fix first.

Let's ask ourselves: In an ideal world, what could help us here?

What if we had something that was:

- A standardized self-contained executable artifact

- Supported by multiple languages and toolchains

- Which handles loading and linking of WebAssembly code

- Which supports Web API usage

If such a thing existed, languages could generate these artifacts and browsers could run them, without any JavaScript involved. This format would be easier for languages to support and could potentially exist in standard upstream compilers, runtimes, toolchains, and popular packages without the need for third-party distributions. In effect, we could go from a world where every language re-implements the web platform integration using JavaScript, to sharing a common one that is built directly into the browser.

It would obviously be a lot of work to design and validate a solution! Thankfully, we already have a proposal with these goals that has been in development for years: the WebAssembly Component Model.

What is a WebAssembly Component?

For our purposes, a WebAssembly Component defines a high-level API that is implemented with a bundle of low-level WebAssembly code. It's a standards-track proposal in the WebAssembly CG that's been in development since 2021.

Already today, WebAssembly Components…

- Can be created from many different programming languages.

- Can be executed in many different runtimes (including in browsers today, with a polyfill).

- Can be linked together to allow code re-use between different languages.

- Allow WebAssembly code to directly call Web APIs.

If you're interested in more details, check out the Component Book or watch "What is a Component?".

We feel that WebAssembly Components have the potential to give WebAssembly a first-class experience on the web platform, and to be the missing link described above.

How could they work?

Let's try to re-create the earlier console.log example using only WebAssembly Components and no JavaScript.

NOTE: The interactions between WebAssembly Components and the web platform have not been fully designed, and the tooling is under active development.

Take this as an aspiration for how things could be, not a tutorial or promise.

The first step is to specify which APIs our application needs. This is done using an IDL called WIT. For our example, we need the Console API. We can import it by specifying the name of the interface.

component {

import std:web/console;

}

The std:web/console interface does not exist today, but would hypothetically come from the official WebIDL that browsers use for describing Web APIs. This particular interface might look like this:

package std:web;

interface console {

log: func(msg: string);

...

}

Now that we have the above interfaces, we can use them when writing a Rust program that compiles to a WebAssembly Component:

use std::web::console;

fn main() {

console::log("hello, world");

}

Once we have a component, we can load it into the browser using a script tag.

<script type="module" src="component.wasm"></script>

And that's it! The browser would automatically load the component, bind the native web APIs directly (without any JS glue code), and run the component.

This is great if your whole application is written in WebAssembly. However, most WebAssembly usage is part of a "hybrid application" which also contains JavaScript. We also want to simplify this use case. The web platform shouldn't be split into "silos" that can't interact with each other. Thankfully, WebAssembly Components also address this by supporting cross-language interoperability.

Let's create a component that exports an image decoder for use from JavaScript code. First we need to write the interface that describes the image decoder:

interface image-lib {

record pixel {

r: u8;

g: u8;

b: u8;

a: u8;

}

resource image {

from-stream:

static async func(bytes: stream<u8>) -> result<image>;

get: func(x: u32, y: u32) -> pixel;

}

}

component {

export image-lib;

}

Once we have that, we can write the component in any language that supports components. The right language will depend on what you're building or what libraries you need to use. For this example, I'll leave the implementation of the image decoder as an exercise for the reader.

The component can then be loaded in JavaScript as a module. The image decoder interface we defined is accessible to JavaScript, and can be used as if you were importing a JavaScript library to do the task.

import { Image } from "image-lib.wasm";

let byteStream = (await fetch("/image.file")).body;

let image = await Image.fromStream(byteStream);

let pixel = image.get(0, 0);

console.log(pixel); // { r: 255, g: 255, b: 0, a: 255 }

Next Steps

As it stands today, we think that WebAssembly Components would be a step in the right direction for the web. Mozilla is working with the WebAssembly CG to design the WebAssembly Component Model. Google is also evaluating it at this time.

If you're interested in trying this out, learn to build your first component and run it in the browser using Jco or from the command line using Wasmtime. The tooling is under heavy development, and contributions and feedback are welcome. If you're interested in the in-development specification itself, check out the component-model proposal repository.

WebAssembly has come a long way since it was first released in 2017. I think the best is still yet to come if we're able to turn it from a "power user" feature into something that average developers can benefit from.

The post Making WebAssembly a first-class language on the Web appeared first on Mozilla Hacks - the Web developer blog.

26 Feb 2026 4:02pm GMT

25 Feb 2026

Planet Mozilla

This Week In Rust: This Week in Rust 640

Hello and welcome to another issue of This Week in Rust! Rust is a programming language empowering everyone to build reliable and efficient software. This is a weekly summary of its progress and community. Want something mentioned? Tag us at @thisweekinrust.bsky.social on Bluesky or @ThisWeekinRust on mastodon.social, or send us a pull request. Want to get involved? We love contributions.

This Week in Rust is openly developed on GitHub and archives can be viewed at this-week-in-rust.org. If you find any errors in this week's issue, please submit a PR.

Want TWIR in your inbox? Subscribe here.

Updates from Rust Community

Official

Foundation

Project/Tooling Updates

- Zed: Split Diffs are Here

- CHERIoT Rust: Status update #0

- SeaORM now supports Arrow & Parquet

- Releasing bincode-next v3.0.0-rc.1

- Introducing Almonds

- SafePilot v0.1: self-hosted AI assistant

- Hitbox 0.2.0: declarative cache orchestration

Observations/Thoughts

- What it means that Ubuntu is using Rust

- Read Locks Are Not Your Friends

- Achieving Zero Bugs: Rust, Specs, and AI Coding

- [video] device-envoy: Making Embedded Fun with Rust - by Carl Kadie

Rust Walkthroughs

- About memory pressure, lock contention, and Data-oriented Design

- Breaking SHA-2: length extension attacks in practice with Rust

- device-envoy: Making Embedded Fun with Rust, Embassy, and Composable Device Abstractions

Research

Miscellaneous

Crate of the Week

This week's crate is docstr, a macro crate providing a macro to create multiline strings out of doc comments.

Thanks to Nik Revenco for the self-suggestion!

Please submit your suggestions and votes for next week!

Calls for Testing

An important step for RFC implementation is for people to experiment with the implementation and give feedback, especially before stabilization.

If you are a feature implementer and would like your RFC to appear in this list, add a call-for-testing label to your RFC along with a comment providing testing instructions and/or guidance on which aspect(s) of the feature need testing.

No calls for testing were issued this week by Rust, Cargo, Rustup or Rust language RFCs.

Let us know if you would like your feature to be tracked as a part of this list.

Call for Participation; projects and speakers

CFP - Projects

Always wanted to contribute to open-source projects but did not know where to start? Every week we highlight some tasks from the Rust community for you to pick and get started!

Some of these tasks may also have mentors available, visit the task page for more information.

No Calls for participation were submitted this week.

If you are a Rust project owner and are looking for contributors, please submit tasks here or through a PR to TWiR or by reaching out on Bluesky or Mastodon!

CFP - Events

Are you a new or experienced speaker looking for a place to share something cool? This section highlights events that are being planned and are accepting submissions to join their event as a speaker.

- Rust India Conference 2026 | CFP open until 2026-03-14 | Bangalore, IN | 2026-04-18

- Oxidize Conference | CFP open until 2026-03-23 | Berlin, Germany | 2026-09-14 - 2026-09-16

- EuroRust | CFP open until 2026-04-27 | Barcelona, Spain | 2026-10-14 - 2026-10-17

If you are an event organizer hoping to expand the reach of your event, please submit a link to the website through a PR to TWiR or by reaching out on Bluesky or Mastodon!

Updates from the Rust Project

450 pull requests were merged in the last week

Compiler

- bring back enum DepKind

- simplify the canonical enum clone branches to a copy statement

- stabilize if let guards (feature(if_let_guard))

Library

- add try_shrink_to and try_shrink_to_fit to Vec

- fixed ByteStr not padding within its Display trait when no specific alignment is mentioned

- reflection TypeId::trait_info_of

- reflection TypeKind::FnPtr

- just pass Layout directly to box_new_uninit

- stabilize cfg_select!

Cargo

- cli: Remove --lockfile-path

- job_queue: Handle Clippy CLI arguments in fix message

- fix parallel locking when -Zfine-grain-locking is enabled

Clippy

- add unnecessary_trailing_comma lint

- add new disallowed_fields lint

- clone_on_ref_ptr: don't add a & to the receiver if it's a reference

- needless_maybe_sized: don't lint in proc-macro-generated code

- str_to_string: false positive non-str types

- useless_conversion: also fire inside compiler desugarings

- add allow-unwrap-types configuration for unwrap_used and expect_used

- add brackets around unsafe or labeled block used in else

- allow deprecated(since = "CURRENT_RUSTC_VERSION")

- do not suggest removing reborrow of a captured upvar

- enhance collapsible_match to cover if-elses

- enhance manual_is_variant_and to cover filter chaining is_some

- fix explicit_counter_loop false negative when loop counter starts at non-zero

- fix join_absolute_paths to work correctly depending on the platform

- fix redundant_iter_cloned false positive with move closures and coroutines

- fix unnecessary_min_or_max for usize

- fix panic/assert message detection in edition 2015/2018

- handle Result<T, !> and ControlFlow<!, T> as T wrt #[must_use]

- make unchecked_time_subtraction to better handle Duration literals

- make unnecessary_fold commutative

- the path from a type to itself is Self

Rust-Analyzer

- add partial selection for generate_getter_or_setter

- offer block let fallback postfix complete

- offer on is_some_and for replace_is_method_with_if_let_method

- fix some TryEnum reference assists

- add handling for cycles in sizedness_constraint_for_ty()

- better import placement + merging

- complete .let on block tail prefix expression

- complete derive helpers on empty nameref

- correctly parenthesize inverted condition in convert_if_to_bool_…

- exclude macro refs in tests when excludeTests is enabled

- fix another case where we forgot to put the type param for PartialOrd and PartialEq in builtin derives

- fix predicates of builtin derive traits with two parameters defaulting to Self

- generate method assist uses enclosing impl block instead of first found

- no complete suggest param in complex pattern

- offer toggle_macro_delimiter in nested macro

- prevent qualifying parameter names in add_missing_impl_members

- implement Span::SpanSouce for proc-macro-srv

Rust Compiler Performance Triage

Overall, a bit more noise than usual this week, but mostly a slight improvement, with several low-level optimizations at MIR and LLVM IR building landing. Also fewer commits landed than usual, mostly due to GitHub CI issues during the week.

Triage done by @simulacrum. Revision range: 3c9faa0d..eeb94be7

3 Regressions, 4 Improvements, 4 Mixed; 3 of them in rollups

24 artifact comparisons made in total

Approved RFCs

Changes to Rust follow the Rust RFC (request for comments) process. These are the RFCs that were approved for implementation this week:

- No RFCs were approved this week.

Final Comment Period

Every week, the team announces the 'final comment period' for RFCs and key PRs which are reaching a decision. Express your opinions now.

Tracking Issues & PRs

- Gate #![reexport_test_harness_main] properly

- Observe close(2) errors for std::fs::{copy, write}

- warn on empty precision

- refactor 'valid for read/write' definition: exclude null

- Remove -Csoft-float

- Place-less cg_ssa intrinsics

- Optimize repr(Rust) enums by omitting tags in more cases involving uninhabited variants.

- Proposal for a dedicated test suite for the parallel frontend

- Promote tier 3 riscv32 ESP-IDF targets to tier 2

- Proposal for Adapt Stack Protector for Rust

No Items entered Final Comment Period this week for Rust RFCs, Language Reference, Language Team, Leadership Council or Unsafe Code Guidelines.

Let us know if you would like your PRs, Tracking Issues or RFCs to be tracked as a part of this list.

New and Updated RFCs

- Cargo: hints.min-opt-level

- Cargo RFC for min publish age

- Place traits

- RFC: Extend manifest dependencies with used

Upcoming Events

Rusty Events between 2026-02-25 - 2026-03-25 🦀

Virtual

- 2026-02-25 | Virtual (Cardiff, UK) | Rust and C++ Cardiff

- 2026-02-25 | Virtual (Girona, ES) | Rust Girona

- 2026-02-26 | Virtual (Berlin, DE) | Rust Berlin

- 2026-03-04 | Virtual (Indianapolis, IN, US) | Indy Rust

- 2026-03-05 | Virtual (Charlottesville, VA, US) | Charlottesville Rust Meetup

- 2026-03-05 | Virtual (Nürnberg, DE) | Rust Nuremberg

- 2026-03-07 | Virtual (Kampala, UG) | Rust Circle Meetup

- 2026-03-10 | Virtual (Dallas, TX, US) | Dallas Rust User Meetup

- 2026-03-10 | Virtual (London, UK) | Women in Rust

- 2026-03-11 | Virtual (Girona, ES) | Rust Girona

- 2026-03-12 | Virtual (Berlin, DE) | Rust Berlin

- 2026-03-17 | Virtual (Washington, DC, US) | Rust DC

- 2026-03-18 | Virtual (Girona, ES) | Rust Girona

- 2026-03-18 | Virtual (Vancouver, BC, CA) | Vancouver Rust

- 2026-03-19 | Hybrid (Seattle, WA, US) | Seattle Rust User Group

- 2026-03-20 | Virtual | Packt Publishing Limited

- 2026-03-24 | Virtual (Dallas, TX, US) | Dallas Rust User Meetup

- 2026-03-24 | Virtual (London, UK) | Women in Rust

- 2026-03-25 | Virtual (Girona, ES) | Rust Girona

Asia

- 2026-03-22 | Tel Aviv-yafo, IL | Rust 🦀 TLV

Europe

- 2026-02-25 | Copenhagen, DK | Copenhagen Rust Community

- 2026-02-26 | Prague, CZ | Rust Czech Republic

- 2026-02-28 | Stockholm, SE | Stockholm Rust

- 2026-03-04 | Barcelona, ES | BcnRust

- 2026-03-04 | Hamburg, DE | Rust Meetup Hamburg

- 2026-03-04 | Oxford, UK | Oxford ACCU/Rust Meetup.

- 2026-03-05 | Oslo, NO | Rust Oslo

- 2026-03-11 | Amsterdam, NL | Rust Developers Amsterdam Group

- 2026-03-12 | Geneva, CH | Post Tenebras Lab

- 2026-03-18 | Dortmund, DE | Rust Dortmund

- 2026-03-19 - 2026-03-20 | | Rustikon

- 2026-03-24 | Aarhus, DK | Rust Aarhus

North America

- 2026-02-25 | Austin, TX, US | Rust ATX

- 2026-02-25 | Los Angeles, CA, US | Rust Los Angeles

- 2026-02-26 | Atlanta, GA, US | Rust Atlanta

- 2026-02-26 | New York, NY, US | Rust NYC

- 2026-02-28 | Boston, MA, US | Boston Rust Meetup

- 2026-03-05 | Saint Louis, MO, US | STL Rust

- 2026-03-07 | Boston, MA, US | Boston Rust Meetup

- 2026-03-14 | Boston, MA, US | Boston Rust Meetup

- 2026-03-17 | San Francisco, CA, US | San Francisco Rust Study Group

- 2026-03-19 | Hybrid (Seattle, WA, US) | Seattle Rust User Group

- 2026-03-21 | Boston, MA, US | Boston Rust Meetup

- 2026-03-25 | Austin, TX, US | Rust ATX

Oceania

- 2026-03-26 | Melbourne, VIC, AU | Rust Melbourne

If you are running a Rust event please add it to the calendar to get it mentioned here. Please remember to add a link to the event too. Email the Rust Community Team for access.

Jobs

Please see the latest Who's Hiring thread on r/rust

Quote of the Week

This is actually just Rust adding support for C++-style duck-typed templates, and the long and mostly-irrelevant information contained in the ICE message is part of the experience.

Thanks to Kyllingene for the suggestion!

Please submit quotes and vote for next week!

This Week in Rust is edited by:

- nellshamrell

- llogiq

- ericseppanen

- extrawurst

- U007D

- mariannegoldin

- bdillo

- opeolluwa

- bnchi

- KannanPalani57

- tzilist

Email list hosting is sponsored by The Rust Foundation

25 Feb 2026 5:00am GMT

24 Feb 2026

Planet Mozilla

The Mozilla Blog: How to turn off AI features in Firefox, or choose the ones you want

Other browsers force AI features on users. Firefox gives you a choice.

In the latest desktop version of Firefox, you'll find an AI controls section where you can turn off AI features entirely - or decide which ones stay on. Here's how to set things up the way you want.

But first, what AI features can you manage in Firefox?

AI is a broad term. Traditional machine learning - like systems that classify, rank or personalize an experience - has been part of browsers for a while.

AI controls focus on newer AI-enhanced features in Firefox, including website translations, image alt text in PDFs, AI-enhanced tab groups, link previews and an AI chatbot in the sidebar.

Block AI features with a single switch

To block current and future AI-enhanced features:

- Menu bar > Firefox > Settings (or Preferences) > AI Controls

- Turn on Block AI enhancements

Or block specific features

You can also manage AI features individually. Block link previews? Up to you. Change your mind on translations? You can turn them on any time, while keeping all other AI features blocked.

To block specific features:

- Menu bar > Firefox > Settings (or Preferences) > AI Controls

- Find the AI feature > dropdown menu > Blocked

In the dropdown menu, Available means you can see and use the feature; Enabled means you've opted in to use it; and Blocked means it's hidden and can't be used.

Choose the AI features you want to use

Enable image alt text in PDFs to improve accessibility. Keep AI-enhanced tab group suggestions if they're useful. Block anything that isn't - the decision is yours.

You can update your choices in Settings at any time.

For more details on AI controls, head to our Mozilla Support page. Have feedback? We'd love to hear it on Mozilla Connect - or come chat with us during our Reddit AMA on Feb. 26.

The post How to turn off AI features in Firefox, or choose the ones you want appeared first on The Mozilla Blog.

24 Feb 2026 5:56pm GMT

Firefox Developer Experience: Firefox WebDriver Newsletter 148

WebDriver is a remote control interface that enables introspection and control of user agents. As such it can help developers to verify that their websites are working and performing well with all major browsers. The protocol is standardized by the W3C and consists of two separate specifications: WebDriver classic (HTTP) and the new WebDriver BiDi (Bi-Directional).

This newsletter gives an overview of the work we've done as part of the Firefox 148 release cycle.

Contributions

Firefox is an open source project, and we are always happy to receive external code contributions to our WebDriver implementation. We want to give special thanks to everyone who filed issues, bugs and submitted patches.

In Firefox 148, a WebDriver bug was fixed by a contributor:

WebDriver code is written in JavaScript, Python, and Rust so any web developer can contribute! Read how to set up the work environment and check the list of mentored issues for Marionette, or the list of mentored JavaScript bugs for WebDriver BiDi. Join our chatroom if you need any help to get started!

General

- Fixed a race condition during initialization of required browser features when opening a new window, preventing issues when navigating immediately to another URL.

- Fixed an interoperability issue between Marionette and WebDriver BiDi where the BiDi clientWindow ID was incorrectly used as a window handle in Marionette.

WebDriver BiDi

- Added initial support for interacting with the browser's chrome scope (the Firefox window itself). The browsingContext.getTree command now accepts the vendor-specific moz:scope parameter and returns chrome contexts when set to chrome and Firefox was started with the --remote-allow-system-access argument. These contexts can be used with script.evaluate and script.callFunction to execute privileged JavaScript with access to Gecko APIs. Other commands do not yet support chrome contexts, but support will be added incrementally as needed.

- Updated the emulation.setGeolocationOverride and emulation.setScreenOrientationOverride commands to implement the new reset behavior: contexts are reset only when the contexts parameter is provided, and user contexts only when the userContexts parameter is specified.

- Fixed a race condition in browsingContext.create where opening a new tab in the foreground could return before the document became visible.

- Fixed an issue that occurred when a navigation redirected to an error page.

- Fixed an issue in network.getData that caused a RangeError when decoding chunked response bodies due to a size mismatch.

- Fixed an issue where the browsingContext.userPromptOpened and browsingContext.userPromptClosed events incorrectly reported the top-level context ID instead of the iframe's context ID.

- Improved the performance of WebDriver BiDi commands by approximately 100 ms when the selected context is no longer available during the command execution.
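As a rough illustration of the chrome-scope support described in the list above, the following sketch builds the JSON payloads a client might send over a WebDriver BiDi connection. The command names, the moz:scope parameter and its chrome value come from the notes above; the id/method/params framing follows the general WebDriver BiDi message shape. The helper names, the context id and the evaluated expression are hypothetical, and the transport (a WebSocket to the browser) is left out.

```javascript
// Sketch only: builds WebDriver BiDi command payloads as JSON strings.
// Helper names and the context id used below are hypothetical.

function bidiCommand(id, method, params = {}) {
  return JSON.stringify({ id, method, params });
}

// Ask for chrome contexts via the vendor-specific moz:scope parameter.
// Per the release notes, Firefox must have been started with
// --remote-allow-system-access for chrome contexts to be returned.
function getChromeTree(id) {
  return bidiCommand(id, "browsingContext.getTree", { "moz:scope": "chrome" });
}

// Evaluate an expression in a context id taken from the getTree result.
function evaluateIn(id, contextId, expression) {
  return bidiCommand(id, "script.evaluate", {
    expression,
    target: { context: contextId },
    awaitPromise: false,
  });
}

const treeMessage = getChromeTree(1);
const evalMessage = evaluateIn(2, "hypothetical-context-id", "window.location.href");
console.log(treeMessage);
console.log(evalMessage);
```

In a real session the context id would be read from the browsingContext.getTree response rather than hard-coded.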

Marionette

24 Feb 2026 2:26pm GMT

Hacks.Mozilla.Org: Goodbye innerHTML, Hello setHTML: Stronger XSS Protection in Firefox 148

Cross-site scripting (XSS) remains one of the most prevalent vulnerabilities on the web. The new standardized Sanitizer API provides a straightforward way for web developers to sanitize untrusted HTML before inserting it into the DOM. Firefox 148 is the first browser to ship this standardized security enhancing API, advancing a safer web for everyone. We expect other browsers to follow soon.

An XSS vulnerability arises when a website inadvertently lets attackers inject arbitrary HTML or JavaScript through user-generated content. With this attack, an attacker could monitor and manipulate user interactions and continually steal user data for as long as the vulnerability remains exploitable. XSS has a long history of being notoriously difficult to prevent and has ranked among the top three web vulnerabilities (CWE-79) for nearly a decade.

Firefox has been deeply involved in solutions for XSS from the beginning, starting with spearheading the Content-Security-Policy (CSP) standard in 2009. CSP allows websites to restrict which resources (scripts, styles, images, etc.) the browser can load and execute, providing a strong line of defense against XSS. Despite a steady stream of improvements and ongoing maintenance, CSP did not gain sufficient adoption to protect the long tail of the web as it requires significant architectural changes for existing web sites and continuous review by security experts.

The Sanitizer API is designed to help fill that gap by providing a standardized way to turn malicious HTML into harmless HTML - in other words, to sanitize it. The setHTML() method integrates sanitization directly into HTML insertion, providing safety by default. Here is an example of sanitizing a simple piece of unsafe HTML:

document.body.setHTML(`<h1>Hello my name is <img src="x"

onclick="alert('XSS')">`);

This sanitization will allow the HTML <h1> element while removing the embedded <img> element and its onclick attribute, thereby eliminating the XSS attack and resulting in the following safe HTML:

<h1>Hello my name is</h1>

Developers can opt into stronger XSS protections with minimal code changes by replacing error-prone innerHTML assignments with setHTML(). If the default configuration of setHTML() is too strict (or not strict enough) for a given use case, developers can provide a custom configuration that defines which HTML elements and attributes should be kept or removed. To experiment with the Sanitizer API before introducing it on a web page, we recommend exploring the Sanitizer API playground.
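To make the migration path a little more concrete, here is a minimal sketch of replacing an innerHTML assignment with setHTML(), with an optional custom configuration. The helper name insertUntrusted, the fallback to textContent for browsers without setHTML(), and the particular allow-list are assumptions for illustration and are not taken from the post.

```javascript
// Hypothetical helper: insert untrusted HTML through setHTML() when the
// browser provides it, otherwise fall back to inserting it as inert text.
function insertUntrusted(element, html, sanitizerConfig) {
  if (typeof element.setHTML === "function") {
    if (sanitizerConfig) {
      // Custom configuration passed through the `sanitizer` option.
      element.setHTML(html, { sanitizer: sanitizerConfig });
    } else {
      // Default configuration: safe by default.
      element.setHTML(html);
    }
    return "sanitized";
  }
  // No Sanitizer API available: never fall back to innerHTML here.
  element.textContent = html;
  return "text";
}

// Assumed example configuration: keep only a few elements and attributes.
const commentConfig = {
  elements: ["p", "b", "i", "a"],
  attributes: ["href"],
};

// In a page, usage might look like:
//   insertUntrusted(document.querySelector("#comment"), userHtml, commentConfig);
```

Falling back to textContent keeps the unsupported-browser path safe at the cost of showing markup as literal text; a site that needs rich text everywhere would need a different fallback.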

For even stronger protections, the Sanitizer API can be combined with Trusted Types, which centralize control over HTML parsing and injection. Once setHTML() is adopted, sites can enable Trusted Types enforcement more easily, often without requiring complex custom policies. A strict policy can allow setHTML() while blocking other unsafe HTML insertion methods, helping prevent future XSS regressions.

The Sanitizer API enables an easy replacement of innerHTML assignments with setHTML() in existing code, introducing a new safer default to protect users from XSS attacks on the web. Firefox 148 supports the Sanitizer API as well as Trusted Types, which creates a safer web experience. Adopting these standards will allow all developers to prevent XSS without the need for a dedicated security team or significant implementation changes.

Image credits for the illustration above: Website, by Desi Ratna; Person, by Made by Made; Hacker by Andy Horvath.

The post Goodbye innerHTML, Hello setHTML: Stronger XSS Protection in Firefox 148 appeared first on Mozilla Hacks - the Web developer blog.

24 Feb 2026 1:00pm GMT

Firefox Tooling Announcements: Firefox Profiler Deployment (February 24, 2026)

The latest version of the Firefox Profiler is now live! Check out the full changelog below to see what's changed.

Highlights:

- [fatadel] Provide correct instructions on the home page for Firefox for Android (Fenix) (#5816)

- [Markus Stange] Migrate to esbuild (#5589)

- [Nazım Can Altınova] Make the source table non-optional in the gecko profile format (#5842)

Other Changes:

- [Markus Stange] Move transparent fill check to a less expensive place. (#5826)

- [fatadel] Refine docs for ctrl key (#5831)

- [Nazım Can Altınova] Update the team members inside CONTRIBUTING.md (#5829)

- [Markus Stange] Make markers darker (#5839)

- [Markus Stange] Directly use photon-colors (#5821)

- [Markus Stange] Use data URLs for SVG files. (#5845)

- [Markus Stange] Tweak dark mode colors some more. (#5846)

- [fatadel] fix foreground color of the button on error page (#5849)

- [Nazım Can Altınova] Sync: l10n → main (February 24, 2026) (#5855)

Big thanks to our amazing localizers for making this release possible:

- de: Michael Köhler

- el: Jim Spentzos

- en-GB: Ian Neal

- es-CL: ravmn

- fr: Théo Chevalier

- fy-NL: Fjoerfoks

- a: Melo46

- it: Francesco Lodolo [:flod]

- nl: Mark Heijl

- ru: Valery Ledovskoy

- sv-SE: Andreas Pettersson

- zh-TW: Pin-guang Chen

Find out more about the Firefox Profiler on profiler.firefox.com! If you have any questions, join the discussion on our Matrix channel!

24 Feb 2026 12:15pm GMT

23 Feb 2026

Planet Mozilla

Niko Matsakis: What it means that Ubuntu is using Rust

Righty-ho, I'm back from Rust Nation, and busily horrifying my teenage daughter with my (admittedly atrocious) attempts at doing an English accent1. It was a great trip with a lot of good conversations and some interesting observations. I am going to try to blog about some of them, starting with some thoughts spurred by Jon Seager's closing keynote, "Rust Adoption At Scale with Ubuntu".

There are many chasms out there

For some time now I've been debating with myself, has Rust "crossed the chasm"? If you're not familiar with that term, it comes from a book that gives a kind of "pop-sci" introduction to the Technology Adoption Life Cycle.

The answer, of course, is it depends on who you ask. Within Amazon, where I have the closest view, the answer is that we are "most of the way across": Rust is squarely established as the right way to build at-scale data planes or resource-aware agents and it is increasingly seen as the right choice for low-level code in devices and robotics as well - but there remains a lingering perception that Rust is useful for "those fancy pants developers at S3" (or wherever) but a bit overkill for more average development3.

On the other hand, within the realm of Safety Critical Software, as Pete LeVasseur wrote in a recent rust-lang blog post, Rust is still scrabbling for a foothold. There are a number of successful products but most of the industry is in a "wait and see" mode, letting the early adopters pave the path.

"Crossing the chasm" means finding "reference customers"

The big idea that I at least took away from reading Crossing the Chasm and other references on the technology adoption life cycle is the need for "reference customers". When you first start out with something new, you are looking for pioneers and early adopters that are drawn to new things:

What an early adopter is buying [..] is some kind of change agent. By being the first to implement this change in the industry, the early adopters expect to get a jump on the competition. - from Crossing the Chasm

But as your technology matures, you have to convince people with a lower and lower tolerance for risk:

The early majority want to buy a productivity improvement for existing operations. They are looking to minimize discontinuity with the old ways. They want evolution, not revolution. - from Crossing the Chasm

So what is most convincing to people to try something new? The answer is seeing that others like them have succeeded.

You can see this at play in both the Amazon example and the Safety Critical Software example. Clearly seeing Rust used for network services doesn't mean it's ready to be used in your car's steering column4. And even within network services, seeing a group like S3 succeed with Rust may convince other groups building at-scale services to try Rust, but doesn't necessarily persuade a team to use Rust for their next CRUD service. And frankly, it shouldn't! They are likely to hit obstacles.

Ubuntu is helping Rust "cross the (user-land linux) chasm"

All of this was on my mind as I watched the keynote by Jon Seager, the VP of Engineering at Canonical, which is the company behind Ubuntu. Similar to Lars Bergstrom's epic keynote from years past on Rust adoption within Google, Jon laid out a pitch for why Canonical is adopting Rust that was at once visionary and yet deeply practical.

"Visionary and yet deeply practical" is pretty much the textbook description of what we need to cross from early adopters to early majority. We need folks who care first and foremost about delivering the right results, but are open to new ideas that might help them do that better; folks who can stand on both sides of the chasm at once.

Jon described how Canonical focuses their own development on a small set of languages: Python, C/C++, and Go, and how they had recently brought in Rust and were using it as the language of choice for new foundational efforts, replacing C, C++, and (some uses of) Python.

Ubuntu is building the bridge across the chasm

Jon talked about how he sees it as part of Ubuntu's job to "pay it forward" by supporting the construction of memory-safe foundational utilities. Jon meant support both in terms of finances - Canonical is sponsoring the Trifecta Tech Foundation's work to develop sudo-rs and ntpd-rs and sponsoring the uutils org's work on coreutils - and in terms of reputation. Ubuntu can take on the risk of doing something new, prove that it works, and then let others benefit.

Remember how the Crossing the Chasm book described early majority people? They are "looking to minimize discontinuity with the old ways". And what better way to do that than to have drop-in utilities that fit within their existing workflows.

The challenge for Rust: listening to these new adopters

With new adoption comes new perspectives. On Thursday night I was at dinner5 organized by Ernest Kissiedu6. Jon Seager was there along with some other Rust adopters from various industries, as were a few others from the Rust Foundation and the open-source project.

Ernest asked them to give us their unvarnished takes on Rust. Jon made the provocative comment that we needed to revisit our policy around having a small standard library. He's not the first to say something like that, it's something we've been hearing for years and years - and I think he's right! Though I don't think the answer is just to ship a big standard library. In fact, it's kind of a perfect lead-in to (what I hope will be) my next blog post, which is about a project I call "battery packs"7.

To grow, you have to change

The broader point though is that shifting from targeting "pioneers" and "early adopters" to targeting "early majority" sometimes involves some uncomfortable changes:

Transition between any two adoption segments is normally excruciatingly awkward because you must adopt new strategies just at the time you have become most comfortable with the old ones. [..] The situation can be further complicated if the high-tech company, fresh from its marketing success with visionaries, neglects to change its sales pitch. [..] The company may be saying "state-of-the-art" when the pragmatist wants to hear "industry standard". - Crossing the Chasm (emphasis mine)

Not everybody will remember it, but in 2016 there was a proposal called the Rust Platform. The idea was to bring in some crates and bless them as a kind of "extended standard library". People hated it. After all, they said, why not just add dependencies to your Cargo.toml? It's easy enough. And to be honest, they were right - at least at the time.

I think the Rust Platform is a good example of something that was a poor fit for early adopters, who want the newest thing and don't mind finding the best crates, but which could be a great fit for the Early Majority.8

Anyway, I'm not here to argue for one thing or another in this post, but more for the concept that we have to be open to adapting our learned wisdom to new circumstances. In the past, we were trying to bootstrap Rust into the industry's consciousness - and we have succeeded.

The task before us now is different: we need to make Rust the best option not just in terms of "what it could be" but in terms of "what it actually is" - and sometimes those are in tension.

Another challenge for Rust: turning adoption into investment

Later in the dinner, the talk turned, as it often does, to money. Growing Rust adoption also comes with growing needs placed on the Rust project and its ecosystem. How can we connect the dots? This has been a big item on my mind, and I realize in writing this paragraph how many blog posts I have yet to write on the topic, but let me lay out a few interesting points that came up over this dinner and at other recent points.

Investment can mean contribution, particularly for open-source orgs

First, there are more ways to offer support than $$. For Canonical specifically, as they are an open-source organization through-and-through, what I would most want is to build stronger relationships between our organizations. With the Rust for Linux developers, early on Rust maintainers were prioritizing and fixing bugs on behalf of RfL devs, but more and more, RfL devs are fixing things themselves, with Rust maintainers serving as mentors. This is awesome!

Money often comes before a company has adopted Rust, not after

Second, there's an interesting trend about $$ that I've seen crop up in a few places. We often think of companies investing in the open-source dependencies that they rely upon. But there's an entirely different source of funding, and one that might be even easier to tap, which is to look at companies that are considering Rust but haven't adopted it yet.

For those "would be" adopters, there are often individuals in the org who are trying to make the case for Rust adoption - these individuals are early adopters, people with a vision for how things could be, but they are trying to sell to their early majority company. And to do that, they often have a list of "table stakes" features that need to be supported; what's more, they often have access to some budget to make these things happen.

This came up when I was talking to Alexandru Radovici, the Foundation's Silver Member Director, who said that many safety-critical companies have money they'd like to spend to close various gaps in Rust, but they don't know how to spend it. Jon's investments in Trifecta Tech and the uutils org have the same character: he is looking to close the gaps that block Ubuntu from using Rust more.

Conclusions…?

Well, first of all, you should watch Jon's talk. "Brilliant", as the Brits have it.

But my other big thought is that this is a crucial time for Rust. We are clearly transitioning in a number of areas from visionaries and early adopters towards that pragmatic majority, and we need to be mindful that doing so may require us to change some of the ways that we've always done things. I liked this paragraph from Crossing the Chasm:

To market successfully to pragmatists, one does not have to be one - just understand their values and work to serve them. To look more closely into these values, if the goal of visionaries is to take a quantum leap forward, the goal of pragmatists is to make a percentage improvement-incremental, measurable, predictable progress. [..] To market to pragmatists, you must be patient. You need to be conversant with the issues that dominate their particular business. You need to show up at the industry-specific conferences and trade shows they attend.

Re-reading Crossing the Chasm as part of writing this blog post has really helped me square where Rust is - for the most part, I think we are still crossing the chasm, but we are well on our way. I think what we see is a consistent trend now where we have Rust champions who fit the "visionary" profile of early adopters successfully advocating for Rust within companies that fit the pragmatist, early majority profile.

Open source can be a great enabler to cross the chasm…

It strikes me that open-source is just an amazing platform for doing this kind of marketing. Unlike a company, we don't have to do everything ourselves. We have to leverage the fact that open source helps those who help themselves - find those visionary folks in industries that could really benefit from Rust, bring them into the Rust orbit, and then (most important!) support and empower them to adapt Rust to their needs.

…but only if we don't get too "middle school" about it

This last part may sound obvious, but it's harder than it sounds. When you're embedded in open source, it seems like a friendly place where everyone is welcome. But the reality is that it can be a place full of cliques and "oral traditions" that "everybody knows"9. People coming with an idea can get shut down for using the wrong word. They can readily mistake the, um, "impassioned" comments from a random contributor (or perhaps just a troll…) for the official word from project leadership. It only takes one rude response to turn somebody away.

What Rust needs most is empathy

So what will ultimately help Rust the most to succeed? Empathy in Open Source. Let's get out there, find out where Rust can help people, and make it happen. Exciting times!

-

I am famously bad at accents. My best attempt at posh British sounds more like Apu from the Simpsons. I really wish I could pull off a convincing Greek accent, but sadly no. ↩︎

-

Another of my pearls of wisdom is "there is nothing more permanent than temporary code". I used to say that back at the startup I worked at after college, but years of experience have only proven it more and more true. ↩︎

-

Russel Cohen and Jess Izen gave a great talk at last year's RustConf about what our team is doing to help teams decide if Rust is viable for them. But since then another thing having a big impact is AI, which is bringing previously unthinkable projects, like rewriting older systems, within reach. ↩︎

-

I have no idea if there is code in a car's steering column, for the record. I assume so by now? For power steering or some shit? ↩︎

-

Or am I supposed to call it "tea"? Or maybe "supper"? I can't get a handle on British mealtimes. ↩︎

-

Ernest is such a joy to be around. He's quiet, but he's got a lot of insights if you can convince him to share them. If you get the chance to meet him, take it! If you live in London, go to the London Rust meetup! Find Ernest and introduce yourself. Tell him Niko sent you and that you are supposed to say how great he is and how you want to learn from the wisdom he's accrued over the years. Then watch him blush. What a doll. ↩︎

-

If you can't wait, you can read some Zulip discussion here. ↩︎

-

The Battery Packs proposal I want to talk about is similar in some ways to the Rust Platform, but decentralized and generally better in my opinion. But I get ahead of myself! ↩︎

-

Betteridge's Law of Headlines has it that "Any headline that ends in a question mark can be answered by the word no". Well, Niko's law of open-source2 is that "nobody actually knows anything that 'everybody' knows". ↩︎

23 Feb 2026 1:14pm GMT

Ludovic Hirlimann: Are Mozilla's forks any good?

To answer that question, we first need to understand how complex writing or maintaining a web browser is.

A "modern" web browser is:

- a network stack,

- an HTML+[1] parser,

- an image+[2] decoder,

- a JavaScript[3] interpreter and compiler,

- a user interface,

- integration with the underlying OS[4],

- and all the other things I'm currently forgetting.

Of course, all of the above points interact with one another in different ways. In order for "the web" to work, standards are developed and then implemented in the different browsers and rendering engines.

In order to "make" the browser, you need engineers to write and maintain the code, which is probably around 30 million lines of code[5] for Firefox. Once the code is written, it needs to be compiled [6] and tested [6]. This requires machines that run the operating systems the browser ships on (as of today, Mozilla officially ships on Linux, Microslop Windows and macOS; community builds for *BSD do exist and are maintained). You need engineers to maintain the compile (build) infrastructure.

Once the engineers responsible for the releases [7] have decided which code and features are mature enough, they start assembling the bits of code and, like the other engineers, build, test and send the results to the people using said web browser.

When I was employed at Mozilla (the company that makes Firefox), 900+ engineers were tasked with the above and a few more were working on research and development. With these engineers working 5 days a week, 8 hours per day, 52 weeks a year, that's 1,872,000 hours of engineering brain power spent every year on making Firefox versions (it's actually less, because I have not taken vacations into account). On top of that, you need to add the cost of building and running the tests before a new version reaches the end user.
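
The figure above can be checked with a quick calculation. This is only a sketch: the function name is made up for illustration, and it assumes 52 working weeks per year with no vacation, which is what the number in the text implies.

```rust
/// Hypothetical helper (not from any real codebase): total engineering
/// hours per year for a team, ignoring vacations and public holidays.
fn annual_engineering_hours(
    engineers: u64,
    days_per_week: u64,
    hours_per_day: u64,
    weeks_per_year: u64,
) -> u64 {
    engineers * days_per_week * hours_per_day * weeks_per_year
}

fn main() {
    // 900 engineers, 5 days a week, 8 hours a day, 52 weeks a year (assumed).
    let hours = annual_engineering_hours(900, 5, 8, 52);
    println!("{hours} engineering hours per year");
}
```

Lowering `weeks_per_year` to account for time off gives a smaller but still very large number, which is the point being made here.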

The current browsing landscape looks dark. There are currently 3 choices of rendering engine: WebKit-based browsers, Blink-based ones and Gecko-based ones. 90+% of the market is dominated by WebKit/Blink-based browsers. Blink is a fork of WebKit, which itself started as a fork of KHTML. This leads to less standards work: if the dominant engine implements a feature, the others need to play catch-up to stay relevant. This happened in the 2000s with IE dominating the browser landscape[8], making it difficult to browse the web on Mac OS 9 or X (I'm not even mentioning Linux here :)). This also leads to most web developers using Chrome and only once in a while testing with Firefox or even Safari. And if there's a little glitch, they can still ship because of market share.

Firefox was started back in 1998, when embedding software was not really a thing given all the platforms that had to be supported. Firefox is very hard to embed (i.e. to use as a software library and add stuff on top). I know that for a fact because both Camino and Thunderbird embed Gecko.

In the last few years, Mozilla has been irritating the people I'm connected with, who are very privacy-focused and do not look kindly on what Mozilla does with Firefox. I believe that Mozilla does this in order to stay relevant to normal users. It needs to stay relevant for at least two reasons:

- Keep the web standards open, so anyone can implement a web browser / web services.

- Have enough traffic to be able to pay all the engineers working on Gecko.

Now that I've explained a few important things, let's answer the question "Are Mozilla's forks any good?"

I am biased, as I've worked for the company before. But how can a few people, even if they are good and have plenty of free time, cope with what maintaining a fork requires:

- following security patches and porting said patches.

- following upstream development and maintaining their branch with changes coming from all over the place.

- how do they test?

- are they able to pull off something like https://www.youtube.com/watch?app=desktop&v=YQEq5s4SRxY

If you are comfortable with that, then using a fork because Mozilla is pushing stuff you don't want is probably doable. If not, you can always kill those features you don't like using some `about:config` magic.

Now, I've set a tone above that foresees a dark future for open web technologies. What can you do to keep the web open and with some privacy focus?

- Keep using Firefox Nightly

- Give Servo a try

[1] HTML is interpreted code, that's why it needs to be parsed and then rendered.

[2] In order to draw an image or a photo on a screen, you need to be able to encode it or decode it. Many file formats are available.

[3] JavaScript is a computer language that turns HTML into something that can interact with the person using the web browser. See https://developer.mozilla.org/en-US/docs/Glossary/JavaScript