06 Mar 2026

Planet KDE | English

Web Review, Week 2026-10

Let's go for my web review for the week 2026-10.

A new California law says operating systems need to have age verification

Tags: tech, law, surveillance

The stupid idea of age verification keeps spreading with ridiculous laws…

System76 on Age Verification Laws

Tags: tech, surveillance, law

Those dangerous and stupid laws keep popping up, unfortunately. This is clearly a slippery slope, as shown by the New York bill… We need to push back or the demands will keep growing. Let's hope Free Software communities won't try to preemptively comply; this would be short-sighted self-sabotage.

https://blog.system76.com/post/system76-on-age-verification

Ex-Meta lobbyist put in charge of EU's digital rules

Tags: tech, europe, law, politics, gafam

What could possibly go wrong? This is really a weird appointment.

Breaking Free

Tags: tech, quality, law

Is Norway about to become one of the first countries to become serious about enshittification? Will more follow? This would be welcome.

https://www.forbrukerradet.no/breakingfree/

AI Translations Are Adding 'Hallucinations' to Wikipedia Articles

Tags: tech, wikipedia, ai, machine-learning, gpt, quality

This is concerning. Hopefully the number of issues which get through will be limited.

https://www.404media.co/ai-translations-are-adding-hallucinations-to-wikipedia-articles/

Text is king

Tags: tech, reading, culture, history, social-media

Yes there's a dip, but this piece presents compelling evidence that it's not the death of literacy we're sometimes screaming at. It is also a love letter to reading and writing.

https://www.experimental-history.com/p/text-is-king?ref=DenseDiscovery-378

prek: ⚡ Better pre-commit, re-engineered in Rust

Tags: tech, version-control, git, tools, quality

This looks tempting. I guess I'll try this one instead of pre-commit when I get the chance.

qman: A more modern man page viewer for our terminals

Tags: tech, documentation, unix, tools, command-line

Didn't know about this one. Looks like a nice alternative to the venerable man command.

Message Passing Is Shared Mutable State

Tags: tech, multithreading, reliability

Interesting piece which challenges the shared-memory vs. message-passing dichotomy. Message passing indeed gets rid of data races, but nothing more. Of course this is nice already, but that doesn't mean you can't have the other families of concurrency bugs creeping in.

https://causality.blog/essays/message-passing-is-shared-mutable-state/
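To make the point concrete, here is a minimal sketch (not taken from the linked essay) of a lost update that happens purely through message passing. The `counter_actor` and its `get`/`set`/`stop` messages are hypothetical names chosen for illustration. Only one thread ever touches the counter, so there is no data race, yet two clients that each read and then write still overwrite each other.

```python
import queue
import threading


def counter_actor(inbox: queue.Queue) -> None:
    """Own the counter; every access goes through a message."""
    value = 0
    while True:
        msg = inbox.get()
        if msg[0] == "get":
            msg[1].put(value)      # reply with the current value
        elif msg[0] == "set":
            value = msg[1]         # blindly overwrite
        elif msg[0] == "stop":
            msg[1].put(value)      # report the final value and exit
            return


def lost_update_demo() -> int:
    inbox: queue.Queue = queue.Queue()
    threading.Thread(target=counter_actor, args=(inbox,), daemon=True).start()

    reply_a: queue.Queue = queue.Queue()
    reply_b: queue.Queue = queue.Queue()

    # Both clients read before either of them writes.
    inbox.put(("get", reply_a))
    inbox.put(("get", reply_b))
    seen_a = reply_a.get()
    seen_b = reply_b.get()

    # Each client "increments" based on the value it saw.
    inbox.put(("set", seen_a + 1))
    inbox.put(("set", seen_b + 1))

    done: queue.Queue = queue.Queue()
    inbox.put(("stop", done))
    return done.get()


print(lost_update_demo())
```

Two increments were requested, but the final value is 1: the race moved from memory accesses to message ordering. One fix is to make the increment itself a single message so the actor performs the read-modify-write atomically.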

fast-servers

Tags: tech, server, services, performance

We got options beyond poll() nowadays.

https://geocar.sdf1.org/fast-servers.html

Rust zero-cost abstractions vs. SIMD

Tags: tech, rust, optimisation, simd

Yes, Rust, like C++, comes with zero-cost abstractions. Still, they can get in the way of some compiler optimisations. This is an interesting case where they prevent vectorisation.

https://turbopuffer.com/blog/zero-cost

Hardware hotplug events on Linux, the gory details

Tags: tech, kernel, systemd, hardware

Wondering how udev communicates with the kernel, and how it then broadcasts events? This covers the basics.

https://arcanenibble.github.io/hardware-hotplug-events-on-linux-the-gory-details.html
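As a rough illustration of the kernel side, the sketch below parses a raw kernel uevent datagram, which is a header of the form `ACTION@DEVPATH` followed by NUL-separated `KEY=VALUE` pairs. The helper names and the sample device path are made up for this example, and `listen_for_kernel_uevents` is an untested-here outline that assumes Linux and a netlink socket bound to multicast group 1 (kernel events); it is not how udev itself is implemented.

```python
import socket

NETLINK_KOBJECT_UEVENT = 15  # protocol number from <linux/netlink.h>


def parse_kernel_uevent(datagram: bytes) -> dict:
    """Split a NUL-separated kernel uevent into a dictionary."""
    fields = [f for f in datagram.split(b"\0") if f]
    action, _, devpath = fields[0].decode().partition("@")
    event = {"_action": action, "_devpath": devpath}
    for field in fields[1:]:
        key, _, value = field.decode().partition("=")
        event[key] = value
    return event


def listen_for_kernel_uevents() -> None:
    """Print kernel uevents forever (Linux only; assumption: group 1)."""
    sock = socket.socket(
        socket.AF_NETLINK, socket.SOCK_DGRAM, NETLINK_KOBJECT_UEVENT
    )
    sock.bind((0, 1))  # pid 0: kernel assigns one; group mask 1: kernel events
    while True:
        print(parse_kernel_uevent(sock.recv(65536)))


# Hand-written sample message, not captured from a real system.
sample = (
    b"add@/devices/example/usb1\0"
    b"ACTION=add\0"
    b"DEVPATH=/devices/example/usb1\0"
    b"SUBSYSTEM=usb\0"
    b"SEQNUM=1234\0"
)
print(parse_kernel_uevent(sample)["SUBSYSTEM"])
```

Plugging in a device while `listen_for_kernel_uevents()` runs should print one dictionary per event, though the exact keys depend on the subsystem.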

Log messages are mostly for the people operating your software

Tags: tech, logging

A reminder that logs are primarily for the people operating the software, not for its developers.

https://utcc.utoronto.ca/~cks/space/blog/programming/LogMessagesAreForOperation

Nobody Gets Promoted for Simplicity

Tags: tech, engineering, complexity, management

Rampant complexity in software is also a management issue. Are we sure we're rewarding the right things?

https://terriblesoftware.org/2026/03/03/nobody-gets-promoted-for-simplicity/

Go Beyond the Test Pyramid: Test Desiderata 2.0

Tags: tech, tests, tdd

It's been a while since I started to consider the test pyramid as fairly limiting for our thinking about tests. The dimensions proposed here give a more comprehensive model to reason about.

https://coding-is-like-cooking.info/2026/02/go-beyond-the-test-pyramid-test-desiderata-2-0/

Use the Mikado Method to do safe changes in a complex codebase

Tags: tech, refactoring, legacy

You probably want to complement this with a higher-level plan if the goal is a larger modernization. That being said, it's a good approach for the small to mid-sized goals you'd want to tackle.

https://understandlegacycode.com/blog/a-process-to-do-safe-changes-in-a-complex-codebase/

The Eternal Promise: A History of Attempts to Eliminate Programmers

Tags: tech, programming, history, ai, machine-learning, copilot

This fantasy regularly comes back. Yet, while the tools evolve and might improve some things, the core difficulties of programming don't change. At each hype cycle our industry overpromises and underdelivers; this is unnecessary.

https://www.ivanturkovic.com/2026/01/22/history-software-simplification-cobol-ai-hype/

Yes, and…

Tags: tech, programming, engineering, ai, machine-learning, gpt

Very good essay on why the developer profession is not going away. On the contrary we need to double down on essential skills and put in the work. This is long overdue anyway.

https://htmx.org/essays/yes-and/

I'm a philosopher who tries to see the best in others - but I know there are limits

Tags: philosophy, trust

Interesting point: looking for agency seems like a good criterion. It highlights that it's not a simple test, though. I'd add that trust matters and that it's built over time.

Bye for now!

06 Mar 2026 11:10am GMT

05 Mar 2026

What's new in QML Tooling in 6.11, part 1: QML Language Server (qmlls)

The latest Qt release, Qt 6.11, is just around the corner. This short blog post series presents the new features that QML tooling brings in Qt 6.11, starting with qmlls in this part 1. Parts 2 and 3 will present newly added qmllint warnings since the last blog post on QML tooling and context property configuration support for QML Tooling.

05 Mar 2026 8:57am GMT

Third beta for Krita 5.3 and Krita 6.0

Today we're releasing the third beta of Krita 5.3.0 and Krita 6.0.0.

The bug-squashing continues. We received 63 bug reports in total, of which we managed to resolve 8 for this release, making a total of 22 fixed bugs. Beyond that, the manual has been updated for 5.3 and 6.0, complete with dark theme!

Please keep testing and reporting!

Note that 6.0.0-beta3 has more issues, especially on Linux and Wayland, than 5.3.0-beta3. If you want to combine beta testing with actual productive work, it's best to test 5.3.0-beta3, since 5.3.0 will remain the recommended version of Krita for now.

To learn about everything that has changed, check the release notes!

5.3.0-beta3 Download

Windows

If you're using the portable zip files, just open the zip file in Explorer and drag the folder somewhere convenient, then double-click on the Krita icon in the folder. This will not impact an installed version of Krita, though it will share your settings and custom resources with your regular installed version of Krita. For reporting crashes, also get the debug symbols folder.

Note: We are no longer making 32-bit Windows builds.

- 64 bits Windows Installer: krita-x64-5.3.0-beta3-setup.exe

- Portable 64 bits Windows: krita-x64-5.3.0-beta3.zip

Linux

Note: starting with recent releases, the minimum supported distro versions may change.

Warning: Starting with recent AppImage runtime updates, some AppImageLauncher versions may be incompatible. See AppImage runtime docs for troubleshooting.

- 64 bits Linux: krita-5.3.0-beta3-x86_64.AppImage

MacOS

Note: minimum supported MacOS may change between releases.

- MacOS disk image: krita-5.3.0-beta3-signed.dmg

Android

Krita on Android is still beta; tablets only.

Source code

See the source code for 6.0.0-beta3

md5sum

For all downloads, visit https://download.kde.org/unstable/krita/5.3.0-beta3/ and click on "Details" to get the hashes.

Key

The Linux AppImage and the source tarballs are signed. You can retrieve the public key here. The signatures are here (filenames ending in .sig).

6.0.0-beta3 Download

Windows

If you're using the portable zip files, just open the zip file in Explorer and drag the folder somewhere convenient, then double-click on the Krita icon in the folder. This will not impact an installed version of Krita, though it will share your settings and custom resources with your regular installed version of Krita. For reporting crashes, also get the debug symbols folder.

Note: We are no longer making 32-bit Windows builds.

- 64 bits Windows Installer: krita-x64-6.0.0-beta3-setup.exe

- Portable 64 bits Windows: krita-x64-6.0.0-beta3.zip

Linux

Note: starting with recent releases, the minimum supported distro versions may change.

Warning: Starting with recent AppImage runtime updates, some AppImageLauncher versions may be incompatible. See AppImage runtime docs for troubleshooting.

- 64 bits Linux: krita-6.0.0-beta3-x86_64.AppImage

MacOS

Note: minimum supported MacOS may change between releases.

- MacOS disk image: krita-6.0.0-beta3-signed.dmg

Android

No Krita 6.0.0 for Android for now. Please use the 5.3.0-beta3 APKs.

Source code

md5sum

For all downloads, visit https://download.kde.org/unstable/krita/6.0.0-beta3/ and click on "Details" to get the hashes.

Key

The Linux AppImage and the source tarballs are signed. You can retrieve the public key here. The signatures are here (filenames ending in .sig).

05 Mar 2026 12:00am GMT

KDE Gear 25.12.3

Over 180 individual programs plus dozens of programmer libraries and feature plugins are released simultaneously as part of KDE Gear.

Today they all get new bugfix source releases with updated translations, including:

- kdeconnect: Fix clicking on plugin's row doesn't change plugin's status (Commit, fixes bug #514923)

- neochat: Don't scroll the timeline when reacting to messages (Commit, fixes bug #515306)

- umbrello: Fix crash when deleting a complete scene (Commit, fixes bug #516457)

Distro and app store packagers should update their application packages.

- 25.12 release notes for information on tarballs and known issues.

- Package download wiki page

- 25.12.3 source info page

- 25.12.3 full changelog

05 Mar 2026 12:00am GMT

04 Mar 2026

Sound-reactive Sideboard

A project that I had planned for quite some time came to fruition last year, and now I have finally found time to document the result. My living room sideboard looked messy and kind of boring while not blending in anymore with the updated style of my living room. I wanted to turn it into a striking centerpiece of the room.

The plan was to install a sound-reactive lighting system. I wanted the light effects to be detailed and not disturbed by ambient sound in the living room, i.e. it should not react to people's voices, just the music playing.

My living room sideboard is an off-the-shelf product from IKEA that I bought many years ago. It didn't have doors installed, but I was delighted that I could still buy matching doors with windows in them.

To realize the light effects, I've installed frosted plexiglass inside the windows.

Getting technical…

To control the LEDs, I'm using an ESP32-based LED controller with a line-in module and an ADC (analog-digital converter). After some experimenting, I've found this board to work well. I've connected 6 WS2812B LED strips to 3 pins and installed them with an aluminium profile into the doors. The frosted windows and profiles diffuse the light nicely so you can't make out individual LEDs really.

On the software side, I'm using a sound-reactive port of the WLED project. WLED is Free and Open Source software, of course. Though its user interface can be a little unwieldy, it's also very powerful and integrates nicely with homeassistant, so it can be controlled automatically.

The ESP32, being a rather powerful dual-core microcontroller, can process the incoming audio signal on one core (using a fast Fourier transform) and compute complex LED effects on the other core. Rendering up to 200 frames per second to 2 times 210 LEDs is no problem, while power consumption of just the controller stays well under 1W. Pretty impressive! Depending on the LED effects (number of LEDs lit up at a given time and their colors), the whole thing hardly ever reaches 10W of power consumption.
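For readers curious what the frequency analysis step does, here is a small stand-alone sketch. It is not WLED's code: it uses a naive discrete Fourier transform (same result as an FFT, just slower) on a synthetic test tone, and the function names are made up for this example. The idea is to turn a block of audio samples into per-frequency magnitudes, which an effect can then map to brightness or color.

```python
import cmath
import math


def dft_magnitudes(samples: list[float]) -> list[float]:
    """Naive O(n^2) DFT; returns magnitudes for bins 0 .. n/2 - 1."""
    n = len(samples)
    magnitudes = []
    for k in range(n // 2):
        acc = sum(
            samples[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)
        )
        magnitudes.append(abs(acc) / n)
    return magnitudes


def dominant_bin(samples: list[float]) -> int:
    """Index of the strongest frequency bin, ignoring the DC bin 0."""
    magnitudes = dft_magnitudes(samples)
    return max(range(1, len(magnitudes)), key=magnitudes.__getitem__)


# Synthetic input: a sine wave completing 5 cycles over 64 samples.
tone = [math.sin(2 * math.pi * 5 * t / 64) for t in range(64)]
print(dominant_bin(tone))
```

The strongest bin is bin 5, matching the 5 cycles in the test tone. On real audio the bins would be grouped into a handful of bands before driving the LEDs.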

Another functional goal of this project was to solve cooling issues of my amplifier once and for all. The amp would run really hot and shut off after playing at higher volume for some time. I installed a bunch of 12cm fans which suck air through the amplifier and blow it out on the backside. Both amp and fans are connected to smartplugs. I turned to my homeassistant and set up an automation which turns the fans on whenever the amp's power consumption reaches a certain level. This works really nicely, since the fans never spin at lower volumes (when you could hear them through the music) and keep everything cool and running stable at higher volume when it's necessary - without human interaction.

Walnut finish

The outer shell of the sideboard is made of walnut wooden panels with an oil and varnish finish, thanks to my friend Joris. The oil gives it a darker look and accentuates the grain, matching the speaker system. The matte varnish finish (Skylt, highly recommended for its durability and natural look) allows me to sleep well even if people put their drinks on it.

I love it when a plan comes together!

I'm really happy with the result. While I had thought it out for a long time already, it's always a lot more impressive when you see the final result in action.

The WLED firmware allows me to create interesting light effects. I can run the 3 doors as one, but also easily split them up into segments so each door panel renders its own effect. WLED has ca. 200 different LED effects, many of which react to sound. Each effect can be combined with one of 50 color palettes; some of the palettes are sound-reactive in their own right, leading to a very dynamic display.

One cool feature is that the processed sound data can be broadcast across the network (over UDP) and received by other WLED controllers, so I can have multiple LED displays in the house, each rendering their own effect to the music, creating a more immersive experience.
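The general idea of shipping processed sound data as small UDP datagrams can be sketched like this. The packet layout below (a 4-byte tag, a volume and a peak frequency bin) is entirely hypothetical and is not WLED's actual sync format. The example also sends to a single loopback address instead of broadcasting, so that it runs on one machine.

```python
import socket
import struct

# Hypothetical layout: 4-byte tag, volume (float32), peak bin (uint8).
PACKET = struct.Struct("<4sfB")


def encode(volume: float, peak_bin: int) -> bytes:
    return PACKET.pack(b"DEMO", volume, peak_bin)


def decode(data: bytes) -> tuple[float, int]:
    tag, volume, peak_bin = PACKET.unpack(data)
    if tag != b"DEMO":
        raise ValueError("unexpected packet tag")
    return volume, peak_bin


def loopback_roundtrip(volume: float, peak_bin: int) -> tuple[float, int]:
    """Send one datagram to ourselves and decode what arrives."""
    receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    receiver.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
    receiver.settimeout(2.0)
    sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        sender.sendto(encode(volume, peak_bin), receiver.getsockname())
        data, _ = receiver.recvfrom(64)
        return decode(data)
    finally:
        sender.close()
        receiver.close()


print(loopback_roundtrip(0.5, 5))
```

Because UDP is connectionless, the sender does not need to know how many receivers exist, which is what makes this kind of fan-out to several controllers cheap.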

04 Mar 2026 10:50am GMT

03 Mar 2026

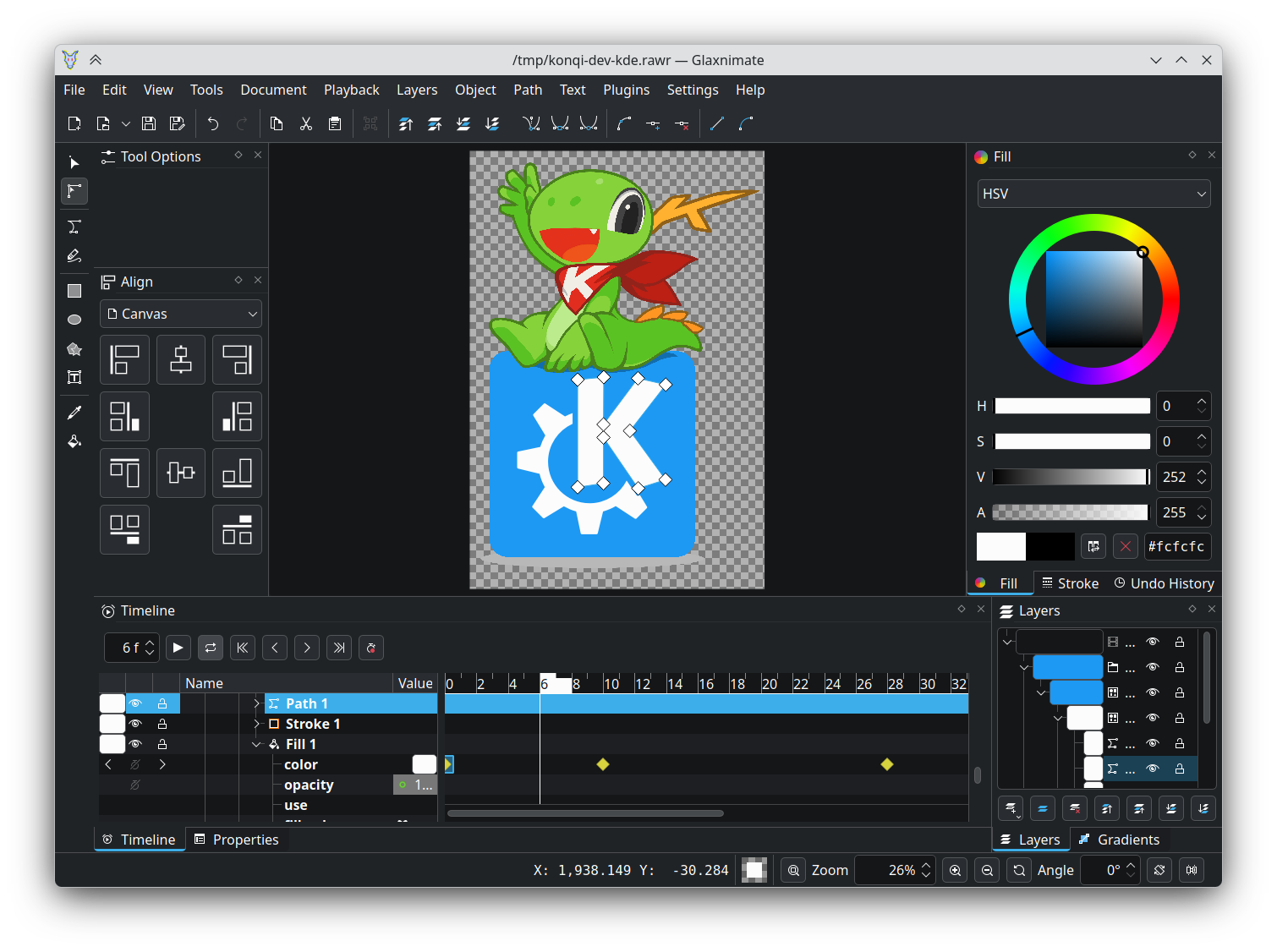

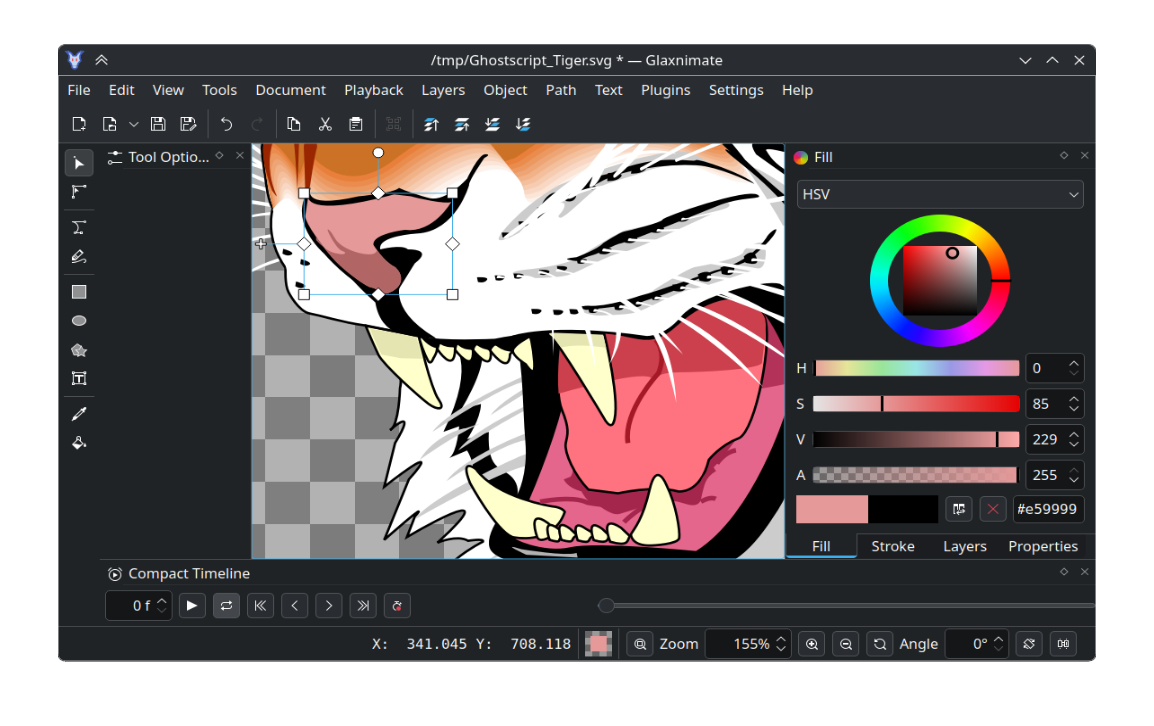

Glaxnimate 0.6.0

Glaxnimate 0.6.0 is out! This is the first stable release with Glaxnimate as part of KDE.

The biggest benefit of joining KDE is that now Glaxnimate can use KDE's infrastructure to build and deploy packages, greatly improving cross-platform support. This allows us to have releases available on the Microsoft Store and macOS builds for both Intel and Arm chips.

But there is much more...

KDE-specific features

Glaxnimate now uses the KDE file recovery system making it more reliable.

Settings and styles also go through the KDE systems, which, among other things, lets you choose from more color themes for the interface.

Translations are also provided by KDE. This makes it easier to keep other languages up to date as Glaxnimate evolves. In fact, the number of available languages has increased from 8 to 26!

The script console has also been enhanced with basic autocompletion making scripting easier.

Timeline

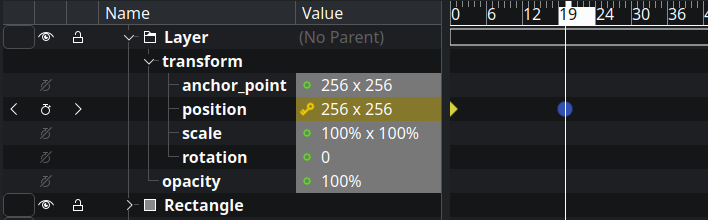

The timeline dock now allows effortless scrolling and provides buttons that make moving to different keyframes, and adding and removing them easier too. This contributes to making the animation workflow much smoother.

Hiding and showing layers from the timeline now interacts with the undo/redo system.

You can also quickly toggle keyframe easing without having to navigate menus. Just hold down the Alt key and click on the timeline.

Format Support

SVG import and export has been re-worked, and precompositions are now properly exported and animations improved. You can even export an animation as a sequence of SVG frames.

Editing

We have improved the bezier editing tools, and included the ability to Alt-click on bezier points to cycle between tangent symmetry modes.

The Reverse path action is now implemented and works for all shapes. This is mostly useful when adding the Trim path modifier.

Bug Fixes

Version 0.5.4 included a significant refactoring of internal logic that introduced several bugs. These have now been fixed.

Packager Section

The source code tarballs are available from the KDE servers:

URL: https://download.kde.org/stable/glaxnimate/0.6.0

Source: glaxnimate-0.6.0.tar.xz

Signed by: 97B71AA02D63EA6C5C44C23B962AC48EF0501F0B Julius Künzel julius.kuenzel@kde.org

03 Mar 2026 1:20pm GMT

Rocky Linux becomes KDE's newest Patron

Rocky Linux throws its support behind KDE, becoming our latest patron.

Rocky Linux is a stable, community-driven, and production-ready Linux distribution designed to be fully compatible with Red Hat Enterprise Linux. Rocky Linux powers clouds, supercomputers, servers, and workstations around the world.

"Sustainable Open Source depends on great open-source communities supporting each other" said Brian Clemens, Co-founder and Vice President of the Rocky Enterprise Software Foundation. "We do our best to support our upstreams, and backing KDE was an easy choice for us given the popularity of the Rocky Linux KDE spin."

"As a user-first community, KDE creates solutions to address real-world needs" said Aleix Pol, President of KDE e.V. "We are excited to welcome Rocky Linux as a KDE Patron and see KDE's software shine on Rocky Linux, their enterprise-ready operating system."

Rocky Linux joins KDE e.V.'s other patrons: Blue Systems, Canonical, g10 Code, Google, Kubuntu Focus, Mbition, Slimbook, SUSE, Techpaladin, The Qt Company and TUXEDO Computers, who generously support FOSS and KDE's development through KDE e.V.

03 Mar 2026 12:00am GMT

KDE Plasma 6.6.2, Bugfix Release for March

Tuesday, 3 March 2026. Today KDE releases a bugfix update to KDE Plasma 6, versioned 6.6.2.

Plasma 6.6 was released in February 2026 with many feature refinements and new modules to complete the desktop experience.

This release adds a week's worth of new translations and fixes from KDE's contributors. The bugfixes are typically small but important and include:

03 Mar 2026 12:00am GMT

02 Mar 2026

SOK2026: Porting energy measurement scripts of KEcoLab to Wayland

About me

Hi everyone, I am Hrishikesh Gohain, a third-year undergraduate student in Computer Science & Engineering from India. For the past few weeks I have been working as a Season of KDE mentee with my mentors Joseph P. De Veaugh-Geiss, Aakarsh MJ and Karanjot Singh. This post summarizes the work I have done until Week 5.

About KEcoLab

KDE Eco is an ongoing initiative by the KDE Community that promotes the use and development of Free, Open Source and Sustainable Software. KEcoLab is a project that allows you to measure the energy consumption of your software through a CI/CD pipeline using a remote lab. It also generates a detailed report which can further be used to document and review the energy consumed when using one's software and to obtain Blue Angel eco certification.

About the SOK Project

The Lab computer on which the software runs for testing was migrated to Fedora 43 recently, which comes with Wayland by default. Writing Standard Usage Scenario scripts, which are needed to emulate user behavior, was previously done with xdotool, but that will not work on Wayland. My work so far has been to port the existing test scripts to a Wayland-compatible tool. For those who want to contribute test scripts to measure their own software, the current scripts can be taken as a reference. My next task is to prepare new test scripts to measure the energy usage of the Plasma Desktop Environment itself.

Work done so Far

Week 1

In the first week I studied the Lab architecture and how testing of software is done using KEcoLab. The work done by past mentees as part of SOK and GSoC was very helpful for my research, which you can read here, here and here. I also set up access to the lab computers through SSH. RDP access had some issues which were solved with the help of my mentors. To replicate the lab environment locally, I set up a Fedora 43 Virtual Machine so that I could test scripts under the same Wayland environment as the lab PC. I also documented and published a blog post about the project and shared it with my university community to promote the use of Free and Open Source Software and how it relates to sustainability.

Week 2 and Week 3

I communicated with my mentors and other community members to decide on the new Wayland-compatible tool. After evaluating different options, we decided to use:

- ydotool: for key presses, mouse clicks and movements (works using the uinput subsystem)

- kdotool: for working with application windows (focusing, identifying window IDs, etc.)

A combination of tools was required to meet all our requirements. To help future contributors, I published my first blog post on Planet KDE explaining how to set up and use ydotool and kdotool. I also imported the repositories into KDE Invent for long-term compatibility and wrote setup scripts for easier installation and configuration. These tools did not work out of the box, and I had to apply some workarounds and setup steps before usage, which I documented in the blog post.
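To give a feel for how the two tools split the work, here is a hedged sketch that only builds and prints command lines; it does not run anything and is not taken from the KEcoLab scripts. The subcommands shown (`ydotool type`, `ydotool key` with keycode:state pairs, `kdotool search --class`, `kdotool windowactivate`) reflect my understanding of the tools' command-line interfaces and should be checked against the installed versions. The helper names and the window ID placeholder are hypothetical.

```python
import shlex

KEY_ENTER = 28  # Linux input event code for the Enter key


def type_text(text: str) -> list[str]:
    """Input side: type a string through the uinput-backed ydotool."""
    return ["ydotool", "type", text]


def press_key(keycode: int) -> list[str]:
    """Press and release one key; state 1 = down, 0 = up."""
    return ["ydotool", "key", f"{keycode}:1", f"{keycode}:0"]


def find_windows(window_class: str) -> list[str]:
    """Window side: look up window IDs by class with kdotool."""
    return ["kdotool", "search", "--class", window_class]


def activate_window(window_id: str) -> list[str]:
    """Window side: focus a window previously found with kdotool."""
    return ["kdotool", "windowactivate", window_id]


# A hypothetical scenario step: focus Okular, type a query, press Enter.
steps = [
    find_windows("okular"),
    activate_window("<window-id-from-search>"),
    type_text("energy"),
    press_key(KEY_ENTER),
]
for step in steps:
    print(shlex.join(step))
```

In a real script each list would be passed to `subprocess.run`, with the output of the search step feeding the activate step.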

Week 4 and Week 5

During these weeks, along with my mentors, I installed and set up the required tools on the Lab PC. I then ported the test scripts of Okular from xdotool to ydotool and kdotool and tested them on my local machine first. Currently, the CI/CD infrastructure through which these scripts run on the Lab PC is temporarily broken due to the migration to Fedora 43. Once these issues are fixed, we will test the new Wayland-compatible scripts on the actual lab hardware and compare the results with previous measurements.

Next Steps

I will be working on measuring energy usage of Plasma Desktop Environment itself. It will be more challenging than measuring a normal software application because Plasma is not a single process. It is made up of multiple components such as KWin (compositor), plasmashell, background services, widgets, and system modules. All of these together form the desktop experience.

Unlike normal applications like Okular or Kate, Plasma is always running in the background. So we cannot simply "open" and "close" it like a normal app. Because of this, some changes may be required in the current way of testing using KEcoLab.

To properly design the Standard User Scenario (SUS) scripts for Plasma, I will discuss closely with my mentors and also seek feedback and suggestions from the Plasma community. Defining what should be considered a "standard" usage pattern will require careful discussion and community input.

Lessons learned

It has been an amazing journey so far. I learned how to make the right choice of tools and software by properly understanding the requirements first instead of jumping straight into implementation.

Thank You Note

I'd like to take a moment to thank my mentors Aakarsh, Karanjot, and Joseph. I am also thankful to the KDE e.V. and the KDE community for supporting us new contributors in the incredible KDE project.

KEcoLab is hosted on Invent. Are you interested in contributing? You can join the Matrix channels Measurement Lab Development and KDE Eco and introduce yourself.

Thank you!

02 Mar 2026 4:30pm GMT

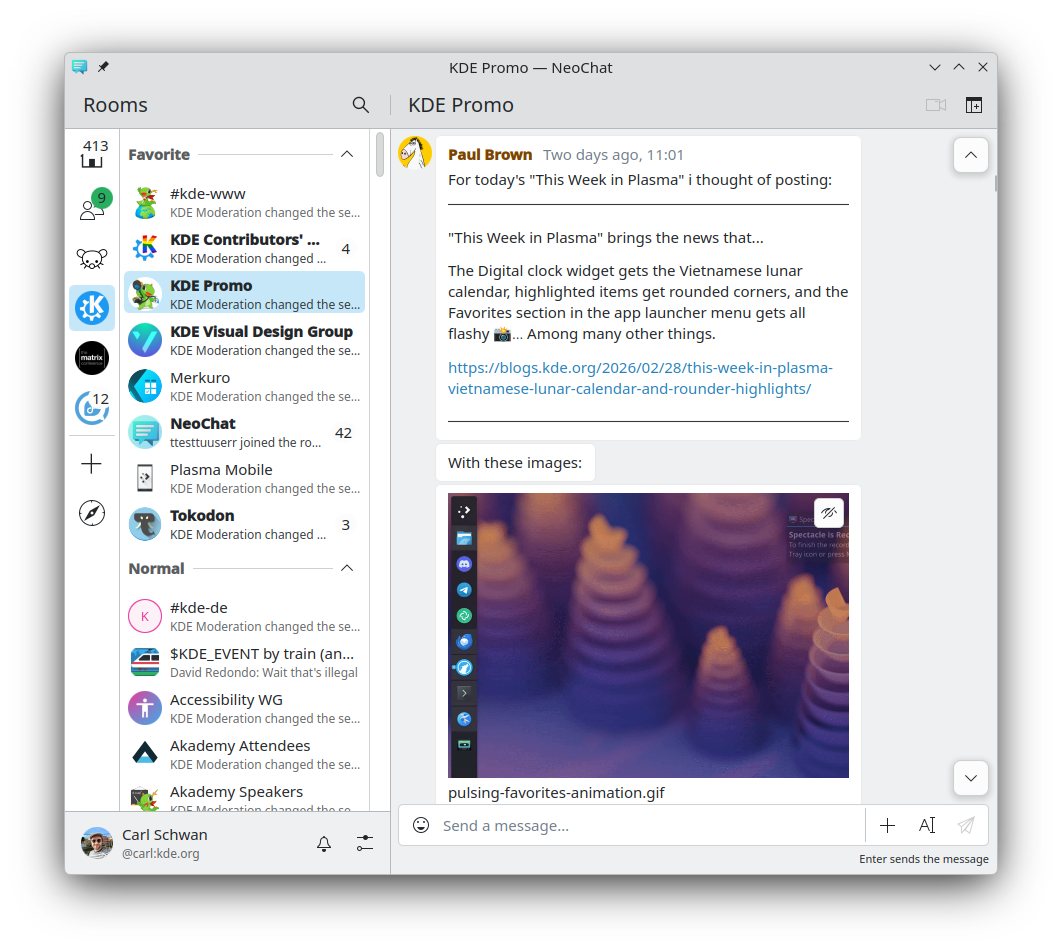

This Month in KDE Apps

A lot of progress in Marknote and Drawy, a new homepage for Audiotube, and a rich text editor in NeoChat

Welcome to a new issue of "This Month in KDE Apps"! Every week (or so) we cover as much as possible of what's happening in the world of KDE apps.

It's been a while since the last issue, so I'll try my best to summarize all the big things that happened recently.

Office Applications

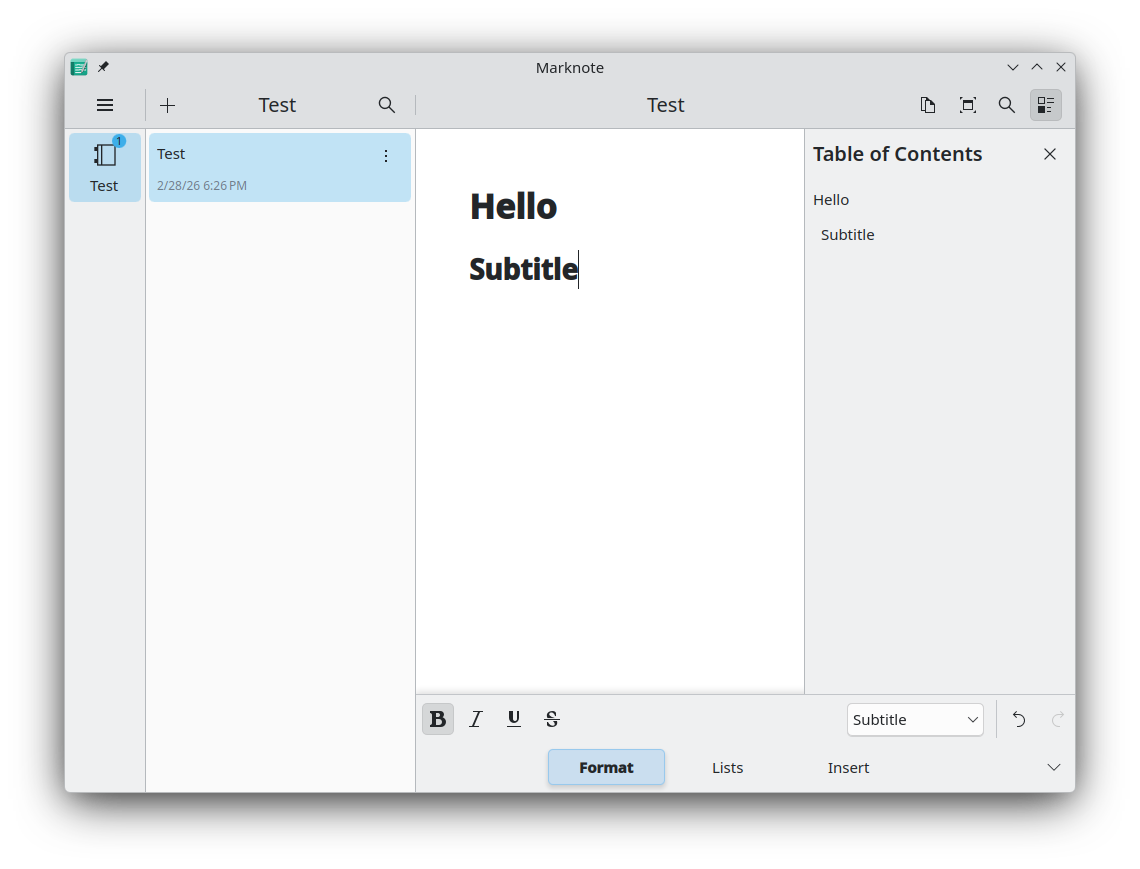

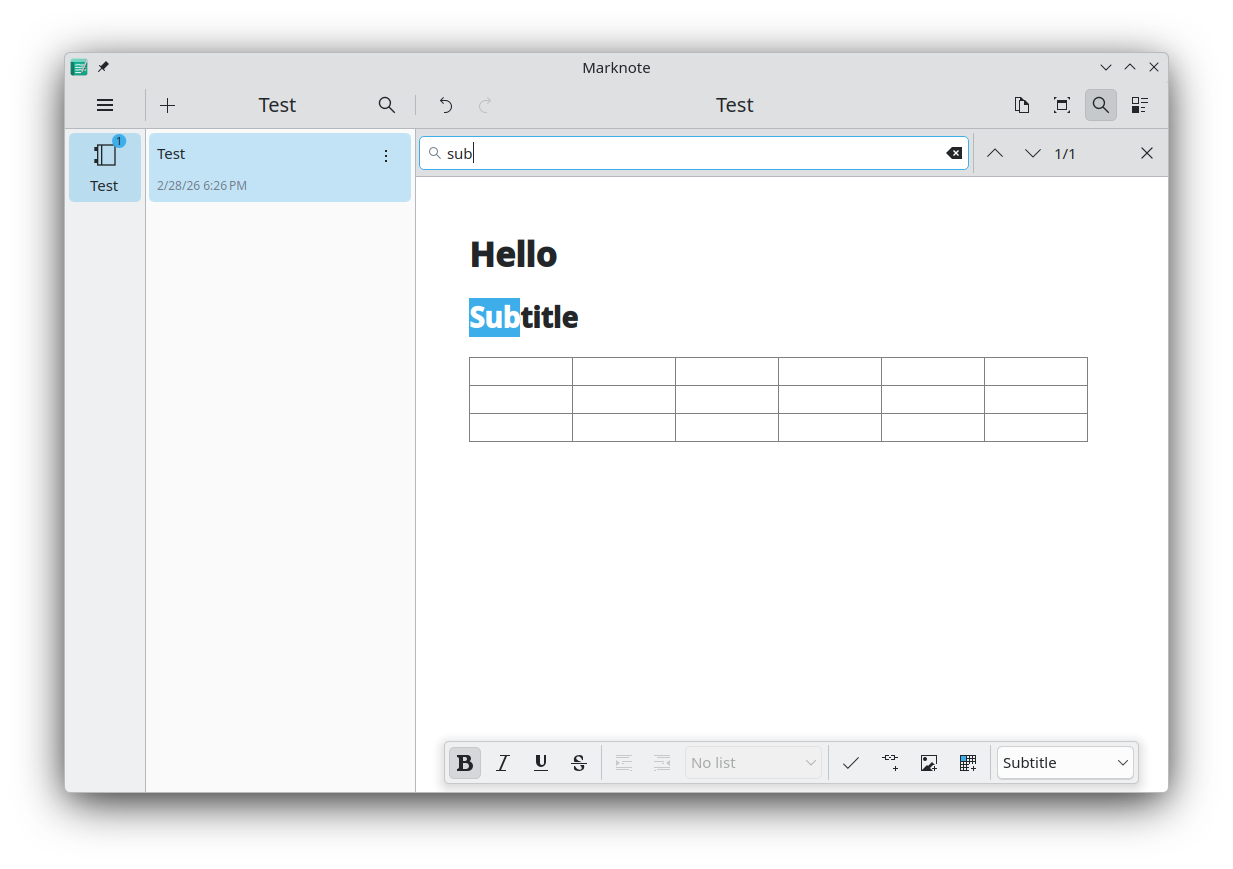

Marknote Write down your thoughts

A lot happened in Marknote. We released version 1.4.0 of Marknote, which contains a large number of bug fixes. It also includes a few new features. Siddharth Chopra implemented undo and redo in the sketch editor office/marknote MR #91 and Valentyn Bondarenko made it possible to drag and drop images inside Marknote office/marknote MR #90. Valentyn has also been busy fixing many bugs and improving the stability of Marknote.

In the development branch even more happened. Shubham Shinde added a note counter to the notebook sidebar indicating the number of notes in each notebook office/marknote MR #110. Additionally, Shubham implemented text search inside a note office/marknote MR #109; drag-and-drop support for moving notes between notebooks office/marknote MR #111; internal wiki links between notebooks office/marknote MR #115; a table of contents panel office/marknote MR #112; and a button to copy the whole note content office/marknote MR #108.

Valentyn Bondarenko further improved the drag-and-drop support for images, which now supports multiple images at once office/marknote MR #104; made image loading async office/marknote MR #99; ported some custom FormCardDelegates to the newer standardized delegates now available in Kirigami Addons office/marknote MR #144 office/marknote MR #122; added support for code blocks office/marknote MR #146 and block quotes office/marknote MR #142; significantly improved support for tables office/marknote MR #143; and delivered an even bigger list of bug fixes, code refactoring, and UI polish.

A new release should follow soon :)

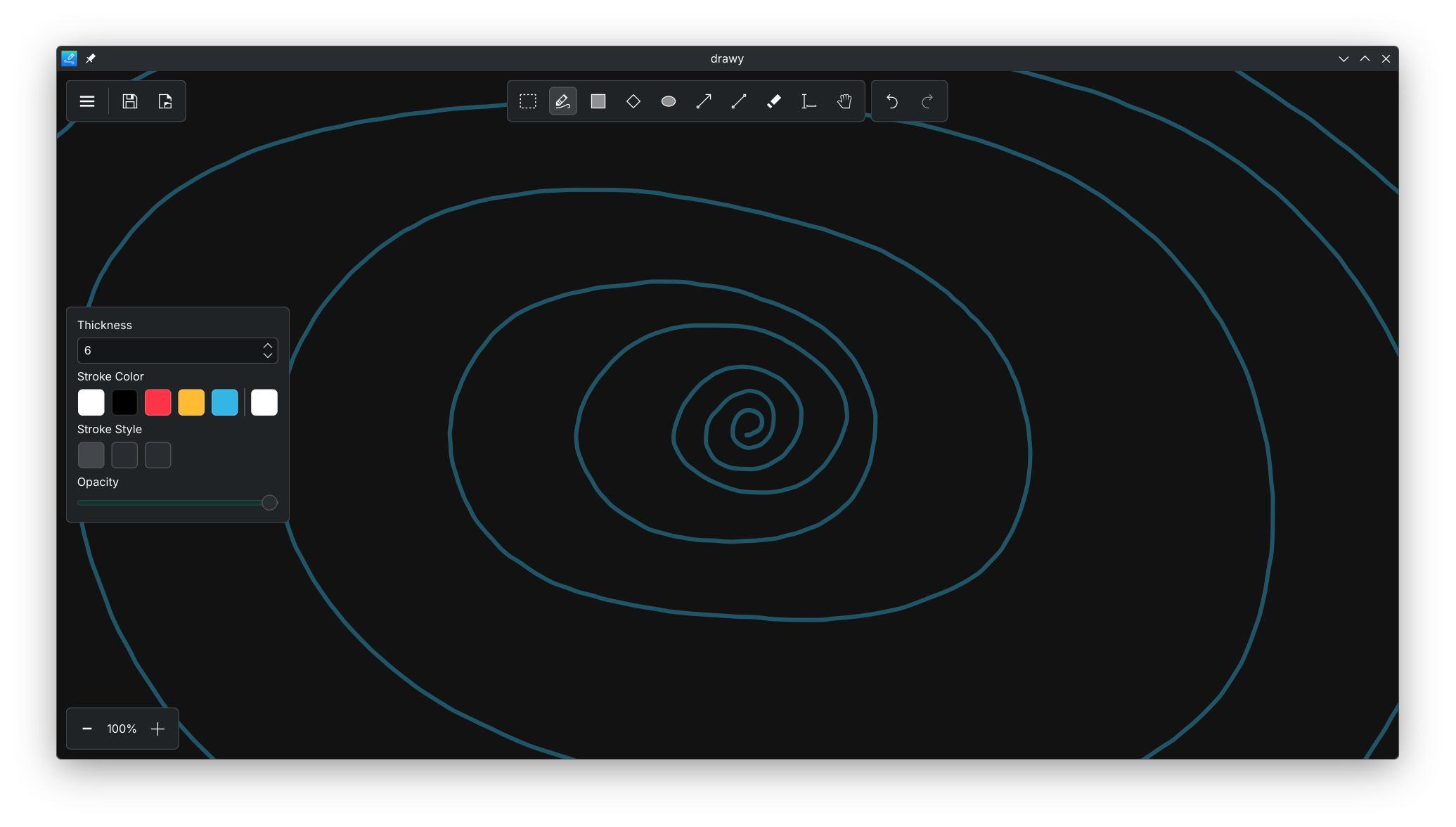

Drawy Your handy, infinite brainstorming tool

Drawy saw a massive wave of improvements and new features this month. Prayag delivered a major UI overhaul that includes a new hamburger menu graphics/drawy MR #295; improved zoom and undo/redo controls graphics/drawy MR #193; and a more uniform appearance across the app. He also improved the saving mechanism to correctly remember the last used file graphics/drawy MR #345.

Laurent Montel was busy expanding the app's core capabilities, implementing a brand-new plugin system to make adding new tools much easier graphics/drawy MR #352; Laurent also added a color scheme menu to switch themes graphics/drawy MR #372, and introduced the ability to customize keyboard shortcuts.

Nikolay Kochulin greatly enhanced how you interact with content, adding support for styluses with erasers and the ability to export your finished canvas to SVG graphics/drawy MR #258; Nikolay also made bringing media into Drawy a breeze by adding support for copying and pasting items, pasting images directly graphics/drawy MR #285, and dragging and dropping content straight onto the canvas graphics/drawy MR #322.

Finally, Abdelhadi Wael polished the visual experience by making the canvas background automatically detect and respect the system's current light or dark mode theme graphics/drawy MR #380.

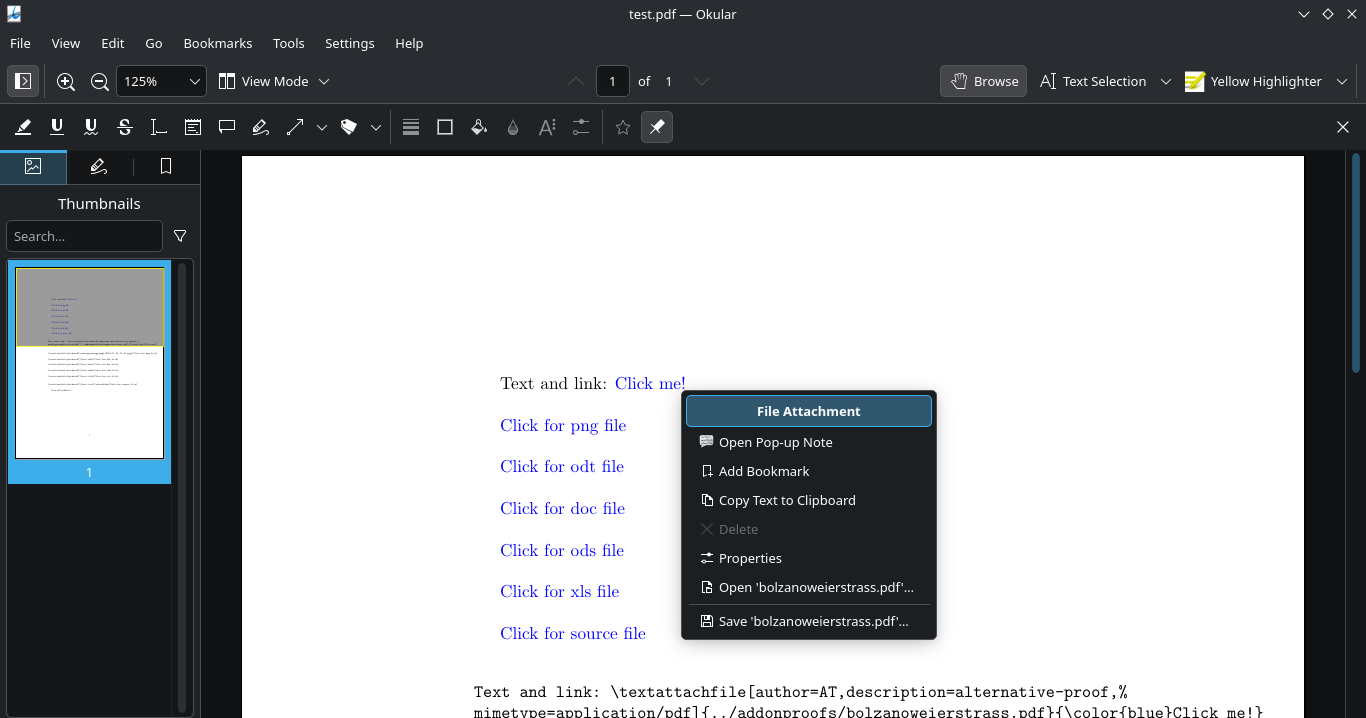

Okular View and annotate documents

Jaimukund Bhan added a setting to open the last viewed page when reopening a document graphics/okular MR #1324.

Ajay Sharma made it possible to open embedded file attachments in Okular graphics/okular MR #1312.

Travel Applications

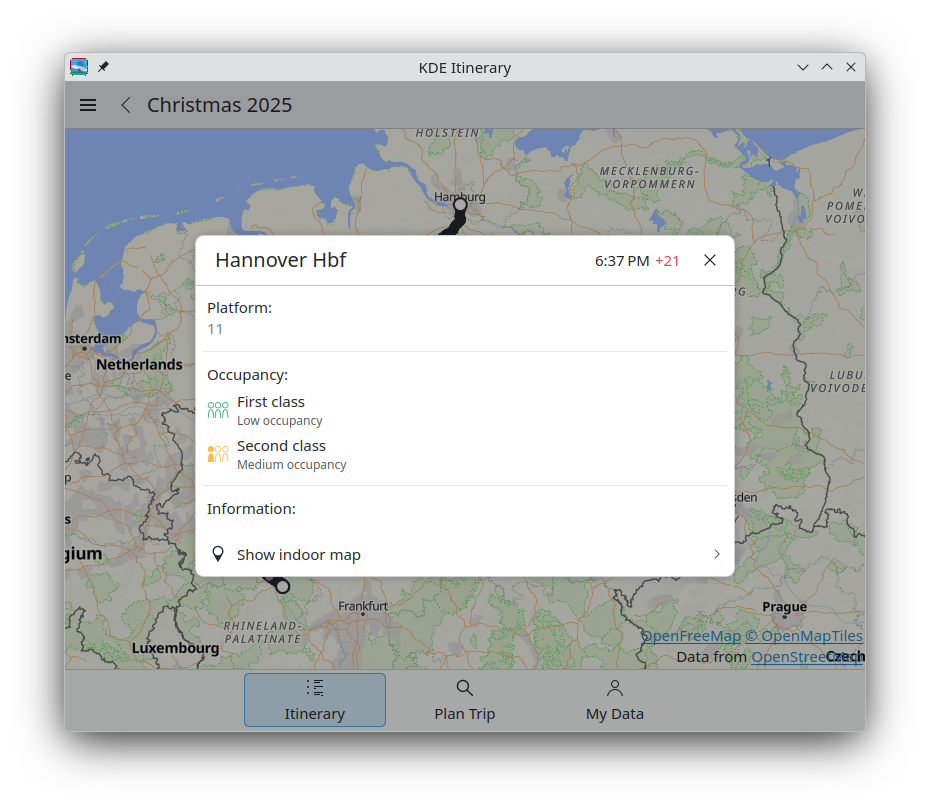

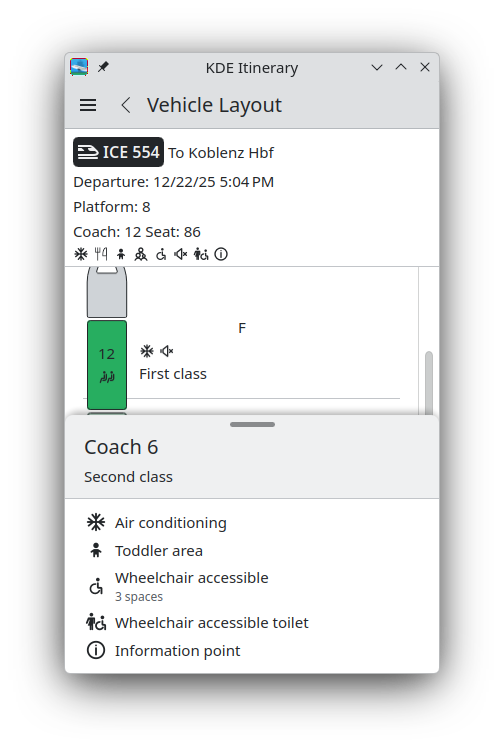

KDE Itinerary Digital travel assistant

Carl Schwan modernized some dialogs to be more convergent using Kirigami Addons' ConvergentContextMenu pim/itinerary MR #413.

As always, there were also improvements in terms of ticket support. Carl Schwan improved support for Hostel World, GetYourGuide, and FRS ferries. Volker Krause improved support for FCM flights and French TER. Tobias Fella added support for Gomus annual tickets.

Volker Krause also posted a blog post about all the other improvements in the Itinerary/Transitous ecosystem.

PIM Applications

Akonadi Background service for KDE PIM apps

We removed support for Kolab. If you are using Kolab with KMail, you will need to reconfigure your account with a normal IMAP/DAV account pim/kdepim-runtime MR #154.

We also switched the default database backend to SQLite for new installations pim/akonadi MR #311.

Merkuro Calendar Manage your tasks and events with speed and ease

Zhora Zmeikin fixed a crash when editing or creating a new incidence (25.12.3 - pim/merkuro MR #608).

Yuki Joou fixed various small issues in Merkuro Calendar (25.12.3 - pim/merkuro MR #610, pim/merkuro MR #611, pim/merkuro MR #609, pim/merkuro MR #579).

Merkuro Mail Read and write emails

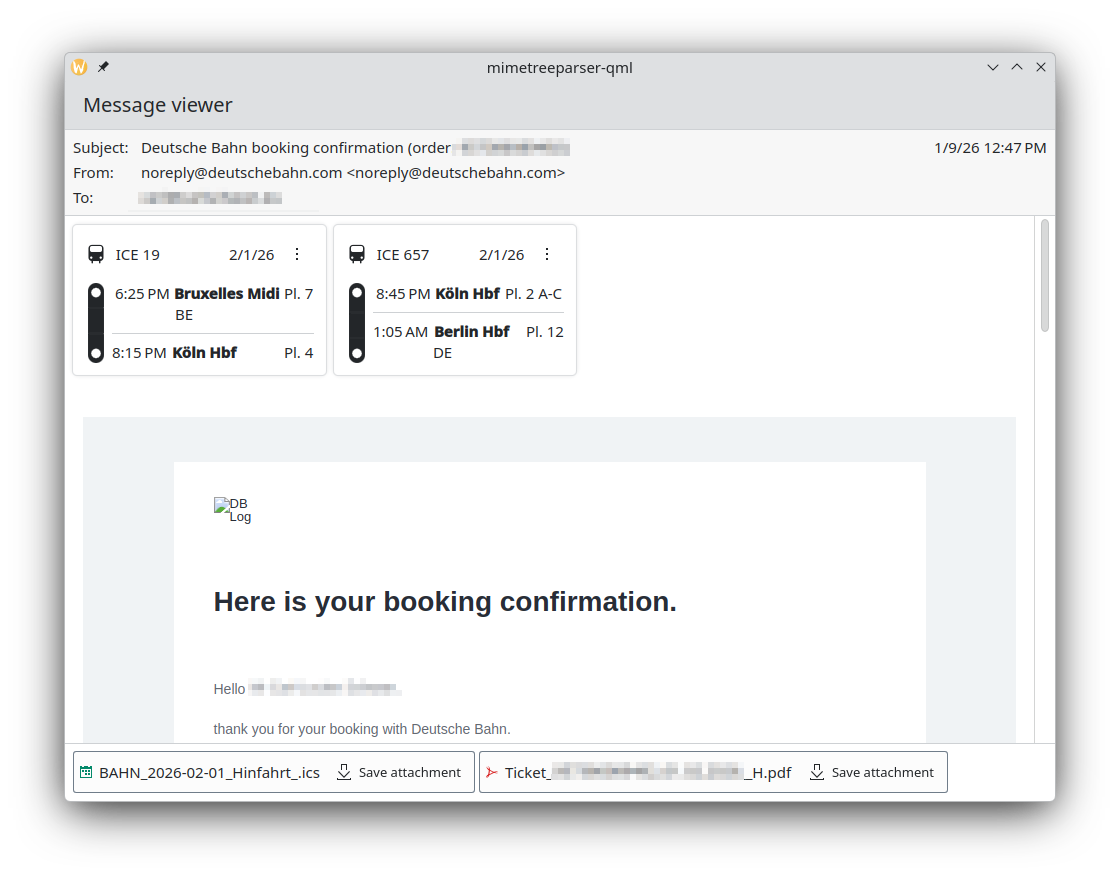

Carl Schwan added basic support for displaying travel reservations in the mail view pim/mimetreeparser MR #90.

Creative Applications

Kdenlive Video editor

Swastik Patel and Jean-Baptiste Mardelle added support for showing animated previews in the transition list (26.04.0 - multimedia/kdenlive MR #816).

Multimedia Applications

Photos Image Gallery

Valentyn Bondarenko added support for the standard zoom-in and zoom-out shortcuts in Photos (26.04.0 - graphics/koko MR #267).

Kasts Podcast application

Bart De Vries refactored the sync engine to be a bit more efficient (26.04.0 - multimedia/kasts MR #315 multimedia/kasts MR #305).

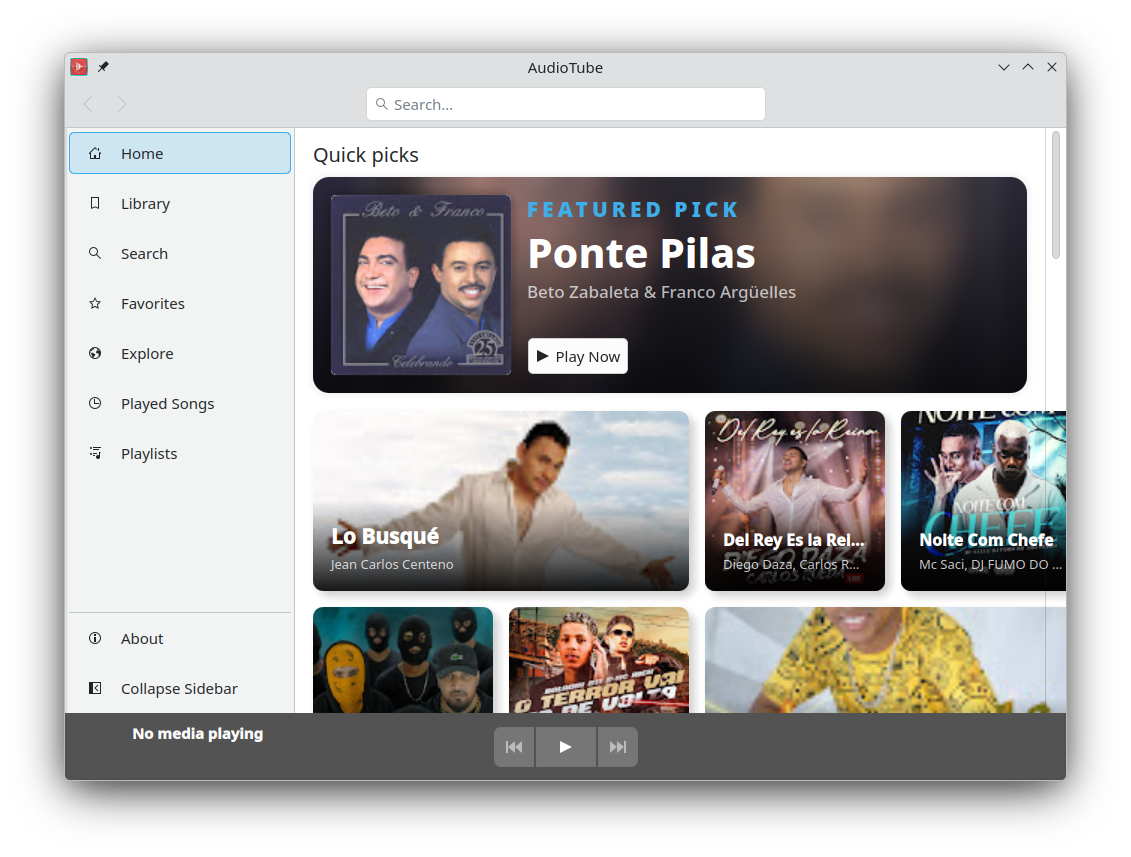

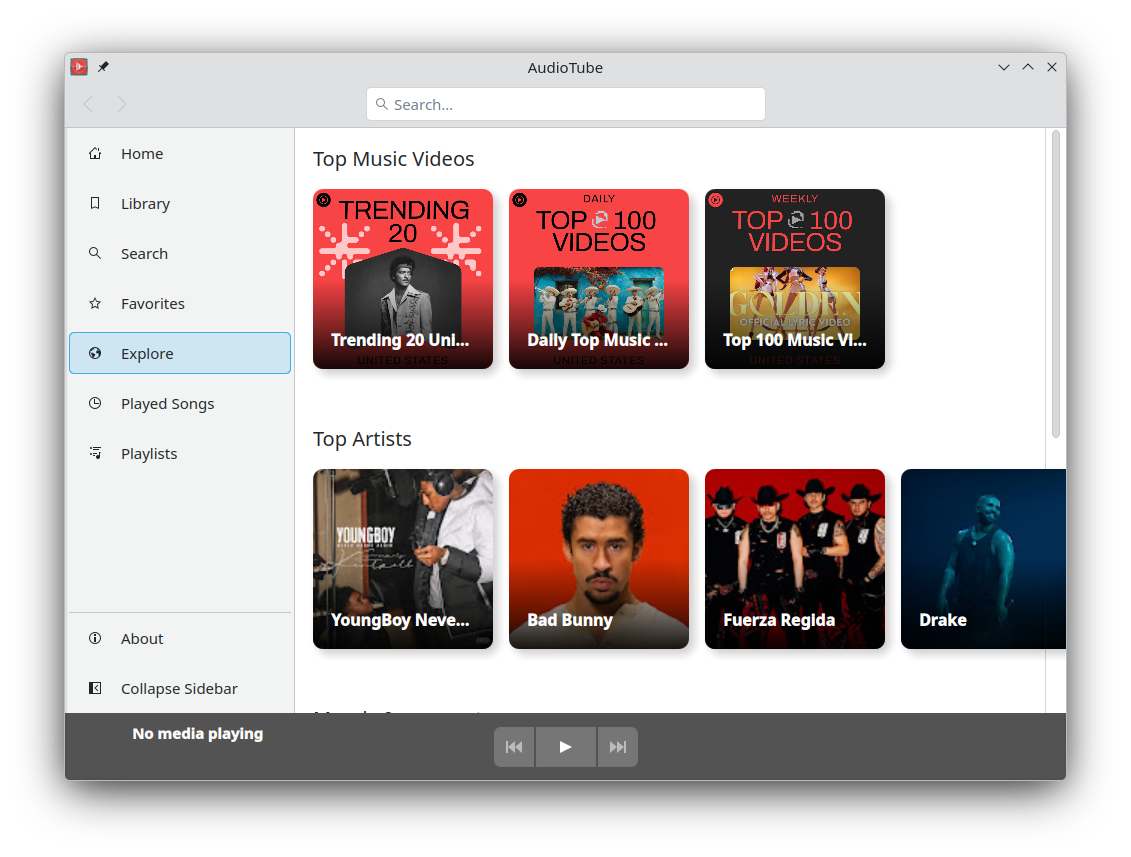

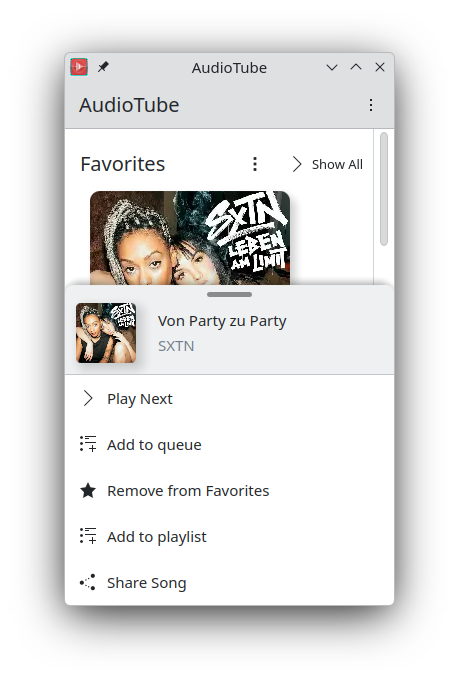

AudioTube YouTube Music app

Carl Schwan added home and explore pages to AudioTube (multimedia/audiotube MR #179) and ported the convergent context menu to the standardized one in Kirigami Addons.

Utilities Applications

Kate Advanced text editor

Leia uwu added a way to clear the search history (26.04.0 - utilities/kate MR #2044).

KomoDo Work on To-Do lists

Akseli Lahtinen released Komodo 1.6.0 utilities/komodo MR #72. This release adds Markdown-style inline links, fixes some parsing issues, and removes the monospace font from tasks.

Clock Keep time and set alarms

Micah Stanley added a lockscreen overlay for the timer utilities/kclock MR #244 and improved the existing one for the alarms utilities/kclock MR #243.

Network Applications

NeoChat Chat on Matrix

James Graham rewrote the text editor of NeoChat to be a powerful rich text editor (network/neochat MR #2488). James also marked threading as ready, and this feature is no longer hidden behind a feature flag (network/neochat MR #2671); improved the avatar settings (network/neochat MR #2727); and, as always, delivered a lot of polishing all around the place.

Joshua Goins improved the messaging around various encryption key options (network/neochat MR #2687).

Tokodon Browse the Fediverse

Aleksander Szczygieł fixed replying to posts with multiple mentions (network/tokodon MR #796).

System Applications

Journald Browser Browser for journald databases

Andreas Cord-Landwehr introduced a common view for system and user unit logs (system/kjournald MR #79).

Supporting libraries

Kirigami Addons 1.12.0

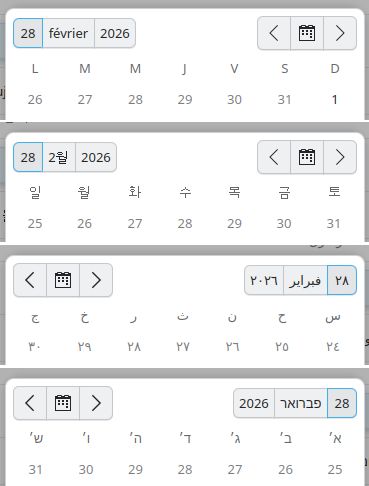

Carl Schwan released Kirigami Addons 1.12.0.

George Florea Bănuș added some missing description and trailing properties to a few of the FormCard delegate components (libraries/kirigami-addons MR #421, libraries/kirigami-addons MR #410). Carl Schwan made the configuration dialog modal (libraries/kirigami-addons MR #419). Hannah Kiekens fixed the templates for KAppTemplate (libraries/kirigami-addons MR #434). Volker Krause made the date and time picker locale-aware and fixed some issues with RTL layouts (libraries/kirigami-addons MR #431).

…And Everything Else

This blog only covers the tip of the iceberg! If you're hungry for more, check out This Week in Plasma, which covers all the work being put into KDE's Plasma desktop environment every Saturday.

For a complete overview of what's going on, visit KDE's Planet, where you can find all KDE news unfiltered directly from our contributors.

Get Involved

The KDE organization has become important in the world, and your time and contributions have helped us get there. As we grow, we're going to need your support for KDE to become sustainable.

You can help KDE by becoming an active community member and getting involved. Each contributor makes a huge difference in KDE - you are not a number or a cog in a machine! You don't have to be a programmer either. There are many things you can do: you can help hunt and confirm bugs, even maybe solve them; contribute designs for wallpapers, web pages, icons and app interfaces; translate messages and menu items into your own language; promote KDE in your local community; and a ton more things.

You can also help us by donating. Any monetary contribution, however small, will help us cover operational costs, salaries, travel expenses for contributors and, in general, keep KDE bringing Free Software to the world.

To get your application mentioned here, please ping us in invent or in Matrix.

02 Mar 2026 12:01pm GMT

Translations in KDE are a lot easier than you think!!

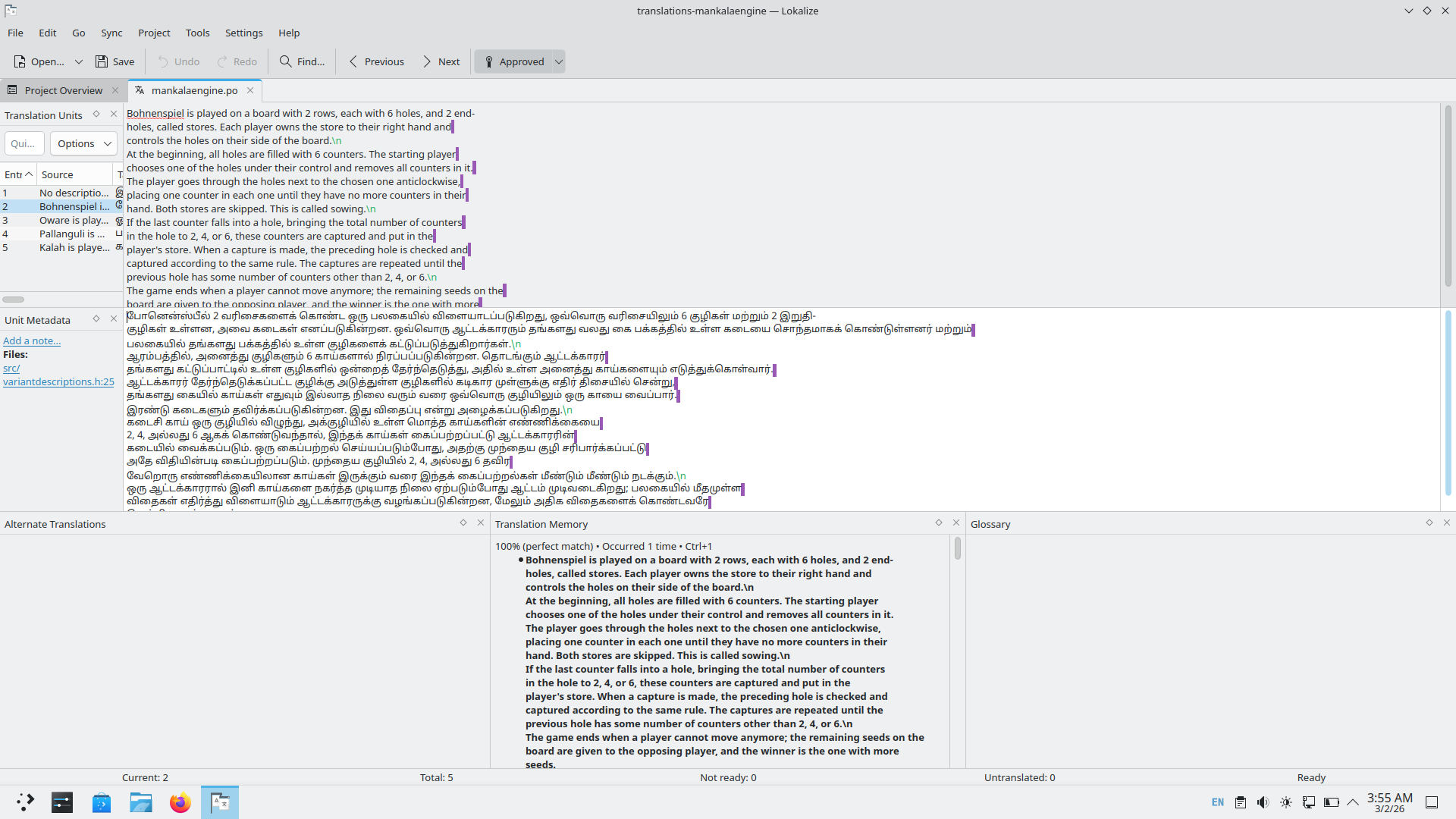

In my 5th week of Season of KDE, I have translated the Mankala Engine into Tamil. Things are a lot easier with KDE's very own translation software called Lokalize.

Installing Lokalize

Here is a guide to get you started with translation in KDE. I installed Lokalize on my Plasma desktop using:

$ sudo apt install lokalize

My project, Mankala Engine, already had translation files under the po folder; I used them as a reference for the strings that were to be translated. If the project being translated doesn't have any prior translation, one can check out the language files and the templates and start the translation from scratch.

svn co svn://anonsvn.kde.org/home/kde/branches/stable/l10n-kf5/{your-language}

svn co svn://anonsvn.kde.org/home/kde/branches/stable/l10n-kf5/templates

I copied the strings from the previous translations of my project and changed the text to my intended language, Tamil. After this, we go to Lokalize and configure the settings.

- Enter your name and email.

- Find your language and note its mailing list.

To set up your project, go to the project folder, select your po files, and edit them in Lokalize.

Creating translation files

At the beginning of each file, we have some metadata giving details about the file, its editor, the dates, and the version. After that come the translation entries: each one has a comment pointing at the source location (#:), followed by the English string (msgid) and then the translated string (msgstr).

English

#: src/variantdescriptions.h:25

#, kde-format

msgid ""

"Bohnenspiel is played on a board with 2 rows, each with 6 holes, and 2 end-"

"holes, called stores. Each player owns the store to their right hand and "

"controls the holes on their side of the board.\n"

Tamil

msgstr ""

"போனென்ஸ்பீல், 2 வரிசைகளைக் கொண்ட பலகையில் விளையாடப்படுகிறது. ஒவ்வொரு வரிசையிலும் 6 குழிகள் "

"மற்றும் 2 இறுதிக்குழிகள் இருக்கும். ஒவ்வொரு ஆட்டக்காரரும் தமது வலதுபுறமுள்ள இறுதிக்குழியைச் சொந்தமாகக் "

"கொண்டுள்ளனர். பலகையில் தமது பக்கத்திலுள்ள குழிகளை அவர்கள் கட்டுப்படுத்துவார்கள்.\n"
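To make the layout of such an entry more concrete, here is a minimal Python sketch that reads one simple PO entry and joins the quoted continuation lines into a single msgid and msgstr. It is only an illustration, not how Lokalize works internally: the `parse_entry` helper is made up for this example, the sample entry is shortened with a placeholder translation, and plural forms or message contexts are not handled.

```python
import ast

def parse_entry(text):
    """Parse one simple PO entry into {'msgid': ..., 'msgstr': ...} (hypothetical helper)."""
    entry = {"msgid": "", "msgstr": ""}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments such as "#:" and "#,"
        for key in ("msgid", "msgstr"):
            if line.startswith(key + " "):
                current = key
                line = line[len(key):].strip()
                break
        if current is None:
            continue
        # What remains is a double-quoted string with C-style escapes like \n
        entry[current] += ast.literal_eval(line)
    return entry

# Shortened sample entry; the msgstr is a placeholder, not a real translation.
SAMPLE = r'''
#: src/variantdescriptions.h:25
#, kde-format
msgid ""
"holes, called stores. Each player owns the store to their right hand and "
"controls the holes on their side of the board.\n"
msgstr ""
"<translated text goes here>\n"
'''

entry = parse_entry(SAMPLE)
print(repr(entry["msgid"]))
print(repr(entry["msgstr"]))
```

The point of the sketch is that the empty string after msgid and msgstr is just the start of the value: the quoted lines that follow are concatenated, so line breaks in the file do not matter, only the explicit \n does.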

After all the files are created, we should send them to the language moderators, or else contact the mailing list of that language and ask for guidance on how these translations can be uploaded. To get involved and stay up to date with the team, one can join the KDE translation Matrix channel:

#kde-i18n:kde.org

References & useful resources

- https://l10n.kde.org/docs/translation-howto/ - KDE Official Translation Guide

- https://raghukamath.com/how-to-translate-krita-to-your-own-language/ - How to translate Krita to your own language?

- https://kisaragi-hiu.com/2025-07-07-kde-translation-workflow-en/ - KDE translation workflow

02 Mar 2026 3:49am GMT

01 Mar 2026

What even are Breeze, QtQuick, QtWidget, Union..?

I was asked a good question: What are these things? What are the differences? I will try to explain what they are in this post, in a slightly less technical manner.

I will keep some of the parts a bit short here, since I am not 100% knowledgeable about everything, and I would rather people read the documentation than rely on my blog post. :) But here are the basics of it.

It's infodump time.

QtWidgets

QtWidgets is the "older" way of writing Qt applications. It's mostly C++ and sometimes quite difficult to work with. It's not very flexible.

More information in here:

QStyle

QStyle is the class for making UI elements that follow the style given for the application. Instead of hardcoding all the styles, we use QStyle methods for writing things. This is what I was talking about in my previous post.

More information in here:

Breeze

Breeze is our current style/theme. It's what defines how things should look. Sometimes when we say "Breeze" in a QtWidgets context, we mean its QStyle, since we do not have another name for it.

Repository: https://invent.kde.org/plasma/breeze/

QtQuick

QtQuick is the modern way of writing Qt applications. In QtQuick, we use QML which is a declarative language for writing the UI components and such. Then we usually have C++ code running the backend for the application, such as handling data.

More information in here:

qqc2-desktop-style

qqc2-desktop-style is the Breeze style for QtQuick applications. It tells QtQuick applications what certain elements should look like.

Repository: https://invent.kde.org/frameworks/qqc2-desktop-style/

Kirigami

Kirigami is a set of shared components and items we can utilize in our QtQuick applications. Instead of rewriting similar items every time for new apps, we use Kirigami for many things. We call them Kirigami applications since we rely on it quite a lot.

Kirigami and qqc2-desktop-style

These two are a bit intermixed. For example, Kirigami provides convenient size units we have agreed on together, such as Kirigami.Units.smallSpacing.

We then use these units in the qqc2-desktop-style, but in other applications as well: Both for basic components that QtQuick provides us which are then styled by qqc2-desktop-style, but also for any custom components one may need to write for an application, if Kirigami does not provide such.

I wish the two weren't so tightly coupled but there's probably a reason for that, that has been decided before my time. (Or it just happened as things tend to go.)

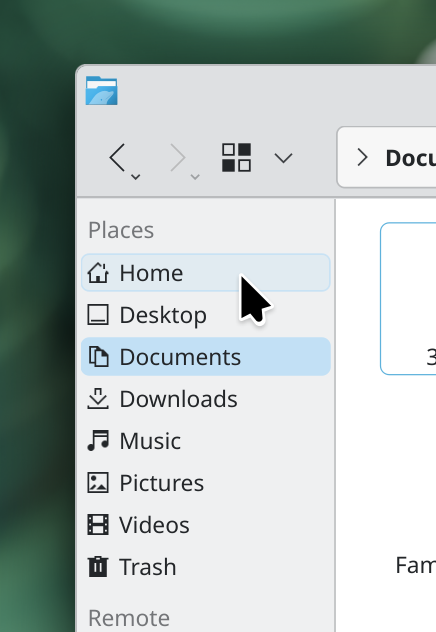

Diagram of the current stack

This is what the current stack looks like.

Problem: Keeping styles in sync

As you can see from the diagram, the styles must be kept in sync by hand. We have to go over each change and somehow sync them.

Not all things have to be kept in sync. This whole thing is rather... primitive. Some parts come from QStyle, some parts are handcrafted, and some metrics and spacings can get out of sync when changed. But for the purposes of a "regular person reading this", they need to be carefully modified by developers to make sure everything looks consistent.

An even bigger problem is that these two (QtWidgets and QtQuick) can behave very differently, causing a lot of inconsistent look and behavior!

But this is why Union was made.

Union

Union is our own "style engine" on top of these two. Instead of having to keep two completely different stacks in sync, we feed Union one single source of truth in the form of CSS files, and it then chews the data out to both QtQuick and QtWidgets apps, making sure they both look as close to each other as possible!

I think it's entirely possible to create other outputs for it too, such as GTK style. Our ideal goal with Union is to have it feed style information even across toolkits eventually, but first we just aim for these two! :)

And yes the CSS style files are completely customizable by users! But note that the CSS is not 1-to-1 something one would use to write for web platforms!

Repository: https://invent.kde.org/plasma/union/

Note about Plasma styles

Plasma styles are their own thing, which are made entirely out of SVG files.

I do not know if Union will have an output for that as well, or whether we will just use the qqc2-desktop-style directly in our Plasma stack (panels, widgets) so we can deprecate the SVG stack. Nothing has been decided on this front yet as far as I know.

Yeah, that's a lot of stuff. Over 20+ years of KDE we have accumulated so many different ways of doing things, so of course things will get out of sync.

Union will be a big step in resolving the inconsistencies and allowing users to easily customize their desktop with CSS files, instead of having to edit two or three different styles for different engines and then having to compile them all and load them over the defaults.

I hope this post helps clear this up a bit. Please see the previous post as well, if you're interested in this work: https://akselmo.dev/posts/breeze-and-union-preparing/

01 Mar 2026 5:19pm GMT

28 Feb 2026

Breeze QtWidgets style changes to help us prepare for Union

We have worked on some spring cleaning for Breeze, which helps us to prepare for Union and the changes it brings. This post is a bit more technical.

Help us test things!

Edit: I made another post that should help explain some of the terminology and such: https://akselmo.dev/posts/what-are-breeze-widgets-quick-union/

If you are running Plasma git master branch, you may have noticed that Breeze has gotten various (small) changes to it.

Note that these changes are NOT in the 6.6 branch, just in the master branch. The current target is Plasma 6.7, but that may change (6.8) if we still have some issues with it! And to clarify, I do not know yet when Union will be released to the wider public. These changes will most likely land before Union.

This all is happening for two reasons:

- Bring Breeze on-par with the current QtQuick styling, which is our current vision for Breeze

- Find out any discrepancies and fix them, to make moving to Union theming more seamless

In more technical terms, this means we have made QStyle::PE_PanelItemViewItem (docs) more round. This primitive is used in a lot of places.

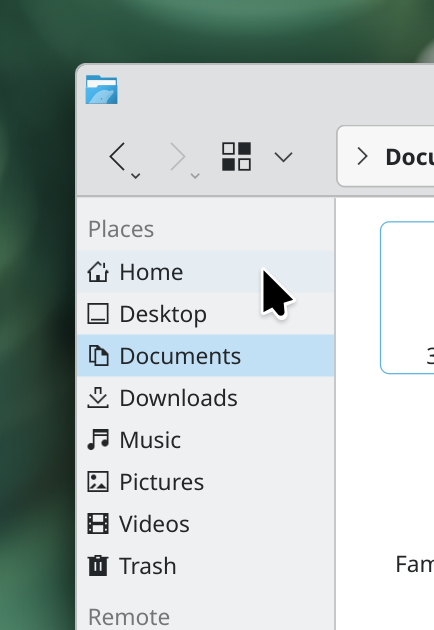

For example, see the background of the places panel element here:

It looks much more like the items in our System Settings for example, since they're rounded too.

However this has not been easy: Due to how QStyle works, we have to add margins to the primitive itself, otherwise it will be touching the edges of the view, making it look bad.

These changes have made us notice a bunch of visual oddities, since we have 20+ years of cruft across our stack, working on top of the Breeze style without taking QStyle into account. People use completely custom solutions instead of relying on QStyle, though the QStyle API is not easy to work with, so I do understand why it can be annoying.

Why does using the QStyle API matter with regard to Union?

This is all fine and good if we just decide to use Breeze always and forever. But we're not! With the Union style engine, people are going to do all kinds of cool things. First, of course, we are just trying to make a 1-to-1 Breeze "copy" with Union, since it's a good target for checking that everything works.

But when people are going to make their cool new themes with Union, our QtWidgets stack will not always follow that, due to all the custom things they're doing! So we need to minimize custom styling within QtWidgets apps and frameworks, and make sure they use QStyle APIs, so that Union can tell them properly what to do.

A more practical example:

- KoolApp directly paints a rectangle with QPainter as a selection background.

- It's a regular rectangle, 90 degree angles.

- Union style engine is released to the public and everyone starts making their own themes

- Someone makes a theme that makes all selection backgrounds a rounded rectangle

- They then open KoolApp and notice nothing changes, since KoolApp draws its selection background on its own.

- Everyone is unhappy. :(

However, when KoolApp is updated to use drawPrimitive like this:

// Remove the old background

//painter->setRenderHint(QPainter::Antialiasing);

//painter->setPen(Qt::NoPen);

//painter->setBrush(color);

//painter->drawRect(rect);

// Use style API instead

...

style()->drawPrimitive(QStyle::PE_PanelItemViewItem, &option, &painter, this);

...

// Then for text, add some spacing at start and end so it does not hug the edges of the primitive

const int margin = style()->pixelMetric(QStyle::PM_LayoutHorizontalSpacing);

// NOTE: you can use QStyle::SE_ItemViewItemText to get the subelement of the text

// NOTE: If you use HTML to draw rich text, you will have to do adjustments manually!

// Because nothing can be ever easy. :(

...

Now the background uses what the QStyle gives it, which in turn is whatever Union has declared for it.

I understand there are cases where someone might want to draw their custom thing, but if at all possible, please use the QStyle API instead! It will make theming much easier in the long run.

Why change now? Why not wait for Union?

It's better to start catching all of this now. It can be tougher to find the bugs with Union styling later on, because it changes much more than just one style: It changes the whole styling engine.

When we tinker with the Breeze theme to find these discrepancies, it's easier to spot whether the problem is in the Breeze theme itself or in the application/framework drawing the items. With Union, the bug can also be in Union itself, so it adds one more layer to the bughunt.

So once Breeze looks fine with apps, we can be sure that any problem that shows up with Union is either a bug in Union or in the style Union is using, instead of having to also hunt down the bug in the application/framework.

Think of it as a gradual rollout of changes. :)

A call for testing

The current Breeze style changes we have now in git master branch are already out there if you're using KDE Linux for example.

What we need is YOU to test out our stack. Test out other people's Qt apps too.

So please check this VDG issue and join there: https://invent.kde.org/teams/vdg/issues/-/issues/118

Read the tasks section, look over what others have shared, share information, etc. Report everything in that issue!

Any help is appreciated, from spotting small errors to fixing them.

I doubt our QtWidgets and QtQuick styles can be 1-to-1 without Union, so do not worry too much about that. At the moment the goal is just to make sure the Breeze QStyle is properly utilized. Perfect is the enemy of good and all that.

I hope this does not cause too much annoyance for our git master branch users, but if you're using master branch, we hope you're helping us test too. :)

Let's make the movement from separate QtWidgets/QtQuick style engines to one unified Union style engine as seamless as possible. And this will help other QtWidgets styles too, such as Fusion, Kvantum, Klassy, etc..!

Happy testing!

28 Feb 2026 3:40pm GMT

OSM Hack Weekend February 2026

Last weekend I attended another OSM Hack Weekend, hosted by Geofabrik in Karlsruhe, focusing on improvements to Transitous and KDE Itinerary.

KDE Itinerary

The Itinerary UI got a bit of polish:

- Better defaults when importing a full trip from a previous export.

- Better defaults when adding an entrance time to an event that doesn't have a start time yet, also preventing invisible seconds from interfering with input validation.

- Support for importing shortened OSM element URLs as well.

A few changes in the infrastructure for querying public transport information aren't reflected in the UI yet:

- Added support for GBFS brand colors.

- Initial work on booking deep-links for journeys.

There were also a bunch of fixes in the date/time entry controls related to right-to-left layouts used by e.g. Arabic or Hebrew (affects all KDE apps using Kirigami Addons).

Transitous

Ride Sharing

We investigated using Amarillo ride sharing data in Transitous, which are available for example in Baden-Württemberg, Germany and South Tyrol, Italy.

As far as Transitous is concerned those are just GTFS/GTFS-RT feeds with a special route type. Felix added a dedicated mode class for ride sharing in MOTIS, so this can also be filtered out, as well as support for passing through a booking deep-link.

So once the next MOTIS release is deployed for Transitous we can add Amarillo feeds as well.

Meta Stations

For cities with multiple equally important main railway stations it can be useful to be able to specify just the city as destination and let the router pick an appropriate station. When choosing your precise destination (which usually isn't the railway station) this already works correctly, but when only looking at the long-distance part of a trip this would fail for places like Paris or London.

One approach to address this is so-called "meta stations": a set of stations that the router considers equivalent destinations, even when they are far apart.

MOTIS v2.8 added support for a custom GTFS extension to specify such meta stations, and we now have infrastructure for Transitous to generate a suitable GTFS feed based on a manually maintained map of corresponding Wikidata items, including translations into a hundred or so languages.

While this works we also identified issues in the current production deployment where the geocoder would rank meta stations so low that they are practically unfindable. Fixes for this have been implemented in MOTIS.
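The idea behind meta stations can be sketched in a few lines of Python. This is only a toy illustration under stated assumptions, not how MOTIS or the GTFS extension actually implement it: the `META_STATIONS` table, the `expand_destination` and `best_arrival` helpers, and the travel times are all made up for this example.

```python
# Hypothetical meta station table: one city-level entry maps to several
# stations that the router should treat as equivalent destinations.
META_STATIONS = {
    "Paris": ["Paris Gare du Nord", "Paris Gare de Lyon", "Paris Montparnasse"],
}

def expand_destination(name):
    """Return all stations that count as 'name'; plain stations map to themselves."""
    return META_STATIONS.get(name, [name])

def best_arrival(destination, arrival_minutes):
    """Pick the equivalent station with the shortest travel time.

    arrival_minutes is a made-up routing result (station name -> minutes);
    stations the router could not reach are simply absent from it.
    """
    candidates = [s for s in expand_destination(destination) if s in arrival_minutes]
    if not candidates:
        return None
    return min(candidates, key=lambda s: arrival_minutes[s])

# Invented travel times for a long-distance trip towards "Paris".
arrivals = {"Paris Gare du Nord": 215, "Paris Gare de Lyon": 190}
print(best_arrival("Paris", arrivals))  # prints "Paris Gare de Lyon"
```

The user only specifies the city, and the choice between the member stations falls out of the routing result instead of having to be made up front.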

SIRI-FM Elevator Data

Holger and Felix implemented the missing bits for finally consuming the DB OpenStation SIRI-FM feed, which provides realtime status information of elevators, something particularly important for wheelchair routing.

The main challenge here is that the SIRI-FM feed only references elevators by an identifier that is described in the DB OpenStation NeTEx dataset, but without being geo-referenced there. So this data had to be mapped to OSM elements first, which is what the router ultimately uses as input.

This also provides some of the foundation to eventually also consume elevator status data from the Swiss SIRI-SX feed.

You can help!

Getting people to work together in the same room for a few days is immensely valuable and productive, there's a great deal of knowledge transfer happening, and it provides a big motivational boost.

However, physical meetings incur costs, and that's where your donations help! KDE e.V. and local OSM chapters like the FOSSGIS e.V. support these activities.

28 Feb 2026 10:00am GMT

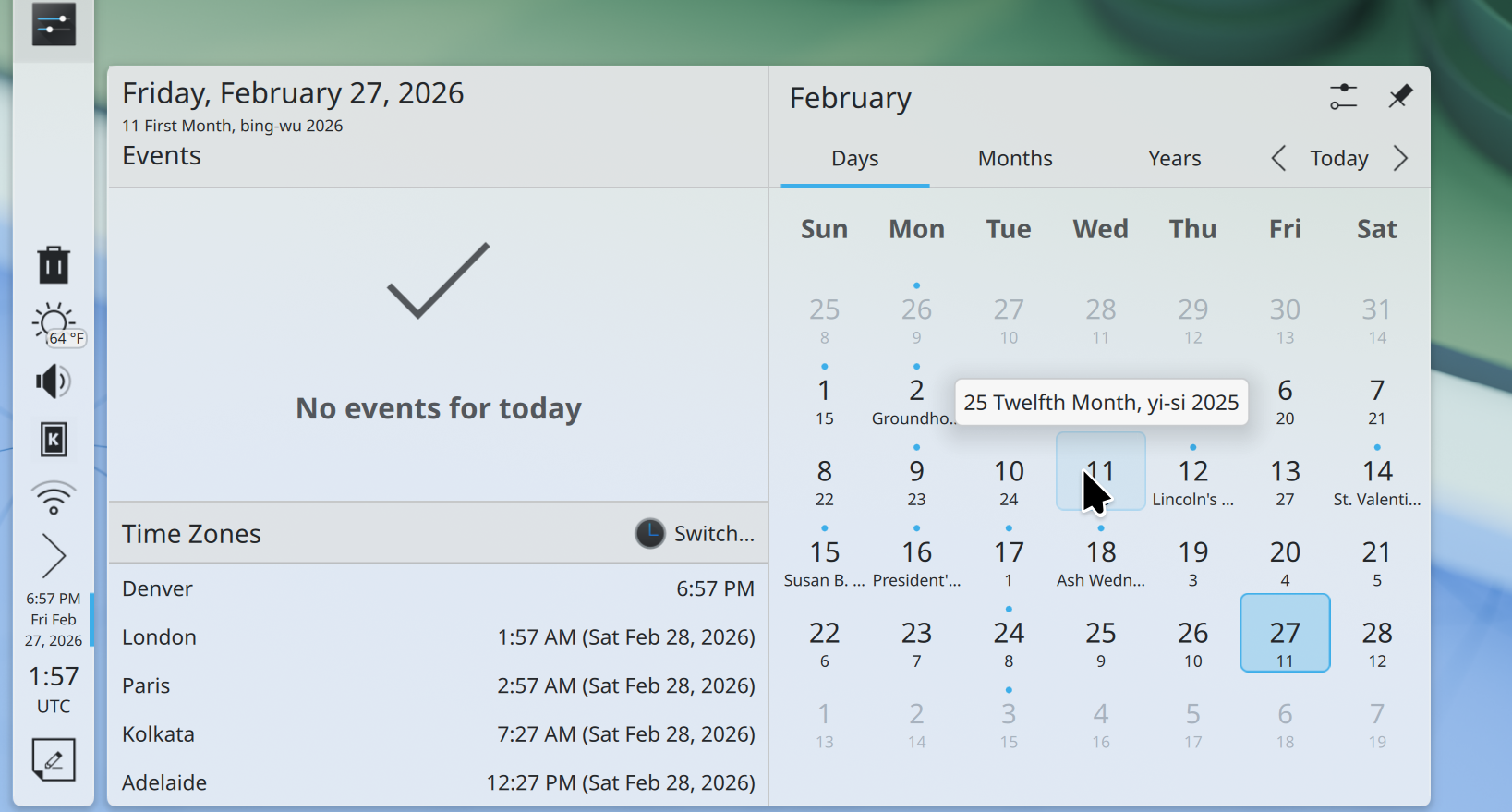

This Week in Plasma: Vietnamese lunar calendar and rounder highlights

Welcome to a new issue of This Week in Plasma!

This week, in addition to the typical post-Plasma-release bug-fix spree, folks started working on UI improvements and features. Two notable examples are highlighted in the title, and found below:

Notable new features

Plasma 6.7

Added the Vietnamese lunar calendar to the list of "alternate calendars" available in the Digital Clock widget. (Trần Nam Tuấn, kdeplasma-addons MR #1015)

Refreshed the OpenVPN settings UI to include loads of new settings offered by the underlying system. (David Edmundson, plasma-nm MR #517)

Notable UI improvements

Plasma 6.6.1

Improved the appearance of the large digits in the clock on the login and lock screens. (Nate Graham, KDE Bugzilla #516314)

Plasma 6.6.2

The "caret tracking" accessibility feature now respects whatever tracking mode you've set in the zoom effect, rather than always using "proportional". (Ritchie Frodomar, KDE Bugzilla #516435)

Changing the "Show virtual [network] connections" setting no longer requires restarting Plasma or the computer to take effect. (Arjen Hiemstra, KDE Bugzilla #516091)

Deleting a widget with a pinned-open popup now closes the popup immediately, rather than after dismissing the "Undo deleting this widget?" notification. (Tobias Fella, KDE Bugzilla #470812)

Plasma 6.7

Rolled out the beginnings of a new rounded style for selection highlights in Breeze-themed QtWidgets-based apps, like Dolphin, Okular, and KMail. This brings them the style we've been using for years for list highlights in QtQuick-based apps and menu items everywhere, and paves the way for all of them to be consistently styled from a central location by the upcoming Union styling system. (Akseli Lahtinen and Marco Martin, breeze MR #583)

Marking an app as a favorite in the Kickoff application launcher widget now flashes the "Favorites" category, giving you a hint that it worked and where the new favorite can be found. (Kai Uwe Broulik, plasma-desktop MR #3565)

On System Settings' Network Connections page, merged the two "Wi-Fi" and "Wi-Fi Security" tabs into one. (Alexander Wilms, plasma-nm MR #491)

Added a button to System Settings' Application Permissions page to revoke all screen-casting sessions immediately, so you don't have to do it one-by-one when there are a lot of them. (Joaquim Monteiro, flatpak-kcm MR #169)

Improved the text and icon shown in notifications sent by Plasma. (Tobias Fella, plasma-workspace MR #6351 and plasma-workspace MR #6353)

Notable bug fixes

Plasma 6.6.1

Fixed a recent regression that broke the "Open containing folder" button for slideshow wallpapers. (Vlad Zahorodnii, KDE Bugzilla #515551)

Fixed a recent regression that made the search field in the System Tray widget's config window lose focus after typing text into it. (Christoph Wolk, KDE Bugzilla #515863)

Fixed a recent regression that made all drawing tablet styli claim to have 3 buttons in System Settings' Drawing Tablet page, no matter how many buttons they actually had. (Kat Pavlu, KDE Bugzilla #516442)

Fixed a recent visual regression that made the dark blurry backgrounds of the logout screen and the Application Dashboard widget look too light. (Nate Graham, KDE Bugzilla #516266)

Fixed a recent visual regression that made the desktop's selection rectangle only follow the system's accent color when using the Breeze Plasma style. Now it once again respects the accent color with all Plasma styles. (Filip Fila, KDE Bugzilla #516498)

Fixed a case where Plasma could crash when dragging System Tray widgets between panels. (Nicolas Fella, KDE Bugzilla #508451)

Fixed a constellation of issues that made certain screens not light up again after waking from sleep. (Xaver Hugl, KDE Bugzilla #515550)

Setting up monitor dimming rules no longer sometimes re-orders the monitors when the system is woken from sleep. (Xaver Hugl, KDE Bugzilla #516611 and KDE Bugzilla #516454)

The Display Configuration widget's "Show when relevant" setting now does something. (Albert Vaca Cintora, kscreen MR #465)

Digits in the Digital Clock widget are now localized as expected for writing systems that don't use Latin digits. (Fushan Wen, KDE Bugzilla #485915)

Fixed an issue that could sometimes make the Bluetooth widget tell you the connection to a device failed when it actually succeeded. (Christoph Wolk, KDE Bugzilla #515189)

Plasma 6.6.2

Fixed a case where Discover could crash while installing updates. (Aleix Pol Gonzalez, discover MR #1268)

Fixed two cases where System Settings' Shortcuts page could crash while searching for certain text or editing custom global shortcuts. (Akseli Lahtinen, KDE Bugzilla #516488 and KDE Bugzilla #516607)

Clearing the clipboard contents now also clears passwords and other secrets marked with special "hide me from the history" metadata that were copied but not actually added to the history. (Tobias Fella, KDE Bugzilla #516403)

Fixed an issue that made the desktop not notice when files were deleted using Dolphin. (Akseli Lahtinen, KDE Bugzilla #516559)

Fixed an issue with the Global Menu widget's compact button form that made the menu not always appear on click. (Christoph Wolk, KDE Bugzilla #516207)

Frameworks 6.24

The System Tray icon for KDE's Kleopatra cryptography app now re-colors itself properly when using a non-default color scheme. (Oleg Kosmakov, breeze-icons MR #529)

Notable in performance & technical

Plasma 6.6.2

Improved support for mice with high-resolution scroll wheels in the built-in remote desktop (RDP) server. (reali es, KDE Bugzilla #511029)

How you can help

KDE has become important in the world, and your time and contributions have helped us get there. As we grow, we need your support to keep KDE sustainable.

Help is needed to build a team around putting together this report, and keep it weekly! It's a lot of work, and it doesn't happen by accident. If you'd like to help, introduce yourself in the Matrix room and join the team.

Beyond that, you can help KDE by directly getting involved in any other projects. Donating time is actually more impactful than donating money. Each contributor makes a huge difference in KDE - you are not a number or a cog in a machine! You don't have to be a programmer, either; many other opportunities exist.

You can also help out by making a donation! This helps cover operational costs, salaries, travel expenses for contributors, and in general just keeps KDE bringing Free Software to the world.

To get a new Plasma feature or a bug fix mentioned here

Push a commit to the relevant merge request on invent.kde.org.

28 Feb 2026 2:12am GMT

27 Feb 2026

Web Review, Week 2026-09

Let's go for my web review for the week 2026-09.

Easily Replaceable USB-C Port Spawned By EU Laws

Tags: tech, usb, repair

Since these ports are becoming more and more pervasive, it's nice to see a replaceable and repairable option on the market.

https://hackaday.com/2026/02/26/easily-replaceable-usb-c-port-spawned-by-eu-laws/

On Alliances

Tags: politics, ethics, culture

The previous piece about the disagreement with Cory Doctorow was a good one even though I didn't put it in my review. This one is more important though! It's a necessary reminder that we can't put allies on a pedestal and then scream at them making mistakes or having different opinions. We can't afford this kind of purity culture… Especially right now.

https://tante.cc/2026/02/20/on-alliances/

The Slow Death of the Power User

Tags: tech, foss, hacking, culture, business, surveillance, vendor-lockin, knowledge

Clearly the author is angry and he has every right to be. By closing platforms and fighting against tinkering, the big tech companies try to kill off the power user and hacker cultures. By letting this happen we all lose as a society.

https://fireborn.mataroa.blog/blog/the-slow-death-of-the-power-user/

Velocity Is the New Authority. Here's Why

Tags: tech, information, attention-economy, culture, journalism

Interesting food for thought about the information ecosystem we live in. It's been distorted by the constant stream of content, so it's very hard to find the good journalism within the noise.

https://om.co/2026/01/21/velocity-is-the-new-authority-heres-why/

I Verified My LinkedIn Identity. Here's What I Actually Handed Over

Tags: tech, linkedin, social-media, surveillance

Could it get more intrusive than this? It's really handing over sensitive data to shady companies…

https://thelocalstack.eu/posts/linkedin-identity-verification-privacy/

I hacked ChatGPT and Google's AI - and it only took 20 minutes

Tags: tech, ai, machine-learning, gpt, knowledge, security, trust

One more example that it should be used for NLP tasks, not knowledge related tasks. The model makers are consuming so much data indiscriminately that they can't easily go through everything with a fine-tooth comb to remove the poisoned information.

Facebook is absolutely cooked

Tags: tech, gafam, facebook, attention-economy, ai

If you're wondering the kind of dumpster fire Facebook is now, that gives an idea. It was crap all along for sure, but clearly they crossed another threshold.

https://pilk.website/3/facebook-is-absolutely-cooked

Child's Play - Tech's new generation and the end of thinking

Tags: tech, culture, business

It feels like staring into the abyss… rather sad I'd say.

https://harpers.org/archive/2026/03/childs-play-sam-kriss-ai-startup-roy-lee/

Vulnerability as a Service

Tags: tech, ai, machine-learning, gpt, security

The OpenClaw instances running around are really a security hazard…

https://herman.bearblog.dev/vulnerability-as-a-service/

Reviewing "How AI Impacts Skill Formation"

Tags: tech, ai, machine-learning, gpt, science, research

I was so waiting for someone motivated enough to publish a review of that paper. I indeed threw it away as weak after reading it. Thanks for taking the time to write this up! This is good scientific inquiry… and it shows there were interesting findings in the paper that the authors decided to just ignore.

https://jenniferplusplus.com/reviewing-how-ai-impacts-skill-formation/

The path to ubiquitous AI

Tags: tech, ai, machine-learning, gpt, hardware, performance, power

Still a bit mysterious but could be interesting if they really deliver.

https://taalas.com/the-path-to-ubiquitous-ai/

The power play behind Hyperion

Tags: tech, gafam, facebook, ai, machine-learning, gpt, politics, business, economics, ecology

This planned giant data center by Meta shows how the big players are grabbing land to satisfy their hubris. So much waste all around.

https://sherwood.news/tech/hyperion/

Too many satellites? Earth's orbit is on track for a catastrophe - but we can stop it

Tags: tech, geospatial, law, politics

There's clearly a regulation gap for satellites. We've been putting way too many of them in orbit over the past decade and the pace is only going to accelerate. This jeopardizes the night sky, astronomy and the possibility of space exploration. Clearly we're making the wrong choices here.

Cosmologically Unique IDs

Tags: tech, uuid, physics, mathematics, funny

Really fun thought experiment. What if we need truly unique IDs at universe scale? Several options are explored.

https://jasonfantl.com/posts/Universal-Unique-IDs/

Making WebAssembly a first-class language on the Web

Tags: tech, web, standard, webassembly

There is indeed a path for better support for WebAssembly on the Web platform. Let's just hope it doesn't take a decade to get there.

https://hacks.mozilla.org/2026/02/making-webassembly-a-first-class-language-on-the-web/

Cleaning up merged git branches: a one-liner from the CIA's leaked dev docs

Tags: tech, git, version-control, tools

Nice little git trick. We can all thank the CIA I guess?

brat: Brutal Runner for Automated Tests

Tags: tech, unix, posix, shell, tests, tools

Interesting shell based test framework targeting pure POSIX. This makes it fairly portable. It feels a bit raw but there are a few interesting ideas in there.

https://codeberg.org/sstephenson/brat

codespelunker - CLI code search tool that understands code structure

Tags: tech, command-line, tools, programming, search

Looks like a good tool when you need to search for stuff in codebases.

sandbox-exec: macOS's Little-Known Command-Line Sandboxing Tool

Tags: tech, security, sandbox, apple

Looks like a neat little tool in the Mac ecosystem. It seems to make sandboxing easy despite a couple of caveats.

https://igorstechnoclub.com/sandbox-exec/

Lyte2D

Tags: tech, game, lua

Looks like a neat little lua based game engine for simple 2D.

Ordered Dithering with Arbitrary or Irregular Colour Palettes

Tags: tech, colors, graphics

There's something I find fascinating about dithering somehow. Here are more algorithms and approaches to compare side by side.

https://matejlou.blog/2023/12/06/ordered-dithering-for-arbitrary-or-irregular-palettes/

Django ORM Standalone: Querying an existing database

Tags: tech, django, orm, databases

Interesting first article, I wonder what the rest of the series will have in store. In any case this shows how practical it is to use the Django ORM standalone. This opens the door to nice use cases.

https://www.paulox.net/2026/02/20/django-orm-standalone-database-inspectdb-query/

Parse, don't Validate and Type-Driven Design in Rust

Tags: tech, rust, reliability, failure, type-systems

Short explanation of why you want to make invalid state impossible to represent. This leads to nice properties in your code, the price to pay is introducing more types to encode the invariants of course.

https://www.harudagondi.space/blog/parse-dont-validate-and-type-driven-design-in-rust/
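
To make the idea concrete, here is a minimal sketch of my own, not taken from the article. The names `NonEmptyName`, `NameError` and `greet` are hypothetical and only there for illustration. The raw input is parsed once at the boundary, and everything downstream takes the parsed type, so the "non-empty" invariant never needs to be re-checked.

```rust
/// Hypothetical example type: a name guaranteed to be non-empty.
/// The inner field is private, so outside this module the only way
/// to obtain a value is through `parse`.
#[derive(Debug, Clone, PartialEq, Eq)]
pub struct NonEmptyName(String);

/// Hypothetical error type for rejected input.
#[derive(Debug, PartialEq, Eq)]
pub enum NameError {
    Empty,
}

impl NonEmptyName {
    /// Parse raw input once, at the boundary of the program.
    /// Leading and trailing whitespace is trimmed before the check.
    pub fn parse(raw: &str) -> Result<Self, NameError> {
        let trimmed = raw.trim();
        if trimmed.is_empty() {
            Err(NameError::Empty)
        } else {
            Ok(NonEmptyName(trimmed.to_string()))
        }
    }

    pub fn as_str(&self) -> &str {
        &self.0
    }
}

/// Accepts the parsed type, so there is no empty-name case to handle here.
pub fn greet(name: &NonEmptyName) -> String {
    format!("Hello, {}!", name.as_str())
}
```

Calling `NonEmptyName::parse("   ")` yields `Err(NameError::Empty)`, while a successfully parsed value can be passed to `greet` without any further validation. The extra type is the price mentioned above.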

Dictionary of Algorithms and Data Structures

Tags: tech, algorithm, data

An interesting resource, good way to match problems to algorithms and data structures.

SFQ: Simple, Stateless, Stochastic Fairness

Tags: tech, services, distributed, queuing, performance

Interesting approach to provide more fairness to client requests.

https://brooker.co.za/blog/2026/02/25/sfq.html

Read Locks Are Not Your Friends

Tags: tech, multithreading, performance

A good reminder that on modern hardware read-write locks are rarely the solution, despite what the documentation claims.

https://eventual-consistency.vercel.app/posts/write-locks-faster

On the question of debt

Tags: tech, technical-debt, organisation, ai, machine-learning, copilot

Interesting point, there are indeed different types of "debt" in the systems we build. It likely helps to be more precise about their nature, and indeed assisted coding might help grow a particular kind of debt.

https://medium.com/mapai/on-the-question-of-debt-aca1125d4a62

The Man Who Stole Infinity

Tags: science, mathematics, history

Fascinating story about Cantor's little-known big mistake. This also shows once more that even though we like to put people on pedestals and look for a "lone genius" or a "hero", discoveries are always a process of several minds playing off each other.

https://www.quantamagazine.org/the-man-who-stole-infinity-20260225/

How far back in time can you understand English?

Tags: linguistics, history

This is an excellent piece if you like linguistics and its historical component. It shows quite well how much English changed over the centuries.

https://www.deadlanguagesociety.com/p/how-far-back-in-time-understand-english

We need to talk about naked mole rats

Tags: science, biology, nature, funny

Yes we do need to talk more about them. They are ugly… but they are awesome! (in a scary way)

https://theoatmeal.com/comics/naked_mole_rats

Bye for now!

27 Feb 2026 12:41pm GMT